We are excited to share that several new vector quantization capabilities are now available in public preview in MongoDB Atlas Vector Search: support for binary quantized vector ingestion, automatic scalar quantization, and automatic binary quantization and rescoring.

Together with our recently released support for scalar quantized vector ingestion, these capabilities will empower developers to scale semantic search and generative AI applications more cost-effectively. For a primer on vector quantization, check out our previous blog post.

Enhanced developer experience with native quantization in Atlas Vector Search

Scalar and binary quantization can now be applied automatically in Atlas Vector Search. This makes it easier and more cost-effective for developers to use Atlas Vector Search to unlock a wide range of applications, particularly those requiring over a million vectors.

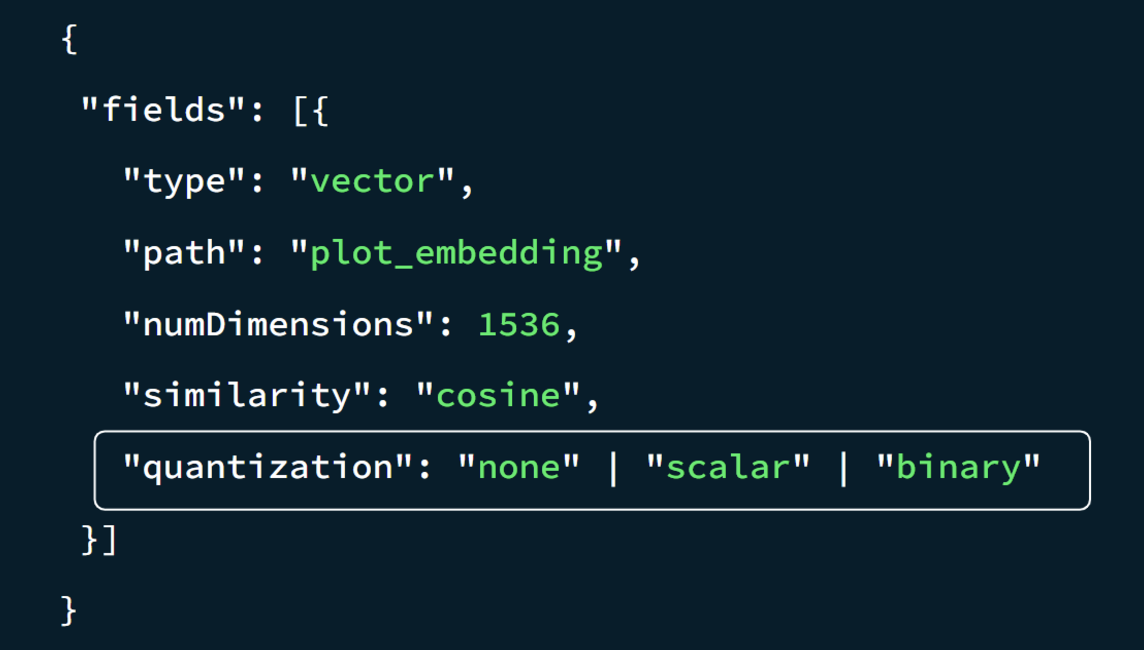

With the new “quantization” index definition parameter, developers can choose to use full-fidelity vectors by specifying “none,” or they can quantize vector embeddings by specifying the desired quantization type, “scalar” or “binary” (Figure 1). This native quantization capability supports vector embeddings from any model provider as well as MongoDB’s BinData float32 vector subtype.
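As a rough illustration, an index definition using this parameter might be assembled in Python as shown below. The helper name `build_vector_index_definition`, the field path `embedding`, and the dimension count of 1536 are assumptions made for this sketch; only the three `quantization` values come from the text above.

```python
# Hypothetical helper: builds a vector search index definition as a plain dict.
ALLOWED_QUANTIZATION = {"none", "scalar", "binary"}


def build_vector_index_definition(path, num_dimensions,
                                  similarity="cosine", quantization="none"):
    """Return an index definition with one vector field."""
    if quantization not in ALLOWED_QUANTIZATION:
        raise ValueError(
            f"quantization must be one of {sorted(ALLOWED_QUANTIZATION)}"
        )
    return {
        "fields": [
            {
                "type": "vector",
                "path": path,                    # assumed field name
                "numDimensions": num_dimensions,  # depends on the embedding model
                "similarity": similarity,
                "quantization": quantization,    # "none", "scalar", or "binary"
            }
        ]
    }


definition = build_vector_index_definition("embedding", 1536, quantization="scalar")
print(definition)
```

With PyMongo, a definition like this would typically be wrapped in a `SearchIndexModel` with `type="vectorSearch"` and passed to `collection.create_search_index(...)`; that step needs a live Atlas cluster, so it is left out here.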


Scalar quantization, which converts each floating-point value into an integer, is generally used when it’s crucial to maintain search accuracy on par with full-precision vectors. Meanwhile, binary quantization, which converts each floating-point value into a single bit (0 or 1), is more suitable for scenarios where storage and memory efficiency are paramount and a slight reduction in search accuracy is acceptable. If you’re interested in learning more about this process, check out our documentation.
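To make the two conversions concrete, here is a minimal, generic sketch in plain Python. It is not Atlas Vector Search's internal implementation: the value range, the one-byte integer width, and the zero threshold are all assumptions chosen for illustration.

```python
def scalar_quantize(vector, lo, hi):
    """Map each float in [lo, hi] to an integer in [0, 255] (one byte).

    lo and hi are assumed range bounds; values outside them are clamped.
    """
    scale = 255.0 / (hi - lo)
    quantized = []
    for x in vector:
        clamped = min(max(x, lo), hi)
        quantized.append(round((clamped - lo) * scale))
    return quantized


def binary_quantize(vector, threshold=0.0):
    """Map each float to a single bit: 1 if above the threshold, else 0."""
    return [1 if x > threshold else 0 for x in vector]


embedding = [-1.0, -0.25, 0.1, 0.5, 1.0]
print(scalar_quantize(embedding, lo=-1.0, hi=1.0))
print(binary_quantize(embedding))
```

The scalar version keeps 256 distinct levels per dimension, while the binary version keeps only the sign, which is why it saves more memory but loses more information.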

Binary quantization with rescoring: Balance cost and accuracy

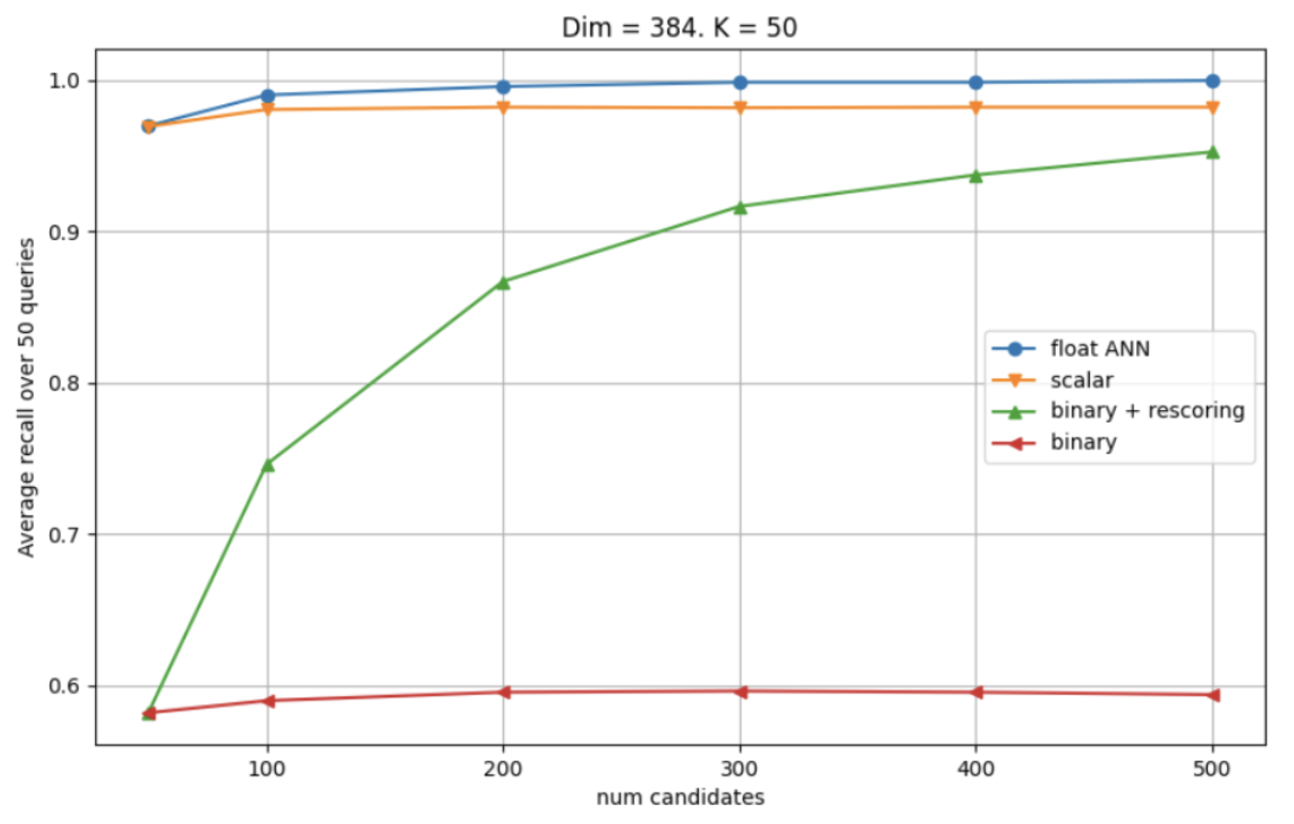

Compared to scalar quantization, binary quantization further reduces memory usage, leading to lower costs and improved scalability, but it also reduces search accuracy. To mitigate this, when “binary” is chosen in the “quantization” index parameter, Atlas Vector Search adds an automatic rescoring step. Rescoring re-ranks a subset of the top binary vector search results using their full-precision counterparts, so that the final search results remain highly accurate despite the initial vector compression.
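The two-stage idea can be sketched in a few lines of Python. This is a generic illustration under stated assumptions, not the Atlas implementation: it uses a brute-force Hamming-distance pass over sign bits as the first stage and cosine similarity as the rescoring metric, and the function names are hypothetical.

```python
import math


def to_bits(vector):
    """Binary-quantize by sign (assumed threshold of zero)."""
    return [1 if x > 0 else 0 for x in vector]


def hamming_distance(bits_a, bits_b):
    """Count positions where the two bit vectors differ."""
    return sum(a != b for a, b in zip(bits_a, bits_b))


def cosine_similarity(u, v):
    """Full-precision similarity; assumes neither vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms


def search_with_rescoring(query, vectors, num_candidates, limit):
    """Return indices of the top `limit` vectors after rescoring."""
    query_bits = to_bits(query)
    # Stage 1: cheap candidate selection on the binary codes.
    candidates = sorted(
        range(len(vectors)),
        key=lambda i: hamming_distance(query_bits, to_bits(vectors[i])),
    )[:num_candidates]
    # Stage 2: re-rank only those candidates with the full-precision vectors.
    rescored = sorted(
        candidates,
        key=lambda i: cosine_similarity(query, vectors[i]),
        reverse=True,
    )
    return rescored[:limit]


vectors = [[0.9, 0.1], [0.1, 0.9], [-0.5, -0.5], [0.6, 0.4]]
query = [1.0, 0.2]
print(search_with_rescoring(query, vectors, num_candidates=3, limit=2))
```

In this toy data, three of the four vectors share the same sign bits as the query, so the binary stage alone cannot order them; the full-precision pass is what separates the close match from the distant one.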

Empirical evidence demonstrates that incorporating a rescoring step when working with binary quantized vectors can dramatically enhance search accuracy, as shown in Figure 2 below.

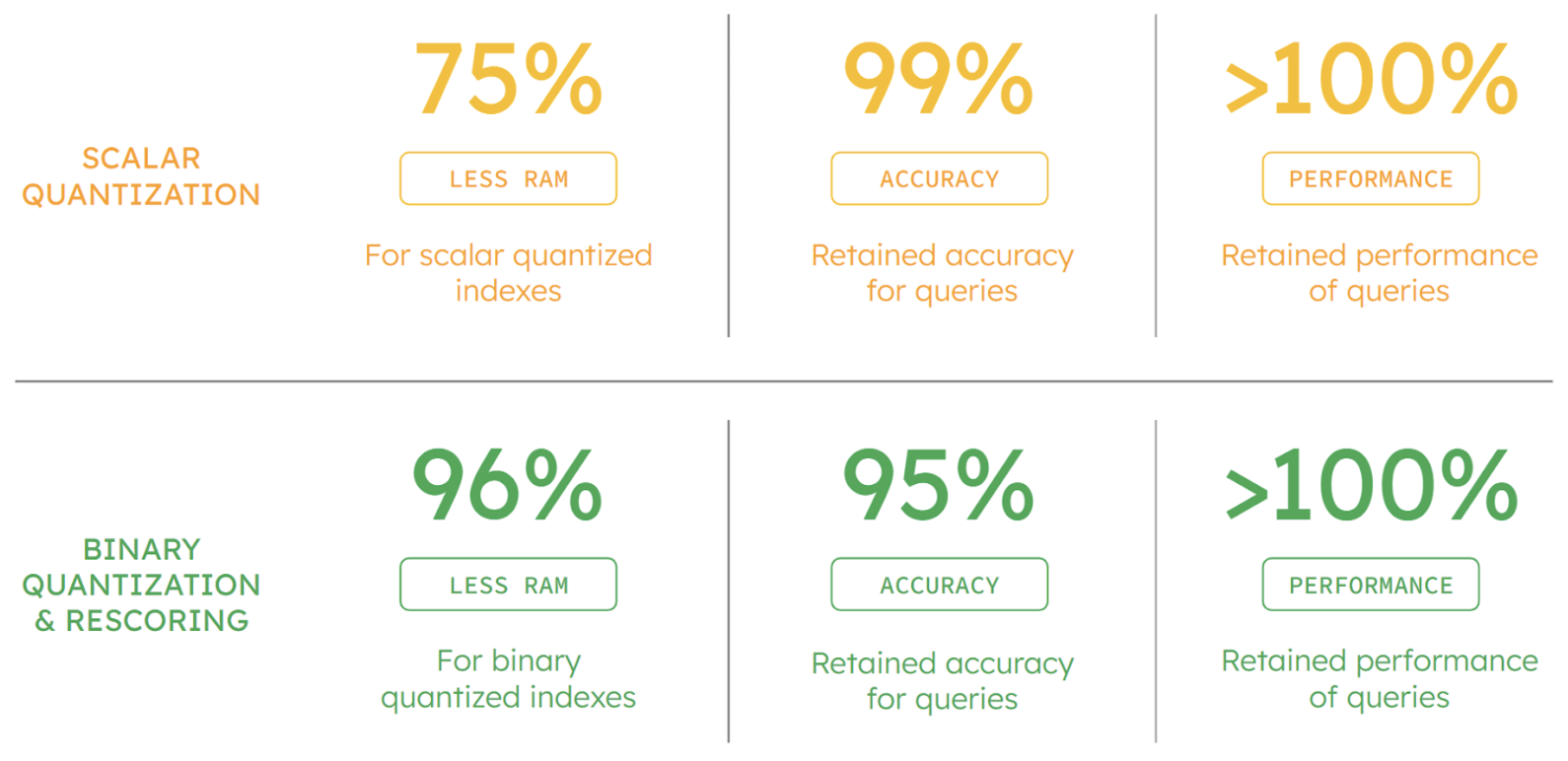

And as Figure 3 shows, in our tests, binary quantization reduced the processing memory requirement by 96% while retaining up to 95% search accuracy and improving query performance.
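For intuition about where savings of this size come from, a back-of-the-envelope calculation of raw vector payload is sketched below. The vector count, the dimension count, and the 8-bit width for scalar quantization are assumptions. The calculation ignores index overhead, so it is not a derivation of the measured 96% figure.

```python
def vector_payload_bytes(num_vectors, num_dimensions, bits_per_dimension):
    """Raw vector storage only; ignores any index or graph overhead."""
    return num_vectors * num_dimensions * bits_per_dimension // 8


def reduction_vs_float32(bits_per_dimension):
    """Fraction of payload saved relative to 32-bit floats."""
    return 1 - bits_per_dimension / 32


NUM_VECTORS = 1_000_000   # assumed collection size
NUM_DIMENSIONS = 1024     # assumed embedding width

for label, bits in [("float32", 32), ("scalar (8-bit)", 8), ("binary", 1)]:
    size_mb = vector_payload_bytes(NUM_VECTORS, NUM_DIMENSIONS, bits) / 1_000_000
    saved = reduction_vs_float32(bits)
    print(f"{label:>14}: {size_mb:8.0f} MB ({saved:.1%} smaller than float32)")
```

Going from 32 bits to 1 bit per dimension shrinks the payload by a factor of 32, which is the same order of magnitude as the reduction reported in the tests above.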

It’s worth noting that even though the quantized vectors are used for indexing and search, the full-fidelity vectors are still stored on disk to support rescoring. Retaining the full-fidelity vectors also lets developers run exact vector search for experimental, high-precision use cases, such as evaluating the search accuracy of quantized vectors produced by different embedding model providers. For more on evaluating the accuracy of quantized vectors, please see our documentation.
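One way to approach such an evaluation is to run the same query as an approximate search and as an exact search, then measure how much the two result sets overlap. The sketch below only builds the two `$vectorSearch` stages and computes the overlap; the index name, field path, and helper names are assumptions, and actually running the pipelines requires an Atlas cluster.

```python
def vector_search_stage(index, path, query_vector, limit,
                        num_candidates=None, exact=False):
    """Build a $vectorSearch stage for approximate or exact search."""
    stage = {
        "index": index,
        "path": path,
        "queryVector": query_vector,
        "limit": limit,
    }
    if exact:
        # Exact search scans full-fidelity vectors; no candidate count is used.
        stage["exact"] = True
    else:
        if num_candidates is None:
            raise ValueError("num_candidates is required for approximate search")
        stage["numCandidates"] = num_candidates
    return {"$vectorSearch": stage}


def recall(approx_ids, exact_ids):
    """Fraction of the exact results that the approximate search also returned."""
    exact_set = set(exact_ids)
    return len(exact_set.intersection(approx_ids)) / len(exact_set)


query = [0.1, 0.2, 0.3]  # placeholder query embedding
ann = vector_search_stage("vector_index", "embedding", query, 10, num_candidates=100)
enn = vector_search_stage("vector_index", "embedding", query, 10, exact=True)
print(ann)
print(enn)
print(recall(["a", "b", "c", "x"], ["a", "b", "c", "d"]))
```

Each stage would be passed as the first element of an aggregation pipeline, and the returned document IDs fed into `recall` to compare the quantized index against the exact baseline.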

So how can developers make the most of vector quantization? Here are some example use cases that can be made more efficient and scaled effectively with quantized vectors:

- Massive knowledge bases can be used efficiently and cost-effectively for analysis and insight-oriented use cases, such as content summarization and sentiment analysis. Unstructured data like customer reviews, articles, audio, and videos can be processed and analyzed at a much larger scale, at a lower cost and faster speed.

- Using quantized vectors can enhance the performance of retrieval-augmented generation (RAG) applications. The efficient processing can support query performance from large knowledge bases, and the cost-effectiveness advantage can enable a more scalable, robust RAG system, which can result in better customer and employee experience.

- Developers can easily A/B test different embedding models using multiple vectors produced from the same source field during prototyping. MongoDB’s flexible document model lets developers quickly deploy and compare embedding models’ results without the need to rebuild the index or provision an entirely new data model or set of infrastructure.

- The relevance of search results or context for large language models (LLMs) can be improved by incorporating larger volumes of vectors from multiple sources of relevance, such as different source fields (product descriptions, product images, etc.) embedded within the same or different models.

To get started with vector quantization in Atlas Vector Search, see the following developer resources:
