Organizations are increasingly using generative AI and machine learning (ML) tools to create value from large volumes of unstructured data, such as text, images, and audio files. One technique to efficiently search over unstructured data is to use a specialized machine learning model to transform the source data (e.g. text) into a vector, making it possible to efficiently search through this data. With the rise of natural language processing (NLP), computer vision, and Retrieval Augmented Generation (RAG), searching over vectors has become an essential tool for organizations looking to unlock the full potential of their data.

Amazon Aurora PostgreSQL-Compatible Edition with the open source pgvector vector search extension offers a powerful and scalable solution for building a vector store. Aurora lets developers leverage the extensibility of PostgreSQL and allows them to seamlessly incorporate vector search capabilities into their generative AI applications.

Many organizations want to bring generative AI applications into their software-as-a-service (SaaS) deployments. These applications often use a “multi-tenant” design, which isolates the data of a tenant (such as a customer of the SaaS app) from other tenants to meet security and privacy guidelines. In a multi-tenant environment, multiple customers share the same application instance and often the same database. Without proper isolation, one tenant could access or manipulate another tenant’s data, leading to severe privacy breaches and security vulnerabilities. Queries that retrieve vector embeddings need to be tenant-aware so that they return only tenant-scoped data from the vector store, and you need a way to enforce tenant isolation.

In this post, we explore the process of building a multi-tenant generative AI application using Aurora PostgreSQL-Compatible for vector storage. In Part 1 (this post), we present a self-managed approach to building the vector search with Aurora. In Part 2, we present a fully managed approach using Amazon Bedrock Knowledge Bases to simplify the integration of the data sources, the Aurora vector store, and your generative AI application.

Solution overview

Consider a multi-tenant use case where users submit home survey requests for the property they are planning to buy. Home surveyors conduct a survey of the property and update their findings. The home survey report with the updated findings is stored in an Amazon Simple Storage Service (Amazon S3) bucket. The home survey company is now planning to provide a feature to allow their users to ask natural language questions about the property. Embedding models are used to convert the home survey document into vector embeddings. The vector embeddings of the document and the original document data are then ingested into a vector store. Finally, the RAG approach enhances the prompt to the large language model (LLM) with contextual data to generate a response back to the user.

There are two main approaches to building a vector-based application. One approach is to handle the conversion to vector embeddings yourself and write SQL queries to insert into and retrieve from the table containing vectors. We call this the self-managed approach, because you are responsible for managing the pipeline. For more context on this approach, watch the session A practitioners guide to data for generative AI. The other option is the fully managed approach using Amazon Bedrock Knowledge Bases. It offloads complexities such as vector embedding conversion, SQL query handling, data pipeline management, and integrations through a low-code implementation (covered in Part 2).

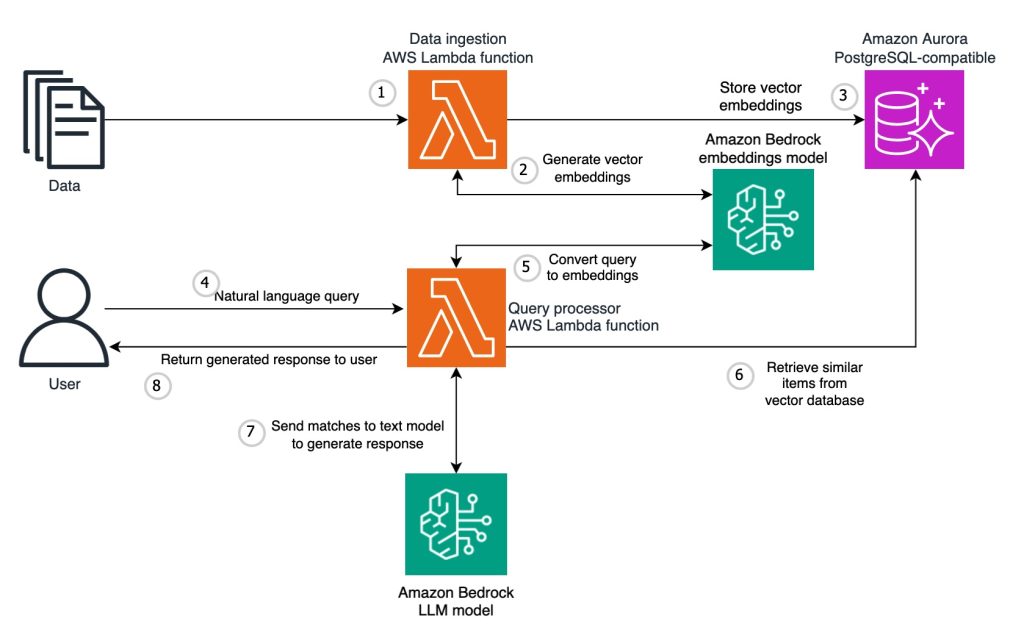

This post shows how to implement the self-managed approach. This involves writing code to take your structured or unstructured data, split it into smaller chunks, send it to an embedding model, and then store the resulting vector embeddings in Amazon Aurora. An example implementation using Aurora PostgreSQL-Compatible is shown in the following diagram.

The high-level steps in this architecture are:

- Data is ingested through an AWS Lambda function.

- The Lambda function splits the document into smaller chunks and calls an embeddings model in Amazon Bedrock to convert each chunk to vector embeddings.

- The vector embeddings are stored in Aurora with pgvector.

- A user asks a natural language query.

- A query processor Lambda function calls an embedding model in Amazon Bedrock to convert the natural language query to embeddings.

- A SQL query is run against the vector store to retrieve similar documents.

- The matching documents are sent to a text-based model in Amazon Bedrock to augment the response.

- The final response is returned to the user.
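The query path in the steps above (embed the question, search the vector store, augment the prompt, generate a response) can be sketched as a small composition of functions. This is a minimal illustration only: the function name answer_question and the three injected callables are hypothetical, and the concrete Amazon Bedrock and RDS Data API calls are covered later in this post.

```python
from typing import Callable, List, Sequence


def answer_question(
    question: str,
    embed: Callable[[str], Sequence[float]],
    search: Callable[[Sequence[float]], List[str]],
    generate: Callable[[str], str],
) -> str:
    """Run the query path: embed, retrieve, augment, generate.

    The three callables are placeholders (assumptions) for an embedding
    model call, a vector store query, and a text model call.
    """
    query_embedding = embed(question)      # convert the question to a vector
    chunks = search(query_embedding)       # retrieve similar chunks
    context = "\n\n".join(chunks)          # augment the prompt with context
    prompt = f"<context>\n{context}\n</context>\n\nQuestion: {question}"
    return generate(prompt)                # generate the final response
```

Passing the model and database calls in as parameters keeps the flow easy to test with stand-in functions before wiring in real services.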

The following are key vector terminologies:

- Vector embeddings – These are numerical floating-point representations of data in a multi-dimensional format that encapsulates meaningful information, semantic relationships, and contextual characteristics.

- Embedding model – Embedding models are LLMs like Amazon Titan Embeddings G1 that convert raw data into vector embeddings format.

- Dimension – This denotes the size of the vector output from the embeddings model. Amazon Titan Text Embeddings V2 supports flexible embedding dimensions (1,024, 512, or 256).

- Chunking – This is the process of breaking the data into smaller pieces before converting them into vector embeddings. Smaller chunks improve future retrieval efficiency. To learn more about different chunking strategies, see How content chunking and parsing works for Amazon Bedrock knowledge bases.

- pgvector – This is an open-source extension feature in PostgreSQL that supports the vector and similarity search capability.

- HNSW index – This graph-based indexing method groups similar vectors into increasingly dense layers, which allows for efficient, high-quality searches over a smaller set of vectors.

- IVFFlat index – This indexing method divides vectors into lists, and then searches a subset of those lists that are closest to the query vector.
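To make the Dimension entry above concrete, the following sketch builds a request body for Amazon Titan Text Embeddings V2 with an explicit output size. It assumes the model accepts the optional dimensions and normalize request fields; the helper name build_titan_v2_body is hypothetical and is not part of the sample repository. Whatever size you choose must match the dimension declared on the vector column in your table.

```python
import json

# Output sizes listed for Amazon Titan Text Embeddings V2 in this post
SUPPORTED_DIMENSIONS = (1024, 512, 256)


def build_titan_v2_body(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    """Return a JSON request body for the embeddings model.

    Assumption: the model accepts "dimensions" and "normalize" alongside
    "inputText". Verify against the current model documentation.
    """
    if dimensions not in SUPPORTED_DIMENSIONS:
        raise ValueError(f"dimensions must be one of {SUPPORTED_DIMENSIONS}")
    return json.dumps({
        "inputText": text,
        "dimensions": dimensions,
        "normalize": normalize,
    })
```

Smaller dimensions reduce storage and can speed up search, usually at some cost in retrieval accuracy, so it is worth measuring recall on your own data before changing the default.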

In the following sections, we walk through the steps to create a vector store; ingest, retrieve, and augment the vector data; and enforce multi-tenant data isolation.

Prerequisites

To follow along with the steps in this post, you need the following resources:

- An AWS account with AWS Identity and Access Management (IAM) permissions to create an Aurora PostgreSQL database.

- An Aurora PostgreSQL instance (provisioned or Amazon Aurora Serverless v2) with the RDS Data API enabled. For the full list of supported versions, see Data API with Aurora PostgreSQL Serverless v2 and provisioned. Starting with Amazon Aurora PostgreSQL-compatible 16.3, 15.7, 14.12, and 13.15, Amazon Aurora Serverless v2 supports scaling to 0 capacity, including for vector workloads.

- The PostgreSQL psql command line tool. For installation instructions, see Connecting to a DB instance running the PostgreSQL database engine.

- Python installed with the Boto3 library.

Additionally, clone the AWS samples repository for data-for-saas-patterns and move to the folder samples/multi-tenant-vector-database/amazon-aurora/self-managed:

git clone https://github.com/aws-samples/data-for-saas-patterns.git

cd data-for-saas-patterns/samples/multi-tenant-vector-database/amazon-aurora/self-managed

Create a vector store with Aurora PostgreSQL-compatible

Configure the Aurora PostgreSQL database to enable the pgvector extension and build the required schema for the vector store. You will need to use a user with the rds_superuser privilege to enable the pgvector extension. These steps vary slightly between the self-managed and fully managed approaches, so you can use different schema and table names to test them separately. Run all the SQL commands from the 1_build_vector_db_on_aurora.sql script using psql or the Amazon RDS console query editor to build the vector store configuration:

- Create and verify the pgvector extension (requires rds_superuser privileges). The following query returns the version of pgvector that’s installed:

CREATE EXTENSION IF NOT EXISTS vector;

SELECT extversion FROM pg_extension WHERE extname='vector';

extversion

-----------

0.7.0

- Create a schema and the vector table (requires database owner privileges):

CREATE SCHEMA self_managed;

CREATE TABLE self_managed.kb (id uuid PRIMARY KEY, embedding vector(1024),

chunks text, metadata jsonb, tenantid bigint);

- Create the index:

CREATE INDEX on self_managed.kb USING hnsw (embedding vector_cosine_ops);

- Enable row-level security:

ALTER TABLE self_managed.kb enable row level security;

CREATE POLICY tenant_policy ON self_managed.kb USING

(tenantid = current_setting('self_managed.kb.tenantid')::bigint);

- Create the app_user role and grant permissions:

CREATE ROLE app_user LOGIN;

\password app_user

GRANT ALL ON SCHEMA self_managed to app_user;

GRANT SELECT ON TABLE self_managed.kb to app_user;

After you run all the commands, the schema should contain the vector table, index, and row-level security policy:

\d self_managed.kb

Table "self_managed.kb"

Column | Type | Collation | Nullable | Default

-----------+-----------------------+-----------+----------+---------

id | uuid | | not null |

embedding | vector(1024) | | |

chunks | text | | |

metadata | jsonb | | |

tenantid | bigint | | |

Indexes:

"kb_pkey" PRIMARY KEY, btree (id)

"kb_embedding_idx" hnsw (embedding vector_cosine_ops)

Policies:

POLICY "tenant_policy"

USING (((tenantid)::text = ((current_setting('self_managed.kb.tenantid'::text))::character varying)::text))

We use the following fields in the vector table:

- id – The UUID field will be the primary key for the vector store.

- embedding – The vector field that will be used to store the vector embeddings. The argument 1024 denotes the number of dimensions.

- chunks – A text field to store the raw text from your source data in chunks.

- metadata – The JSON metadata field (stored using the jsonb data type), which is used to store source attribution, particularly when using the managed ingestion through Amazon Bedrock Knowledge Bases.

- tenantid – This field is used to identify and tie the data and chunks to specific tenants in a SaaS multi-tenant pooled environment.

To improve retrieval performance, pgvector supports the HNSW and IVFFlat index types. It’s typically simpler to start with an HNSW index because of its ease of management and its better query performance and recall profile. For highly selective queries that filter out most of the results, consider using a b-tree index or iterative index scans. For more detailed info on how these indexes work, refer to A deep dive into IVFFlat and HNSW techniques (and if you’re curious for the latest info – check out DAT423).

Ingest the vector data

Self-managed ingestion logic involves writing some code to take your data, send it to an embeddings model, and then store the resulting vector embeddings in your vector store. Embeddings are numerical representations of the real-world objects in a multi-dimensional space that capture the properties and relationships between real-world data. To convert data into embeddings, you can use the Amazon Titan Text Embeddings model. Review the following example code to understand how to convert text data into vector embeddings using the Amazon Titan embeddings model. The sample code described for the self-managed approach can be found in the notebook 2_sql_based_self_managed_rag.ipynb.

import json
import boto3

# Assumes AWS credentials and a Region with Amazon Bedrock access are configured
bedrock_runtime = boto3.client('bedrock-runtime')

def generate_vector_embeddings(data):
    body = json.dumps({
        "inputText": data,
    })

    # Invoke embedding model
    response = bedrock_runtime.invoke_model(
        body=body,
        modelId='amazon.titan-embed-text-v2:0',
        accept='application/json',
        contentType='application/json'
    )

    # Parse the streaming response body and return the embedding vector
    response_body = json.loads(response['body'].read())
    embedding = response_body.get('embedding')
    return embedding

With the function to generate vector embeddings defined, the next step is to insert these vector embeddings into the database. You can use the RDS Data API to run the insert queries. The RDS Data API simplifies setting up secure network access to your database, and removes the need to manage connections and drivers to connect to the Aurora PostgreSQL database. The following code shows the function to insert the vector embeddings using the RDS Data API:

import json
import uuid
import boto3

# Assumes cluster_arn, secret_arn, and db_name are already defined for your cluster
rdsData = boto3.client('rds-data')

def insert_into_vector_db(embedding, chunk, metadata, tenantid):
    # Insert query parameters
    params = []
    params.append({"name": "id", "value": {"stringValue": str(uuid.uuid4())}})
    params.append({"name": "embedding", "value": {"stringValue": str(embedding)}})
    params.append({"name": "chunks", "value": {"stringValue": chunk}})
    params.append({"name": "metadata", "value": {"stringValue": json.dumps(metadata)}, "typeHint": "JSON"})
    params.append({"name": "tenantid", "value": {"longValue": tenantid}})

    # Invoke the Insert query using RDS Data API
    response = rdsData.execute_statement(
        resourceArn=cluster_arn,
        secretArn=secret_arn,
        database=db_name,
        sql="INSERT INTO self_managed.kb(id, embedding, chunks, metadata, tenantid) VALUES (:id::uuid,:embedding::vector,:chunks, :metadata::jsonb, :tenantid::bigint)",
        parameters=params
    )
    return response

Next, the input document is split into smaller chunks. In this example, we use PyPDFLoader to load and parse the PDF documents and the LangChain framework’s RecursiveCharacterTextSplitter class to chunk the documents. You can choose your own chunking strategy and implement custom code for chunking.

# Load the document
file_name = "../multi_tenant_survey_reports/Home_Survey_Tenant1.pdf"
loader = PyPDFLoader(file_name)
doc = loader.load()

# Split documents into chunks
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=10000,
    chunk_overlap=150
)
chunks = text_splitter.split_documents(doc)

# Generate vector embeddings and insert into vector db
# tenantid is a bigint column, so pass the numeric tenant ID
for chunk in chunks:
    embedding = generate_vector_embeddings(chunk.page_content)
    insert_response = insert_into_vector_db(embedding, chunk.page_content, file_name, 1)
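To illustrate what the chunk size and chunk overlap settings mean, here is a simplified fixed-size splitter in plain Python. It is not the algorithm used by RecursiveCharacterTextSplitter, which prefers to break on separators such as paragraphs and sentences; the function name split_with_overlap is a hypothetical stand-in for illustration only.

```python
from typing import List


def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into fixed-size character windows that overlap.

    Each chunk starts (chunk_size - chunk_overlap) characters after the
    previous one, so neighboring chunks share chunk_overlap characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window has reached the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap means that a sentence falling on a chunk boundary is still present in full in at least one chunk, as long as it is shorter than the overlap.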

After you insert the vector embeddings, connect to the Aurora PostgreSQL database using psql to verify that the data has been inserted into the vector table:

SELECT count(*) FROM self_managed.kb;

count

-------

3

(1 row)

Retrieve the vector data

The retrieval of the vector data is used in the RAG process to enhance the prompt with domain-specific data before sending the question to the LLM. The natural language question from the end-user is first converted into a vector embedding. The query retrieves the vectors from the database that are most similar to the input vector embedding, which is measured by computing the distance between the vectors and returning the closest ones. The pgvector extension supports several distance functions (such as L2 distance, inner product, cosine distance, L1 distance, Hamming distance, and Jaccard distance) to get the nearest neighbor matches to a vector.
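As a rough illustration of three of the distance functions mentioned above, the following plain-Python sketch computes them for small vectors. The operator names in the comments reflect pgvector's query operators as we understand them; the Python functions themselves are illustrative and are not part of pgvector or the sample repository.

```python
import math
from typing import Sequence


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance (pgvector operator: <->)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product (pgvector's <#> operator returns the negative of this)."""
    return sum(x * y for x, y in zip(a, b))


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine distance = 1 - cosine similarity (pgvector operator: <=>)."""
    norm_a = math.sqrt(inner_product(a, a))
    norm_b = math.sqrt(inner_product(b, b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - inner_product(a, b) / (norm_a * norm_b)
```

Cosine distance ignores vector length and compares direction only, which is why vectors pointing the same way have a distance of 0 even when their magnitudes differ.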

The following function uses the RDS Data API for self-managed data retrieval using the cosine distance operator (<=>):

# function to query the vector database using cosine distance
def query_vector_database(embedding):
    paramSet = [{'name': 'embedding', 'value': {'stringValue': str(embedding)}}]
    response = rdsData.execute_statement(
        resourceArn=cluster_arn,
        secretArn=secret_arn,
        database=db_name,
        sql='SELECT id,metadata,chunks FROM self_managed.kb ORDER BY embedding <=> :embedding::vector LIMIT 5; ',
        parameters=paramSet)
    return response

Combine the generate_vector_embeddings() and query_vector_database() functions to implement the self-managed vector search for any question or query from the end user:

question = "What is the condition of the roof in my survey report?"

embedding = generate_vector_embeddings(question)

query_response = query_vector_database(embedding)

print(query_response)

# Relevant chunks of source doc (trimmed for brevity)

'"../multi_tenant_survey_reports/Home_Survey_Tenant1.pdf"'...

{'stringValue': 'Type: Vinyl Condition: Fair Notes: Several areas with cracked ...

Augment the vector data

The next step is to add the retrieved data to the prompt that is sent to an LLM. In Amazon Bedrock, you can choose which foundation model (FM) to use. This example uses Anthropic Claude on Amazon Bedrock to generate an answer to the user’s question along with the augmented context. The data chunks retrieved from the vector store enhance the prompt with more contextual and domain-specific data before it is sent to the LLM. The following code is an example of how to invoke the LLM on Amazon Bedrock using the InvokeModel API:

def generate_message(bedrock_runtime, model_id, system_prompt, messages, max_tokens):
    body = json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "system": system_prompt,
            "messages": messages
        }
    )
    response = bedrock_runtime.invoke_model(body=body, modelId=model_id)
    response_body = json.loads(response.get('body').read())
    return response_body

def invoke_llm_with_rag(messages):
    model_id = 'anthropic.claude-3-haiku-20240307-v1:0'
    response = generate_message(bedrock_runtime, model_id, "", messages, 300)
    return response

You first generate the vector embedding of the user’s natural language question. Next, you query the vector store to retrieve the data chunks that are semantically closest to the generated embedding. These retrieved data chunks are added to the prompt as context before the prompt is sent to the LLM. The following is an example of how to define the prompt and augment it with the domain data retrieved from the vector store. This example is also available to view in the samples repository:

# Collect the string values returned by the vector store query
def get_contexts(retrievalResults):
    contexts = []
    for retrievedResult in retrievalResults:
        for chunk in retrievedResult:
            contexts.append(chunk['stringValue'])
    return contexts

contexts = get_contexts(query_response['records'])

# The f-string already substitutes contexts and question
prompt = f"""
Human: Use the following pieces of context to provide a concise answer to the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.

<context>
{contexts}
</context>

Question: {question}

A:
"""

messages = [{"role": 'user', "content": [{'type': 'text', 'text': prompt}]}]
llm_response = invoke_llm_with_rag(messages)
print(llm_response['content'][0]['text'])

Generated response from LLM:

According to the survey report, the condition of the roof is described as "Good".

The report mentions that the roof is made of asphalt shingles with an estimated

age of 8 years, and notes that there are "A few missing shingles on the southwest corner".
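The get_contexts() helper shown earlier appends every string column of each returned row (the id, the metadata, and the chunk text) to the context. If you prefer to pass only the chunk text to the LLM, one option is to pick that column by position. The following is a minimal sketch under that assumption: the names extract_chunks and build_prompt are hypothetical, and the default index of 2 assumes the column order id, metadata, chunks used by query_vector_database().

```python
from typing import Dict, List


def extract_chunks(records: List[List[Dict]], chunk_index: int = 2) -> List[str]:
    """Return only the chunk text column from RDS Data API records.

    Each record is a list of field dictionaries such as
    {'stringValue': ...}; rows where the chunk is not a string are skipped.
    """
    chunks = []
    for row in records:
        field = row[chunk_index]
        if "stringValue" in field:
            chunks.append(field["stringValue"])
    return chunks


def build_prompt(chunks: List[str], question: str) -> str:
    """Place the retrieved chunks inside <context> tags ahead of the question."""
    context = "\n\n".join(chunks)
    return (
        "Use the following pieces of context to provide a concise answer "
        "to the question at the end.\n\n"
        f"<context>\n{context}\n</context>\n\n"
        f"Question: {question}"
    )
```

If the SELECT list changes (for example, when tenantid is added as a column), adjust chunk_index to match the new position of the chunks column.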

Enforce multi-tenant data isolation

To explore multi-tenancy and data isolation, you can onboard a few more tenants by inserting their documents into the vector store:

insert_tenant_document("../multi_tenant_survey_reports/Home_Survey_Tenant2.pdf", tenantid=2)

insert_tenant_document("../multi_tenant_survey_reports/Home_Survey_Tenant3.pdf", tenantid=3)

insert_tenant_document("../multi_tenant_survey_reports/Home_Survey_Tenant4.pdf", tenantid=4)

insert_tenant_document("../multi_tenant_survey_reports/Home_Survey_Tenant5.pdf", tenantid=5)

After all new documents are inserted, retrieve the vector data using the same question as in the previous example. The query now returns data chunks from all tenants’ documents, because the data is pooled into a single vector store for all tenants. You can observe from the sample output of the following query that the retrieved data contains data chunks from multiple tenant documents:

# Define the query data and convert it to vector embeddings to query from the vector store

question = "What is the condition of the roof in my survey report?"

embedding = generate_vector_embeddings(question)

query_response = query_vector_database(embedding)

print(query_response)

# Sample output (trimmed)

'"../multi_tenant_survey_reports/Home_Survey_Tenant4.pdf"'...'Condition: Fair Notes: Laminate is swollen in areas, vinyl cracking ...

'"../multi_tenant_survey_reports/Home_Survey_Tenant2.pdf"'...Heater Age: 10+ years Pipe Material: Mix of copper...

'"../multi_tenant_survey_reports/Home_Survey_Tenant1.pdf"'...'HVAC System Heating Type: Forced air Cooling Type: Central AC...

In this self-managed approach, built-in PostgreSQL row-level security is used to achieve tenant isolation. This provides a layer of defense against misconfiguration and prevents data from crossing the tenant boundary.

To learn more about using row-level security with the RDS Data API for multi-tenant data access, see Enforce row-level security with the RDS Data API.
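As a mental model only (this is not how PostgreSQL implements row-level security), the effect of a tenant policy on a similarity query can be sketched in plain Python: rows that belong to other tenants are filtered out, and only the remaining rows are ranked by distance. The row layout and the function name tenant_scoped_top_k are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine distance = 1 - cosine similarity."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def tenant_scoped_top_k(rows: List[Dict], query: Sequence[float],
                        tenantid: int, k: int = 5) -> List[Dict]:
    """Return the k nearest rows that belong to the given tenant.

    Mirrors the policy condition (tenantid = current tenant) followed by
    ORDER BY distance LIMIT k.
    """
    visible = [row for row in rows if row["tenantid"] == tenantid]
    visible.sort(key=lambda row: cosine_distance(row["embedding"], query))
    return visible[:k]
```

Because the filter is applied by the database rather than by each query, a query that forgets a WHERE clause on tenantid still cannot return another tenant's rows.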

You need to update the query_vector_database() function to support the row-level security feature of PostgreSQL. As part of querying the vector store, you set the tenantid for the current transaction by calling set_config() in the same transaction as the SQL command that queries the vector table. You can review the modified query_vector_database_using_rls() function that allows you to implement tenant isolation:

def query_vector_database_using_rls(embedding, tenantid):
    paramSet = [{"name": "embedding", "value": {"stringValue": str(embedding)}}]

    # Start a transaction so the tenant setting and the query run together
    tr = rdsData.begin_transaction(
        resourceArn=cluster_arn,
        secretArn=secret_arn_rls,
        database=db_name)

    # Set the tenant for this transaction only (third argument true = local)
    rdsData.execute_statement(
        resourceArn=cluster_arn,
        secretArn=secret_arn_rls,
        database=db_name,
        sql="SELECT set_config('self_managed.kb.tenantid', CAST(:tenantid AS TEXT), true)",
        parameters=[
            {'name': 'tenantid', 'value': {'longValue': tenantid}}
        ],
        transactionId=tr['transactionId'])

    # Query the vector table; the row-level security policy filters the rows
    response = rdsData.execute_statement(
        resourceArn=cluster_arn,
        secretArn=secret_arn_rls,
        database=db_name,
        sql='SELECT id,tenantid,metadata,chunks FROM self_managed.kb ORDER BY embedding <=> :embedding::vector LIMIT 5; ',
        parameters=paramSet,
        transactionId=tr['transactionId'])

    cr = rdsData.commit_transaction(
        resourceArn=cluster_arn,
        secretArn=secret_arn_rls,
        transactionId=tr['transactionId'])
    return response

Finally, ask the same question and retrieve the tenant-specific document chunks using the row-level security feature of PostgreSQL. The output will be from the document chunks specific to the tenantid passed as the filter key:

question = "What is the condition of the roof?"
embedding = generate_vector_embeddings(question)

# tenantid is sent as a longValue, so pass the numeric tenant ID
query_response = query_vector_database_using_rls(embedding, 3)
print(query_response)

# Sample output (trimmed)
'"../multi_tenant_survey_reports/Home_Survey_Tenant3.pdf"'...'Water Heater Age: 2 years Pipe Material: PEX Condition: Excellent Notes: Highly efficient, no issues...
'"../multi_tenant_survey_reports/Home_Survey_Tenant3.pdf"'...'Siding Type: Stucco Condition: Good Notes: Some minor cracks but overall good shape...

Clean up

To avoid incurring future charges, delete all the resources created in the prerequisites section.

Conclusion

In this post, we showed you how to work with Aurora PostgreSQL-Compatible with pgvector using a self-managed ingestion pipeline and how to enforce tenant isolation using row-level security. Several factors like performance, indexing strategies, and semantic search capabilities need to be considered when choosing a database for generative AI applications. Choosing a self-managed ingestion pipeline gives you more flexibility, but the trade-off is more operational complexity. When choosing a vector store, it’s important to consider the backup, restore, and performance characteristics. To understand the different factors for choosing the vector store, refer to Key considerations when choosing a database for your generative AI applications.

In Part 2, we discuss the fully managed approach of using Amazon Bedrock Knowledge Bases and how to enforce tenant data isolation using metadata filtering.

About the Authors

Josh Hart is a Principal Solutions Architect at AWS. He works with ISV customers in the UK to help them build and modernize their SaaS applications on AWS.

Nihilson Gnanadason is a Senior Solutions Architect at AWS. He works with ISVs in the UK to build, run, and scale their software products on AWS.
