This post is the continuation of the series on building multi-tenant vector stores with Amazon Aurora PostgreSQL-Compatible Edition. In Part 1, we explored a self-managed approach for building a multi-tenant vector search. The self-managed approach uses direct SQL queries and the RDS Data API to ingest and retrieve data from the vector store. It also enforces multi-tenant data isolation using built-in row-level security policies.

In this post, we discuss the fully managed approach using Amazon Bedrock Knowledge Bases to simplify the integration of the data source with your generative AI application using Aurora. Amazon Bedrock is a fully managed service that makes foundation models (FMs) from leading AI startups and Amazon available through an API, so you can choose from a wide range of FMs to find the model that is best suited for your use case.

Solution overview

Consider a multi-tenant use case where users raise home survey requests for the property they are planning to buy. Home surveyors conduct a survey of the property and update their findings. The home survey report with the updated findings is stored in an Amazon Simple Storage Service (Amazon S3) bucket. The home survey company is now planning to provide a feature to allow their users to ask natural language questions about the property. Embedding models are used to convert the home survey document into vector embeddings. The vector embeddings of the document and the original document data are then ingested into a vector store. Finally, the Retrieval Augmented Generation (RAG) approach enhances the prompt to the large language model (LLM) with contextual data to generate a response back to the user.

Amazon Bedrock Knowledge Bases is a fully managed capability that helps you implement the entire RAG workflow, from ingestion to retrieval and prompt augmentation, without having to build custom integrations to data sources and manage data flows.

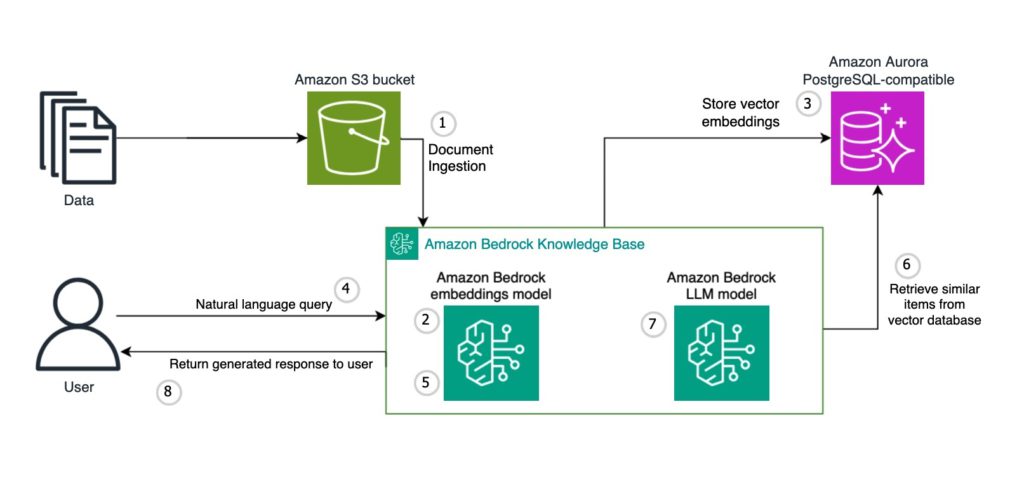

Part 1 explored the self-managed approach, in which you generate the vector embeddings yourself and write your own SQL queries to insert data into the table. The alternative is a fully managed approach using Amazon Bedrock Knowledge Bases, which offloads this complexity with a low-code approach. We demonstrate how to use this feature to manage the creation and persistence of the vector embeddings in an Aurora PostgreSQL vector store. The preceding diagram shows an example implementation using Amazon Bedrock Knowledge Bases and an Amazon Aurora PostgreSQL-compatible vector store.

The high-level steps in this architecture are:

- Data is ingested from an S3 bucket through Amazon Bedrock Knowledge Bases.

- Amazon Bedrock Knowledge Bases calls an embeddings model in Amazon Bedrock to convert the documents to vector embeddings.

- The vector embeddings along with the data chunks and metadata are stored in Aurora with pgvector.

- A user asks a natural language query.

- The embeddings model configured in Amazon Bedrock Knowledge Bases converts the query to embeddings. This is the same embeddings model used for data ingestion.

- Amazon Bedrock Knowledge Bases runs a query against the vector store to retrieve similar documents.

- The matching document chunks are added to the prompt sent to an LLM in Amazon Bedrock, which generates the response.

- The final response is returned to the user.

In the following sections, we walk through the steps to create a vector store; ingest, retrieve, and augment the vector data; and enforce multi-tenant data isolation.

Prerequisites

To follow along with the steps in this post, you need the following resources:

- An AWS account with AWS Identity and Access Management (IAM) permissions to create an Amazon Aurora PostgreSQL-compatible database.

- An Amazon Aurora PostgreSQL-compatible instance version 16.4 or higher with the RDS Data API enabled. For the full list of supported versions, see Data API with Aurora PostgreSQL Serverless v2 and provisioned.

- The PostgreSQL psql command line tool. For installation instructions, see Connecting to a DB instance running the PostgreSQL database engine.

- Python installed with the Boto3 library.

Additionally, clone the AWS samples repository for data-for-saas-patterns and move to the folder samples/multi-tenant-vector-database/amazon-aurora/aws-managed:

Create a vector store with Amazon Aurora PostgreSQL-compatible

Start with configuring the Aurora PostgreSQL database to enable the pgvector extension and build the required schema for the vector store. These steps vary slightly between the self-managed and fully managed approaches, so you should use a different schema and table names. Run all the SQL commands from the 1_build_vector_db_on_aurora.sql script using psql or the Amazon RDS console query editor or any PostgreSQL query editor tool to build the vector store configuration.

- Create and verify the pgvector extension:

- Create a schema and vector table:

- Create the index:

- Create a user and grant permissions:

After you run the commands, the schema should contain the vector table and index:

We use the following fields for the vector table:

- id – The UUID field will be the primary key for the vector store.

- embedding – The vector field that stores the vector embeddings. The dimension argument (1024 in this example) is the size of the vector produced by the embeddings model. The Amazon Titan Text Embeddings V2 model supports flexible embedding dimensions (1024, 512, 256).

- chunks – A text field to store the raw text from your source data in chunks.

- metadata – The JSON metadata field (stored using the jsonb data type), which is used to store source attribution, particularly when using the managed ingestion through Amazon Bedrock Knowledge Bases.

- tenantid – This field is used to identify and tie the data and chunks to specific tenants in a software as a service (SaaS) multi-tenant pooled environment. We also use this field as the key for filtering the data during retrieval.
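
The schema steps and fields described above can be sketched as an ordered list of SQL statements held in Python, ready to be run through psql or any PostgreSQL client. The schema name `aws_managed`, table name `kb`, role name `bedrock_user`, the `varchar(10)` width, and the HNSW index with cosine distance are assumptions made for illustration; the authoritative statements are in the 1_build_vector_db_on_aurora.sql script.

```python
# Hypothetical names; adjust them to match 1_build_vector_db_on_aurora.sql.
SCHEMA = "aws_managed"
TABLE = "kb"
DB_USER = "bedrock_user"
EMBEDDING_DIMENSIONS = 1024  # must match the output size of the embeddings model


def build_vector_store_ddl(schema=SCHEMA, table=TABLE, user=DB_USER,
                           dimensions=EMBEDDING_DIMENSIONS):
    """Return the SQL statements that set up the vector store, in run order."""
    qualified = f"{schema}.{table}"
    return [
        # 1. Create and verify the pgvector extension.
        "CREATE EXTENSION IF NOT EXISTS vector;",
        "SELECT extversion FROM pg_extension WHERE extname = 'vector';",
        # 2. Create a schema and the vector table.
        f"CREATE SCHEMA IF NOT EXISTS {schema};",
        (
            f"CREATE TABLE IF NOT EXISTS {qualified} ("
            "id uuid PRIMARY KEY, "
            f"embedding vector({dimensions}), "
            "chunks text, "
            "metadata jsonb, "
            "tenantid varchar(10));"
        ),
        # 3. Create an approximate nearest neighbor index on the embeddings.
        f"CREATE INDEX ON {qualified} USING hnsw (embedding vector_cosine_ops);",
        # 4. Create a database user and grant permissions.
        #    Set the password separately, for example with \password in psql.
        f"CREATE ROLE {user} LOGIN;",
        f"GRANT ALL ON SCHEMA {schema} TO {user};",
        f"GRANT ALL ON TABLE {qualified} TO {user};",
    ]
```

Printing the statements with `print("\n".join(build_vector_store_ddl()))` gives a script you can paste into psql.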

Ingest the vector data

To ingest the data, you need to create the knowledge base and configure the data source, embedding model, and the underlying vector store. You can create the knowledge base on the Amazon Bedrock console or using code. For creating through code, review the bedrock_knowledgebase_managed_rag.ipynb notebook, which provides all the required IAM policies and role and the step-by-step instructions.
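
As a rough sketch of the code path, the following helper builds the request for the `create_knowledge_base` operation of the Boto3 `bedrock-agent` client with Aurora as the vector store. The table name `aws_managed.kb`, the default Region, and the choice of the Titan Text Embeddings V2 model ARN are assumptions; the notebook remains the reference for the IAM role and the complete setup.

```python
def build_knowledge_base_request(name, role_arn, cluster_arn, secret_arn,
                                 database, region="us-east-1",
                                 table_name="aws_managed.kb"):
    """Build keyword arguments for bedrock-agent create_knowledge_base.

    The field mapping ties the knowledge base to the columns of the
    vector table (id, embedding, chunks, metadata).
    """
    # Assumed embeddings model; its default output size is 1024 dimensions.
    embedding_model_arn = (
        f"arn:aws:bedrock:{region}::foundation-model/"
        "amazon.titan-embed-text-v2:0"
    )
    return {
        "name": name,
        "roleArn": role_arn,
        "knowledgeBaseConfiguration": {
            "type": "VECTOR",
            "vectorKnowledgeBaseConfiguration": {
                "embeddingModelArn": embedding_model_arn,
            },
        },
        "storageConfiguration": {
            "type": "RDS",
            "rdsConfiguration": {
                "resourceArn": cluster_arn,           # Aurora cluster ARN
                "credentialsSecretArn": secret_arn,   # Secrets Manager secret
                "databaseName": database,
                "tableName": table_name,
                "fieldMapping": {
                    "primaryKeyField": "id",
                    "vectorField": "embedding",
                    "textField": "chunks",
                    "metadataField": "metadata",
                },
            },
        },
    }
```

You would pass the result to the client as `boto3.client("bedrock-agent").create_knowledge_base(**request)`, then attach the S3 bucket as a data source.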

After you configure the knowledge base with the data source and the vector store, you can start uploading the documents to an S3 bucket and ingest them into the vector store. Amazon Bedrock Knowledge Bases simplifies the data ingestion into the Aurora PostgreSQL-compatible vector store with a single API call:
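
A minimal sketch of that call, assuming a Boto3 `bedrock-agent` client is passed in, is shown below. The helper name, polling interval, and poll limit are illustrative choices rather than part of the original sample; the single API call is `start_ingestion_job`, and `get_ingestion_job` is used only to wait for completion.

```python
import time

# Job states after which polling can stop.
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def ingest_and_wait(bedrock_agent, knowledge_base_id, data_source_id,
                    poll_seconds=10, max_polls=60):
    """Start an ingestion job and poll until it reaches a terminal state."""
    job = bedrock_agent.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    polls = 0
    while job["status"] not in TERMINAL_STATUSES:
        if polls >= max_polls:
            raise TimeoutError(
                f"Ingestion job {job['ingestionJobId']} did not finish in time"
            )
        time.sleep(poll_seconds)
        job = bedrock_agent.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
        polls += 1
    return job
```

Create the client with `boto3.client("bedrock-agent")` and check that the returned job's `status` is `COMPLETE` before querying the vector table.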

Wait for the ingestion job to complete, then query the Aurora PostgreSQL-compatible database to verify that the vector embeddings are ingested and stored in the vector table. The single ingested document produces several rows, one per chunk, with the count depending on how the standard fixed-size chunking strategy splits the document. How a document is split into chunks affects both the efficiency and the quality of data retrieval. To learn more about different chunking strategies, see How content chunking works for knowledge bases. You can verify that the data has been stored in the vector store, and check the number of chunks, by running the following SQL command:
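
One way to run this check from Python is through the RDS Data API, as in the sketch below. The table name `aws_managed.kb` is an assumption, and the helper expects a Boto3 `rds-data` client plus the cluster and secret ARNs for your environment.

```python
def count_chunks(rds_data, cluster_arn, secret_arn, database,
                 table="aws_managed.kb"):
    """Return the number of rows (chunks) stored in the vector table."""
    response = rds_data.execute_statement(
        resourceArn=cluster_arn,
        secretArn=secret_arn,
        database=database,
        # The table name is a trusted constant here, never user input.
        sql=f"SELECT count(*) FROM {table};",
    )
    # count(*) is a bigint, which the Data API returns as a longValue field.
    return response["records"][0][0]["longValue"]
```

Create the client with `boto3.client("rds-data")`. The equivalent statement in psql is `SELECT count(*) FROM aws_managed.kb;`.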

Preparing for prompt augmentation with vector similarity search

We perform a vector similarity search in RAG to find the appropriate source data (in this case text chunks) to enhance the prompt with domain-specific data before sending the question to the LLM. The natural language question from the end-user is first converted into vector embeddings. The vector store then returns the stored vectors that most closely match the input vector embedding.

Using the Amazon Bedrock Knowledge Bases APIs abstracts the retrieval mechanism based on the configured vector store and relieves you from writing the complex SQL-based search queries. The following code is an example showing the Retrieve API of Amazon Bedrock Knowledge Bases:

You can use the Retrieve API to retrieve the vector data chunks from the Tenant1 document based on any given natural language question from the end-user:
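
A call to the Retrieve API might look like the following sketch, which assumes a Boto3 `bedrock-agent-runtime` client is passed in. The helper name, the default of five results, and the shape of the returned dictionaries are illustrative choices.

```python
def retrieve(bedrock_agent_runtime, knowledge_base_id, question, top_k=5):
    """Return the chunks most similar to the question, with source and score."""
    response = bedrock_agent_runtime.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": top_k}
        },
    )
    return [
        {
            "text": result["content"]["text"],
            # S3 URI of the source document, when the data source is S3.
            "source": result.get("location", {}).get("s3Location", {}).get("uri"),
            "score": result.get("score"),
        }
        for result in response["retrievalResults"]
    ]
```

Create the client with `boto3.client("bedrock-agent-runtime")` and pass the knowledge base ID returned when the knowledge base was created.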

Building an augmented prompt

The next step is building the augmented prompt that will be sent to a foundation model (FM). In Amazon Bedrock, you can choose which foundation model to use. This example uses Anthropic Claude on Amazon Bedrock to generate an answer to the user’s question along with the augmented context. The data chunks retrieved from the vector store can be used to enhance the prompt with more contextual and domain-specific data before sending it to the FM. The following code is an example of how you can invoke Anthropic’s Claude FM on Amazon Bedrock using the InvokeModel API:
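
The sketch below shows one way to call InvokeModel with the Anthropic Messages request format, assuming a Boto3 `bedrock-runtime` client is passed in. The model ID and the `max_tokens` default are assumptions; use any Claude model that is enabled in your account and Region.

```python
import json

# Assumed model ID; substitute the Claude model enabled in your account.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def invoke_claude(bedrock_runtime, prompt, model_id=MODEL_ID, max_tokens=512):
    """Send a single-turn prompt to Claude and return the generated text."""
    body = json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    })
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        body=body,
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream that contains a JSON document.
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]
```

Create the client with `boto3.client("bedrock-runtime")` and pass the augmented prompt as the `prompt` argument.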

When using Amazon Bedrock Knowledge Bases, you need to invoke the Retrieve API, passing the user question and knowledge base ID. Amazon Bedrock Knowledge Bases generates the vector embeddings and queries the vector store to retrieve the closest and related embeddings. The following code is an example of how to frame the prompt and augment it with the domain data retrieved from the vector store:
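
Framing the prompt can be as simple as the following sketch, which places the retrieved chunks in a context block ahead of the question. The template wording and the `<context>` tags are illustrative assumptions, not the prompt used in the sample notebook.

```python
PROMPT_TEMPLATE = """Use the following context from the home survey report to answer the question. If the context does not contain the answer, say that you don't know.

<context>
{context}
</context>

Question: {question}"""


def build_augmented_prompt(question, chunks):
    """Combine retrieved text chunks and the user question into one prompt."""
    # Drop empty chunks and separate the rest with blank lines.
    context = "\n\n".join(chunk.strip() for chunk in chunks if chunk.strip())
    return PROMPT_TEMPLATE.format(context=context, question=question)
```

The `chunks` argument is the list of text values taken from the Retrieve API results, and the returned string is what you send to the FM.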

Enforce multi-tenant data isolation

To explore multi-tenancy and data isolation, you can onboard a few more tenants by uploading their documents into the knowledge base data source. You need to ingest these new documents and wait for the ingestion to complete. See the following code:
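
As an illustration, onboarding could be sketched as uploading each tenant document to the data source bucket and then starting one ingestion job. The helper name and the use of the file name as the S3 object key are assumptions; the function expects Boto3 `s3` and `bedrock-agent` clients.

```python
from pathlib import Path


def onboard_tenant_documents(s3, bedrock_agent, bucket, knowledge_base_id,
                             data_source_id, documents):
    """Upload local documents to S3, then start a single ingestion job.

    Returns the uploaded object keys and the ID of the ingestion job.
    """
    keys = []
    for path in documents:
        key = Path(path).name  # assumed key layout: file name only
        s3.upload_file(Filename=str(path), Bucket=bucket, Key=key)
        keys.append(key)

    job = bedrock_agent.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]
    return keys, job["ingestionJobId"]
```

Poll `get_ingestion_job` with the returned job ID until the status is `COMPLETE` before running retrieval.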

After all the new documents are ingested, retrieve the vector data using the same natural language question as in the previous example. This will result in retrieving the data chunks from all the documents because the data is pooled into a single table for all tenants. You can observe from the sample output of the Retrieve API that the retrieved data contains data chunks from multiple tenant documents:

Tenant data isolation is critical in a multi-tenant SaaS deployment, and you need a way to enforce isolation such that the Retrieve API is tenant-aware to retrieve only tenant-scoped data from the vector store. Amazon Bedrock Knowledge Bases supports a feature called metadata and filtering. You can use this feature to implement tenant data isolation with vector stores. To enable the filters, first tag all the documents in the data source with their respective tenant metadata. For each document, add a metadata.json document with its respective tenantid metadata tag, like in the following code:
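
The sketch below writes such a metadata file next to a local document before upload. Amazon Bedrock Knowledge Bases pairs a metadata file with its source document by name, using the document's file name followed by the `.metadata.json` suffix, and reads attributes from a `metadataAttributes` object. The helper name and the example file name in the usage note are hypothetical.

```python
import json
from pathlib import Path


def write_tenant_metadata(document_path, tenant_id):
    """Create <document name>.metadata.json tagging the document's tenant."""
    document_path = Path(document_path)
    metadata_path = document_path.with_name(
        document_path.name + ".metadata.json"
    )
    metadata = {"metadataAttributes": {"tenantid": tenant_id}}
    metadata_path.write_text(json.dumps(metadata, indent=2))
    return metadata_path
```

For example, `write_tenant_metadata("Tenant1_survey.pdf", "Tenant1")` creates `Tenant1_survey.pdf.metadata.json` in the same folder, ready to upload alongside the document.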

When the tagging is complete for all the documents, you can upload the metadata.json files into the S3 bucket and ingest these metadata files into the knowledge base:

Next, update the retrieve function to add filtering based on the tenantid tag. During retrieval, a filter configuration uses the tenantid to make sure that only tenant-specific data chunks are retrieved from the underlying vector store of the knowledge base. The following code is the updated retrieve function with metadata filtering enabled:
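
A tenant-aware retrieve function could look like the following sketch, which adds an `equals` filter on the `tenantid` metadata key to the vector search configuration. The helper name and default result count are illustrative, and a Boto3 `bedrock-agent-runtime` client is assumed.

```python
def retrieve_for_tenant(bedrock_agent_runtime, knowledge_base_id, question,
                        tenant_id, top_k=5):
    """Return only the chunks whose tenantid metadata matches tenant_id."""
    response = bedrock_agent_runtime.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={
            "vectorSearchConfiguration": {
                "numberOfResults": top_k,
                # Metadata filter that scopes the search to one tenant.
                "filter": {"equals": {"key": "tenantid", "value": tenant_id}},
            }
        },
    )
    return [result["content"]["text"] for result in response["retrievalResults"]]
```

In a SaaS application, it is safer to take `tenant_id` from the authenticated tenant context (for example, a claim in the caller's token) than from anything the end-user types, so that a user cannot request another tenant's data.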

Finally, you can ask the same question and retrieve the tenant-specific document chunks using the knowledge base metadata and filtering feature. The output will be only from the document chunks specific to the tenantid passed as the filter key value:

Best practices for multi-tenant vector store deployments

There are several factors like performance, indexing strategies, and semantic search capabilities that need to be considered when choosing a vector store for generative AI applications. For more information, refer to Key considerations when choosing a database for your generative AI applications.

When deploying a multi-tenant vector store solution in production, consider the following best practices for scaling:

- For the self-managed implementation, consider using read replicas for read-heavy workloads.

- Implement connection pooling to manage database connections efficiently. For more details, see Enforce row-level security with the RDS Data API.

- Plan a vertical and horizontal scaling strategy. For more details, see Scaling your relational database for SaaS.

The following are best practices for performance:

- Optimize the chunk size and strategy for your specific use case. Smaller chunk sizes are suitable for smaller documents or where some loss of context is acceptable, for example, in simple Q&A use-cases. Larger chunks maintain a longer context where the results for larger documents should be very specific, but these can exceed the context length of the model and increase cost. For more details, see A practitioner’s guide to data for Generative AI.

- Test and validate an appropriate embedding model for your use case. The embedding model’s characteristics (including its dimensions) impact both query performance and search quality. Different embedding models have different recall rates, with some smaller models potentially performing better than larger ones.

- For highly selective queries that filter out most of the results, consider using a B-tree index (such as on the tenantid attribute) to guide the query planner. For low-selectivity queries, consider an approximate index such as HNSW. For more details on configuring HNSW indexes, see Best practices for querying vector data for gen AI apps in PostgreSQL.

- Use Amazon Aurora Optimized Reads to improve query performance when your vector workload exceeds available memory. For more details, see Improve the performance of generative AI workloads on Amazon Aurora with Optimized Reads and pgvector.

- Monitor and optimize query performance using PostgreSQL query plans. For more details, see Monitor query plans for Amazon Aurora PostgreSQL.
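
The indexing advice in the list above can be sketched as SQL statements held in Python. The schema, table, and index names are assumptions, and the HNSW values shown (`m = 16`, `ef_construction = 64`, `hnsw.ef_search = 40`) are the pgvector defaults, given as a starting point for tuning rather than as recommendations.

```python
def build_tuning_sql(schema="aws_managed", table="kb", ef_search=40):
    """Return illustrative index and session-tuning statements for pgvector."""
    qualified = f"{schema}.{table}"
    return {
        # B-tree index to support highly selective tenant filters.
        "btree_tenant_index": (
            f"CREATE INDEX IF NOT EXISTS {table}_tenantid_idx "
            f"ON {qualified} (tenantid);"
        ),
        # Approximate nearest neighbor index for low-selectivity searches.
        "hnsw_index": (
            f"CREATE INDEX IF NOT EXISTS {table}_embedding_hnsw_idx "
            f"ON {qualified} USING hnsw (embedding vector_cosine_ops) "
            "WITH (m = 16, ef_construction = 64);"
        ),
        # Higher ef_search improves recall at the cost of query latency.
        "session_ef_search": f"SET hnsw.ef_search = {int(ef_search)};",
    }
```

Prefixing a representative search query with `EXPLAIN (ANALYZE, BUFFERS)` shows which of these indexes the planner actually chooses for your data.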

In general, fully managed features help to remove undifferentiated work, such as pipeline management, so you can focus on building the features that will delight your customers.

Clean up

To avoid incurring future charges, delete all the resources created in the prerequisites section and the knowledge bases you created.

Conclusion

In this post, we showed you how to work with an Aurora vector store using Amazon Bedrock Knowledge Bases and how to enforce tenant isolation with metadata filtering. In Part 1 of this series, we showed how you can do this with a self-managed ingestion pipeline. Tenant data isolation is important when operating a pooled data model of the vector store for your multi-tenant SaaS generative AI application. You can now adopt either of these approaches depending on your specific requirements.

We invite you to try the self-managed and fully managed approaches to build a multi-tenant vector store and leave your feedback in the comments.

About the Authors

Josh Hart is a Principal Solutions Architect at AWS. He works with ISV customers in the UK to help them build and modernize their SaaS applications on AWS.

Nihilson Gnanadason is a Senior Solutions Architect at AWS. He works with ISVs in the UK to build, run, and scale their software products on AWS.
