Imagine that it’s a typical Tuesday afternoon and that you’re the operations manager for a major North American telecommunications company. Suddenly, your Network Operations Center (NOC) receives an alert that web traffic in Toronto has surged by hundreds of percentage points over the last hour—far above its usual baseline. At nearly the same moment, a major Toronto-based client complains that their video streams have been buffering nonstop.

Just a few years ago, a scenario like this would trigger a frantic scramble: teams digging into logs, manually writing queries, and attempting to correlate thousands of lines of data in different formats to find a single root cause.

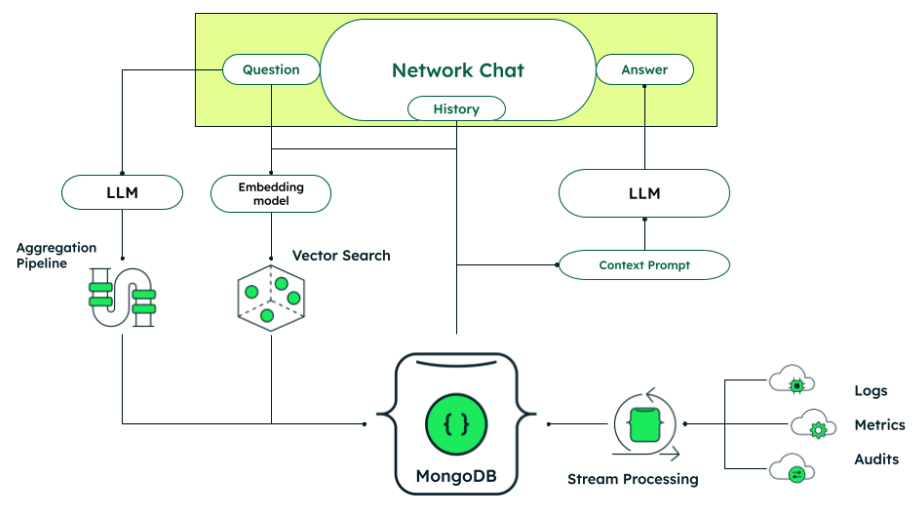

Today, there’s a more streamlined, AI-driven approach. By combining MongoDB’s developer data platform with large language models (LLMs) and a retrieval-augmented generation (RAG) architecture, you can move from reactive “firefighting” to proactive, data-informed diagnostics. Instead of juggling multiple monitoring dashboards or writing complicated queries by hand, you can simply ask for insights—and the system retrieves and analyzes the necessary data automatically.

Facing the unexpected traffic spike

Now let’s imagine the same situation, but this time with AI-assisted network management. Shortly after you spot a traffic surge in Toronto, your NOC chatbot pings you with a situation report: requests from one neighborhood are skyrocketing, and an unusually high percentage involve video streaming paths or caching servers.

Under the hood, MongoDB ingests every log entry and telemetry event in real time, capturing IP addresses, geographic data, request paths, timestamps, router logs, and sensor data. Meanwhile, textual content (such as error messages, user complaints, and chat transcripts) is vectorized and stored in MongoDB for semantic search. Because the search is semantic rather than purely keyword-based, this setup gives near-instant access to relevant information whenever a phrase like “buffering,” “video streams,” or “streaming lag” comes up, which speeds up end-to-end diagnosis.
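
To make the two kinds of data concrete, here is a minimal sketch of what the stored documents could look like. The field names (`geo.city`, `request_path`, `embedding`, and so on) are assumptions chosen for illustration, not a schema from this scenario, and the embedding values would come from whatever embedding model you use.

```python
from datetime import datetime, timezone


def make_log_event(ip, city, path, status, ts=None):
    """Build one structured telemetry document (hypothetical field names)."""
    return {
        "ip": ip,
        "geo": {"city": city},
        "request_path": path,
        "status": status,
        # Default to the current UTC time when no timestamp is supplied.
        "timestamp": ts or datetime.now(timezone.utc),
    }


def make_text_record(text, embedding, source):
    """Build one text document that carries its vector next to the raw text."""
    return {
        "text": text,
        "embedding": list(embedding),  # produced by an embedding model
        "source": source,              # e.g. "complaint", "router_log"
    }
```

With PyMongo, documents like these could be written with `collection.insert_one(...)`. Keeping the vector in the same document as the text means a single query can return both.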

Zeroing in on the root cause

Instead of rummaging through separate logging tools, you pose a simple natural-language question to the system: “What might be causing the client’s video stream buffering problem in Toronto?” The LLM responds by generating a custom MongoDB Aggregation Pipeline—written in Python code—tailored to your query.

The pipeline might look something like this: a $match stage that filters for the last 24 hours of data in Toronto, a $group stage that rolls up metrics by streaming service, and a $sort stage that surfaces the largest error counts.
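
As a rough sketch, the three stages described above could be expressed in Python as follows. The field names (`geo.city`, `timestamp`, `service`, `status`) and the rule that a status of 500 or higher counts as an error are assumptions made for this example; a generated pipeline would use whatever your log documents actually contain.

```python
from datetime import datetime, timedelta, timezone


def build_error_rollup_pipeline(city, now=None, hours=24):
    """Return a $match / $group / $sort pipeline for recent errors in one city."""
    now = now or datetime.now(timezone.utc)
    since = now - timedelta(hours=hours)
    return [
        # Keep only events from the given city within the time window.
        {"$match": {"geo.city": city, "timestamp": {"$gte": since}}},
        # Roll up request and error counts per streaming service.
        {"$group": {
            "_id": "$service",
            "requests": {"$sum": 1},
            "errors": {"$sum": {"$cond": [{"$gte": ["$status", 500]}, 1, 0]}},
        }},
        # Services with the most errors come first.
        {"$sort": {"errors": -1}},
    ]
```

With PyMongo, you would run it as `list(collection.aggregate(build_error_rollup_pipeline("Toronto")))`, where `collection` is your own log collection.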

The code is automatically served back to you, and with a quick confirmation, you execute it on your MongoDB cluster. A moment later, the chatbot returns with a summarized explanation that points to an overloaded local CDN node, along with higher-than-expected requests from older routers known to misbehave under peak load.

Next, you ask the system to explain the core issue in simpler terms so you can share it with a business stakeholder. The LLM takes the numeric results from the Aggregation Pipeline, merges them with textual logs that mention “firmware out-of-date,” and then outputs a cohesive explanation. It even suggests that many of these older routers are still running last year’s firmware release—a known contributor to buffering issues on video streams during traffic spikes.

How retrieval-augmented generation (RAG) helps

The power behind this effortless insight is a RAG architecture, which marries semantic search with generative text responses. First, the system uses vector search in MongoDB to retrieve only those log entries, complaint records, and knowledge base articles that directly relate to streaming. Once the LLM has these key data chunks, it can generate, and continually refine, its analysis.

When the system references historical data to confirm that “similar spikes occurred during the playoffs last year” or that “users with older firmware frequently complain about buffering,” it’s not blindly guessing. Instead, it’s accessing domain-specific logs, user feedback, and diagnostic documents stored in MongoDB, and then weaving them together into a coherent explanation. This eliminates guesswork and slashes the time your team would otherwise spend on low-level data cleanup, correlation, and interpretation.
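
The retrieval half of this flow can be sketched with MongoDB's `$vectorSearch` aggregation stage. This is a minimal illustration, not a full implementation: the index name `complaints_vector_index`, the `embedding`, `text`, and `source` fields, and the prompt layout are all assumptions, and the query vector is expected to come from the same embedding model used at ingest time.

```python
def build_vector_search_pipeline(query_vector, limit=5, num_candidates=100,
                                 index="complaints_vector_index",
                                 path="embedding"):
    """Return a pipeline that fetches the `limit` most similar text records."""
    if num_candidates < limit:
        raise ValueError("num_candidates must be at least limit")
    return [
        {"$vectorSearch": {
            "index": index,                    # hypothetical index name
            "path": path,                      # field that holds the vectors
            "queryVector": list(query_vector),
            "numCandidates": num_candidates,   # candidates considered before ranking
            "limit": limit,
        }},
        # Return only the text, its source, and the similarity score.
        {"$project": {"_id": 0, "text": 1, "source": 1,
                      "score": {"$meta": "vectorSearchScore"}}},
    ]


def build_prompt(question, retrieved_docs):
    """Ground the LLM by placing the retrieved snippets ahead of the question."""
    context = "\n".join(f"- {doc['text']}" for doc in retrieved_docs)
    return f"Context:\n{context}\n\nQuestion: {question}"
```

The documents returned by `collection.aggregate(...)` feed straight into `build_prompt`, so the model answers from your own logs and complaints rather than from its general training data.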

Executing automated remediation

Armed with these insights, your team can roll out a targeted fix, possibly involving an auto-update to the affected routers or load-balancing traffic to alternative CDN endpoints. MongoDB’s Change Streams can monitor for future anomalies. If a traffic spike starts to look suspiciously similar to the scenario you just solved, the system can raise a proactive alert or even initiate the fix automatically.

Refer to the official documentation to learn more about change streams.
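
Here is a minimal sketch of that monitoring loop using PyMongo's `collection.watch()`. It assumes a hypothetical collection of per-minute traffic summaries with a `requests_per_min` field, and a simple "three times the baseline" rule; both are placeholders for whatever signal matches the incident you just resolved. Change streams require a replica set or sharded cluster, which an Atlas cluster provides.

```python
def spike_watch_pipeline(city):
    """Change-stream filter: only newly inserted summaries for one city."""
    return [{"$match": {"operationType": "insert",
                        "fullDocument.geo.city": city}}]


def is_spike(metric_doc, baseline_rpm, factor=3.0):
    """Flag a summary whose request rate is at least `factor` x the baseline."""
    return metric_doc.get("requests_per_min", 0) >= factor * baseline_rpm


def watch_for_spikes(collection, city, baseline_rpm, on_spike):
    """Block on the change stream and call `on_spike` for each suspicious doc."""
    with collection.watch(spike_watch_pipeline(city)) as stream:
        for change in stream:
            doc = change["fullDocument"]
            if is_spike(doc, baseline_rpm):
                on_spike(doc)  # e.g. raise an alert or start a remediation job
```

The `on_spike` callback is where you would plug in a proactive alert or an automated fix.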

Meanwhile, the cost savings add up. You no longer need engineers manually piecing data together, nor do you endure prolonged user dissatisfaction while you try to figure out what’s happening. Everything from anomaly detection to root-cause analysis and recommended mitigation steps is fed through a single pipeline—visible and explainable in plain language.

A future of AI-driven operations

This scenario highlights how gen AI ops and MongoDB complement each other to transform network management:

- Schema flexibility: MongoDB’s document-based model effortlessly stores logs, performance metrics, and user feedback in a single, consistent environment.
- Real-time performance: With horizontal scaling, you can ingest the massive volumes of data generated by network logs and user requests at any hour of the day.
- Vector search integration: By embedding textual data (such as logs, user complaints, or FAQs) and storing those vectors in MongoDB, you enable instant retrieval of semantically relevant content, making it easy for an LLM to find exactly what it needs.
- Aggregation + LLM: An LLM can auto-generate MongoDB Aggregation Pipelines to sift through numeric data with ease, while a second pass to the LLM composes a final summary that merges both numeric and textual analysis.
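
The two-pass "Aggregation + LLM" pattern can be sketched as a small orchestration function. Everything here is illustrative: `llm`, `run_pipeline`, and `retrieve_text` are hypothetical callables you would supply, the prompts are placeholders, and the sketch asks for the pipeline as JSON (rather than Python code) so it can be parsed and checked against an allow-list of stages before anything runs.

```python
import json

# Read-only stages this sketch is willing to execute (an assumption, adjust as needed).
ALLOWED_STAGES = {"$match", "$group", "$sort", "$limit", "$project"}


def parse_pipeline(llm_output):
    """Parse the model's JSON and reject anything outside the allow-list."""
    pipeline = json.loads(llm_output)
    if not isinstance(pipeline, list):
        raise ValueError("expected a JSON array of stages")
    for stage in pipeline:
        if not isinstance(stage, dict) or len(stage) != 1:
            raise ValueError("each stage must be an object with a single key")
        (name,) = stage
        if name not in ALLOWED_STAGES:
            raise ValueError(f"stage {name} is not allowed")
    return pipeline


def answer_question(question, llm, run_pipeline, retrieve_text):
    """Pass 1: generate and run a pipeline. Pass 2: summarize numbers plus text."""
    pipeline = parse_pipeline(
        llm(f"Write a MongoDB aggregation pipeline as a JSON array for: {question}")
    )
    rows = run_pipeline(pipeline)        # e.g. lambda p: list(coll.aggregate(p))
    snippets = retrieve_text(question)   # e.g. results of a vector search
    summary_prompt = (
        f"Question: {question}\n"
        f"Metrics: {json.dumps(rows, default=str)}\n"
        f"Related text: {json.dumps(snippets)}\n"
        "Explain the likely cause in plain language."
    )
    return llm(summary_prompt)
```

The allow-list is a design choice for this sketch: it plays the role of the "quick confirmation" step by refusing stages that write data, such as `$out`, before the pipeline reaches your cluster.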

Once you see how much time and effort this end-to-end workflow saves, you can extend it across the entire organization. Whether it’s analyzing sudden traffic spikes in specific geographies, diagnosing a security event, or handling peak online shopping loads during a holiday sale, the concept remains the same: empower people to ask natural-language questions about complex data, rely on AI to craft the specialized queries behind the scenes, and store it all in a platform that can handle unbounded complexity.

Ready to embrace gen AI ops with MongoDB?

Network disruptions will never fully disappear, but how quickly and intelligently you respond can be a game-changer. By uniting MongoDB with LLM-based AI and a retrieval-augmented generation (RAG) strategy, you transform your network operations from a tangle of logs and dashboards into a conversational, automated, and deeply informed system.

Sign up for MongoDB Atlas to start building your own RAG-based workflows. With intelligent vector search, automated pipeline generation, and natural-language insight, you’ll be ready to tackle everything from video-stream buffering complaints to the next unexpected traffic surge, ideally before users realize there’s a problem.

If you would like to learn more about how to build gen AI applications with MongoDB, visit the following resources:

- Learn more about MongoDB capabilities for artificial intelligence on our product page.
- Get started with MongoDB Vector Search by visiting our product page.
- Blog: Leveraging an Operational Data Layer for Telco Success

Want to learn more about why MongoDB is the best choice for supporting modern AI applications? Check out our on-demand webinar, “Comparing PostgreSQL vs. MongoDB: Which is Better for AI Workloads?” presented by MongoDB Field CTO, Rick Houlihan.
