This is a guest post co-written by Skello.

Skello is a human resources (HR) software-as-a-service (SaaS) platform that focuses on employee scheduling and workforce management. It caters to various sectors, including hospitality, retail, healthcare, construction, and industry. Skello features include schedule creation, time tracking, and payroll preparation. As of 2024, Skello supports 20,000 customers and has 400,000 daily users on the platform in Europe.

Planning and optimizing shift-based teams involves many parameters: legal constraints linked to collective agreements, the structure of the establishment, the way the payroll works, and so on. Skello’s value lies in its ability to coordinate and optimize the management of schedules between teams while taking into account individual requests, unforeseen events, and changing activity flows.

From a technical point of view, these business functionalities are broken down into microservices. It’s a big challenge! How can these services communicate with each other while keeping each other’s data up to date?

In 2021, we, the Skello IT team, migrated our platform to Amazon Web Services (AWS) due to the availability of existing solutions for hosting our monolithic application and the limitations of our former platform in scaling to accommodate our growing customer base. Prior to the migration, our database was already hosted on an AWS account, but it didn’t adhere to the multi-account strategy of the Well-Architected Framework: it consisted of a single server without optimization or security measures in place.

After the migration to AWS, we implemented security standards for our database, such as encryption of data at rest and in transit or network isolation. The application stack was deployed across multiple Availability Zones (a Multi-AZ deployment) with a comprehensive backup strategy and automated backups with a retention period. Our post-migration architecture on Amazon Elastic Compute Cloud (Amazon EC2) for the monolith complied with AWS architectural recommendations. We implemented an Application Load Balancer with scaling policies configured based on traffic patterns and business metrics.

After our monolith was deployed on AWS, we began deploying new projects as microservices, each with its own database according to the component, such as Amazon DynamoDB or Amazon Relational Database Service (Amazon RDS). Our focus then shifted to splitting the monolith into microservices to reduce dependencies within the platform. The migration from a monolithic to a microservice architecture is an ambitious project, requiring the maintenance of synchronization and availability across the entire platform until the full microservice architecture is achieved. During this period, data must be synchronized in real time between the monolith components and the serverless components.

In this post, we show how Skello uses AWS Database Migration Service (AWS DMS) to synchronize data from a monolithic architecture to microservices and to ingest data from the monolithic architecture and microservices into our data lake.

Solution overview

We had the following problem: we needed to keep data in sync between the monolith and the microservices, which are deployed on AWS in the production account and the data account. We have data from application usage (client or Skello platform) and data from internal sources (business and product). The solutions architects and the data teams are owners of the application data. Internal data is used by different teams at Skello (sales or product). This data is useful for our business dashboards and for tracking key performance indicators (KPIs). Data engineers are owners of this data and propose the corresponding data formats.

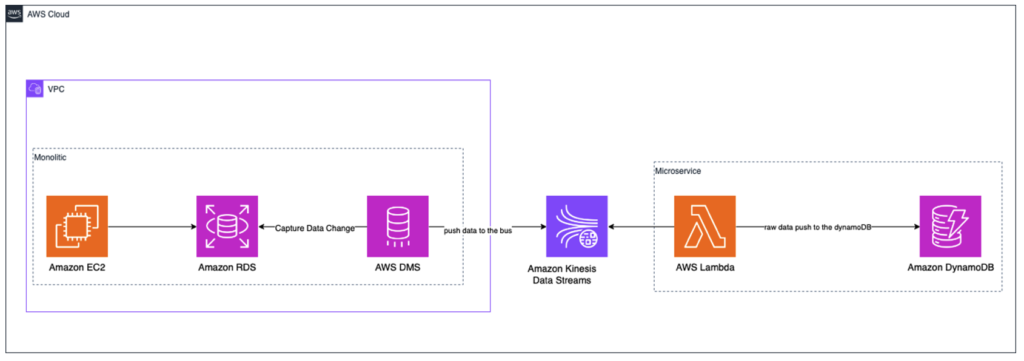

We synchronize application data with change data capture (CDC): AWS DMS propagates changes from the monolith’s database to the microservices. The following diagram illustrates our solution architecture.

After the data is loaded into Amazon Kinesis Data Streams, the AWS Lambda functions belonging to the microservices retrieve it from specific shards. Kinesis Data Streams is a serverless streaming data service that makes it straightforward to capture, process, and store data streams at any scale.
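One common way to connect a Lambda function to a Kinesis data stream is an event source mapping. The post does not show how Skello wires its consumers, so the following is only a minimal Terraform sketch: the variable names, the resource name, and the batch size are assumptions made for illustration.

```hcl
# Illustrative sketch; names and values are hypothetical, not Skello's configuration.
variable "consumer_stream_arn" {
  type        = string
  description = "ARN of the Kinesis data stream that receives the CDC events"
}

variable "consumer_function_name" {
  type        = string
  description = "Name or ARN of the microservice's Lambda function"
}

# Lambda polls the stream and invokes the function with batches of records.
resource "aws_lambda_event_source_mapping" "cdc_consumer" {
  event_source_arn  = var.consumer_stream_arn
  function_name     = var.consumer_function_name
  starting_position = "LATEST" # only read records that arrive after the mapping is created
  batch_size        = 100      # assumed value; tune to the function's processing time
}
```

With this kind of mapping, each microservice filters the records it cares about inside its own function code.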

AWS DMS is a managed migration and replication service that helps move your database and analytics workloads to AWS quickly, securely, and with minimal downtime and zero data loss. AWS DMS provides a fast and straightforward way to replicate data across multiple target databases.

The monolithic database of Skello is based on Amazon RDS for PostgreSQL. It’s a historical database at Skello and the central point of the platform. Amazon RDS is a managed database service that makes it straightforward to set up, operate, and scale a relational database in the cloud.

In the following sections, we discuss the details of setting up the solution and its key components.

AWS DMS replication instance

To migrate the application data to several microservices, we need to use an AWS DMS replication instance to support the data migration.

We can run the replication instance in a Multi-AZ deployment, which provides high availability in production. Deploying AWS DMS requires several resources: the replication instance and the replication tasks that deliver data to the target. We deployed the replication instance with Terraform, although we could achieve the same result with AWS CloudFormation.
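The original configuration is not reproduced here. As a minimal sketch of what a Multi-AZ replication instance can look like in Terraform, assuming the AWS provider and an existing replication subnet group, the identifier, instance class, and storage size below are placeholders rather than Skello's values.

```hcl
# Illustrative sketch; identifiers and sizes are assumptions.
variable "dms_subnet_group_id" {
  type        = string
  description = "ID of an existing DMS replication subnet group (hypothetical)"
}

variable "dms_security_group_ids" {
  type        = list(string)
  description = "Security groups that allow the instance to reach the source database (hypothetical)"
}

resource "aws_dms_replication_instance" "main" {
  replication_instance_id     = "example-dms-instance" # hypothetical name
  replication_instance_class  = "dms.t3.medium"        # assumed size
  allocated_storage           = 50                     # GiB, assumed
  multi_az                    = true                   # standby in a second Availability Zone
  publicly_accessible         = false                  # keep the instance on private subnets
  replication_subnet_group_id = var.dms_subnet_group_id
  vpc_security_group_ids      = var.dms_security_group_ids
}
```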

In the source endpoint, we specify the name of the source database and its connection information. Our AWS DMS replication instance must also have network access and permission to the source.
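A source endpoint for a PostgreSQL database could be declared along the following lines. This is a sketch under assumptions: the endpoint identifier and all variable names are hypothetical, and in practice the credentials would more likely come from a secrets store than from plain variables.

```hcl
# Illustrative sketch; variable names and the endpoint ID are hypothetical.
variable "source_db_host" { type = string }
variable "source_db_name" { type = string }
variable "source_db_username" { type = string }
variable "source_db_password" {
  type      = string
  sensitive = true # keeps the value out of plan output
}

resource "aws_dms_endpoint" "source" {
  endpoint_id   = "example-postgres-source"
  endpoint_type = "source"
  engine_name   = "postgres"
  server_name   = var.source_db_host
  port          = 5432
  database_name = var.source_db_name
  username      = var.source_db_username
  password      = var.source_db_password
  ssl_mode      = "require" # encrypt the connection between DMS and the database
}
```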

AWS DMS tasks

The migration of data is mainly done through AWS DMS tasks. The migration is divided into three parts:

- Source data migration involves the configuration of the data source to make sure the task connects to the correct source endpoint. This process is important for the success of integration and data transfer.

- We apply the changes made by Terraform (as shown in the preceding code snippet) through the terraform apply command. This command executes the actions proposed in a Terraform plan to create, update, or destroy infrastructure resources.

- We start the replication of data on the target.

Our CDC task connects a source endpoint (the PostgreSQL database) to a target endpoint (a Kinesis data stream) and runs on the replication instance described earlier.

We use a single Kinesis data stream specifically for the Skello app to handle transactions with microservices. AWS DMS is configured with only one replication task that replicates all tables from the Skello app RDS to Kinesis. This approach streamlines the data flow and simplifies the architecture for change data capture (CDC) and real-time data processing.
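A single CDC task that replicates every table can be expressed with one selection rule that uses a wildcard. The following sketch assumes the tables live in the `public` schema; the task identifier and the variable names are hypothetical.

```hcl
# Illustrative sketch; the schema name, task ID, and variables are assumptions.
variable "cdc_replication_instance_arn" { type = string }
variable "cdc_source_endpoint_arn" { type = string }
variable "cdc_target_endpoint_arn" { type = string }

resource "aws_dms_replication_task" "cdc" {
  replication_task_id      = "example-cdc-to-kinesis"
  migration_type           = "cdc" # ongoing changes only, no initial full load
  replication_instance_arn = var.cdc_replication_instance_arn
  source_endpoint_arn      = var.cdc_source_endpoint_arn
  target_endpoint_arn      = var.cdc_target_endpoint_arn

  # One selection rule: include every table of the assumed "public" schema.
  table_mappings = jsonencode({
    rules = [
      {
        "rule-type" = "selection"
        "rule-id"   = "1"
        "rule-name" = "include-all-tables"
        "object-locator" = {
          "schema-name" = "public"
          "table-name"  = "%"
        }
        "rule-action" = "include"
      }
    ]
  })
}
```

Keeping a single wildcard rule means that the table mappings stay short, at the cost of streaming tables that no consumer may need yet.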

We use the Kinesis data stream in on-demand mode, so the shard count is set to 0 in the stream configuration.

On-demand mode manages capacity for us automatically, which makes it suitable for workloads such as ours, where application traffic is variable or unpredictable.
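As a sketch of an on-demand stream in Terraform, the stream mode is selected in a `stream_mode_details` block. The stream name and retention period are assumptions, and this sketch simply leaves out `shard_count`, which the AWS provider only requires in provisioned mode.

```hcl
# Illustrative sketch; the stream name and retention period are assumptions.
resource "aws_kinesis_stream" "cdc" {
  name             = "example-cdc-stream"
  retention_period = 24 # hours

  # No shard_count here: capacity is managed by the service in on-demand mode.
  stream_mode_details {
    stream_mode = "ON_DEMAND"
  }
}
```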

We deployed a dedicated IAM role that AWS DMS uses to work with Kinesis Data Streams.
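Such a role needs a trust policy for the AWS DMS service and write permissions on the stream. The sketch below is an assumption about how this could be written, not Skello's actual policy; the role name follows the `dms_access_for_endpoint` name used in this post, while the policy names and the variable are hypothetical.

```hcl
# Illustrative sketch; policy names and the variable are hypothetical.
variable "role_target_stream_arn" {
  type        = string
  description = "ARN of the Kinesis data stream that DMS writes to"
}

# Trust policy: allow the AWS DMS service to assume the role.
data "aws_iam_policy_document" "dms_assume_role" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["dms.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "dms_access_for_endpoint" {
  name               = "dms-access-for-endpoint"
  assume_role_policy = data.aws_iam_policy_document.dms_assume_role.json
}

# Permissions: describe the stream and put records into it.
data "aws_iam_policy_document" "dms_kinesis_write" {
  statement {
    actions   = ["kinesis:DescribeStream", "kinesis:PutRecord", "kinesis:PutRecords"]
    resources = [var.role_target_stream_arn]
  }
}

resource "aws_iam_role_policy" "dms_kinesis_write" {
  name   = "dms-kinesis-write"
  role   = aws_iam_role.dms_access_for_endpoint.id
  policy = data.aws_iam_policy_document.dms_kinesis_write.json
}
```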

We then created the target endpoint with Terraform, referencing the role we just created, named dms_access_for_endpoint.
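A Kinesis target endpoint takes the stream ARN and the role ARN in a `kinesis_settings` block. The following is a minimal sketch; the endpoint identifier and variable names are hypothetical, and the JSON message format is an assumption.

```hcl
# Illustrative sketch; the endpoint ID, variables, and message format are assumptions.
variable "endpoint_stream_arn" { type = string }
variable "dms_access_for_endpoint_role_arn" { type = string }

resource "aws_dms_endpoint" "kinesis_target" {
  endpoint_id   = "example-kinesis-target"
  endpoint_type = "target"
  engine_name   = "kinesis"

  kinesis_settings {
    stream_arn              = var.endpoint_stream_arn
    service_access_role_arn = var.dms_access_for_endpoint_role_arn
    message_format          = "json" # each change is written as a JSON record
  }
}
```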

We have another process to synchronize the application data with CDC to address internal demands with a data visualization tool. We use the same AWS DMS task and Kinesis data stream as the first CDC task from the monolith. The following diagram illustrates the CDC workflow to migrate the data from the monolith and microservices to the data lake.

This process copies the database of the microservice to the data lake. The data microservice contains two Lambda functions. One function captures the data from the monolith and other microservices. The raw data is pushed to DynamoDB. The second function sends the formatted data to Amazon Data Firehose, which will aggregate and push the data to the data lake in an Amazon Simple Storage Service (Amazon S3) bucket. The replication model is the same for all ingestion. This process makes sure all changes made in the microservices’ databases are replicated in the data lake.
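The delivery step from Amazon Data Firehose to the data lake bucket could be declared as follows. This is only a sketch: the stream name, prefix, compression choice, and variable names are assumptions, and the Lambda functions that feed the delivery stream are not shown.

```hcl
# Illustrative sketch; names, prefix, and compression are assumptions.
variable "data_lake_bucket_arn" { type = string }
variable "firehose_role_arn" {
  type        = string
  description = "Role that Firehose assumes to write to the bucket (hypothetical)"
}

resource "aws_kinesis_firehose_delivery_stream" "data_lake" {
  name        = "example-data-lake-delivery"
  destination = "extended_s3"

  extended_s3_configuration {
    role_arn           = var.firehose_role_arn
    bucket_arn         = var.data_lake_bucket_arn
    prefix             = "cdc/" # hypothetical key prefix in the bucket
    compression_format = "GZIP"
  }
}
```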

Results from this solution

These solutions keep our various databases continuously in sync with the monolith. The CDC approach is used on all our application workloads. When adding new tables, we perform a micro-interruption to automatically update the AWS DMS task through a terraform apply after the Terraform code is updated. AWS DMS keeps a recovery pointer, so replication can resume from where it stopped. We are therefore confident in adding new tables.

Full load

Full loads are used at Skello to backfill data into new services or to copy data to our data lake to meet Skello’s internal needs.

In this case, data ingestion helps product managers understand the data and gives them more key performance indicators (KPIs) on its usage. The ingestion comes from a single AWS DMS task that pushes data to the data lake in Parquet format. The following diagram illustrates this workflow.

This solution can be applied at any time of the day. However, as a precaution, we apply these changes when traffic is at its lowest, such as during lunchtime or at the end of the day.

Full loads put load on the source database in proportion to the size of the table being loaded (some of our tables, such as shifts, can contain millions of rows).
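A full load to the data lake in Parquet format can be sketched with an Amazon S3 target endpoint and a task whose migration type is `full-load`. Everything named below is an assumption for illustration: the identifiers, the variables, the `public` schema, and the `shifts` table name. Setting `start_replication_task` to `false` creates the task without starting it, so it can be started manually at a low-traffic time.

```hcl
# Illustrative sketch; identifiers, schema, and table name are assumptions.
variable "full_load_bucket_name" { type = string }
variable "full_load_s3_role_arn" { type = string }
variable "full_load_instance_arn" { type = string }
variable "full_load_source_endpoint_arn" { type = string }

resource "aws_dms_s3_endpoint" "data_lake" {
  endpoint_id             = "example-data-lake-target"
  endpoint_type           = "target"
  bucket_name             = var.full_load_bucket_name
  service_access_role_arn = var.full_load_s3_role_arn
  data_format             = "parquet"
}

resource "aws_dms_replication_task" "full_load" {
  replication_task_id      = "example-full-load-to-s3"
  migration_type           = "full-load"
  replication_instance_arn = var.full_load_instance_arn
  source_endpoint_arn      = var.full_load_source_endpoint_arn
  target_endpoint_arn      = aws_dms_s3_endpoint.data_lake.endpoint_arn
  start_replication_task   = false # start it manually when traffic is low

  # Load a single large table; the schema and table names are hypothetical.
  table_mappings = jsonencode({
    rules = [
      {
        "rule-type" = "selection"
        "rule-id"   = "1"
        "rule-name" = "include-shifts"
        "object-locator" = {
          "schema-name" = "public"
          "table-name"  = "shifts"
        }
        "rule-action" = "include"
      }
    ]
  })
}
```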

Conclusion

In this post, we demonstrated how we use AWS DMS to synchronize data from our monolithic architecture to microservices and to our data lake. AWS DMS provides a versatile solution that addresses our various data synchronization requirements across different use cases, while enabling a streamlined configuration through infrastructure as code (IaC) with Terraform.

At Skello, we operate within a diverse data ecosystem. We invite you to explore more Skello blog posts about our data ecosystem, including one written by our Lead Data Engineer on data management across multiple AWS accounts.

If you have any questions or suggestions, feel free to share them in the comments section.

About the Authors

Mihenandi-Fuki Wony is Lead Cloud & IT at Skello. He is responsible for optimizing AWS costs and managing the platform’s infrastructure. His main challenges include maintaining a scalable platform on AWS, implementing FinOps practices, and continuously improving infrastructure-as-code for deployments. In addition to his professional activities, Mihenandi-Fuki has been involved in solidarity actions to support families and volunteer work focused on integrating young people into the professional world.


Nicolas de Place is a Startup Solutions Architect who collaborates with emerging companies to develop effective technical solutions. He assists startups in addressing their technological challenges, with a current focus on data and AI/ML strategies. Drawing from his experience in various tech domains, Nicolas helps businesses optimize their data operations and leverage advanced technologies to drive growth and innovation.

