Amazon MemoryDB is a Valkey- and Redis OSS-compatible, durable, in-memory database service that delivers ultra-fast performance. With MemoryDB, data is stored in memory with Multi-AZ durability, which enables you to achieve microsecond read and single-digit millisecond write latency and high throughput. MemoryDB is often used for building durable microservices and latency-sensitive database workloads such as gaming leaderboards, session history, and media streaming.

Modern applications are built as a group of microservices and the latency for one component can impact the performance of the entire system. Monitoring latency is critical for maintaining optimal performance, enhancing user experience, and maintaining system reliability. In this post, we explore ways to monitor latency, detect anomalies, and troubleshoot high-latency issues effectively for your MemoryDB clusters.

Monitoring latency in Amazon MemoryDB

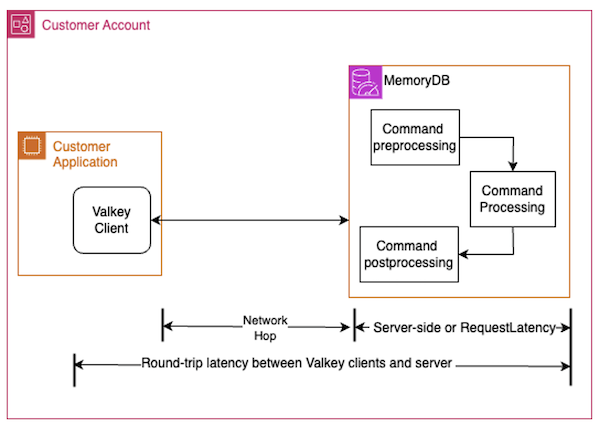

To achieve consistent performance, you should monitor end-to-end latency from the client side by measuring the round-trip latency between Valkey clients and the MemoryDB engine. This helps you identify bottlenecks across the datapath. If you observe high end-to-end latency on your cluster, the latency could be incurred on the server side, on the client side, or in the network between them. The following diagram illustrates the difference between the round-trip latency measured from the client and the server-side latency.
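As a rough illustration of client-side measurement, the following Python sketch times a callable repeatedly and reports percentiles in microseconds. The `fake_ping` function is a hypothetical stand-in that we use so the example runs without a cluster; in practice you would pass a function that issues a real command through your Valkey client.

```python
import statistics
import time
from typing import Callable, Dict


def measure_round_trip_us(send_command: Callable[[], object], samples: int = 100) -> Dict[str, float]:
    """Time `send_command` repeatedly and summarize latency in microseconds."""
    if samples < 2:
        raise ValueError("need at least two samples to compute percentiles")
    timings_us = []
    for _ in range(samples):
        start = time.perf_counter()
        send_command()  # one full request/response round trip
        timings_us.append((time.perf_counter() - start) * 1_000_000)
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 98 is p99.
    cuts = statistics.quantiles(timings_us, n=100, method="inclusive")
    return {"p50": cuts[49], "p99": cuts[98], "max": max(timings_us)}


def fake_ping() -> str:
    # Hypothetical stand-in for a real client call (for example, a PING).
    return "PONG"


summary = measure_round_trip_us(fake_ping, samples=200)
print(summary)
```

Because this timer wraps the whole call, the result includes client processing and network time in addition to the time spent in the engine.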

To observe server-side latency, we introduced the SuccessfulReadRequestLatency and SuccessfulWriteRequestLatency CloudWatch metrics, which measure, in microseconds, the time that the MemoryDB for Valkey engine takes to respond to a successfully executed request. These metrics are available in MemoryDB version 7.2 for Valkey and later, and they are computed and published once per minute for each node. You can aggregate the metric data points over specified periods of time using various Amazon CloudWatch statistics such as Average, Sum, Min, Max, SampleCount, and any percentile between p0 and p100. The sample count includes only the commands that were successfully executed by the MemoryDB server. Additionally, the ErrorCount metric is the aggregated count of commands that failed to execute.
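The following Python sketch shows one way to assemble the parameters for a CloudWatch GetMetricStatistics request for these metrics. It only builds the request dictionary, so it runs without AWS credentials. The `AWS/MemoryDB` namespace and the `ClusterName` and `NodeName` dimension names are our assumptions here, and the cluster and node names are hypothetical; verify all of them against your account before use.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List


def build_latency_query(
    cluster_name: str,
    node_name: str,
    metric_name: str,
    percentiles: List[str],
    end_time: datetime,
    lookback_minutes: int = 60,
) -> Dict[str, Any]:
    """Build keyword arguments for a CloudWatch GetMetricStatistics call."""
    return {
        "Namespace": "AWS/MemoryDB",  # assumed namespace
        "MetricName": metric_name,
        "Dimensions": [  # assumed dimension names
            {"Name": "ClusterName", "Value": cluster_name},
            {"Name": "NodeName", "Value": node_name},
        ],
        "StartTime": end_time - timedelta(minutes=lookback_minutes),
        "EndTime": end_time,
        "Period": 60,  # the metrics are published once per minute
        # GetMetricStatistics accepts Statistics or ExtendedStatistics, not both.
        "ExtendedStatistics": percentiles,
    }


params = build_latency_query(
    cluster_name="my-cluster",          # hypothetical
    node_name="my-cluster-0001-001",    # hypothetical
    metric_name="SuccessfulReadRequestLatency",
    percentiles=["p50", "p99"],
    end_time=datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc),
)
print(params)
# With boto3 installed and credentials configured, you could then call:
#   boto3.client("cloudwatch").get_metric_statistics(**params)
```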

The SuccessfulReadRequestLatency and SuccessfulWriteRequestLatency metrics measure latency across the stages of command processing within the MemoryDB for Valkey engine: preprocessing, command execution, and postprocessing. The MemoryDB engine uses enhanced I/O threads to process network I/O from concurrent client connections. The commands are then queued to be executed sequentially by the main thread and persisted into a Multi-AZ transactional log. Responses are sent back to the clients through I/O write threads, as illustrated in the following diagram. The request latency metrics capture the time taken to process the request completely through these stages.

These metrics are useful when troubleshooting performance issues with your application using a MemoryDB cluster. In this section, we present some practical examples of how to put these metrics to use.

Let’s say you observe high write latency while your application writes data to a MemoryDB cluster. We recommend inspecting the SuccessfulWriteRequestLatency metric, which provides the time taken to process all successfully executed write requests in 1-minute intervals. If you observe elevated latency on the client side but no corresponding increase in the SuccessfulWriteRequestLatency metric, the MemoryDB engine is unlikely to be the primary cause of the high latency. In this scenario, inspect client-side resources such as memory, CPU, and network utilization to diagnose the cause. If the value of the SuccessfulWriteRequestLatency metric has increased, we recommend following the steps in the next section to troubleshoot the issue.
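The comparison described above can be sketched as a small Python function. The baselines and the `tolerance` factor are hypothetical values that you would derive from your own workload; this is an illustration of the reasoning, not a built-in MemoryDB feature.

```python
def locate_latency_source(
    client_us: float,
    client_baseline_us: float,
    server_us: float,
    server_baseline_us: float,
    tolerance: float = 1.5,
) -> str:
    """Classify where elevated latency most likely originates.

    A value counts as elevated when it exceeds its baseline by more
    than the (hypothetical) tolerance factor.
    """
    client_elevated = client_us > client_baseline_us * tolerance
    server_elevated = server_us > server_baseline_us * tolerance
    if server_elevated:
        # The engine-side metric rose: continue with server-side troubleshooting.
        return "server-side"
    if client_elevated:
        # Only the round trip rose: inspect client resources and the network.
        return "client-or-network"
    return "normal"


# Client round trip tripled while the server-side metric barely moved.
print(locate_latency_source(9000, 3000, 2800, 2700))  # client-or-network
# Both the round trip and the server-side metric rose.
print(locate_latency_source(9000, 3000, 8000, 2700))  # server-side
```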

For most use cases, we recommend that you monitor the p50 statistic of the SuccessfulReadRequestLatency and SuccessfulWriteRequestLatency metrics. If your application is sensitive to tail latencies, we recommend that you monitor the p99 or p99.99 statistic.
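If you want to be notified when tail latency rises, one option is a CloudWatch alarm on a percentile statistic. The following Python sketch builds the parameters for a PutMetricAlarm request without calling AWS. The alarm name, threshold, namespace, dimension names, and cluster and node names are all assumptions for illustration; choose a threshold based on your own baseline.

```python
from typing import Any, Dict


def build_p99_alarm(
    cluster_name: str,
    node_name: str,
    metric_name: str,
    threshold_us: float,
    evaluation_periods: int = 5,
) -> Dict[str, Any]:
    """Build keyword arguments for a CloudWatch PutMetricAlarm call on p99."""
    return {
        "AlarmName": f"{node_name}-{metric_name}-p99",  # hypothetical naming scheme
        "Namespace": "AWS/MemoryDB",  # assumed namespace
        "MetricName": metric_name,
        "Dimensions": [  # assumed dimension names
            {"Name": "ClusterName", "Value": cluster_name},
            {"Name": "NodeName", "Value": node_name},
        ],
        "ExtendedStatistic": "p99",  # percentile statistics use ExtendedStatistic
        "Period": 60,
        "EvaluationPeriods": evaluation_periods,
        "Threshold": threshold_us,  # the metric is reported in microseconds
        "ComparisonOperator": "GreaterThanThreshold",
    }


alarm = build_p99_alarm(
    cluster_name="my-cluster",        # hypothetical
    node_name="my-cluster-0001-001",  # hypothetical
    metric_name="SuccessfulWriteRequestLatency",
    threshold_us=10_000,              # hypothetical: 10 ms
)
print(alarm)
# With boto3 installed and credentials configured, you could then call:
#   boto3.client("cloudwatch").put_metric_alarm(**alarm)
```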

The following screenshot shows an example of the SuccessfulReadRequestLatency metric in microseconds for a MemoryDB for Valkey cluster, indicating the p50 read latency during 1-minute intervals.

Troubleshooting high latency in MemoryDB

To troubleshoot high read/write latencies in your MemoryDB for Valkey clusters, inspect the following aspects of your cluster.

Long-running commands

Open-source Valkey and MemoryDB engines run commands in a single thread. If your application runs expensive commands such as HGETALL or SUNION on large data structures, slow execution of these commands can force subsequent requests from other clients to wait, which increases application latency. If you have latency concerns, we recommend either not using slow commands against large data structures, or running all your slow queries on a replica node.

You can use the Valkey SLOWLOG to help determine which commands took longer to complete.

The Valkey SLOWLOG contains details on the queries that exceed a specified runtime, and this runtime includes only the command processing time.
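To see which commands dominate the slow log, you can group its entries by command name. The following Python sketch assumes that each entry is a dictionary with a `command` string (or bytes) and a `duration` in microseconds, which is similar to what some Python clients return from their slow log helpers; adjust the field names to match your client. The sample entries are made up for illustration.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Tuple


def summarize_slowlog(entries: Iterable[Dict[str, Any]]) -> List[Tuple[str, Dict[str, int]]]:
    """Group slow log entries by command name, sorted by total duration."""
    totals: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"count": 0, "total_us": 0, "max_us": 0}
    )
    for entry in entries:
        command = entry["command"]
        if isinstance(command, bytes):
            command = command.decode("utf-8", errors="replace")
        parts = command.split()
        name = parts[0].upper() if parts else "UNKNOWN"
        stats = totals[name]
        stats["count"] += 1
        stats["total_us"] += entry["duration"]
        stats["max_us"] = max(stats["max_us"], entry["duration"])
    # Commands with the largest cumulative execution time come first.
    return sorted(totals.items(), key=lambda kv: kv[1]["total_us"], reverse=True)


# Made-up entries in the assumed shape.
sample_entries = [
    {"command": "HGETALL big:hash", "duration": 15000},
    {"command": b"SUNION set:a set:b", "duration": 22000},
    {"command": "hgetall other:hash", "duration": 9000},
]
slow_summary = summarize_slowlog(sample_entries)
for name, stats in slow_summary:
    print(name, stats)
```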

Queueing time

MemoryDB actively manages customer traffic to maintain optimal performance and replication reliability. When more commands are sent to a node than the MemoryDB engine can process, traffic is throttled and requests are queued. The time that a request spends in the queue is also captured in the request latency metrics. If the request latency metrics remain high for an extended period of time, we recommend evaluating whether you need to scale your cluster. At times, a temporary burst in traffic can also cause high queueing time, resulting in high tail latency.

Data persistence time

MemoryDB uses a distributed transactional log to provide Multi-AZ durability, consistency, and recoverability. Every write to MemoryDB is acknowledged only after the mutative command has been successfully executed by the Valkey engine and persisted into the transactional log. This additional time taken for persistence typically makes SuccessfulWriteRequestLatency higher than SuccessfulReadRequestLatency. If write latencies are consistently higher than single-digit milliseconds, we recommend that you scale out by adding more shards. Each shard has its own dedicated transactional log, and adding more shards increases the overall log capacity of the cluster.

Memory utilization

A MemoryDB node under memory pressure might use more memory than the available instance memory. In this situation, Valkey swaps data from memory to disk to free up space for incoming write operations. To determine whether a node is under pressure, review whether the FreeableMemory metric is low or the SwapUsage metric is greater than FreeableMemory. High swap activity on a node results in high request latency. If a node is swapping because of memory pressure, scale up to a larger node type or scale out by adding shards. You should also make sure that sufficient memory is available on your cluster to accommodate traffic bursts.
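The check described above can be expressed as a short Python function. The 100 MiB value used for "low" FreeableMemory is a hypothetical threshold that we chose for illustration; pick one that suits your node type and workload.

```python
def is_under_memory_pressure(
    freeable_memory_bytes: int,
    swap_usage_bytes: int,
    low_memory_threshold_bytes: int = 100 * 1024 * 1024,  # hypothetical: 100 MiB
) -> bool:
    """Return True if FreeableMemory is low or SwapUsage exceeds FreeableMemory."""
    freeable_is_low = freeable_memory_bytes < low_memory_threshold_bytes
    swap_exceeds_freeable = swap_usage_bytes > freeable_memory_bytes
    return freeable_is_low or swap_exceeds_freeable


GIB = 1024 ** 3
MIB = 1024 ** 2

print(is_under_memory_pressure(4 * GIB, 10 * MIB))   # False: plenty of free memory
print(is_under_memory_pressure(50 * MIB, 10 * MIB))  # True: FreeableMemory is low
print(is_under_memory_pressure(1 * GIB, 2 * GIB))    # True: SwapUsage > FreeableMemory
```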

Data tiering

Amazon MemoryDB provides data tiering as a price-performance option for Valkey workloads where data is tiered between memory and local SSD. Data tiering is ideal for workloads that access up to 20% of their overall dataset regularly and for applications that can tolerate additional latency when accessing data on SSD.

The BytesReadFromDisk and BytesWrittenToDisk metrics indicate how much data is being read from and written to the SSD tier. You can use them in conjunction with the SuccessfulWriteRequestLatency and SuccessfulReadRequestLatency metrics to determine the throughput associated with the tiered operations. For instance, if the values of the SuccessfulReadRequestLatency and BytesReadFromDisk metrics are both high, it might indicate that the SSD is being accessed more frequently relative to memory. In that case, you can scale up to a larger node type or scale out by adding shards so that more RAM is available to serve your active dataset.
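As a simple illustration of reading the two metrics together, the following Python sketch flags the 1-minute intervals in which both read latency and SSD reads exceed a threshold. It assumes you have already retrieved two aligned series of data points; the thresholds and sample values are hypothetical.

```python
from typing import List, Sequence


def find_disk_bound_minutes(
    read_latency_us: Sequence[float],
    bytes_read_from_disk: Sequence[float],
    latency_threshold_us: float,
    disk_bytes_threshold: float,
) -> List[int]:
    """Return indexes of intervals where latency and SSD reads are both high."""
    if len(read_latency_us) != len(bytes_read_from_disk):
        raise ValueError("both series must cover the same intervals")
    return [
        index
        for index, (latency, disk_bytes) in enumerate(
            zip(read_latency_us, bytes_read_from_disk)
        )
        if latency > latency_threshold_us and disk_bytes > disk_bytes_threshold
    ]


# Made-up per-minute data points for one node.
latency_series = [400, 2500, 450, 3100]
disk_series = [1_000, 9_000_000, 8_000_000, 12_000_000]

flagged = find_disk_bound_minutes(
    latency_series,
    disk_series,
    latency_threshold_us=2000,       # hypothetical
    disk_bytes_threshold=5_000_000,  # hypothetical
)
print(flagged)  # [1, 3]
```

Interval 2 is not flagged because SSD reads were high but latency stayed low, so only intervals where both signals rise are reported.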

Horizontal scaling for Valkey

By using online horizontal scaling for MemoryDB, you can scale your clusters dynamically with no downtime. This means that your cluster can continue to serve requests even while scaling or rebalancing is in progress. If your cluster is nearing its capacity, client write requests are throttled to allow scaling operations to proceed, which increases the request processing time. This latency is also reflected in the new metrics. We recommend following the best practices for online cluster resizing, such as initiating resharding during off-peak hours and avoiding expensive commands during scaling.

Elevated number of client connections

A MemoryDB node can support up to 65,000 client connections. A large number of concurrent connections can significantly increase the CPU usage, resulting in high application latency. To reduce this overhead, we recommend following best practices such as using connection pools, or reusing your existing Valkey connections.
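To illustrate the idea behind connection pooling, the following Python sketch implements a minimal, generic pool that caps the number of connections and reuses idle ones. It is a conceptual example only: many Valkey client libraries ship their own pooling, which you should prefer in practice. The `fake_connect` factory is a hypothetical stand-in for opening a real connection.

```python
import queue
from contextlib import contextmanager
from typing import Any, Callable, Iterator, List


class ConnectionPool:
    """Minimal pool that creates connections lazily, up to a fixed cap."""

    def __init__(self, connect: Callable[[], Any], max_connections: int) -> None:
        self._connect = connect
        self._idle: "queue.LifoQueue[Any]" = queue.LifoQueue(maxsize=max_connections)
        # Each None is an unused slot; a real connection replaces it on first use.
        for _ in range(max_connections):
            self._idle.put(None)

    @contextmanager
    def connection(self, timeout: float = 1.0) -> Iterator[Any]:
        # Blocks (up to `timeout` seconds) when every connection is in use.
        conn = self._idle.get(timeout=timeout)
        try:
            if conn is None:
                conn = self._connect()
            yield conn
        finally:
            # Return the connection (or the empty slot) for the next caller.
            self._idle.put(conn)


created: List[object] = []


def fake_connect() -> object:
    # Hypothetical stand-in for opening a connection to the cluster.
    conn = object()
    created.append(conn)
    return conn


pool = ConnectionPool(fake_connect, max_connections=2)
for _ in range(5):
    with pool.connection() as conn:
        pass  # issue commands on `conn` here

print(len(created))  # 1: sequential callers reuse the same connection
```

A production pool would also need to detect and replace broken connections, which this sketch omits.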

Conclusion

Measuring latency for a MemoryDB for Valkey instance can be approached in various ways depending on the level of granularity required. Monitoring end-to-end latency from the client side helps with identifying issues across the datapath, while the request latency metrics capture the time taken across the stages of command processing, including command preprocessing, command execution, and command postprocessing.

The new request latency metrics provide a more precise measure of the time the MemoryDB for Valkey engine takes to respond to a request. In this post, we discussed a few scenarios where these latency metrics could help in troubleshooting latency spikes in your MemoryDB cluster. Using the details in this post, you can detect, diagnose, and maintain healthy MemoryDB for Valkey clusters. Learn more about the metrics discussed in this post in our documentation.

About the author

Yasha Jayaprakash is a Software Development Manager at AWS with a strong background in leading development teams to deliver high-quality, scalable software solutions. Focused on aligning technical strategies with innovation, she is dedicated to delivering impactful and customer-centric solutions.
