This post is co-written by Nicholas Dentandt, Senior Software Engineer and Team Lead at Orca Security.

Orca Security, an AWS Partner, is an independent cybersecurity software provider whose patented agentless-first cloud security platform is trusted by hundreds of enterprises globally. Orca makes cloud security simple for enterprises moving to and scaling with AWS with its patented SideScanning technology and Unified Data Model.

At Orca Security, we use a variety of metrics to assess the significance of security alerts on cloud assets. For example, a vulnerability on an asset that isn’t exposed to the internet might pose minimal risk, but the same vulnerability on a publicly accessible machine could demand immediate attention. Our Amazon Neptune database plays a critical role in calculating the exposure of individual assets within a customer’s cloud environment. By building a graph that maps assets and their connectivity to one another and to the broader internet, the Orca Cloud Security Platform can evaluate both how an asset is exposed and how an attacker could potentially move laterally within an account.

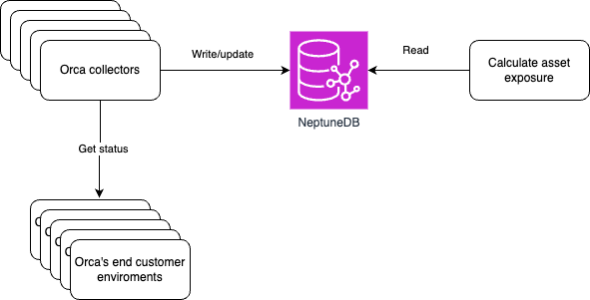

To better understand how our system works and where Amazon Neptune fits in, let’s take a look at our overall architecture:

As shown in the diagram, our system consists of several key components. Orca collectors query the APIs that cloud providers make available to gather data from our customers’ cloud environments, and then write and update that information in our Amazon Neptune database. We process the collected data to produce a graph of connectivity between different types of assets and the internet, and we store these complex relationships between cloud assets in Amazon Neptune. The Orca findings component reads this data from Amazon Neptune to generate security insights, which are then presented to our customers in an easy-to-understand format through Orca Dashboards. The data in the graph is used to generate an exposure value for each asset, which is then combined with security findings to better highlight the urgency and importance of a security alert.

This architecture allows us to efficiently collect, process, and analyze vast amounts of data from diverse cloud environments. Amazon Neptune is central to it, because storing and querying the complex relationships between cloud assets is key to our security analysis. In the following sections, we explore how we’ve optimized our use of Amazon Neptune to handle the scale and complexity of this data effectively.

The topology of our customers’ cloud environments varies greatly, so we had to implement several techniques to get the best performance out of Amazon Neptune. These optimizations are crucial both during the graph-building process and when running customer queries through our API, particularly shortest path queries between two nodes.

Batching and caching: Minimizing overhead and speeding up inserts

Before diving into specific optimizations, it’s crucial to emphasize the importance of data modeling in graph databases. A well-designed data model is the foundation for efficient queries and scalable applications. Poor data modeling can lead to complex, slow-performing queries, regardless of other optimizations.

One impactful performance optimization we’ve implemented is the use of batching and caching mechanisms during data insert operations. Amazon Neptune, like most databases, performs best when handling large operations in bulk rather than processing many small, individual insertions.

Batching layer

We implemented this optimization by designing a batching layer that groups inserts into bulk operations. Our solution uses the Gremlin query language, which is well suited to graph database operations. Rather than inserting each node or edge immediately, the layer buffers records and writes them once a target batch size is reached. This bulk insert approach significantly reduces the communication overhead between our application and the Amazon Neptune database, leading to a marked improvement in performance. In these bulk operations, we insert data programmatically with Gremlin steps such as g.addV() for vertices and g.addE() for edges. This gives us fine-grained control over the insertion process while still benefiting from the performance gains of batching.

One challenge we faced with this approach was maintaining the integrity of edges in the graph. Because nodes must exist before edges can connect them, whenever the edge batch reaches its target size, our logic first inserts any pending nodes. By guaranteeing that nodes are present in the database before edges are created, we maintain data consistency while still reaping the benefits of batching.
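The following is a minimal Python sketch of such a batching layer, written for illustration rather than taken from Orca’s code. It buffers nodes and edges separately and, before writing an edge batch, flushes any pending nodes so that every edge endpoint already exists. The send_nodes and send_edges callbacks are hypothetical stand-ins for the code that would submit one Gremlin request per batch (for example, a single traversal chaining several g.addV() or g.addE() steps); here they only record what would be sent.

```python
from typing import Callable, Dict, List

Record = Dict[str, str]
Sender = Callable[[List[Record]], None]


class GraphBatchWriter:
    """Buffers node and edge inserts and writes them in bulk.

    send_nodes / send_edges are hypothetical callbacks: in a real client each
    call would submit one Gremlin request to Neptune for the whole batch.
    """

    def __init__(self, batch_size: int, send_nodes: Sender, send_edges: Sender) -> None:
        self.batch_size = batch_size
        self.send_nodes = send_nodes
        self.send_edges = send_edges
        self.pending_nodes: List[Record] = []
        self.pending_edges: List[Record] = []

    def add_node(self, node: Record) -> None:
        self.pending_nodes.append(node)
        if len(self.pending_nodes) >= self.batch_size:
            self.flush_nodes()

    def add_edge(self, edge: Record) -> None:
        self.pending_edges.append(edge)
        if len(self.pending_edges) >= self.batch_size:
            self.flush_edges()

    def flush_nodes(self) -> None:
        if self.pending_nodes:
            self.send_nodes(self.pending_nodes)
            self.pending_nodes = []

    def flush_edges(self) -> None:
        # Write any buffered nodes first so that every edge endpoint exists
        # in the database before the edges referencing it are created.
        self.flush_nodes()
        if self.pending_edges:
            self.send_edges(self.pending_edges)
            self.pending_edges = []

    def close(self) -> None:
        # Final flush at the end of a graph build (nodes, then edges).
        self.flush_edges()


calls = []
writer = GraphBatchWriter(
    batch_size=2,
    send_nodes=lambda batch: calls.append(("nodes", [n["id"] for n in batch])),
    send_edges=lambda batch: calls.append(("edges", [(e["from"], e["to"]) for e in batch])),
)
writer.add_node({"id": "vpc-1"})
writer.add_node({"id": "i-1"})                   # node batch full: written
writer.add_node({"id": "i-2"})                   # buffered
writer.add_edge({"from": "vpc-1", "to": "i-1"})
writer.add_edge({"from": "vpc-1", "to": "i-2"})  # edge batch full: i-2 is written first
writer.close()
print(calls)
```

Keeping nodes and edges in separate buffers means each request carries only one kind of Gremlin step, while the flush ordering preserves edge integrity without waiting for the whole graph to be buffered.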

Multi-tenancy: Partition strategy optimization

In our multi-tenant environment, we use Gremlin’s PartitionStrategy, which Amazon Neptune supports, to segregate customer data. The strategy tags each node and edge with a property identifying its “partition” and restricts reads to the configured partitions. While the implementation is relatively straightforward, we discovered some valuable optimizations along the way. For readers interested in implementing multi-tenant graphs with Amazon Neptune, we recommend reviewing the AWS Prescriptive Guidance document Multi-tenancy guidance for ISVs running Amazon Neptune databases.

A key learning emerged when we implemented custom pagination across partitioned data. Initially, we used simple numeric page attributes (such as “1”, “2”, “3”) across all partitions. However, we found that querying these common values across multiple partitions led to significant performance issues, as the same value existed in multiple partitions.

To illustrate this, imagine we have three partitions: “CustomerA”, “CustomerB”, and “CustomerC”. With our initial approach, each partition would have pages numbered “1”, “2”, “3”, and so on. When querying for page “1” across all partitions, the database would need to search through every partition, potentially leading to performance bottlenecks.

To address this, we modified our approach by prepending the partition key to these values. For example:

- CustomerA’s pages: “CustomerA-1”, “CustomerA-2”, “CustomerA-3”

- CustomerB’s pages: “CustomerB-1”, “CustomerB-2”, “CustomerB-3”

- CustomerC’s pages: “CustomerC-1”, “CustomerC-2”, “CustomerC-3”

This simple change ensured value uniqueness across partitions. Now, when querying for a specific page, like “CustomerB-2”, the database can quickly locate the exact partition and page, resulting in substantial performance improvements for our cross-partition queries. This optimization demonstrates how even small adjustments to data modeling in a partitioned environment can have significant performance implications.
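To see why prefixing helps, here is a toy Python model. It is an assumption for illustration, not a description of Neptune’s internal indexes: a lookup by page value returns every vertex holding that value, and the partition filter that PartitionStrategy adds is applied afterwards. The _partition property name and the ToyValueIndex class are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Vertex = Dict[str, str]


def page_key(partition: str, page: int) -> str:
    """Prefix the page number with the partition key, e.g. 'CustomerB-2'."""
    return f"{partition}-{page}"


class ToyValueIndex:
    """Toy stand-in for a property-value index (NOT Neptune's real storage layout).

    A lookup returns every vertex holding the value; the partition filter is
    applied afterwards, so we also report how many candidates were examined.
    """

    def __init__(self) -> None:
        self.by_value: Dict[str, List[Vertex]] = defaultdict(list)

    def add(self, vertex: Vertex) -> None:
        self.by_value[vertex["page"]].append(vertex)

    def find_page(self, partition: str, page_value: str) -> Tuple[List[Vertex], int]:
        candidates = self.by_value.get(page_value, [])
        matches = [v for v in candidates if v["_partition"] == partition]
        return matches, len(candidates)


partitions = ["CustomerA", "CustomerB", "CustomerC"]
plain, prefixed = ToyValueIndex(), ToyValueIndex()
for p in partitions:
    for n in (1, 2, 3):
        # "_partition" is a hypothetical partition key property name.
        plain.add({"_partition": p, "page": str(n)})
        prefixed.add({"_partition": p, "page": page_key(p, n)})

print(plain.find_page("CustomerB", "2")[1])                          # 3 candidates
print(prefixed.find_page("CustomerB", page_key("CustomerB", 2))[1])  # 1 candidate
```

With bare numbers, every lookup touches one candidate per tenant, so the cost grows with the number of partitions; with prefixed values, the lookup lands on exactly one vertex.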

Caching layer

In addition to batching, we introduced an in-memory caching layer to further improve performance during the graph building process. After nodes are inserted into Amazon Neptune, they’re stored in a least recently used (LRU) cache along with their IDs. This caching layer isn’t a separate service but rather an in-memory data structure that exists only for the duration of the graph building process for a single customer’s cloud environment.

By caching recently inserted nodes and their IDs, we can skip querying Amazon Neptune for them while building the graph, avoiding costly database read operations. Retrieving nodes directly from the in-memory cache lets us resolve these lookups almost instantaneously. This optimization is particularly useful during the graph building process, where large numbers of related nodes are inserted or looked up in rapid succession.

The caching layer implementation works as follows: any time a new node is added to Amazon Neptune, the node itself and its ID are also added to the LRU cache. When building the graph, the process first checks the cache for the required nodes. If the nodes are found in the cache, they are retrieved directly from memory, avoiding the need for a database read operation. If the nodes aren’t in the cache, they’re fetched from Amazon Neptune and added to the cache for subsequent lookups.

By using an in-memory LRU cache, we can take advantage of temporal locality and serve node data from a faster memory cache, significantly reducing the overhead of repeated database reads during the graph building process for each customer’s cloud environment.
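A minimal Python sketch of this get-or-fetch pattern, built on collections.OrderedDict, follows. It maps a node’s natural key to its vertex ID (the full node could be stored as the value instead). The fetch callback is a hypothetical stand-in for the Neptune read performed on a cache miss, and the capacity of 2 only serves to make eviction visible.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class NodeIdCache:
    """In-memory LRU cache from a node's natural key to its Neptune vertex ID.

    Lives only for one customer's graph build; `fetch` is a hypothetical
    callback that reads the vertex ID from Neptune on a cache miss.
    """

    def __init__(self, capacity: int, fetch: Callable[[Hashable], str]) -> None:
        self.capacity = capacity
        self.fetch = fetch
        self.entries: "OrderedDict[Hashable, str]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def put(self, key: Hashable, vertex_id: str) -> None:
        """Record a node right after it has been inserted into Neptune."""
        self.entries[key] = vertex_id
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry

    def get(self, key: Hashable) -> str:
        """Return the vertex ID from memory, falling back to a database read."""
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        self.misses += 1
        vertex_id = self.fetch(key)
        self.put(key, vertex_id)
        return vertex_id


db_reads = []


def fetch_from_neptune(key: Hashable) -> str:
    db_reads.append(key)  # stand-in for a real Neptune lookup
    return f"v-{key}"


cache = NodeIdCache(capacity=2, fetch=fetch_from_neptune)
cache.put("i-1", "v-i-1")
cache.put("i-2", "v-i-2")
cache.get("i-1")           # hit: served from memory
cache.put("i-3", "v-i-3")  # evicts "i-2", the least recently used entry
cache.get("i-2")           # miss: one database read, then cached again
print(cache.hits, cache.misses, db_reads)
```

Because the cache is scoped to a single graph build, it never has to be invalidated by writes from other processes; it is simply discarded when the build finishes.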

Concurrent in/out queries: Reducing query response time

Another critical optimization we implemented was the use of concurrent inbound and outbound queries for our API path queries. This technique addresses a key challenge in our system: efficiently traversing graphs with widely varying structures across different customers. By running queries in both directions simultaneously, we can adapt to each customer’s unique graph topology and significantly reduce query response times.

Parallel query execution

In a typical API path query, the application needs to explore the graph by traversing nodes and edges in a particular direction. However, we observed that customers often have widely different graph configurations—some with highly interconnected internal structures and others with numerous external inbound paths. The traversal method that works best for one structure may be suboptimal for another.

To illustrate this, consider the following diagram:

We use a breadth-first search (BFS) algorithm for our graph traversal. If we were looking for paths between Ext Network 2 and EC2 Instance 1, starting the search from Ext Network 2 would require exploring all the additional branches in the dense internal network before arriving at the result. However, if we start our query at EC2 Instance 1 and follow its inbound edges, the graph is far less dense, so we only need to explore a single path to find the valid connection.

The performance improvements are even more significant for queries that do not have a path. Imagine searching through a very dense graph only to discover there are no paths at all, versus querying from the target node and immediately finding it has no inbound edges.
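The effect of direction can be reproduced with a small Python BFS on a made-up graph loosely shaped like the scenario above: Ext Network 2 reaches a Gateway that fans out to 20 interconnected hosts, and only host-19 connects to EC2 Instance 1. All node names other than Ext Network 2 and EC2 Instance 1 are hypothetical, and the count of expanded nodes is only a rough proxy for traversal cost. EC2 Instance 2 has no inbound edges, which illustrates the no-path case.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[str]]


def reverse(graph: Graph) -> Graph:
    """Build the inbound-edge view so BFS can walk edges backward."""
    rev: Graph = {}
    for src, dsts in graph.items():
        rev.setdefault(src, [])
        for dst in dsts:
            rev.setdefault(dst, []).append(src)
    return rev


def bfs_path(graph: Graph, start: str, goal: str) -> Tuple[Optional[List[str]], int]:
    """Shortest path by BFS, plus the number of nodes expanded to get there."""
    parents: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    expanded = 0
    while queue:
        node = queue.popleft()
        expanded += 1
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1], expanded
        for nxt in graph.get(node, []):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None, expanded


# Hypothetical topology: a dense internal network behind Ext Network 2.
graph: Graph = {"Ext Network 2": ["Gateway"], "Gateway": [f"host-{i}" for i in range(20)]}
for i in range(20):
    graph[f"host-{i}"] = [f"host-{(i + 1) % 20}"]
graph["host-19"].append("EC2 Instance 1")
graph["EC2 Instance 1"] = []
graph["EC2 Instance 2"] = []  # no inbound edges at all

print(bfs_path(graph, "Ext Network 2", "EC2 Instance 1")[1])           # 23 expanded
print(bfs_path(reverse(graph), "EC2 Instance 1", "Ext Network 2")[1])  # 5 expanded
print(bfs_path(graph, "Ext Network 2", "EC2 Instance 2"))              # (None, 23)
print(bfs_path(reverse(graph), "EC2 Instance 2", "Ext Network 2"))     # (None, 1)
```

Both directions find the same path, but the inbound search expands far fewer nodes here, and for the unreachable target it stops after a single node.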

To overcome this variability, we run the query in both directions (outbound from the source and inbound from the target) concurrently and return the result from whichever query finishes first. This way, each query’s response time tracks the faster of the two directions, regardless of the specific graph structure involved. By using parallelism, we’re able to drastically reduce the query response time for our API endpoints, making the system more efficient and responsive across diverse graph topologies.
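A minimal Python sketch of this race follows, using concurrent.futures: both directions are submitted to a thread pool, the first one to complete wins, and a shared threading.Event asks the slower search to stop. In a real deployment, each direction would be a Gremlin query sent to Neptune, which is I/O-bound and therefore a good fit for threads; the local BFS here is only a stand-in, and find_path_race is a hypothetical name.

```python
import threading
from collections import deque
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from typing import Dict, List, Optional

Graph = Dict[str, List[str]]


def bfs(graph: Graph, start: str, goal: str, stop: threading.Event) -> Optional[List[str]]:
    """BFS from start to goal that gives up early once `stop` is set."""
    parents: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue and not stop.is_set():
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def find_path_race(out_edges: Graph, in_edges: Graph,
                   source: str, target: str) -> Optional[List[str]]:
    """Run outbound and inbound searches concurrently; keep the first result."""
    stop = threading.Event()
    with ThreadPoolExecutor(max_workers=2) as pool:
        outbound = pool.submit(bfs, out_edges, source, target, stop)
        inbound = pool.submit(bfs, in_edges, target, source, stop)
        done, _ = wait([outbound, inbound], return_when=FIRST_COMPLETED)
        winner = done.pop()
        stop.set()  # the winner already finished; ask the other search to stop
        path = winner.result()
    if winner is inbound and path is not None:
        path = path[::-1]  # the inbound search walks target -> source
    return path


out_edges: Graph = {"a": ["b"], "b": ["c"], "c": [], "d": []}
in_edges: Graph = {"a": [], "b": ["a"], "c": ["b"], "d": []}
print(find_path_race(out_edges, in_edges, "a", "c"))  # ['a', 'b', 'c']
print(find_path_race(out_edges, in_edges, "a", "d"))  # None
```

The stop event is set only after the winner has returned, so a None from the winner always reflects a fully explored search and can be trusted as "no path", while the loser’s early-exit result is discarded.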

Data modeling: Minimizing nodes and edges for optimal performance

Efficient data modeling plays a crucial role in maximizing the performance of a graph database. It’s important to understand that if you get the data model wrong, it can be extremely challenging to write efficient queries, regardless of other optimizations. Performance in graph databases is a product of both the data model and well-written queries.

For example, consider a scenario where you’re modeling a social network. A naive approach might create a direct connection between every user and all of their friends. While this seems intuitive, it can lead to performance issues as the number of users and connections grows. A more efficient model might use intermediate nodes to represent friend groups or interests, reducing the total number of edges and making queries more manageable.
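As a rough illustration of the edge savings, assume a hypothetical group of n users who are all friends with one another, with each friendship stored as a single undirected edge. Connecting everyone directly requires one edge per pair, while an intermediate group node needs only one membership edge per user:

```latex
\begin{aligned}
E_{\text{direct}} &= \binom{n}{2} = \frac{n(n-1)}{2} && \text{(one edge per pair of friends)}\\
E_{\text{group}}  &= n && \text{(one membership edge per user, plus one group node)}\\
n = 100 &\implies E_{\text{direct}} = 4950,\quad E_{\text{group}} = 100
\end{aligned}
```

The gap grows quadratically with the size of the group.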

Graph models with excessive numbers of nodes and edges can quickly lead to performance bottlenecks during queries, especially when traversing complex relationships. Our team focused on creating a streamlined data model that reduces unnecessary complexity while maintaining accuracy in representing relationships. This approach not only improves query performance but also enhances the scalability and maintainability of the entire system.

Case study: Load balancer modeling

A prime example of this optimization is how we model load balancers in our graph. Initially, we faced the challenge of representing load balancers and their connections to a potentially large number of targets. A naive approach would be to directly connect every load balancer node to every target, resulting in an explosion of edges.

Instead, we opted for a more efficient data model that better represents the underlying assets. For instance, when two load balancers reference the same auto scaling group in their target groups, rather than duplicating the connections, we model the auto scaling group as its own node. The load balancers’ backend nodes then connect directly to the auto scaling group, allowing us to reuse the existing relationships between the auto scaling group and the instances it manages. This also gives users a clearer view of how their assets are exposed.

Although this approach involves creating additional nodes for each load balancer, it dramatically reduces the number of edges within the graph. By keeping query paths streamlined and more efficient, we achieve a graph model that remains lightweight, scalable, and significantly less costly in terms of query execution. This balance between node creation and edge reduction makes sure our Amazon Neptune database performs optimally, even with highly complex cloud architectures.
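The trade-off can be quantified with a short Python sketch. It assumes, for illustration only, that each load balancer gets one additional backend node, that all load balancers target a single shared auto scaling group, and that the naive model wires every load balancer directly to every instance; the counts in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GraphSize:
    nodes: int
    edges: int


def naive_model(lbs: int, instances: int) -> GraphSize:
    # Load balancer and instance nodes; every load balancer wired to every instance.
    return GraphSize(nodes=lbs + instances, edges=lbs * instances)


def split_model(lbs: int, instances: int) -> GraphSize:
    # Adds one backend node per load balancer plus one shared auto scaling group node.
    # Edges: LB -> backend and backend -> ASG (per LB), ASG -> instance (once each).
    return GraphSize(nodes=2 * lbs + 1 + instances, edges=2 * lbs + instances)


print(naive_model(2, 50), split_model(2, 50))
print(naive_model(10, 200).edges, split_model(10, 200).edges)
```

With two load balancers and 50 instances, the split model adds three nodes (two backends and one auto scaling group) but needs 54 edges instead of 100; with ten load balancers and 200 instances, it needs 220 edges instead of 2,000.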

The following diagrams compare the model without split components and the improved data model.

Modeling without splitting components:

Improved modeling:

Conclusion

Through the application of batching, caching, concurrent query execution, and efficient data modeling, we have optimized the performance of our Amazon Neptune database, delivering faster response times and reducing operational overhead. These optimizations allow us to scale effectively while handling the diverse graph structures of our customers. By continuing to refine these techniques and explore new ways to improve database efficiency, we make sure our application remains robust and performant in the face of growing data demands.

Let us know your thoughts or share your own experiences with optimizing graph databases in the comments. To learn more about Amazon Neptune, visit the Amazon Neptune User Guide or check out Applying the AWS Well-Architected Framework for Amazon Neptune, which provides comprehensive guidance on building secure, high-performing, resilient, and efficient Amazon Neptune applications.

To explore how Orca Security can help secure your cloud environment using these optimized graph database techniques, visit our AWS Marketplace profile.

About the Authors

Nicholas Dentandt is a Senior Software Engineer and Team Lead at Orca Security, where he leads the development of exposure calculations and attack paths. With over 10 years of experience in software development, Nicholas specializes in backend systems, with a passion for building scalable and efficient solutions to meet complex technical challenges.

Hemmy Yona is a Senior Solutions Architect at Amazon Web Services based in Israel. With 20 years of experience in software development and group management, Hemmy is passionate about helping customers build innovative, scalable, and cost-effective solutions. Outside of work, you’ll find Hemmy enjoying sports and traveling with family.