The development of multimodal large language models (MLLMs) has brought new opportunities in artificial intelligence. However, significant challenges persist in integrating visual, linguistic, and speech modalities. While many MLLMs perform well with vision and text, incorporating speech remains a hurdle. Speech, a natural medium for human interaction, plays an essential role in dialogue systems, yet the differences between modalities—spatial versus temporal data representations—create conflicts during training. Traditional systems relying on separate automatic speech recognition (ASR) and text-to-speech (TTS) modules are often slow and impractical for real-time applications.

Researchers from NJU, Tencent Youtu Lab, XMU, and CASIA have introduced VITA-1.5, a multimodal large language model that integrates vision, language, and speech through a carefully designed three-stage training methodology. Unlike its predecessor, VITA-1.0, which depended on external TTS modules, VITA-1.5 employs an end-to-end framework, reducing latency and streamlining interaction. The model incorporates vision and speech encoders along with a speech decoder, enabling near real-time interactions. Through progressive multimodal training, it addresses conflicts between modalities while maintaining performance. The researchers have also made the training and inference code publicly available, fostering innovation in the field.

Technical Details and Benefits

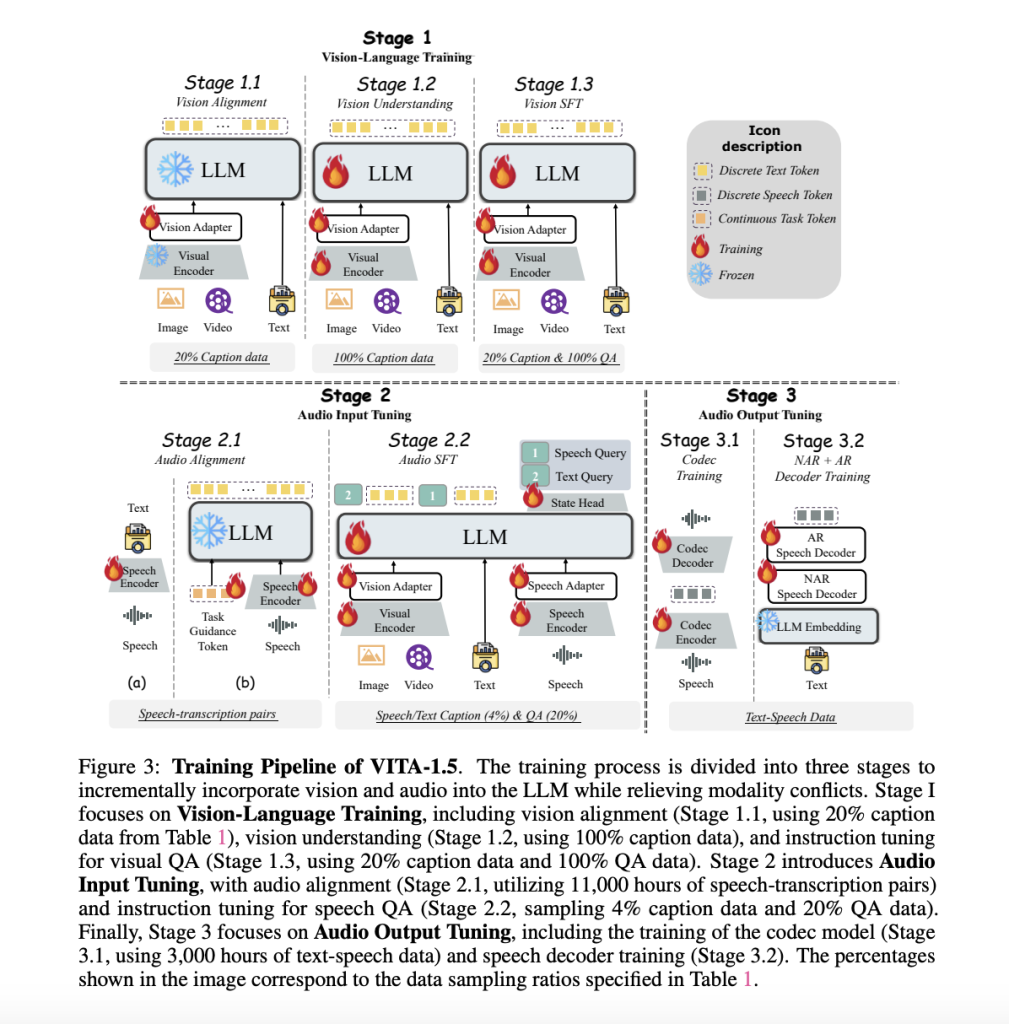

VITA-1.5 is built to balance efficiency and capability. It uses vision and audio encoders, employing dynamic patching for image inputs and downsampling techniques for audio. The speech decoder combines non-autoregressive (NAR) and autoregressive (AR) methods to ensure fluent and high-quality speech generation. The training process is divided into three stages:

- Vision-Language Training: This stage focuses on vision alignment and understanding, using descriptive captions and visual question answering (QA) tasks to establish a connection between visual and linguistic modalities.

- Audio Input Tuning: The audio encoder is aligned with the language model using speech-transcription data, enabling effective audio input processing.

- Audio Output Tuning: The speech decoder is trained with text-speech paired data, enabling coherent speech outputs and seamless speech-to-speech interactions.
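The three stages above can be pictured as a schedule that decides which parts of the model receive gradient updates at each step. The following is a minimal sketch, not the authors' training code: the module names and the choice of which modules are frozen in each stage are assumptions made for illustration, and only the stage order and data types come from the description above.

```python
from dataclasses import dataclass

# Hypothetical module names; the article does not specify them.
MODULES = (
    "vision_encoder", "vision_adapter", "llm",
    "audio_encoder", "audio_adapter", "speech_decoder",
)

@dataclass(frozen=True)
class Stage:
    name: str
    data: str
    trainable: frozenset  # modules updated in this stage (assumed, not from the paper)

SCHEDULE = [
    Stage("vision_language", "captions + visual QA",
          frozenset({"vision_adapter", "llm"})),
    Stage("audio_input", "speech-transcription pairs",
          frozenset({"audio_encoder", "audio_adapter"})),
    Stage("audio_output", "text-speech pairs",
          frozenset({"speech_decoder"})),
]

def requires_grad_flags(stage):
    """Map every module name to True (trained) or False (frozen) for one stage."""
    return {module: module in stage.trainable for module in MODULES}

for stage in SCHEDULE:
    flags = requires_grad_flags(stage)
    frozen = [m for m, train in flags.items() if not train]
    print(f"{stage.name} ({stage.data}): "
          f"train={sorted(stage.trainable)} frozen={frozen}")
```

In a real framework, the flags would be applied to parameter groups before each stage begins. Keeping earlier components frozen while a new modality is added is one common way to limit interference between modalities.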

Together, these stages address modality conflicts, allowing VITA-1.5 to handle image, video, and speech data in a single model. The integrated approach improves real-time usability by avoiding the latency of the cascaded ASR and TTS modules that traditional systems rely on.
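The dynamic patching and audio downsampling mentioned earlier both aim to keep the number of tokens passed to the language model manageable. The sketch below illustrates the general ideas only. The tile size of 448, the limit of 12 tiles, the downsampling factor of 4, and the use of simple averaging are all assumptions chosen for illustration, not values or methods confirmed by the article.

```python
def choose_grid(width, height, max_tiles=12):
    """Pick (cols, rows) with cols * rows <= max_tiles whose aspect ratio
    is closest to the image's; ties go to the grid with more tiles."""
    target = width / height
    candidates = [
        (cols, rows)
        for cols in range(1, max_tiles + 1)
        for rows in range(1, max_tiles + 1)
        if cols * rows <= max_tiles
    ]
    return min(
        candidates,
        key=lambda g: (abs(g[0] / g[1] - target), -g[0] * g[1]),
    )

def tile_boxes(cols, rows, tile=448):
    """Return (left, top, right, bottom) boxes covering an image that has
    been resized to (cols * tile) x (rows * tile) pixels."""
    return [
        (c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
        for r in range(rows)
        for c in range(cols)
    ]

def downsample_frames(frames, factor=4):
    """Average each group of `factor` consecutive feature frames.
    A ragged tail shorter than `factor` is dropped."""
    usable = len(frames) - len(frames) % factor
    pooled = []
    for start in range(0, usable, factor):
        group = frames[start:start + factor]
        pooled.append([sum(column) / factor for column in zip(*group)])
    return pooled

# Usage: a wide image is split into a wide grid of tiles,
# and 10 audio feature frames shrink to 2 pooled frames.
cols, rows = choose_grid(1600, 800)
print(cols, rows, len(tile_boxes(cols, rows)))
audio = [[float(i), 2.0 * i] for i in range(10)]
print(len(downsample_frames(audio)))
```

Real systems typically learn the audio downsampling (for example with strided convolutions) rather than averaging, but the effect on sequence length is the same: fewer, coarser frames reach the language model.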

Results and Insights

Evaluations of VITA-1.5 on various benchmarks demonstrate its robust capabilities. The model performs competitively in image and video understanding tasks, achieving results comparable to leading open-source models. For example, on benchmarks like MMBench and MMStar, VITA-1.5’s vision-language capabilities are on par with proprietary models like GPT-4V. Additionally, it excels in speech recognition tasks, achieving a low character error rate (CER) on Mandarin and a low word error rate (WER) on English. Importantly, the inclusion of audio processing does not compromise its visual reasoning abilities. The model’s consistent performance across modalities highlights its potential for practical applications.
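For readers unfamiliar with the speech metrics, both CER and WER are edit distances between a reference transcript and the model's output, normalized by the reference length; CER counts characters and WER counts words. The following is a minimal sketch of the standard definitions, not the evaluation script used by the researchers. The example sentences are made up for illustration, and removing spaces before computing CER is one common convention, assumed here.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences
    (substitutions, insertions, and deletions all cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def wer(reference, hypothesis):
    """Word error rate: word-level edit distance / reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)

def cer(reference, hypothesis):
    """Character error rate: character-level edit distance / reference
    character count, with spaces removed (an assumed convention)."""
    ref_chars = list(reference.replace(" ", ""))
    if not ref_chars:
        raise ValueError("reference must contain at least one character")
    hyp_chars = list(hypothesis.replace(" ", ""))
    return edit_distance(ref_chars, hyp_chars) / len(ref_chars)

# Illustrative inputs only; these are not benchmark samples.
print(wer("the cat sat on the mat", "the cat sit on mat"))
print(cer("你好世界", "你好视界"))
```

CER is the usual choice for Mandarin because written Chinese has no whitespace word boundaries, which makes word-level scoring depend on a segmenter.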

Conclusion

VITA-1.5 represents a thoughtful approach to resolving the challenges of multimodal integration. By addressing conflicts between vision, language, and speech modalities, it offers a coherent and efficient solution for real-time interactions. Its open-source availability ensures that researchers and developers can build upon its foundation, advancing the field of multimodal AI. VITA-1.5 not only enhances current capabilities but also points toward a more integrated and interactive future for AI systems.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.



The post VITA-1.5: A Multimodal Large Language Model that Integrates Vision, Language, and Speech Through a Carefully Designed Three-Stage Training Methodology appeared first on MarkTechPost.
