The self-attention mechanism is a core building block of transformer architectures, yet it faces significant challenges in both its theoretical foundations and its practical implementation. Despite the success of transformers in natural language processing, computer vision, and other areas, their development often relies on heuristic approaches, which limits interpretability and scalability. Self-attention mechanisms are also vulnerable to data corruption and adversarial attacks, which can make them unreliable in practice. Addressing these issues is necessary to improve the robustness and efficiency of transformer models.

Conventional self-attention techniques, including softmax attention, compute similarity-weighted averages to establish dynamic relationships among input tokens. Although these methods are effective, they have significant limitations. The lack of a formal framework makes them harder to adapt and to understand. Their performance also tends to degrade on adversarial or noisy inputs. Finally, their substantial computational demands restrict their use in resource-constrained settings. These limitations call for methods that are theoretically principled, computationally efficient, and robust to data anomalies.
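To make "similarity-weighted averages" concrete, here is a minimal NumPy sketch of standard scaled dot-product softmax attention. The function name, shapes, and random inputs are illustrative assumptions, not code from the paper.

```python
import numpy as np

def softmax_attention(Q, K, V):
    """Scaled dot-product attention: each output row is a weighted average of rows of V."""
    d = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d)                         # query-key similarities
    scores = scores - scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # each row now sums to 1
    return weights @ V, weights

# Illustrative shapes (assumed): 4 queries, 6 keys/values, head dimension 8.
rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))
K = rng.normal(size=(6, 8))
V = rng.normal(size=(6, 3))
out, weights = softmax_attention(Q, K, V)
```

Each row of `weights` is a probability distribution over the keys, so a single corrupted key or value can shift every output that attends to it. That sensitivity is the robustness concern raised above.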

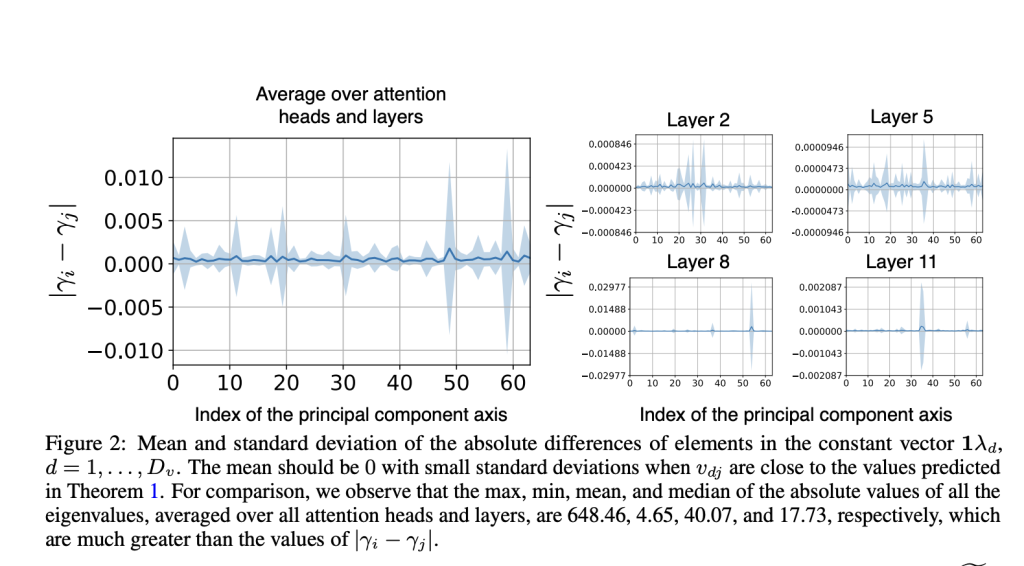

Researchers from the National University of Singapore propose a reinterpretation of self-attention using Kernel Principal Component Analysis (KPCA), establishing a theoretical framework for the mechanism. This interpretation brings several key contributions. It mathematically restates self-attention as a projection of query vectors onto the principal component axes of the key matrix in a feature space, which makes the mechanism more interpretable. It also shows that the value matrix encodes the eigenvectors of the Gram matrix of key vectors, establishing a close link between self-attention and the principles of KPCA. Building on this, the researchers present a robust mechanism to address data vulnerabilities: Attention with Robust Principal Components (RPC-Attention). It uses Principal Component Pursuit (PCP) to separate clean data from corruption in the primary matrix, which markedly improves resilience. The approach connects theoretical rigor with practical gains, increasing the effectiveness and dependability of self-attention mechanisms.
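The idea of projecting queries onto principal component axes of the keys in a feature space can be illustrated with textbook kernel PCA. The sketch below is generic KPCA, not the paper's derivation; the helper names and the choice of a linear kernel for the sanity check are assumptions made for illustration.

```python
import numpy as np

def linear_kernel(A, B):
    """Plain dot-product kernel; any positive semi-definite kernel could be passed instead."""
    return A @ B.T

def kpca_project(keys, queries, kernel, n_components):
    """Project queries onto the top principal axes of the keys in kernel feature space."""
    n = keys.shape[0]
    K = kernel(keys, keys)                                # (n, n) Gram matrix of the keys
    one_n = np.full((n, n), 1.0 / n)
    K_c = K - one_n @ K - K @ one_n + one_n @ K @ one_n   # center in feature space
    eigvals, eigvecs = np.linalg.eigh(K_c)                # eigenvalues in ascending order
    idx = np.argsort(eigvals)[::-1][:n_components]        # keep the largest ones
    A = eigvecs[:, idx] / np.sqrt(eigvals[idx])           # scale so feature-space axes have unit norm
    K_q = kernel(queries, keys)                           # (m, n) query-key kernel values
    one_m = np.full((queries.shape[0], n), 1.0 / n)
    K_q_c = K_q - one_m @ K - K_q @ one_n + one_m @ K @ one_n
    return K_q_c @ A                                      # (m, n_components) projection scores

rng = np.random.default_rng(0)
keys = rng.normal(size=(6, 8))
queries = rng.normal(size=(4, 8))
proj = kpca_project(keys, queries, linear_kernel, n_components=2)

# Sanity check: with a linear kernel, KPCA reduces to ordinary PCA (up to sign).
mean = keys.mean(axis=0)
_, _, Vt = np.linalg.svd(keys - mean, full_matrices=False)
pca_proj = (queries - mean) @ Vt[:2].T
```

Note that the projection scores depend only on kernel evaluations between queries and keys, combined through coefficients derived from the keys' Gram matrix. This is the structural parallel to attention that the KPCA view draws on.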

The construction combines several technical components. Within the KPCA framework, query vectors are projected onto the principal component axes according to their representation in feature space. Principal Component Pursuit is applied to decompose the primary matrix into low-rank and sparse components, which mitigates the problems caused by data corruption. For an efficient implementation, softmax attention is replaced with the more robust mechanism only in selected transformer layers, balancing efficiency and robustness. The approach is validated through extensive testing on classification datasets such as ImageNet-1K, segmentation datasets such as ADE20K, and language modeling benchmarks such as WikiText-103, demonstrating its versatility across domains.
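The low-rank plus sparse decomposition can be sketched with a generic Principal Component Pursuit solver. The version below uses the standard augmented Lagrangian iteration with commonly used default parameters; it is an illustration of PCP in general, not the authors' RPC-Attention code, and the function names and synthetic data are assumptions.

```python
import numpy as np

def soft_threshold(X, tau):
    """Elementwise shrinkage toward zero by tau."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)

def singular_value_threshold(X, tau):
    """Shrink the singular values of X by tau (proximal step for the nuclear norm)."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * soft_threshold(s, tau)) @ Vt

def principal_component_pursuit(M, max_iter=1000, tol=1e-7):
    """Split M into low-rank L and sparse S by minimizing ||L||_* + lam * ||S||_1 s.t. L + S = M."""
    n1, n2 = M.shape
    lam = 1.0 / np.sqrt(max(n1, n2))          # common default weight on the sparse term
    mu = n1 * n2 / (4.0 * np.abs(M).sum())    # common default penalty parameter
    L = np.zeros_like(M)
    S = np.zeros_like(M)
    Y = np.zeros_like(M)                      # Lagrange multiplier
    norm_M = np.linalg.norm(M)
    for _ in range(max_iter):
        L = singular_value_threshold(M - S + Y / mu, 1.0 / mu)
        S = soft_threshold(M - L + Y / mu, lam / mu)
        residual = M - L - S
        Y = Y + mu * residual
        if np.linalg.norm(residual) <= tol * norm_M:
            break
    return L, S

# Synthetic check (assumed setup): rank-2 matrix with about 5% of entries grossly corrupted.
rng = np.random.default_rng(0)
L_true = rng.normal(size=(60, 2)) @ rng.normal(size=(2, 60))
mask = rng.random((60, 60)) < 0.05
S_true = np.zeros((60, 60))
S_true[mask] = rng.choice([-5.0, 5.0], size=int(mask.sum()))
M = L_true + S_true

L_hat, S_hat = principal_component_pursuit(M)
rel_err = np.linalg.norm(L_hat - L_true) / np.linalg.norm(L_true)
```

Each iteration requires a full SVD, which is one reason a robust variant of this kind might be applied only in selected layers rather than throughout the network.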

The approach improves accuracy and robustness across different tasks. In object classification, it improves clean accuracy and lowers error rates under corruption and adversarial attacks. In language modeling, it achieves lower perplexity, meaning the model predicts held-out text better. In image segmentation, it performs better on both clean and noisy datasets, supporting its adaptability to varied conditions. These results illustrate its potential to overcome critical limitations of traditional self-attention methods.

By reformulating self-attention through KPCA, the researchers provide a principled theoretical basis and a resilient attention mechanism that addresses data vulnerabilities and computational challenges. These contributions improve the understanding and capabilities of transformer architectures and support the development of more robust and efficient AI applications.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post From Kernels to Attention: Exploring Robust Principal Components in Transformers appeared first on MarkTechPost.
