Graph Neural Networks (GNNs) have become a powerful tool for analyzing graph-structured data, with applications ranging from social networks and recommendation systems to bioinformatics and drug discovery. Despite their effectiveness, GNNs face challenges such as poor generalization, limited interpretability, oversmoothing, and sensitivity to noise: noisy or irrelevant node features can propagate through the network and degrade performance. Dropping strategies address some of these problems by removing components such as edges, nodes, or messages during training, which improves robustness. However, methods like DropEdge, DropNode, and DropMessage rely on random or heuristic criteria and offer no systematic way to identify and exclude the specific components that hurt the model. This motivates principled dropping methods that prioritize explainability and curb over-complexity during training.
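To make the contrast with explainability-driven dropping concrete, here is a minimal sketch of two random baselines, assuming a graph stored as an edge list plus a list-of-lists feature matrix. `drop_edge` uses a per-edge Bernoulli variant of DropEdge (the original DropEdge removes a fixed fraction of edges per epoch), and `drop_node` follows the DropNode idea of zeroing entire node feature rows and rescaling the rest by 1/(1 - p). The function names and parameters are illustrative, not any library's API.

```python
import random

def drop_edge(edges, p, rng):
    """Random edge dropping: keep each edge independently with probability 1 - p."""
    return [e for e in edges if rng.random() >= p]

def drop_node(features, p, rng):
    """Random node dropping in the DropNode style: zero a node's entire feature
    row with probability p and rescale the surviving rows by 1 / (1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    scale = 1.0 / (1.0 - p)
    # One random draw per node decides whether its whole row is zeroed.
    return [[0.0] * len(row) if rng.random() < p else [x * scale for x in row]
            for row in features]

rng = random.Random(0)
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
features = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [1.0, 1.0]]
print(drop_edge(edges, p=0.4, rng=rng))
print(drop_node(features, p=0.25, rng=rng))
```

Both functions decide purely at random: neither inspects what an edge or node contributes to the prediction, which is exactly the gap that XAI-based dropping aims to close.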

Recent work has explored explainable artificial intelligence (XAI) as a foundation for better GNN dropping strategies. Instead of random or heuristic criteria, XAI-based approaches use instance-level explanations, such as saliency maps or perturbation-based explanations, to pinpoint noisy or irrelevant nodes, so that the retained graph structure reflects components that contribute meaningfully to the model's predictions. These methods have significantly improved performance and robustness compared to traditional dropping techniques. Such a framework can build directly on gradient-based saliency methods while remaining adaptable to other explainability techniques, offering a more principled and effective way to improve GNN training and generalization.
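To illustrate what a gradient-based node saliency map computes, here is a minimal sketch for a hypothetical one-layer linear GCN, Z = Â X W, where Â is the symmetrically normalized adjacency with self-loops. Because this toy model is linear, the gradient of logit Z[i][c] with respect to X[j][k] is simply Â[i][j] * W[k][c], so gradient × input scores (a common saliency variant, chosen here for illustration rather than taken from the cited works) can be computed in closed form without an autodiff library. All function names and toy values are assumptions.

```python
import math

def normalized_adjacency(n, edges):
    """Return D^{-1/2} (A + I) D^{-1/2} for an undirected graph given as an edge list."""
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # self-loops
    for u, v in edges:
        a[u][v] = a[v][u] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def logit(a_hat, x, w, target, cls):
    """Logit Z[target][cls] of the linear model Z = A_hat X W."""
    return sum(a_hat[target][j] * x[j][k] * w[k][cls]
               for j in range(len(x)) for k in range(len(w)))

def node_contributions(a_hat, x, w, target, cls):
    """Gradient x input, summed over features, for each node j.

    For this linear model dZ[target][cls] / dX[j][k] = A_hat[target][j] * W[k][cls].
    """
    return [sum(a_hat[target][j] * w[k][cls] * x[j][k] for k in range(len(w)))
            for j in range(len(x))]

def saliency_map(a_hat, x, w, target, cls):
    """Node-level saliency: magnitude of each node's contribution to the logit."""
    return [abs(c) for c in node_contributions(a_hat, x, w, target, cls)]

# Path graph 0-1-2-3 with 2-dimensional features and 2 classes (toy values).
edges = [(0, 1), (1, 2), (2, 3)]
a_hat = normalized_adjacency(4, edges)
x = [[1.0, 0.0], [0.5, -1.0], [2.0, 1.0], [3.0, 3.0]]
w = [[0.7, -0.2], [0.1, 0.4]]
print(saliency_map(a_hat, x, w, target=1, cls=0))
```

For this linear model the signed contributions sum exactly to the logit, and nodes outside the target's one-hop neighborhood (node 3 here) get zero saliency. A real multi-layer GNN would need automatic differentiation to obtain the same gradients.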

Researchers from the University of Trento and the University of Cambridge have introduced xAI-Drop, an explainability-driven dropping regularizer for GNNs. During training, xAI-Drop identifies and excludes noisy graph elements, using local explainability and over-confidence as its key indicators. This keeps the model from focusing on spurious patterns and helps it learn more robust and interpretable representations. Empirical evaluations on diverse benchmarks show that xAI-Drop achieves higher accuracy and better explanation quality than existing dropping strategies. Key contributions include making explainability a guiding principle for dropping and demonstrating its effectiveness on node classification and link prediction tasks.
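Over-confidence is one of xAI-Drop's two signals, but the article does not say how confidence is computed. A common choice, assumed here, is the maximum softmax probability of a node's predicted class. The sketch below flags nodes whose confidence reaches a hypothetical threshold of 0.9 as candidates for the explainability check; `confident_nodes` and its threshold are illustrative, not the authors' code.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def confident_nodes(node_logits, threshold=0.9):
    """Return (node, confidence) pairs whose top-class probability is >= threshold.

    Confidence = max softmax probability (an assumption, not the paper's definition).
    """
    result = []
    for v, logits in enumerate(node_logits):
        conf = max(softmax(logits))
        if conf >= threshold:
            result.append((v, conf))
    return result

node_logits = [[4.0, 0.0, 0.0], [0.2, 0.1, 0.0], [0.0, 3.0, 0.0]]
print(confident_nodes(node_logits))
```

Under this assumption a confident prediction is not a problem by itself; it only becomes a drop candidate when its explanation is also weak.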

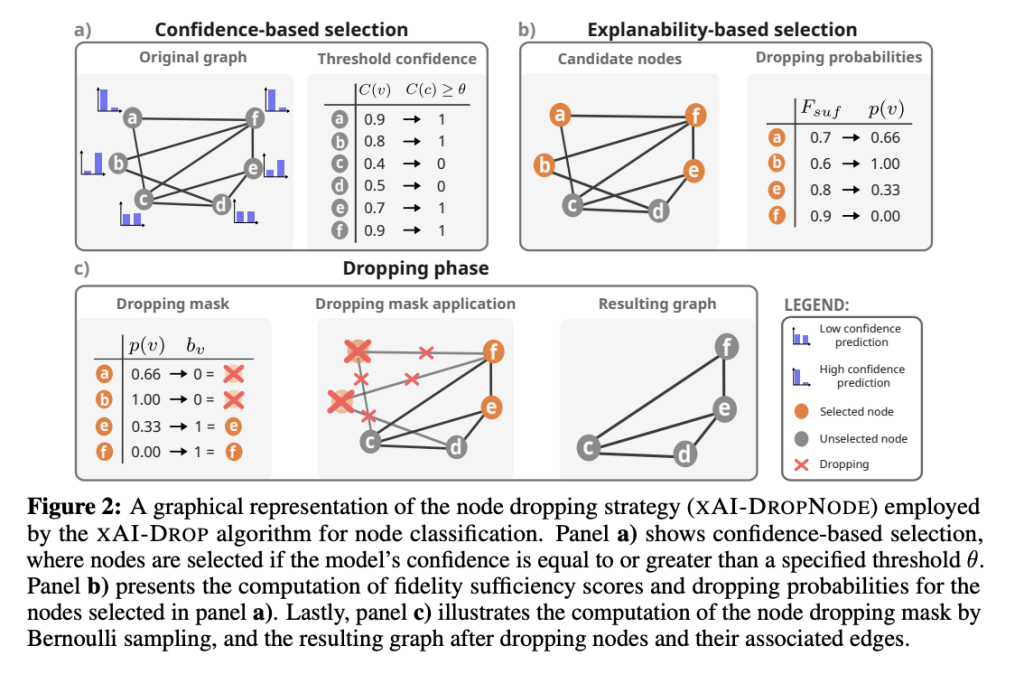

The XAI-DROP framework improves GNN training by selectively removing nodes or edges based on explainability and confidence. For node classification, it identifies nodes with high prediction confidence but low explainability (measured by fidelity sufficiency) and assigns them dropping probabilities using a Box-Cox transformation. A Bernoulli draw with these probabilities then decides whether each node and its incident edges are removed, producing a modified adjacency matrix for training. For link prediction, the same procedure targets edges, using edge-level confidence and explainability. By filtering out these noisy components, XAI-DROP improves model performance in both transductive and inductive settings.
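The node-level procedure can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: per-node confidence and explainability scores (for example, fidelity-sufficiency values in (0, 1]) are taken as given inputs, and the confidence threshold, the Box-Cox parameter `lam`, the min-max normalization, and the maximum drop rate `max_rate` are all hypothetical choices. The Box-Cox transform (x^λ - 1)/λ, or ln x when λ = 0, is monotonically increasing, so less explainable candidates always receive higher drop probabilities; λ only changes how those probabilities are spread.

```python
import math
import random

def box_cox(x, lam):
    """Box-Cox transform (x**lam - 1) / lam, or log(x) when lam == 0; needs x > 0."""
    if x <= 0:
        raise ValueError("Box-Cox requires strictly positive inputs")
    return math.log(x) if lam == 0 else (x ** lam - 1.0) / lam

def drop_probabilities(confidence, explainability,
                       conf_threshold=0.9, lam=0.5, max_rate=0.5):
    """Hypothetical mapping from scores to per-node drop probabilities.

    Only nodes with confidence >= conf_threshold are candidates; among them,
    lower Box-Cox-transformed explainability gives a higher probability.
    """
    probs = [0.0] * len(confidence)
    candidates = [v for v, c in enumerate(confidence) if c >= conf_threshold]
    if not candidates:
        return probs
    t = {v: box_cox(explainability[v], lam) for v in candidates}
    lo, hi = min(t.values()), max(t.values())
    for v in candidates:
        # Min-max normalize: 0 = least explainable candidate, 1 = most explainable.
        norm = 0.5 if hi == lo else (t[v] - lo) / (hi - lo)
        probs[v] = max_rate * (1.0 - norm)
    return probs

def drop_nodes(adj, probs, rng):
    """Draw Bernoulli(p_v) per node and zero the rows and columns of dropped nodes."""
    dropped = {v for v, p in enumerate(probs) if rng.random() < p}
    n = len(adj)
    new_adj = [[0 if i in dropped or j in dropped else adj[i][j] for j in range(n)]
               for i in range(n)]
    return dropped, new_adj

adj = [[0, 1, 1, 0],
       [1, 0, 1, 0],
       [1, 1, 0, 1],
       [0, 0, 1, 0]]
confidence = [0.95, 0.97, 0.60, 0.92]        # toy values
explainability = [0.80, 0.10, 0.50, 0.40]    # toy fidelity-style scores in (0, 1]
probs = drop_probabilities(confidence, explainability)
dropped, new_adj = drop_nodes(adj, probs, random.Random(0))
print(probs, dropped)
```

With these toy scores, node 2 is never dropped because it is not confident enough, node 0 is the most explainable candidate and gets probability 0, and node 1, the least explainable, gets the maximum rate of 0.5. For link prediction, the same probability mapping could in principle be applied to per-edge scores, removing sampled edges from the adjacency matrix instead of whole nodes.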

The experimental results show that XAI-DROP consistently outperforms random and other XAI-based dropping strategies across all datasets and GNN architectures tested, indicating that it effectively identifies and removes noisy graph components. For node classification, XAI-DROPNODE achieved the highest test accuracy and explainability among the compared methods. Likewise, for link prediction, XAI-DROPEDGE achieved the best AUC scores and improved explainability. These results underline the method's robustness and effectiveness in optimizing GNN performance across diverse scenarios.

In conclusion, XAI-DROP is a powerful framework for graph-based tasks that combines predictive accuracy and interpretability. Its ability to enhance explainability through saliency maps while maintaining or improving classification and prediction performance sets it apart from existing approaches. XAI-DROP proves its versatility by excelling across various datasets, architectures, and tasks, offering a promising solution for tackling challenges in graph-based learning applications.

FREE UPCOMING AI WEBINAR (JAN 15, 2025): Boost LLM Accuracy with Synthetic Data and Evaluation Intelligence–Join this webinar to gain actionable insights into boosting LLM model performance and accuracy while safeguarding data privacy.

The post XAI-DROP: Enhancing Graph Neural Networks GNNs Training with Explainability-Driven Dropping Strategies appeared first on MarkTechPost.

Source: Read More