Vision Transformers (ViTs) have become a cornerstone of computer vision, offering strong performance and adaptability. However, their size and computational demands make them hard to deploy, particularly on devices with limited resources. Models such as FLUX, a transformer-based text-to-image generator with billions of parameters, require substantial storage and memory, which makes them impractical for many use cases and limits the real-world use of advanced generative models. Addressing this calls for methods that reduce the computational burden without compromising performance.

Researchers from ByteDance Introduce 1.58-bit FLUX

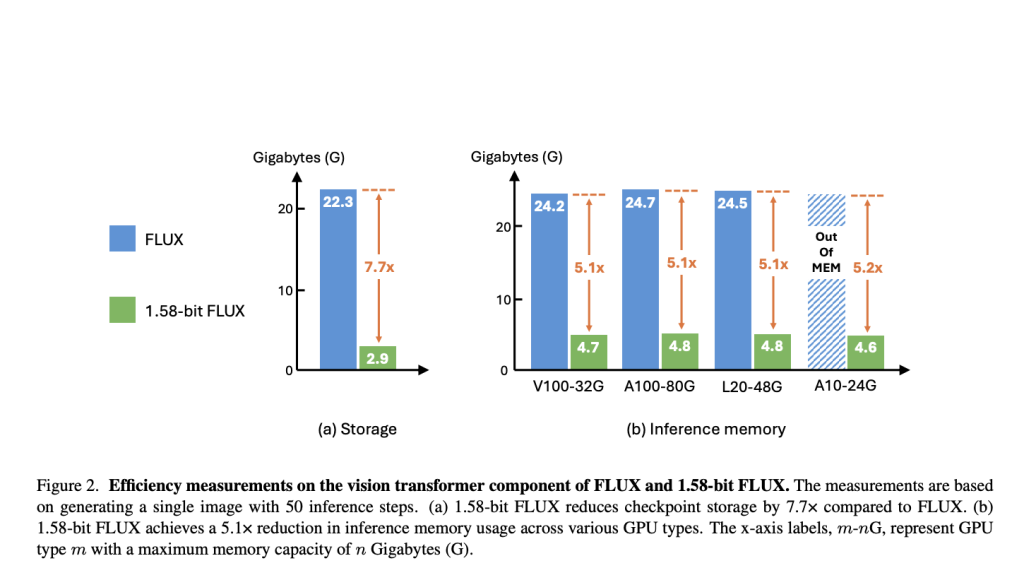

Researchers from ByteDance have introduced 1.58-bit FLUX, a quantized version of the FLUX Vision Transformer. The model quantizes 99.5% of its transformer parameters (11.9 billion in total) to 1.58 bits, significantly lowering computational and storage requirements. Notably, the process does not rely on image data; it instead uses self-supervision from the FLUX.1-dev model. Combined with a custom kernel optimized for 1.58-bit operations, this yields a 7.7× reduction in model storage and a 5.1× reduction in inference memory usage, making deployment in resource-constrained environments more feasible.

Technical Details and Benefits

The core of 1.58-bit FLUX is its quantization technique, which restricts each model weight to one of three values: -1, 0, or +1. A three-valued weight carries log2(3) ≈ 1.58 bits of information, which is where the name comes from, and the approach compresses parameters from 16-bit precision down to that level. Unlike traditional methods, the quantization needs no image data: it relies solely on a calibration dataset of text prompts. To handle the complexities of low-bit operations, the researchers developed a custom kernel that optimizes the computations. Together, these advances substantially reduce storage and memory requirements while preserving the ability to generate high-resolution images of 1024 × 1024 pixels.
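To make the idea of three-valued weights concrete, here is a minimal sketch in Python. It is not the method used for 1.58-bit FLUX, whose exact scheme is not described here. It assumes an "absmean" scaling rule borrowed from the BitNet b1.58 line of work, and the names `ternarize` and `dequantize` are hypothetical helpers chosen for illustration.

```python
import math

import numpy as np

# Three possible values per weight carry log2(3) bits of information.
BITS_PER_TERNARY_WEIGHT = math.log2(3)  # about 1.585


def ternarize(weights: np.ndarray, eps: float = 1e-8):
    """Map a float weight matrix to values in {-1, 0, +1} plus one float scale.

    Assumption: per-tensor absmean scaling, used here only for illustration.
    """
    scale = float(np.mean(np.abs(weights))) + eps
    # Round to the nearest integer, then clamp into the ternary range.
    ternary = np.clip(np.rint(weights / scale), -1, 1).astype(np.int8)
    return ternary, scale


def dequantize(ternary: np.ndarray, scale: float) -> np.ndarray:
    """Recover an approximate float matrix from ternary weights and the scale."""
    return ternary.astype(np.float32) * scale


# Toy example on a small random matrix (not real model weights).
rng = np.random.default_rng(0)
w = rng.normal(size=(4, 8)).astype(np.float32)
q, s = ternarize(w)
w_hat = dequantize(q, s)

print("distinct quantized values:", np.unique(q))
print("mean absolute error:", float(np.mean(np.abs(w - w_hat))))
```

In this sketch each matrix keeps a single floating-point scale next to its ternary weights, so the overhead per matrix is negligible. A production kernel would also pack several ternary values into each byte instead of spending a full `int8` on every weight.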

Results and Insights

The researchers evaluated 1.58-bit FLUX on the GenEval and T2I CompBench benchmarks. The model performed on par with its full-precision counterpart, with only minor deviations on specific tasks. On efficiency, it achieved a 7.7× reduction in storage and a 5.1× reduction in inference memory usage across various GPUs. It also showed notable latency improvements on deployment-friendly GPUs such as the L20 and A10. These results indicate that 1.58-bit FLUX balances efficiency and performance well enough to suit a range of applications.
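A quick back-of-the-envelope calculation shows how a storage figure of this size can arise. The sketch below assumes that the quantized 99.5% of parameters are stored in either 1.58 or 2 bits each and that the remaining 0.5% stay at 16 bits. The 2-bit layout is an assumption made for illustration, not something the article states, and `storage_reduction` is a hypothetical helper that ignores scales and other metadata.

```python
def storage_reduction(quantized_fraction: float,
                      stored_bits: float,
                      full_bits: float = 16.0) -> float:
    """Return the compression ratio relative to storing every weight at full_bits."""
    # Average bits per parameter after quantizing only part of the model.
    average_bits = (quantized_fraction * stored_bits
                    + (1.0 - quantized_fraction) * full_bits)
    return full_bits / average_bits


# Ideal case: every quantized weight costs exactly 1.58 bits.
ideal = storage_reduction(0.995, 1.58)

# Assumed practical case: each ternary weight occupies a 2-bit slot.
packed = storage_reduction(0.995, 2.0)

print(f"ideal 1.58-bit storage:  {ideal:.2f}x smaller")
print(f"assumed 2-bit packing:   {packed:.2f}x smaller")
```

Under the 2-bit assumption the ratio comes out at roughly 7.7×, which is consistent with the reported storage reduction. The ideal 1.58-bit case would be closer to 9.7×. The 5.1× memory figure is lower than either, which is plausible because inference memory also holds data other than weights.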

Conclusion

1.58-bit FLUX addresses critical challenges in deploying large-scale Vision Transformers. Its ability to significantly reduce storage and memory requirements without sacrificing performance is a step forward in efficient AI model design. There is still room for improvement, notably in activation quantization and fine-detail rendering, but this work lays a solid foundation for future advances. As research continues, deploying high-quality generative models on everyday devices becomes increasingly realistic, broadening access to powerful AI capabilities.

The post ByteDance Research Introduces 1.58-bit FLUX: A New AI Approach that Gets 99.5% of the Transformer Parameters Quantized to 1.58 bits appeared first on MarkTechPost.
