Reinforcement learning (RL), despite its popularity in a variety of fields, faces some fundamental difficulties that keep users from exploiting its full potential. To begin with, widely used on-policy algorithms like PPO suffer from sample inefficiency: they need many episodes to learn even basic actions. Off-policy methods like SAC and DrQ mitigate this problem and are compute-efficient enough to be applied in the real world, but they have drawbacks of their own. They often require dense reward signals, so their performance degrades when rewards are sparse or when the task has local optima. This suboptimality can be attributed to naive exploration schemes such as ε-greedy and Boltzmann exploration. Still, the scalability and simplicity of these algorithms are appealing enough that users accept the trade-off with optimality.
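For readers who have not seen them, the two naive exploration schemes named above can be sketched in a few lines. This is a minimal illustration for a discrete action set, not code from the paper; the function names and the NumPy-based setup are assumptions made for this example.

```python
import numpy as np

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a uniformly random action, else the greedy one."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))

def boltzmann_probs(q_values, temperature):
    """Softmax over Q-values; a higher temperature moves the result toward uniform."""
    logits = np.asarray(q_values, dtype=float) / temperature
    logits -= logits.max()  # subtract the max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()

def boltzmann(q_values, temperature, rng):
    """Sample an action in proportion to exp(Q / temperature)."""
    probs = boltzmann_probs(q_values, temperature)
    return int(rng.choice(len(probs), p=probs))
```

Both schemes are undirected: the randomness does not depend on how much the agent already knows about a state or action, which is the weakness that intrinsic rewards try to address.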

Intrinsic exploration has recently shown great potential in this regard: reward signals such as information gain and curiosity improve the exploration of RL agents. Approaches that maximize information gain are well grounded theoretically and have even achieved empirical state-of-the-art (SOTA) results. A gap remains, however, in balancing intrinsic exploration objectives with naive extrinsic ones. This article discusses recent research that claims to find such a balance in practice.
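To make the idea of an information-driven reward concrete, one common proxy is the disagreement among an ensemble of learned dynamics models: where the models disagree, the agent has more to learn. The sketch below is only an illustration of that proxy, not the estimator used in the paper; the function name and the array layout are assumptions.

```python
import numpy as np

def ensemble_disagreement(predictions):
    """Hypothetical intrinsic reward for one (state, action) pair.

    predictions: array of shape (n_models, state_dim) holding each
    ensemble member's next-state prediction.
    Returns the variance across models, averaged over state dimensions.
    """
    preds = np.asarray(predictions, dtype=float)
    return float(preds.var(axis=0).mean())
```

If all models agree the bonus is zero, and it grows as their predictions spread out, so the agent is drawn toward regions it has not yet modeled well.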

Researchers from ETH Zurich and UC Berkeley have put forth MAXINFORL, which improves on the older, naive exploration techniques and aligns them theoretically and practically with intrinsic rewards. MAXINFORL is a novel class of off-policy, model-free algorithms for continuous state-action spaces that augments existing RL methods with directed exploration. It takes the standard Boltzmann exploration technique and enhances it with an intrinsic reward. The authors also propose a practical auto-tuning procedure that simplifies the trade-off between exploration and rewards. As a result, algorithms modified by MAXINFORL explore by visiting trajectories that achieve the maximum information gain while still solving the task efficiently. The authors further show that the proposed algorithms retain the contraction and convergence properties that hold for other max-entropy RL algorithms, such as SAC.
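One way to picture this augmentation is as the max-entropy objective used by SAC with an extra information-gain term. The form below is a sketch under assumed notation, not a formula quoted from the paper: $\alpha_1$ and $\alpha_2$ are assumed temperature weights, $\mathcal{H}$ is the policy entropy, and $I_t$ stands for the information gain obtained at step $t$.

$$
J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t}\Big(r(s_t, a_t) + \alpha_1\,\mathcal{H}\big(\pi(\cdot \mid s_t)\big) + \alpha_2\, I_t\Big)\right]
$$

Setting $\alpha_2 = 0$ recovers the standard max-entropy objective, and an auto-tuning procedure would adjust the weights during training rather than leaving them as fixed hyperparameters.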

Let us take a step back and review intrinsic rewards, specifically information gain, to get the fundamentals right. They enable RL agents to acquire information in a more principled manner by directing them toward underexplored regions. In MAXINFORL, the authors use intrinsic rewards to guide exploration so that, instead of sampling at random, the agent covers the state-action space efficiently. To do this, the authors first modify ε-greedy selection to learn optimal Q-functions for both the extrinsic and the intrinsic reward, which together determine the action to be taken; this variant is ε–MAXINFORL. The same idea is then used to augment the Boltzmann exploration strategy. The augmented policy, however, presents a trade-off between value function maximization and the entropy of states, rewards, and actions. MAXINFORL introduces two exploration bonuses in this augmentation: policy entropy and information gain. With this strategy, the Q-function and policy update rules still converge to an optimal policy.
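The ε-greedy modification can be sketched as follows, assuming a discrete action set and two already-learned critics. Which critic is used on the exploratory branch is an assumption of this sketch, and the function and argument names are hypothetical rather than taken from the authors' code.

```python
import numpy as np

def eps_maxinforl_action(q_extrinsic, q_intrinsic, epsilon, rng):
    """Hypothetical helper: exploit the extrinsic critic, explore with the intrinsic one.

    With probability epsilon the action maximizes the intrinsic Q-values
    (directed exploration); otherwise it maximizes the extrinsic Q-values.
    """
    if rng.random() < epsilon:
        return int(np.argmax(q_intrinsic))
    return int(np.argmax(q_extrinsic))
```

The only change from plain ε-greedy is the exploratory branch: the uniformly random action is replaced by the action the intrinsic critic rates highest, so exploration steps are spent where the expected information gain is largest.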

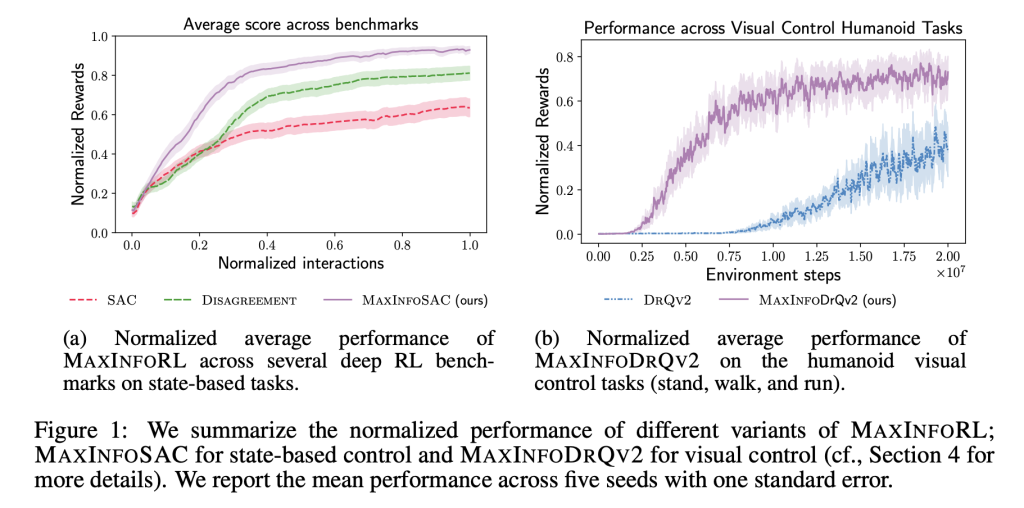

The research team evaluated MAXINFORL with Boltzmann exploration on several deep RL benchmarks covering state-based and visual control tasks. For state-based tasks it was combined with SAC, and for visual control tasks with DrQ. The authors compared MAXINFORL against various baselines across tasks of different dimensionality. MAXINFORL combined with SAC performed consistently across all tasks, while the other baselines struggled to maintain comparable performance. Even in environments requiring complex exploration, MAXINFORL achieved the best performance. The paper also compared SAC with and without MAXINFORL and found a stark improvement in speed. On visual tasks, MAXINFORL likewise achieved substantial gains in performance and sample efficiency.

Conclusion: The researchers presented the MAXINFORL algorithms, which augment naive extrinsic exploration techniques with intrinsic rewards by targeting high entropy in states, rewards, and actions. On a variety of benchmark tasks involving state-based and visual control, they outperformed off-policy baselines. However, because the approach requires training several models, it carries computational overhead.

The post Researchers from ETH Zurich and UC Berkeley Introduce MaxInfoRL: A New Reinforcement Learning Framework for Balancing Intrinsic and Extrinsic Exploration appeared first on MarkTechPost.
