Imitation learning (IL) is a robotics method in which robots are trained to mimic human actions from expert demonstrations. It relies on supervised machine learning and requires large amounts of human-generated data to guide the robot's behavior. Although effective for complex tasks, imitation learning is held back by the lack of large-scale datasets and by the difficulty of scaling data collection, a bottleneck that language and vision models do not face to the same degree. Learning from human video demonstrations is especially hard because robots cannot match the sensitivity and flexibility of human hands. These differences make it difficult for imitation learning to work reliably or to scale to general-purpose robot tasks.
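
Because imitation learning here is framed as supervised learning on (state, action) pairs, its simplest variant, behavior cloning, can be sketched as a regression problem. This is a generic illustration, not the ARMADA authors' method; the one-dimensional state, the linear policy, and the toy demonstrations are all hypothetical.

```python
# Behavior cloning, the simplest form of imitation learning, as supervised
# regression from states to expert actions. The 1-D state, the linear
# policy, and the toy demonstrations are illustrative assumptions.
from typing import List, Tuple


def fit_linear_policy(demos: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fit action = w * state + b to (state, action) pairs by least squares."""
    n = len(demos)
    if n < 2:
        raise ValueError("need at least two (state, action) pairs")
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    if var == 0:
        raise ValueError("states must not all be identical")
    w = cov / var
    return w, mean_a - w * mean_s


def policy(state: float, w: float, b: float) -> float:
    """At run time the robot simply applies the fitted state-to-action map."""
    return w * state + b


if __name__ == "__main__":
    # Hypothetical expert: step halfway toward a target position of 1.0.
    demos = [(s / 10, 0.5 * (1.0 - s / 10)) for s in range(11)]
    w, b = fit_linear_policy(demos)
    print(round(w, 3), round(b, 3))  # -0.5 0.5
```

Practical IL policies replace the linear map with a neural network over camera images and robot state, which is why the quantity and quality of demonstration data matter so much.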

Traditional IL relied on human-operated robots, an approach that was effective but had significant limitations. These teleoperation systems used gloves, motion capture, or VR devices, and depended on complex setups and a low-latency control loop. They also required physical robots and special-purpose hardware, which were difficult to scale. Although expert data collected this way enabled robots to perform tasks such as inserting batteries or tying shoelaces, the need for specialized equipment made these approaches impractical for large-scale or more general use.

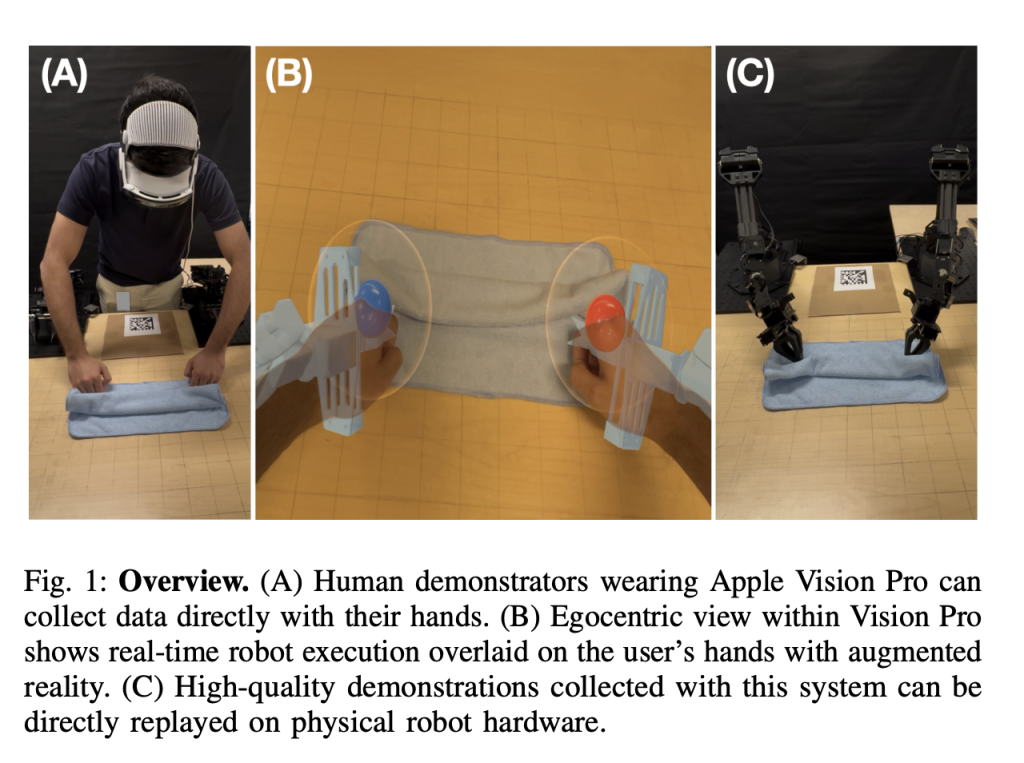

To address this, researchers from Apple and the University of Colorado Boulder proposed ARMADA, a system that connects the Apple Vision Pro headset to external robot control through a combination of ROS and WebSockets. This setup enabled communication between the devices and made the system plug-and-play: it can be adapted to different robot platforms, such as Franka and UR5, by replacing only the 3D model files and the data formatting for the headset. The ARMADA app handled robot visualization, data storage, and the user interface; it received transformation frames for the robot links, captured image frames from cameras, and tracked human skeleton data for processing. The robot node managed control, data storage, and constraint calculation, transforming skeletal data into robot commands and detecting workspace violations, singularities, and excessive speed so that feedback could be shown in real time.
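
The robot node's constraint checks (workspace, speed, singularity) can be sketched per frame as follows. This is a hypothetical illustration, not ARMADA's code: a 2-link planar arm stands in for a Franka or UR5 model, and the link lengths, workspace box, speed limit, and singularity threshold are assumed values.

```python
# Hypothetical per-frame constraint checks in the spirit of ARMADA's robot
# node. A 2-link planar arm stands in for a real Franka or UR5 model; link
# lengths, workspace bounds, and thresholds are illustrative assumptions.
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

LINK1_LEN = 0.4  # first link length in metres (assumed)
LINK2_LEN = 0.3  # second link length in metres (assumed)


@dataclass(frozen=True)
class Limits:
    x_range: Tuple[float, float] = (0.1, 0.7)   # allowed end-effector x (m)
    y_range: Tuple[float, float] = (-0.5, 0.5)  # allowed end-effector y (m)
    max_speed: float = 0.5                      # allowed end-effector speed (m/s)
    min_manipulability: float = 0.01            # |det J| below this is near-singular


def jacobian_det(q1: float, q2: float) -> float:
    """For a 2-link planar arm, det(J) = l1 * l2 * sin(q2); q1 drops out."""
    return LINK1_LEN * LINK2_LEN * math.sin(q2)


def check_constraints(
    target: Tuple[float, float],
    previous: Tuple[float, float],
    dt: float,
    q: Tuple[float, float],
    limits: Optional[Limits] = None,
) -> List[str]:
    """Return the names of the constraints violated by one commanded frame.

    target/previous: end-effector positions (m) for this and the last frame.
    dt: time between frames (s). q: joint angles (rad) IK produced for target.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    if limits is None:
        limits = Limits()
    violations: List[str] = []
    x, y = target
    in_x = limits.x_range[0] <= x <= limits.x_range[1]
    in_y = limits.y_range[0] <= y <= limits.y_range[1]
    if not (in_x and in_y):
        violations.append("workspace")
    if math.dist(target, previous) / dt > limits.max_speed:
        violations.append("speed")
    if abs(jacobian_det(*q)) < limits.min_manipulability:
        violations.append("singularity")
    return violations


if __name__ == "__main__":
    # A slow, in-bounds move with a bent elbow: no violations.
    print(check_constraints((0.5, 0.0), (0.49, 0.0), 1 / 30, (0.3, 1.2)))
    # A fast move outside the box with a fully stretched arm: all three.
    print(check_constraints((0.8, 0.0), (0.5, 0.0), 0.1, (0.0, 0.0)))
```

The returned names are the kind of signal a headset could turn into visual feedback. On a 6- or 7-joint arm, the singularity test would typically use the manipulability measure sqrt(det(J J^T)) rather than a 2x2 determinant.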

The robot's movements were aligned with the human's wrist and finger positions, tracked through ARKit on visionOS 2.0. Inverse kinematics calculated the corresponding joint positions, the gripper was opened and closed based on finger spacing, and the resulting joint trajectories were recorded for later replay. Constraint violations, such as singularities, workspace limits, and excessive speed, were visualized through color changes, virtual boundaries, or on-screen text. The researchers used ARMADA for three tasks: picking a tissue from a box, placing a toy into a cardboard box, and wiping a table with both hands. Each task had five starting states, and success was judged against task-specific criteria. Wearing an Apple Vision Pro running the ARMADA software on visionOS 2.0, participants provided 45 demonstrations under three feedback conditions: No Feedback, Feedback, and Post Feedback.
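
The retargeting step, from wrist position to joint angles and from finger spacing to gripper opening, can be illustrated with a minimal sketch. It is not ARMADA's implementation: an analytic 2-link planar IK replaces the full-arm IK a Franka or UR5 would need, tracked positions are assumed to arrive as plain coordinates in metres, and the link lengths, gripper width, and pinch thresholds are hypothetical values.

```python
# Hypothetical hand-to-robot retargeting sketch: wrist position -> joint
# angles via analytic IK for a 2-link planar arm, and thumb-index spacing ->
# gripper opening. All lengths and pinch thresholds are assumptions.
import math
from typing import Optional, Sequence, Tuple

LINK1_LEN = 0.4           # first link length (m), assumed
LINK2_LEN = 0.3           # second link length (m), assumed
GRIPPER_MAX_WIDTH = 0.08  # fully open gripper width (m), assumed
PINCH_CLOSED = 0.02       # fingertip distance mapped to a closed gripper (m)
PINCH_OPEN = 0.10         # fingertip distance mapped to a fully open gripper (m)


def planar_ik(x: float, y: float, flip_elbow: bool = False) -> Optional[Tuple[float, float]]:
    """Return joint angles (q1, q2) reaching (x, y), or None if unreachable."""
    c2 = (x * x + y * y - LINK1_LEN**2 - LINK2_LEN**2) / (2 * LINK1_LEN * LINK2_LEN)
    if not -1.0 <= c2 <= 1.0:
        return None  # target lies outside the ring the arm can reach
    s2 = math.sqrt(1.0 - c2 * c2)
    if flip_elbow:
        s2 = -s2  # choose the other of the two IK solutions
    q2 = math.atan2(s2, c2)
    q1 = math.atan2(y, x) - math.atan2(LINK2_LEN * s2, LINK1_LEN + LINK2_LEN * c2)
    return q1, q2


def forward_kinematics(q1: float, q2: float) -> Tuple[float, float]:
    """End-effector position for given joint angles, used to check the IK."""
    x = LINK1_LEN * math.cos(q1) + LINK2_LEN * math.cos(q1 + q2)
    y = LINK1_LEN * math.sin(q1) + LINK2_LEN * math.sin(q1 + q2)
    return x, y


def gripper_width(thumb_tip: Sequence[float], index_tip: Sequence[float]) -> float:
    """Map thumb-index fingertip distance linearly onto the gripper opening."""
    spacing = math.dist(thumb_tip, index_tip)
    t = (spacing - PINCH_CLOSED) / (PINCH_OPEN - PINCH_CLOSED)
    return min(max(t, 0.0), 1.0) * GRIPPER_MAX_WIDTH


if __name__ == "__main__":
    q = planar_ik(0.5, 0.2)
    if q is not None:
        print(forward_kinematics(*q))             # approximately (0.5, 0.2)
    print(planar_ik(1.0, 0.0))                    # None: out of reach
    print(gripper_width((0, 0, 0), (0.06, 0, 0)))  # about 0.04 (half open)
```

Clamping the normalized pinch value keeps the gripper command inside its physical range, so an exaggerated or very tight pinch still maps to fully open or fully closed.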

In the evaluation, real-time feedback visualization significantly improved replay success rates for the Pick Tissue, Declutter, and Bimanual Wipe tasks, with gains of up to 85% over the No Feedback condition. Post Feedback demonstrations also improved, but less than those collected with real-time feedback. Participants found the feedback intuitive and useful for understanding robot motion, and the system worked well for users with varying levels of experience. Common failure modes without feedback included imprecise robot poses and gripper issues. Participants adjusted their behavior during demonstrations, slowing down and changing hand positions, and were able to picture the feedback mentally even after it had been removed.

In summary, ARMADA addresses the challenge of scalable data collection for robot imitation learning by using augmented reality to provide real-time feedback, which improves data quality and compatibility with physical robots. The results show the importance of feedback for aligning robot-free demonstrations with real robot kinematics. While the study focused on relatively simple tasks, future research could explore more complex ones and refine the techniques. The system could serve as a baseline for future robotics research, particularly for training robot control policies through imitation learning from visual observations.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Apple Researchers Introduce ARMADA: An AI System for Augmenting Apple Vision Pro with Real-Time Virtual Robot Feedback appeared first on MarkTechPost.

Trending: LG AI Research Releases EXAONE 3.5: Three Open-Source Bilingual Frontier AI-level Models Delivering Unmatched Instruction Following and Long Context Understanding for Global Leadership in Generative AI Excellence….
