As your organization transitions its workloads to AWS, you may encounter various technical and operational factors to consider when migrating your Oracle databases hosted on Exadata to the Amazon Relational Database Service (Amazon RDS) for Oracle platform. These include:

- Very high I/O indicators on Exadata, such as I/O throughput and IOPS

- Insufficient insight into the Exadata features utilized by applications

- Apprehensions about potential performance degradation following migration without leveraging Exadata capabilities like Smart Scan, Storage Indexes, Smart Flash Cache, etc.

This post offers a comprehensive guide to address these factors to minimize impact on performance post migration. It helps you understand and assess Exadata database workload characteristics by analyzing the usage of Exadata-specific. The post also explores alternative strategies and optimizations available on Amazon RDS for Oracle.

We provide guidance on the following topics to help with these factors:

- Approach to Exadata workload migration to RDS Oracle

- Determine Exadata Smart IO usage using AWR

- Analyze database-level I/O usage using AWR

- Finding SQLs utilizing Exadata features

- Leveraging alternatives on RDS for Oracle for Exadata-specific features

Approach to Exadata workload migration to RDS Oracle

Take a strategic, granular approach to migrating Exadata databases to Amazon RDS for Oracle.

First, thoroughly understand the existing workloads on Exadata by analyzing I/O patterns, query complexity, data access methods, and utilization of Exadata-specific features.

Organizations consolidate multiple databases on an Exadata database machine to optimize resources and save costs. So, instead of analyzing all workloads running on Exadata as a whole, divide the workloads into manageable pieces. Focus on individual databases or schemas, which will become individual RDS Oracle instances or tenants of Multi-Tenant RDS Oracle instance. This granular approach allows for better assessment of performance and resource implications, enabling targeted optimization and validation to ensure a successful migration without unexpected performance degradation. Avoid a one-size-fits-all approach and tailor the migration strategy to the unique requirement of each workload. This upfront analysis and segmentation improve the chances of smooth migration to RDS for Oracle.

When evaluating Exadata databases for migration, remember that the application layer remains the same for Oracle Database, whether on Exadata or another platform. The key question is whether the application SQL will perform similarly. Focus on performance-related considerations, examining how the current database utilizes Exadata’s specialized features. Assessing the application’s dependence on Exadata-specific features is critical for predicting performance impacts and developing mitigation strategies when migration to Amazon RDS for Oracle. The migration evaluation should center around assessing performance implications, not just verifying application functionality. Careful analysis of the workload characteristics and benchmarking against the new platform are recommended to accurately gauge the expected performance outcomes.

Here are some steps you can follow:

- Analyze the Automatic Workload Repository (AWR) reports: AWR reports provide detailed performance statistics and can help identify areas where Exadata features are being utilized. Look for metrics related to I/O performance, SQL execution times, and wait events. AWR report has a section “Exadata Top Database Consumers†which can be used to understand which databases are most demanding. This will list top 10 databases by IOPS and Throughput. It will also list top 50 PDBs by IOPS and Throughput.

- By collaborating closely with AWS Database Solutions Architects and AWS Partners, organizations can take advantage of their specialized knowledge and expertise to streamline the process of gathering the necessary data to plan the Exadata to Amazon RDS for Oracle migration strategy. AWS Solution Architects and Partners can provide insights into best practices, tools, and methodologies for data collection and migration planning.

- Within Oracle Database, you can use various DBA history views or

V$views to gather information about Exadata feature usage. For example:V$IOSTAT_CELLandV$IOSTAT_FUNCTIONviews can show statistics related to I/O operations, indicating if Smart Scan is being used.V$SQLandV$SQL_PLANcan show run plans, which may reveal the usage of Smart Scan or other optimizations.V$ASM_OPERATIONcan show ASM operations, and you can use it to observe offloading operations that use Exadata features.

- If you have Oracle Enterprise Manager (OEM) configured for your Exadata, you can use it to monitor the system’s performance and gain insights into usage of various Exadata features.

- Use tools like Oracle’s SQL Performance Analyzer (SPA) or Real Application Testing (RAT) to profile and benchmark workloads.

Determine Exadata Smart IO usage using AWR

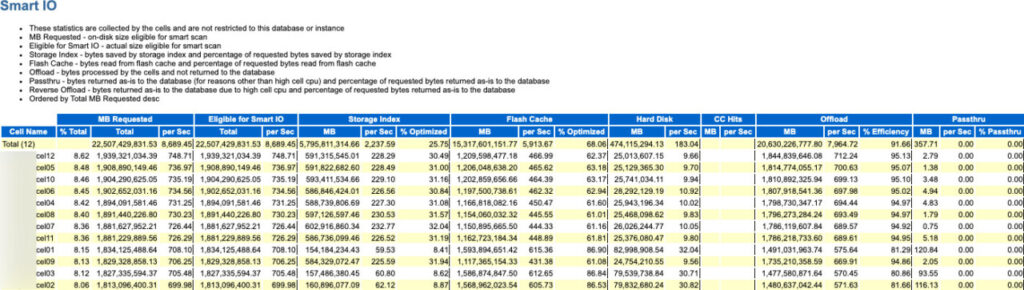

Cluster wide AWR report of a database or instance specific AWR report has a “Smart IO Statistics†section which contains summarized statistical information for smart I/O operations on the whole system and on each storage server. In particular, it tracks the amount of I/O that is eligible for optimization using Smart Scan, the savings from using storage indexes and columnar cache, the amount of data in Exadata Smart Flash Cache, and the amount of data filtered by the storage servers without transportation back to the database server. It also shows associated database statistics and more detailed information about situations where the storage servers are unable to perform predicate evaluation (also known as ‘passthru’).

This gives you an idea of how much of Exadata Smart IO features are being used by all the databases running on it. This section is not restricted to the database or instance where the AWR report is generated.

For an example, in the following screen capture (click to expand), the AWR report shows there are 12 storage cells. 25.75% of requested bytes were saved by the use of storage indexes, 68.06% of requested byes were read from flash cache. Offload suggests that 91.66% of the data was processed by the cells and was not returned to the compute nodes.

Based on observations for Smart IO usage for whole system, you can determine which Exadata-specific features are providing most optimization for the workloads running on it. If the Smart I/O section shows minimal usage of storage indexes or flash cache reads, you can conclude that your database does not heavily rely on Smart IO features. If significant dependency on Smart IO features is observed, the following section will help you pinpoint specific queries that may need optimization or redesign to preserve performance when migrating to RDS for Oracle.

The next crucial step is to analyze Smart IO usage at the database level. Prioritize this analysis for workloads scheduled for earlier migration. This targeted approach ensures efficient resource allocation and helps in creating a more effective migration roadmap.

Analyze database-level I/O usage using AWR

The “IO Stats†or “I/O statistics†section of AWR report for a database offers granular metrics on I/O activities, categorized by various functions, such as direct reads, Smart Scan, and buffer cache reads. These detailed I/O statistics can provide valuable insights into the workload characteristics and the effectiveness of the Exadata storage infrastructure in servicing the database’s I/O requirements. It’s important to note that the specific report sections may vary depending on whether the AWR data is collected at the instance level or the Real Application Clusters (RAC) level, which would provide a global perspective.

As seen in the following table, Smart Scan is processing 382.947 MB/s data. With absence of Smart Scan on RDS for Oracle, you will have to account for this additional throughput requirement.

| Function Name | Reads: Data | Reqs per sec | Data per sec | Writes: Data | Reqs per sec | Data per sec | Waits: Count | Avg Time |

| Direct Reads | 2.6T | 1498.31 | 749.248M | 20G | 10.92 | 5.67M | 344 | 279.07us |

| Smart Scan | 1.3T | 386.85 | 382.947M | 0M | 0.00 | 0M | 0 | |

| Buffer Cache Reads | 279.2G | 1643.05 | 79.246M | 0M | 0.00 | 0M | 5.8M | 358.19us |

| Direct Writes | 17.5G | 10.01 | 4.976M | 144.2G | 63.89 | 40.928M | 108 | 342.59us |

| Others | 38.3G | 31.74 | 10.868M | 7.1G | 6.34 | 2.027M | 71.8K | 638.13us |

| LGWR | 68M | 1.20 | .019M | 4.1G | 334.44 | 1.175M | 3127 | 336.73us |

| DBWR | 0M | 0.00 | 0M | 2.7G | 63.25 | .77M | 1 | .00ns |

| Streams AQ | 0M | 0.00 | 0M | 0M | 0.00 | 0M | 13 | 1.00ms |

| TOTAL: | 4.2T | 3571.16 | 1.2G | 178.2G | 478.83 | 50.57M | 5.8M | 361.62us |

When reviewing the AWR report or dictionary views, it’s crucial to properly contextualize the observed metrics and determine what constitutes a significant I/O throughput for the given database workload. For instance, the example indicates total throughput requirement of 1.2GB/s including 382.947 MB/s for Smart Scan. Amazon RDS for Oracle can potentially deliver significantly higher I/O throughput, with documented capabilities of up to 4000 MB/s for 256000 IOPS and IO size of 16KB. With IO2 Block Express and larger IO size, throughput can go beyond 4000 MB/s for RDS for Oracle instances. This highlights the need to compare the Exadata-reported metrics against the performance capabilities of the target Amazon RDS environment when planning the migration.

There are other places in the AWR report that you can see for details of I/O statistics, which gives you an idea about what type of IO operations are happening. For example, the following report shows read/write based on data file, temp file, and buffer reads. As an example, for Direct Reads (Temp File) write requests per second was 10.88 out of total write requests per second of 10.92. With respect to reads requests per second on temp file, we see 173.24 out of 1498.32. Both reads and writes on temp file through direct path is contributing 47.913 (42.244+5.669) MB/s throughput. Temp file operations may indicate SQLs doing hashing or sorting which didn’t fit in work areas from memory. The operations such as index creation, group by, order by, union, etc will cause IO operations on Temp Files. RDS for Oracle instance types that support local NVMe storage are a good fit for such use cases where temp files can be stored on local NVMe (instance store) to improve read/write operations to temp files. In this example, with usage of instance store on RDS Oracle for storing temporary tablespaces, you can reduce EBS throughput requirement by 47.913 MB/s.

| Function/File Name | Reads: Data | Reqs per sec | Data per sec | Writes: Data | Reqs per sec | Data per sec | Waits: Count | Avg Time |

| Direct Reads | 2.6T | 1498.32 | 749.253M | 20G | 10.92 | 5.67M | 344 | 279.07us |

| Direct Reads (Data File) | 2.4T | 1324.95 | 707.007M | 3M | 0.04 | .001M | 0 | |

| Direct Reads (Temp File) | 148.8G | 173.24 | 42.244M | 20G | 10.88 | 5.669M | 0 | |

| Direct Reads (Control File) | 8M | 0.13 | .002M | 0M | 0.00 | 0M | 344 | 279.07us |

| Smart Scan | 1.3T | 386.85 | 382.949M | 0M | 0.00 | 0M | 0 | |

| Smart Scan (Data File) | 1.3T | 386.79 | 382.922M | 0M | 0.00 | 0M | 0 | |

| Smart Scan (Temp File) | 96M | 0.07 | .027M | 0M | 0.00 | 0M | 0 | |

| Buffer Cache Reads | 279.2G | 1643.00 | 79.246M | 0M | 0.00 | 0M | 5.8M | 358.19us |

| Buffer Cache Reads (Data File) | 277.8G | 1641.88 | 78.852M | 0M | 0.00 | 0M | 5.7M | 357.17us |

| Buffer Cache Reads (Temp File) | 1.4G | 1.12 | .394M | 0M | 0.00 | 0M | 4041 | 1.81ms |

| Direct Writes | 17.5G | 10.01 | 4.976M | 144.2G | 63.89 | 40.928M | 108 | 342.59us |

| Direct Writes (Temp File) | 13.8G | 8.83 | 3.921M | 144.1G | 63.68 | 40.907M | 0 | |

| Direct Writes (Data File) | 3.7G | 1.14 | 1.055M | 75M | 0.21 | .021M | 0 | |

| Direct Writes (Control File) | 2M | 0.04 | .001M | 0M | 0.00 | 0M | 108 | 342.59us |

| Others | 38.3G | 31.16 | 10.867M | 7G | 5.33 | 1.999M | 70.3K | 648.14us |

| Others (Log File) | 27.9G | 8.03 | 7.919M | 0M | 0.00 | 0M | 290 | 207.65us |

| Others (Control File) | 10.3G | 21.29 | 2.927M | 58M | 1.02 | .016M | 60.1K | 505.08us |

| Others (Archive Log) | 0M | 0.00 | 0M | 4.5G | 1.26 | 1.264M | 0 | |

| Others (Flashback Log) | 0M | 0.00 | 0M | 2.4G | 1.56 | .675M | 5622 | 1.29ms |

| Others (Temp File) | 30M | 1.09 | .008M | 158M | 1.49 | .044M | 3922 | 1.98ms |

| Others (Data File) | 45M | 0.76 | .012M | 1M | 0.00 | 0M | 396 | 310.98us |

| LGWR | 68M | 1.20 | .019M | 4.1G | 334.41 | 1.175M | 3127 | 336.73us |

| LGWR (Log File) | 0M | 0.00 | 0M | 4.1G | 334.22 | 1.172M | 24 | 125.00us |

| LGWR (Control File) | 68M | 1.19 | .019M | 11M | 0.19 | .003M | 3103 | 338.37us |

| DBWR | 0M | 0.00 | 0M | 2.7G | 63.25 | .77M | 1 | .00ns |

| DBWR (Data File) | 0M | 0.00 | 0M | 2.7G | 62.96 | .768M | 1 | .00ns |

| DBWR (Temp File) | 0M | 0.00 | 0M | 8M | 0.29 | .002M | 0 | |

| Streams AQ (Data File) | 0M | 0.00 | 0M | 0M | 0.00 | 0M | 13 | 1.00ms |

| Streams AQ | 0M | 0.00 | 0M | 0M | 0.00 | 0M | 13 | 1.00ms |

| TOTAL: | 4.2T | 3570.54 | 1.2G | 178.1G | 477.80 | 50.541M | 5.8M | 361.67us |

So far, the analysis has focused on Exadata and database-level metrics to understand the utilization of Exadata features, particularly in terms of I/O. To further refine, it is possible to analyze at the SQL level to identify the specific SQL statements that are leveraging Exadata’s Smart IO capabilities. This can be accomplished by examining data from the Monitoring Smart I/O Using SQL Monitor, and SQL Explain Plan.

Finding SQLs utilizing Exadata features

There are multiple ways to identify SQLs that are using smart I/O in Exadata System Software. For this post, we discuss AWR and v$/ASH system views to identify SQLs that are using Exadata features. If you have access to Enterprise Manager, SQL monitor includes detailed statistics for the row sources, which includes information for smart I/O operations.

As a general first step, looking at the “SQL ordered by Reads†section in the AWR report gives you information about SQLs which are responsible for maximum reads. You can further analyze the SQL_IDs obtained by using execution plans to understand if they are using smart scan. The following report is a sample of the “SQL ordered by Reads†section.

| Total | ||||||||

| SQL_ID | Reads | Gets | Elapsed (s) | CPU (s) | IOWait (s) | Rows | Cluster (s) | Execs |

| 8pt40d4qwe6wa | 326,455,504 | 0 | 3,607.78 | 1,855.28 | 2,518.85 | 0 | 0.00 | 0 |

| 8q068zybtanjb | 326,455,504 | 0 | 3,607.78 | 1,855.28 | 2,518.85 | 0 | 0.00 | 0 |

| 88z7xkbq0y5m8 | 19,512,938 | 411,936 | 3,061.01 | 3,048.74 | 392.90 | 0 | 0.01 | 0 |

| 94r0dfuyakpsr | 15,669,184 | 184,550,928 | 3,607.71 | 3,044.34 | 705.00 | 0 | 0.22 | 2 |

| 4xnqx74fmw8rd | 14,540,078 | 19,488,109 | 304.75 | 283.96 | 24.49 | 1 | 0.07 | 1 |

| bgwmn5rcy6bnf | 14,472,343 | 15,010,745 | 636.04 | 456.84 | 208.72 | 1 | 2.97 | 1 |

| 87547jcnf6x97 | 14,472,278 | 15,010,113 | 635.85 | 456.67 | 208.71 | 63 | 2.96 | 1 |

| bgwv1cm164np3 | 12,216,146 | 10,606,974 | 481.66 | 339.44 | 171.59 | 8 | 0.05 | 2 |

| g33qbhb680yhd | 11,466,774 | 46,449,453 | 974.77 | 426.53 | 616.73 | 338 | 8.52 | 11 |

| 6qru79q74v2xx | 8,626,449 | 36,377,244 | 361.03 | 241.57 | 134.17 | 208 | 0.87 | 8 |

Alternatively, you can directly query Active Session History (ASH) views to obtain SQL_IDs for top SQLs which are waiting on events related to smart scan such as “cell smart table scanâ€. Other wait events can be found at Monitoring Smart I/O Using Database Statistics and Wait Events. You can use the following queries to either query gv$active_session_history (in-memory) or dba_hist_active_sess_history (history).

SELECT *

FROMÂ Â Â (SELECT sql_id, count(*)

FROM   gv$active_session_history

WHERE  event = 'cell smart table scan'

AND wait_time = 0

AND sample_time > (sysdate – 60 / 1440)

GROUP  BY sql_id

ORDER  BY count(*) DESC);

SQL_ID        COUNT(*)

----------Â Â Â Â Â Â Â ----------Â

6a759s0w8933y      62983742

7jaz5nktpyh3t     9362355

94r0dfqwrkpsr     8765452

c0u005sm63mqg    5897988

08r07spkmj1hk    4781238

50kx8nhsdqr74Â Â Â Â 213213

c3x1ctjfq81ph    186345

g33qbhb680yhd     176542

gbstw1ph628ck     12982

SELECT *.

FROM (SELECT sql_id, count(*)

FROM dba_hist_active_sess_history

WHERE event = ‘cell smart table scan’

AND wait_time = 0

AND dbid = &dbid

AND snap_id BETWEEN &bsnap AND &esnap

GROUP BY sql_id

ORDER BY count(*) DESC);

SQL_ID COUNT(*)

---------- ------------

hi86ns0wwf34y 9832340

e6azknktedh3 8912401

7kr0dfqqmitpsw 8164523

c0u005sm63mqg 261851

08r07spkmj1hk 118855

50kx8nhsdqr74 113236

b3jhgwej81ph 13469

k33qbweqw75y 8767

gbstw1ph628ck 298 It’s a good idea to understand the volume of bytes read for these top SQLs found in the preceding step. This can be obtained by querying the gv$sql view for the SQL_IDs and child cursor number. To obtain sql_child_number and sql_exec_id, use the following query:

SELECT sql_id, sql_child_number, sql_exec_id FROMÂ GV$ACTIVE_SESSION_HISTORY

WHERE sql_id = <SQL_ID from Above>With the sql_child_number, you can query v$sql to get the physical_read_bytes metric (phyrd_mb), io_interconnect_bytes (io_ret_mb), and io_cell_offload_eligible_bytes (io_elg_bytes):

SELECT ROUND(physical_read_bytes/1048576) phyrd_mb,

ROUND(io_cell_offload_eligible_bytes/1048576) io_elg_mb,

ROUND(io_interconnect_bytes/1048576) io_ret_mb,

(1-(io_interconnect_bytes/NULLIF(physical_read_bytes,0)))*100 "SAVING%"

FROM gv$sql WHERE sql_id = '6a759s0w8933y'

AND child_number = 0;

PHYRD_MB IO_ELG_MB IO_RET_MB SAVING%

---------- ---------- ---------- ----------

28833Â Â Â Â Â 28833Â Â Â Â Â 13098Â Â Â Â Â Â Â Â Â 45.4%The efficiency of Smart Scan is evident in this scenario. While 28,833 MB of data was read from storage, only 13,098 MB needed to be transferred to the compute nodes. This results in a 45.4% reduction in data transfer, which is a significant optimization.

The interpretation of the SAVING% is crucial:

A higher SAVING% indicates greater efficiency. It means that Smart Scan was able to filter out a larger portion of unnecessary data at the storage level, reducing network traffic and processing load on compute nodes.

Conversely, a lower SAVING% suggests that a larger proportion of the read data needed to be transferred to compute nodes, indicating less effective data filtering at the storage level.

In this case, the 45.4% saving is a positive result, showing that Smart Scan effectively reduced nearly half of the potential data transfer. This efficiency can lead to improved query performance, reduced network congestion, and lower processing requirements on compute nodes.

Repeat this activity for all the SQL_IDs that you found in the previous section to get a good idea of the number of bytes that are using Smart Scan or cell offloading.

Furthermore, you can find the SQL_IDs for the top SQL statements that are using the TABLE ACCESS STORAGE operation in their run plan by using a Common Table Expression (CTE). The CTE helps you first filter the SQL_IDs that satisfy the TABLE ACCESS STORAGE condition from the V$SQL_PLAN view. Then you can rank these SQL_IDs based on the desired metric using the DBA_HIST_SQLSTAT view. See the following code:

WITH TableAccessSQLs AS (

SELECT DISTINCT sql_id

FROM V$SQL_PLAN

WHERE operation LIKE '%TABLE ACCESS STORAGE%'

OR operation LIKE '%STORAGE INDEX%' ),

TopSQLs AS ( SELECT sql_id, RANK() OVER (ORDER BY <metric> DESC) AS rnk

FROM DBA_HIST_SQLSTAT WHERE sql_id IN (SELECT sql_id FROM TableAccessSQLs)

AND begin_interval_time >= TO_DATE('start_date', 'YYYY-MM-DD HH24:MI:SS')

AND end_interval_time <= TO_DATE('end_date', 'YYYY-MM-DD HH24:MI:SS') )

SELECT sql_id FROM TopSQLs WHERE rnk <= <top_n>Provide the following values:

- metric – Choose the metric based on how you want to determine the top SQL statements. Examples of metrics could be elapsed_time, cpu_time, disk_reads, or buffer_gets. Pick the metric that is most relevant to your analysis.

- start_date and end_date – Replace these with the start and end dates of the specific period you want to analyze in the format YYYY-MM-DD HH24:MI:SS.

- top_n – Specify the number of top SQL statements you want to retrieve. For example, if you want the top 10 SQL statements, set <top_n> to 10.

The next step in the process of identifying SQLs using Exadata-specific features is to look at execution plans. The purpose of this step is to identify exactly which execution plan row sources use Smart Scan and how much they benefit from it.

You can obtain the run plans of the SQL_IDs identified earlier by using the AWR SQL report (awrsqrpt.sql), explain plan, or SQL Monitor part of Enterprise Manager. The following report is an example of a run plan of a SQL. The table access storage full operation is performed on SALES and SALES_HIST, which are relatively big in size. There are a few other tables and temporary tables where storage full scan is performed.

| Id | Operation | Name | Rows | Bytes | Cost (%CPU) |

| 0 | SELECT STATEMENT | 476K(100) | |||

| 1 | Â Â SORT ORDER BY | 1820K | 199M | 476K (1) | |

| 2 | Â Â Â Â HASH GROUP BY | 1820K | 199M | 476K (1) | |

| 3 | Â Â Â Â Â Â VIEW | SALES_LOG_V | 1820K | 199M | 476K (1) |

| 4 | Â Â Â Â Â Â Â Â UNION-ALL | ||||

| 5 | Â Â Â Â Â Â Â Â Â Â VIEW | TRACK_DATE_FLAT_V | 43 | 4945 | 189K (1) |

| 6 | Â Â Â Â Â Â Â Â Â Â Â Â TEMP TABLE TRANSFORMATION | ||||

| 7 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â LOAD AS SELECT (CURSOR DURATION MEMORY) | SYS_TEMP_0FD9D7A30_9BBCEA31 | |||

| 8 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â PARTITION LIST ALL | 41 | 2911 | 103 (0) | |

| 9 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS BY LOCAL INDEX ROWID BATCHED | SALES_DATES_BY_APT | 41 | 2911 | 103 (0) |

| 10 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â BITMAP CONVERSION TO ROWIDS | ||||

| 11 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â BITMAP INDEX SINGLE VALUE | SALES_DATES_HIST_BIX | |||

| 12 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â HASH JOIN | 43 | 15867 | 189K (1) | |

| 13 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â JOIN FILTER CREATE | :BF0000 | 41 | 13489 | 471 (1) |

| 14 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â HASH JOIN OUTER | 41 | 13489 | 471 (1) | |

| 15 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â JOIN FILTER CREATE | :BF0001 | 41 | 10455 | 331 (1) |

| 16 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â NESTED LOOPS OUTER | 41 | 10455 | 331 (1) | |

| 17 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â HASH JOIN | 41 | 10291 | 84 (0) | |

| 18 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â HASH JOIN OUTER | 41 | 9676 | 43 (0) | |

| 19 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â VIEW | 41 | 8159 | 2 (0) | |

| 20 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL | SYS_TEMP_0FD9D7A30_9BBCEA31 | 41 | 2911 | 2 (0) |

| 21 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â INDEX STORAGE FAST FULL SCAN | SALES_UPLOAD_FK | 1 | 37 | 1 (0) |

| 22 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL | CLIENTS | 1 | 15 | 1 (0) |

| 23 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â VIEW PUSHED PREDICATE | 1 | 4 | 6 (0) | |

| 24 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â SORT GROUP BY | 1 | 78 | 6 (0) | |

| 25 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â HASH JOIN | 1 | 78 | 6 (0) | |

| 26 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â PARTITION LIST SINGLE | 1 | 41 | 4 (0) | |

| 27 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â INDEX RANGE SCAN | CLAIMS_FK_IXLOC | 1 | 41 | 4 (0) |

| 28 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â VIEW | 41 | 1517 | 2 (0) | |

| 29 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL | SYS_TEMP_0FD9D7A30_9BBCEA31 | 41 | 2911 | 2 (0) |

| 30 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â VIEW | 528 | 39072 | 140 (1) | |

| 31 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â HASH GROUP BY | 528 | 59136 | 140 (1) | |

| 32 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â VIEW | VW_DAG_0 | 528 | 59136 | 140 (1) |

| 33 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â HASH GROUP BY | 528 | 46992 | 140 (1) | |

| 34 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â JOIN FILTER USE | :BF0001 | 528 | 46992 | 139 (0) |

| 35 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â NESTED LOOPS | 528 | 46992 | 139 (0) | |

| 36 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â NESTED LOOPS | 533 | 46992 | 139 (0) | |

| 37 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â VIEW | 41 | 1517 | 2 (0) | |

| 38 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL | SYS_TEMP_0FD9D7A30_9BBCEA31 | 41 | 2911 | 2 (0) |

| 39 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â INDEX RANGE SCAN | PROV_REPORT_DATA_FILE_ID_IDX | 13 | 3 (0) | |

| 40 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS BY INDEX ROWID | PROV_REPORT_DATA | 13 | 676 | 5 (0) |

| 41 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â JOIN FILTER USE | :BF0000 | 7231K | 275M | 188K (1) |

| 42 | Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL | SALES | 7231K | 275M | 188K (1) |

| 43 | Â Â Â Â Â Â Â Â Â Â PARTITION LIST ALL | 1820K | 60M | 287K (1) | |

| 44 | Â Â Â Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL | SALES_HIST | 1820K | 60M | 287K (1) |

Alternatively, you can use the real-time SQL monitoring view v$sql_plan_monitor or Enterprise Manager to quantify the physical read and interconnect bytes for the TABLE ACCESS STORAGE FULL or INDEX – STORAGE FAST FULL SCAN row source of the SQL:

SELECT plan_line_id id,

LPAD(' ', plan_depth) || plan_operation || ' ' || plan_options || ' ' || plan_object_name operation,

ROUND(physical_read_bytes / 1048576) phy_rd_mb,

ROUND(io_interconnect_bytes / 1048576) io_ret_mb,

(1 - (io_interconnect_bytes / NULLIF(physical_read_bytes, 0))) * 100 "savings%"

FROM v$sql_plan_monitor WHERE sql_id = '<sql_id>' AND sql_exec_id = '<sql_exec_id>';| ID | Operation | phy_rd_mb | io_ret_mb | Â savings% |

| 0 | SELECT STATEMENT FILTER | 0 | 0 | |

| 1 | Â Â FILTER | 0 | 0 | |

| 2 | Â Â Â Â HASH GROUP BY | 0 | 0 | |

| 3 | Â Â Â Â Â Â HASH JOIN | 0 | 0 | |

| 4 | Â Â Â Â Â Â Â PART JOIN FILTER CREATE :BF0000 | 0 | 0 | |

| 5 | Â Â Â Â Â Â Â Â PARTITION HASH ALL | 0 | 0 | |

| 6 | Â Â Â Â Â Â Â Â Â HASH JOIN | 0 | 0 | |

| 7 | Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL SALES | 23412 | 829 | 96.5% |

| 8 | Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL SALES_ITEMS | 84571 | 2851 | 96.6% |

| 9 | Â Â Â Â Â Â PARTITION HASH JOIN-FILTER | 0 | 0 | |

| 10 | Â Â Â Â Â Â Â Â Â TABLE ACCESS STORAGE FULL PROVIDERS | 3521 | 2448 | 30.5% |

The output shows the SALES and SALES_ITEMS tables are using full table scan and are benefiting from Smart Scan. Smart I/O operations typically occur when a full table scan or index fast full scan is done on a row source. You will see TABLE ACCESS STORAGE FULL or INDEX – STORAGE FAST FULL SCAN used in the SQL plan of those SQLs. This is because Exadata offloads the work to storage cells to scan the table and return only the rows which are required. This reduces amount of data sent back to compute nodes. In the example above, there is more than 96% saving on interconnect bytes by performing full table scan at storage level for SALES and SALES_ITEMS. This also suggests that these tables are large enough to qualify for direct path reads.

Finding the top SQLs utilizing smart IO features will help focus on finding alternatives to minimize performance impact on these SQLs after migration from Exadata to RDS for Oracle.

Amazon RDS Oracle offers memory-optimized instances with an impressive memory-to-vCPU ratio, exemplified by the db.x2iedn.32xlarge instance boasting 4096GB of memory. By default, 75% of this memory (3072GB in this case) is allocated to the System Global Area (SGA), significantly expanding the buffer cache. This large buffer cache alters how Oracle accesses data. Traditionally, large tables (exceeding 2% of buffer cache size) use direct path reads, bypassing the buffer cache. However, with RDS Oracle’s expanded memory, tables that previously triggered direct path reads and smart scans on systems like Exadata may now fit within the buffer cache, shifting to buffer cache reads. This change impacts query optimization: when indexes exist on predicate columns and queries request a small subset of rows, the optimizer often favors index scans over full table scans. Additionally, RDS Oracle can leverage the Database Smart Flash Cache using instance store, which helps reduce buffer aging and disk I/O. This feature acts as an intermediary layer between memory and disk, keeping less frequently accessed data in faster storage and potentially boosting performance for read-intensive workloads. While these memory-rich instances may alter some query execution patterns compared to other Oracle environments, they generally offer substantial performance benefits when database design and queries are optimized to leverage these resources effectively.

Now that you have conducted a detailed analysis of your Oracle database running on Exadata, let’s explore the steps and alternatives you can consider to address the identified Exadata-specific features as you migrate to Amazon RDS for Oracle.

Leveraging alternatives on RDS for Oracle for Exadata-specific features

On non-Exadata platforms like Amazon RDS for Oracle, you can use alternative features and techniques to provide similar or better performance to Smart Scan and storage indexes. Although these features may not be identical to Smart Scan and storage indexes, they aim to optimize data access and improve query performance. Some of these alternatives are native Oracle database features and some are architectural features on Amazon RDS for Oracle:

- Partitioning – Partitioning a large table into smaller, more manageable segments can improve query performance by reducing the amount of data that needs to be scanned. Partition pruning allows the database to skip unnecessary partitions when running queries, effectively achieving similar benefits as Smart IO for specific partitions. Like storage index, partitioning helps prune the partitions based on the predicate condition.

- Indexing – Creating appropriate indexes on columns frequently used in queries can speed up data retrieval by allowing the database to perform index scans or index range scans, which can reduce the amount of I/O needed compared to Smart Scan. Proper indexing can optimize query runs, especially for OLTP workloads. Many of the databases that run on Exadata today were run on non-Exadata systems before, and you may find that some of the original pre-Exadata indexes were dropped or made invisible to make the performance more Exadata-friendly. If so, a natural step is to experiment with reenabling some of the original indexes.

- Materialized views – Materialized views are precomputed and stored query results that can significantly speed up query performance for specific patterns of data access. They can act as precomputed aggregates or subsets of data, reducing the need for complex joins and computations during query runs. For more information, refer to Basic Materialized Views.

- Query rewrite – Oracle’s query rewrite feature automatically rewrites certain queries to use materialized views or summary tables, depending on the query’s structure and the available precomputed data. This feature can lead to more efficient query runs. For more information, see Basic Query Rewrite for Materialized Views.

- Database In-Memory – For analytics workloads, the in-memory columnar functionality allows storage of data in column format in memory for faster analytical query processing, reducing the need for disk I/O and significantly improving query response times. For more information about Database In-Memory features, refer to Introduction to Oracle Database In-Memory. To learn more about how to use Oracle Database In-Memory, see Improve performance of real-time analytics and mixed workloads using the Database In-Memory option on Amazon RDS for Oracle.

- Amazon RDS for Oracle memory optimized instances – Exadata databases are often configured with relatively small System Global Area (SGA) sizes due to availability for storage-level optimizations. While migrating from Exadata to Amazon RDS for Oracle, you can consider memory optimized instances along with extended memory instances that have a larger memory-to-vCPU ratio. These instance types can help you configure larger SGAs without requiring you to increase number of vCPUs. This also helps to optimize on licensing requirements.

- Amazon RDS for Oracle Instance Store – Amazon RDS for Oracle has instance classes which support local NVMe storage called Instance Store. An instance store is based on Non-Volatile Memory Express (NVMe) devices that are physically attached to the host computer. The storage is optimized for low latency, random I/O performance, and sequential read throughput. You can use this local storage to configure Database Smart Flash Cache and/or temporary tablespaces. This feature will help reduce IOPS and Throughput requirements from EBS.

- Amazon RDS Storage types – Amazon RDS offers different EBS volumes such as Provisioned IOPS SSD, General purpose SSD, and Magnetic. These support different IOPS and throughput performance. Depending on workload requirement you can choose between these storage types. Magnet storage is legacy storage and not recommended. For IO sensitive critical production workload, it is recommended to use Provisioned IOPS SSD storage. Amazon RDS offers two types of Provisioned IOPS SSD storage: io2 Block Express storage (recommended)and io1 storage (previous generation). Use io2 Block Express which provides upto 256000 IOPS with sub-millisecond latency and 99.999% durability. The throughput varies based on the amount of IOPS provisioned and size of I/O operations. E.g. for 256000 IOPS with 16 KB IO size, you can achieve 4000 MB/s throughput.

- Amazon RDS automated backups and manual snapshots – Databases running on Exadata mainly rely on Oracle RMAN to take backups. These backup operations contribute to IOPS and throughput indicators. With Amazon RDS for Oracle, automated or manual snapshots are storage-level snapshots; these snapshots are stored in Amazon Simple Storage Service (Amazon S3). Moving from RMAN to Amazon RDS snapshot based backups will reduce the IOPS and throughput requirements on Amazon RDS for Oracle.

- Amazon RDS Multi-AZ deployments – RDS Multi-AZ provides high availability and durability to your RDS Oracle instance as it provides automated failover capabilities to a synchronized standby in different availability zone. It is also useful to offload backups from primary which helps to further reduce IO requirements on primary instance.

- Amazon RDS Read Replicas – Replica for RDS Oracle can be a good disaster recovery solution. It can also help with read heavy workloads, by offloading read only traffic to read replica instances. IO intensive read traffic such as business reporting or data warehousing scenarios can be run against read replicas, this will reduce resource requirement on primary instance.

- Amazon ElastiCache caching strategy – ElastiCache is a fully managed, in-memory caching service that provides microsecond read and write latencies that support flexible, real-time use cases. A cache aside solution can be created by using Amazon ElastiCache to store query results in key-value format. Subsequent executions of same SQL can benefit from cache hit as it provides microsecond latency. This is very beneficial for repetitive long running queries.

- Deconsolidate a monolithic database or schemas into multiple RDS for Oracle instances or other AWS database services with the approach of a microservices application design.

- Offload analytical workloads from OLTP databases by using purpose-built databases like Amazon Redshift for analytical workloads.

- Refer to the blog series Archival solutions for Oracle database workloads in AWS to understand approaches to archive data. Data archival helps with application performance and cost-optimization.

- Proof of Concept(PoC) – it’s recommended to conduct a proof of concept to test the workload and optimize it to meet workload demands. You can use Oracle’s Real Application Testing (Database Replay and SQL Performance Analyzer) during the proof of concept, along with other tests as needed. Proof of Concept can also be used to test workloads along with above mentioned strategies.

- Architect to meet RPO and RTO requirements – Amazon RDS for Oracle provides several options to choose from to setup HA and DR solution for your RDS Oracle instance. Based on RPO and RTO requirements you can use combination of these options. Review blog series “Managed disaster recovery with Amazon RDS for Oracle cross-Region automated backups – Part 1†and “Managed disaster recovery with Amazon RDS for Oracle cross-Region automated backups – Part 2†for more details.

Conclusion

In conclusion, this post has provided a comprehensive guide for addressing performance considerations when migrating Oracle databases from Exadata to Amazon RDS for Oracle. We’ve explored methods to analyze Exadata workload characteristics, including determining Smart IO usage, examining database-level I/O patterns, and identifying SQLs that utilize Exadata-specific features. The post also discussed various alternatives available on RDS for Oracle to mitigate potential performance impacts. By understanding these aspects and applying the suggested optimization techniques, organizations can more effectively plan their migration strategy, minimize performance degradation, and potentially enhance their database performance in the AWS environment.

About the Authors

Justin John is a Database Specialist Solutions Architect with AWS. Prior to AWS, Justin worked for large enterprises in Technology, Financials, Airlines, and Energy verticals, helping them with database and solution architectures. In his spare time, you’ll find him exploring the great outdoors through hiking and traveling to new destinations.

Justin John is a Database Specialist Solutions Architect with AWS. Prior to AWS, Justin worked for large enterprises in Technology, Financials, Airlines, and Energy verticals, helping them with database and solution architectures. In his spare time, you’ll find him exploring the great outdoors through hiking and traveling to new destinations.

Vishal Patil is a Senior Database Specialist Solutions Architect with Amazon Web Services. Vishal helps customers design architecture, migrate, and optimize Oracle workloads in AWS.

Vishal Patil is a Senior Database Specialist Solutions Architect with Amazon Web Services. Vishal helps customers design architecture, migrate, and optimize Oracle workloads in AWS.

Source: Read More