In How the Amazon TimeHub team designed resiliency and high availability for their data replication framework: Part 2, we covered different scenarios handling replication failures at the source database (Oracle), AWS Database Migration Service (AWS DMS), and target database (Amazon Aurora PostgreSQL-Compatible Edition). We then covered how we use Amazon CloudWatch metrics to monitor the health of the replication to achieve operational excellence. As part of our resilience scenario testing, when there was a failover between the Oracle primary database instance and primary standby instances, and the database opened up with RESETLOGS, AWS DMS couldn’t automatically read the new set of logs in case of a new incarnation.

In this post, we dive deep into the solution the Amazon TimeHub team used for detecting such a scenario and recovering from it. We then describe the post-recovery steps to validate and correct data discrepancies caused due to the failover scenario.

The impact of Oracle RESETLOGS on AWS DMS

Whenever Oracle writes redo logs to a new log file group, a new log sequence number (LSN) is generated. The sequence keeps incrementing until it’s reset in case of media recovery, for example, as a result of an unplanned failover scenario. Oracle RESETLOGS resets the log sequence number to 1 and the Oracle System Change Number (SCN) is incremented too. This happens when redo logs get corrupted and are not in sync with the current state of other files (data files and control file) in the Oracle database.

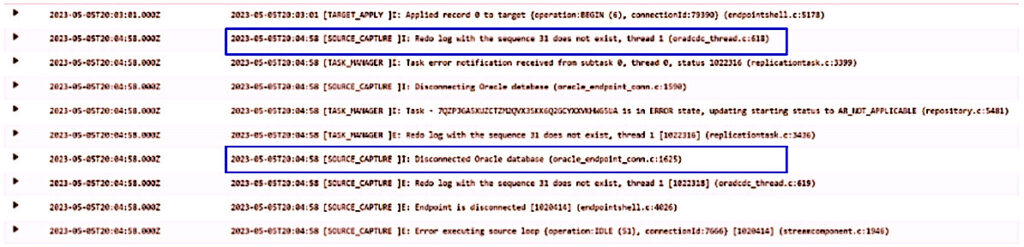

When AWS DMS is done processing an LSN, it looks for the next LSN. When the sequences are reset, AWS DMS is unable to detect reset logs, and keeps trying to find the next LSN and eventually the task fails. The following screenshot shows sample failure messages from the AWS DMS CloudWatch log group.

We will use this failure example to explain our approach to manage this failure. We evaluated three options to recover a failed task.

Option 1 – AWS DMS default RESETLOGS handling behavior

With AWS DMS version 3.5.1 and later, AWS DMS now addresses the challenges associated with RESETLOGS detection and log processing after a RESETLOGS operation. However, to take advantage of this feature, manual intervention is required. You need to stop the AWS DMS task and then resume it. Additionally, you must enable an Oracle database link from the AWS DMS instance to the primary server for this functionality to work correctly.

This option has limitations. The capability of AWS DMS to handle RESETLOGS doesn’t work when there are open transactions (swap files) in the system at the time of failover. In such cases, the RESETLOGS operation can’t be processed successfully.

Option 2 – Restart the AWS DMS task instead of resume

In AWS DMS, you have two options for starting a task after a failure or a manual stop: resume or restart.

If you choose to resume, AWS DMS starts the task from the last saved checkpoint. This checkpoint is automatically created and saved by AWS DMS during the previous run, allowing you to pick up where you left off without losing progress.

On the other hand, the restart option provides you with more granular control. The following screenshot shows the sub-options when restarting DMS tasks.

With this option, you can specify a particular timestamp or SCN in Oracle databases, indicating the point from which AWS DMS should begin the task. This capability is particularly useful when dealing with failovers and RESETLOGS in Oracle databases.

In scenarios where you know the exact time of a failover and RESETLOGS operation, you can use this feature to start the AWS DMS task from the next immediate SCN after that event. You can look up the exact SCN from the v$ARCHIVED_LOGS system view of the source Oracle database using the following SQL query:

We ran this SQL to find the SCN. The following screenshot shows the output.

Reviewing this output, we found that the SEQUENCE# is reset to 1 after 3916 and its corresponding RESETLOGS_TIME is 2023-07-26 18:06:18. We can also find FIRST_CHANGE# (11946493347137) that is the SCN of SEQUENCE# 1. We can restart the AWS DMS task from the timestamp mentioned in RESETLOGS_TIME or the first SCN after the RESETLOGS.

This option also has limitations. We do not have a way to know if AWS DMS was able to apply all logfiles to target until the last logfile of the previous incarnation of database (before the RESETLOGS operation). If all logfiles were not applied until SEQUENCE# 3916 and we chose the first SCN to restart the task, there is a possibility of data loss.

Option 3 – Perform a two-step restart of the task

In AWS DMS, you have option to stop the task. This option only supports system commit timestamps. We use this option to mitigate the issue of potential data loss from the previous option. In this option, we need to restart the task from a few SCNs behind the last SCN of the last incarnation before the RESETLOGS operation. The following screenshot from DMS console represents this option:

The process includes the following steps:

- Start from one SCN (SCN# 11946493114669, SEQUENCE# 3915) before the last SCN (SCN# 11946493301984, SEQUENCE# 3916) before the RESETLOGS operation and stop at timestamp 2023-07-26 18:06:18, which is the time for SEQUENCE# 1. This setup mitigates any potential data loss. The AWS DMS task stops automatically at the stop time. In the scenario of possible SCN overlap due to this, we prevent duplicate data insertion through primary keys in all of our tables.

- After the AWS DMS task stops, start from the first SCN# 11946493347137 for SEQUENCE# 1.

After testing and validating these options, we decided to use option three for our use case, because it is straightforward to follow and mitigates the risk of potential data loss.

Building an operational framework to handle an Oracle RESETLOGS failure scenario

After determining the solution, we built an operational framework to detect the RESETLOGS operation and validate data.

Our RESETLOGS data validation operates independently from AWS DMS and differs from the standard CDC validation task. While AWS DMS validation relies on redo logs parsing and batch validation of source and target data, this approach does not work during RESETLOGS scenarios. The validation process can take days to complete since it must parse through all data without filtering capabilities. This limitation led us to develop a custom validation framework that functions independent of redo logs.

Custom data validation framework design

In the event of this failure scenario, we must query the actual data in both source and target environments based on audit columns that are part of high-volume tables that AWS DMS can’t validate in a short time frame. The scope of validations is only for the data around the time of the source failover and the time when RESETLOGS occurred. The data validation framework queries the data in both source and target environments on the basis of audit columns. The following considerations are important while designing such a framework:

- Keep a buffer in terms of the window of the source failure. For example, the time of RESETLOGS minus 1 hour (depending on the last successful Oracle log sequence generation) to the time of RESETLOGS plus 1 hour. The records filter is based on the audit columns of each table.

- The framework is invoked only when a failover with RESETLOGS scenario happens.

Data validation workflow

Our framework needs to pull data from source and target to compare in memory. We chose AWS Glue to provide scalable resources and parallelism to complete validation as fast as possible. Also, the framework doesn’t need to run all the time, so AWS Glue being serverless is cost-effective as well.

The following diagram depicts the operational workflow of detecting and handling the RESETLOGS failure scenario.

The high-level steps of the validation framework are as follows:

- A CloudWatch alarm is set to capture RESETLOGS failures from Amazon CloudWatch

- The engineer receiving the alarm checks in the source database to confirm the scenario of RESETLOGS.

- If RESETLOGS isn’t confirmed, the engineer monitors the AWS DMS tasks to make sure it’s able to process the next few logs, and closes the case.

- If RESETLOGS is confirmed, the engineer finds the last SCN before RESETLOGS and the time of RESETLOGS from the source database.

- The engineer stops the validation task, modifies the replication task to start from the captured SCN and RESETLOGS timestamp, and then restarts the task, followed by monitoring.

- If the task is still stopped, the engineer finds the first SCN after RESETLOGS from the source database, modifies the replication task with that SCN, and attempts to restart the task, followed by monitoring. This step covers the last incarnation, and the AWS DMS replication task should start from the point of failure.

- The engineer then starts the AWS Glue based custom validation task to make sure there is no missing data.

- After confirming there is no missing or corrupt data, the engineer starts the AWS DMS CDC validation task to return to normal operations.

- In the next blog part of this series, we will dive deep into corrective measures we apply for our use case for missing / corrupt data.

Conclusion

In this post, we discussed how we built and tested a recovery framework for data replication using AWS DMS and AWS Glue during an Oracle RESETLOGS scenario. This framework helps us avoid data discrepancy scenarios whenever there are unplanned failovers on the source. This also helps us avoid impacts to downstream systems.

In the next post in this series, we will discuss how we developed a data validation framework to recover from disaster and disruption scenarios, so that data integrity is maintained between source and target.

About the Author

Ujjawal Kumar is a Data Engineer with Amazon’s Timehub team. He has over 12 years of experience as a data professional working in domains such as finance, treasury, sales, HCM, and timekeeping. He is passionate about learning all things data and implementing end-to-end data pipelines for data analytics and reporting. He is based out of Dallas, Texas.

Ujjawal Kumar is a Data Engineer with Amazon’s Timehub team. He has over 12 years of experience as a data professional working in domains such as finance, treasury, sales, HCM, and timekeeping. He is passionate about learning all things data and implementing end-to-end data pipelines for data analytics and reporting. He is based out of Dallas, Texas.

Amit Aman is Data Engineering Manager with Amazon’s TimeHub team. He is passionate about building data solutions that help drive business outcomes. He has over 17 years of experience building data solutions for large enterprises across retail, airlines, and people experience. He is based out of Dallas, Texas.

Amit Aman is Data Engineering Manager with Amazon’s TimeHub team. He is passionate about building data solutions that help drive business outcomes. He has over 17 years of experience building data solutions for large enterprises across retail, airlines, and people experience. He is based out of Dallas, Texas.

Saikat Gomes is part of the Customer Solutions team in Amazon Web Services. He is passionate about helping enterprises succeed and realize benefits from cloud adoption. He is a strategic advisor to his customers for large-scale cloud transformations involving people, process, and technology. Prior to joining AWS, he held multiple consulting leadership positions and led large-scale transformation programs in the retail industry for over 20 years. He is based out of Los Angeles, California.

Saikat Gomes is part of the Customer Solutions team in Amazon Web Services. He is passionate about helping enterprises succeed and realize benefits from cloud adoption. He is a strategic advisor to his customers for large-scale cloud transformations involving people, process, and technology. Prior to joining AWS, he held multiple consulting leadership positions and led large-scale transformation programs in the retail industry for over 20 years. He is based out of Los Angeles, California.

Source: Read More