In How the Amazon Timehub team built a data replication framework using AWS DMS: Part 1, we covered how we built a low-latency replication solution to replicate data from an Oracle database using AWS Database Migration Service (AWS DMS) to Amazon Aurora PostgreSQL-Compatible Edition. In this post, we elaborate on our approach to address resilience of the ongoing replication between source and target databases.

Amazon Timehub’s MyTime is a critical workforce management system that handles thousands of pay policy groups, accrual policies, and time-off request types. The requirement was that the solution had to replicate employee schedules, punches, and time-off data accurately to Aurora within the agreed business SLA for downstream application consumption. To enable this, we needed to build a robust reliability benchmark testing and data validation framework to maintain recoverability of data flow and accuracy of the replicated data. This framework tests the resilience mechanisms, such as failover scenarios, scaling, and monitoring, to make sure the solution can handle disruptions and provide accurate alerts. The framework also helps minimize the risk of disruptions to MyTime’s critical workforce management operations and achieve operational excellence.

Solution overview

As part of our vision to build a resilient data replication framework, we focused on the following:

- Resilient architecture – This involves addressing failover, scaling, and throttling scenarios across the source system, AWS DMS, the network, and Aurora PostgreSQL.

- Monitoring – This component includes metrics and alerts for detecting issues in the data pipeline.

- Data validation – We created checks to enforce accuracy of the data transferred through AWS DMS.

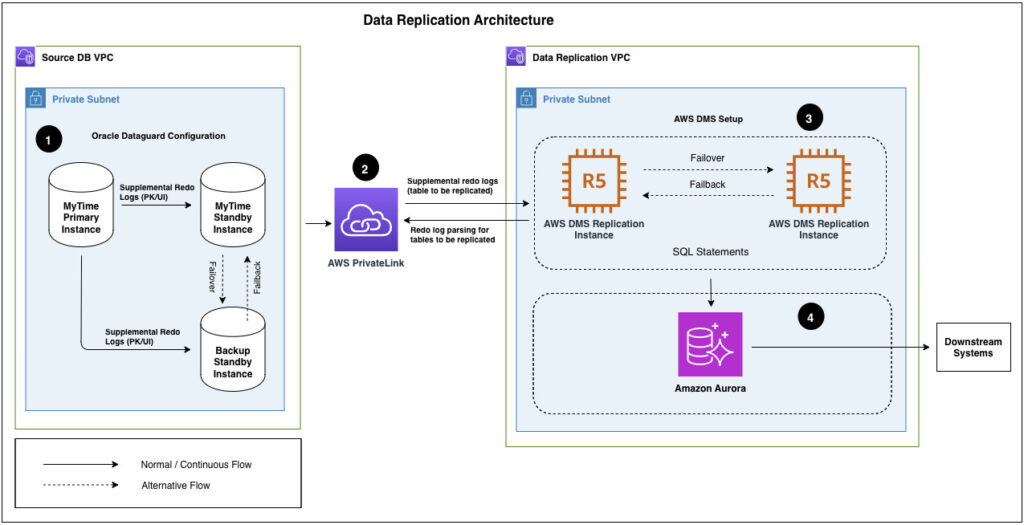

The following diagram illustrates the data replication architecture.

The components in the architecture are as follows:

- The source Oracle primary database, along with two Active Data Guard standby databases, is hosted within a VPC (Source DB VPC in the preceding diagram). The storage is configured with Oracle Automatic Storage Management (Oracle ASM). AWS DMS, the target Aurora PostgreSQL cluster, and its endpoint are in another dedicated VPC within private subnets (Data Replication VPC in the diagram).

- Because the source Oracle database is hosted in a different AWS account and VPC, we created an AWS PrivateLink VPC endpoint to the database endpoint service. We exposed both the source database and Oracle ASM service through the VPC endpoint in the source database AWS account.

- AWS DMS is set up with an Oracle read-only instance as source and Aurora PostgreSQL cluster endpoint as target, all within the same AWS Region. AWS DMS has two instances across Availability Zones within the same Region.

- The Aurora PostgreSQL cluster has three instances: a primary instance along with two read replicas in different Availability Zones. By default, the Aurora DB cluster endpoint connects to the writer (primary) instance of the Aurora database. Data is then automatically replicated to the reader instances in the other Availability Zones.

In the following section, we discuss the failure scenarios that we tested to make sure the solution can achieve the business targets.

AWS DMS replication failure scenarios

AWS DMS was set up as a Multi-AZ configuration within the same Region as the source. We tested with three types of failure scenarios: failures at the source, failures in AWS DMS processing, and failures at the target. We wanted to verify that AWS DMS can overcome these failure types without manual intervention.

Failures at the source

This category of tests focused on failures at the source. We wanted to check the reaction of AWS DMS and its ability to handle these gracefully. The following table summarizes the test scenarios and our observations.

| No. | Test Scenario | Mechanism | Observations |
| --- | --- | --- | --- |
| 1 | Stopping replication between the primary and standby | Stop the Oracle primary database to standby replication for a period of time, then resume it. | No connection interruption occurred because the read-only instance wasn’t disturbed. AWS DMS didn’t receive any new logs until the replication from the primary was resumed. AWS DMS automatically caught up after replication was resumed. |
| 2 | Stop the actual instance to which DMS connects. The DNS still points to the same instance, which is stopped. | Bring down the AWS DMS source for a period of time, then bring it back up without modifying source endpoint configuration. | Disconnect was observed and AWS DMS automatically connected and resumed replication with retry attempts after the primary database was back up. |
| 3 | Stop the actual instance to which DMS connects. Point the DNS to a new instance. | Shut down the read-only instance for a period of time, then bring it back up and switch the source endpoint configuration to point to primary standby. | Disconnect was observed when the read-only instance was brought down. Connection was established automatically after the endpoint was flipped to point to the primary standby. AWS DMS resumed reading redo logs. |
| 4 | Same as 3 | When the read-only instance goes down (in a production environment), the solution automatically points to the primary standby instance to continue the replication process. This is similar to #3, where the source endpoint is flipped in the backend to simulate a read-only instance outage. | Disconnect was observed when the read-only instance went down. Connection was reestablished automatically after the target group IP was flipped to point to the primary standby instance. AWS DMS resumed reading redo logs. |
| 5 | Point the DNS back to the instance DMS previously connected to | Modify the source endpoint to Oracle read-only instance. | Disconnect was observed during the IP flip. Connection was established automatically after the target group IP flip was complete, and AWS DMS resumed reading redo logs. |
| 6 | Point the DNS back and forth to the instance DMS previously connected to | Without shutting down any databases, modify the source endpoint to point to the primary standby instance, and switch back again later. | Disconnect was observed during the IP flip. Connection was established automatically after the target group IP flip was complete, and AWS DMS resumed reading redo logs. |
| 7 | Shut down the source instance and DNS does not point to any instance | Shut down the read-only instance and bring it back up with no instance in the source endpoint. | Connection error occurred, and AWS DMS attempted retries to make a connection until retry timeout. |
| 8 | Make the DNS point to no instance and make it point to the instance DMS connects to | Pull all hosts from the source endpoint without any shutdown of the read-only instance, then put the read-only instance back as the endpoint. | Disconnect was observed when IPs were deregistered from the target group, and connection was established automatically after target group IPs were reregistered. AWS DMS resumed reading redo logs. |
| 9 | Test for the failover with RESETLOGS | A failover occurs between the primary database and primary standby instances, and the database opens up with Oracle RESETLOGS. | AWS DMS tasks did not automatically read the new set of logs in case of the new incarnation. Tasks had to be manually stopped and resumed to read the logs from new incarnation. In our next post, we discuss the mechanisms to handle such scenarios in depth, without compromising data integrity between source and target. |

Failures in AWS DMS processing

The following table summarizes the tests done in AWS DMS replication instance failure scenarios.

| No. | Test Scenario | Observations |
| --- | --- | --- |
| 1 | Reboot the AWS DMS primary replication instance while incremental change data capture (CDC) processing is in progress. | AWS DMS automatically resumed after the reboot was complete and the replication instance was back up. |
| 2 | Reboot the primary replication instance with a planned failover. | AWS DMS automatically resumed after the failover. |
| 3 | Fail over the primary replication instance and reboot the secondary replication instance. | While the failover was in progress, AWS DMS threw an exception to reboot the secondary replication instance again. |
| 4 | Simulate invoking a maintenance window. | Because no updates were due, the replication instance didn’t cause any interruption to the task. |
| 5 | Refresh Oracle source (test environment) using RMAN (with backup from production Oracle source). | A full load was needed to reload the data to Aurora database in the test environment. |

Failures at the target

The following table summarizes the target failures test scenarios and our observations.

| No. | Test Scenario | Observations |
| --- | --- | --- |
| 1 | Induce failovers on the Aurora database writer node. The Aurora DB writer instance fails over and the priority 1 reader instance takes over as the writer node. | Disconnect was observed during the failover. After the failover was complete, AWS DMS automatically resumed the replication process. |
| 2 | Induce a failback by switching back the original Aurora DB writer node (reversing Step 1). | Disconnect was observed during the failback. After the failback was complete, AWS DMS automatically resumed replication. |
| 3 | Induce failover on the Aurora database primary (writer node) and delete the priority 1 reader node. The Aurora database writer instance fails over, and the priority 2 reader instance takes over as the writer node. | Disconnect was observed during the failover. After the failover was complete, AWS DMS automatically resumed replication. |
| 4 | Reboot the target instance. | Disconnect was observed during reboot and AWS DMS automatically connected and resumed replication, similar to the previous failover scenario. |
| 5 | Stop and start the Aurora DB cluster. | This was similar to the reboot scenario. Disconnect was observed while the cluster was restarting, and after the cluster came back up, AWS DMS was able to resume connection to the writer instance automatically. AWS DMS then automatically resumed the replication process. |

Monitoring of key metrics

As part of the monitoring framework for the data replication from Oracle to Aurora, we monitor health check metrics with the following objectives:

- Keep propagation delay (p95) between Oracle and Aurora under thresholds of SLAs agreed with the business (ranging from 5 minutes to 8 hours) for downstream systems

- Make sure data moved from Oracle to Aurora is accurate and the schedule, punch, and time-off information is accurately reflected in the replication target Aurora database without any discrepancies
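The first objective can be illustrated with a small calculation. The following is a minimal sketch, not the team's implementation: it computes a p95 over a list of propagation delay samples using the nearest-rank method and compares it with an SLA. The function names and the sample values are hypothetical.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Return the pct-th percentile (integer pct, 1-100) using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Nearest rank is 1-based: ceil(pct/100 * N)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def breaches_sla(delay_seconds, sla_seconds, pct=95):
    """True when the chosen percentile of propagation delay exceeds the SLA."""
    return percentile_nearest_rank(delay_seconds, pct) > sla_seconds


# Hypothetical propagation delay samples, in seconds
delays = [20, 35, 40, 45, 50, 55, 60, 70, 90, 400]

tight_sla_breached = breaches_sla(delays, 5 * 60)       # 5-minute SLA
loose_sla_breached = breaches_sla(delays, 8 * 60 * 60)  # 8-hour SLA
```

With these sample values, a single slow outlier is enough to push the p95 past a 5-minute SLA, while the 8-hour SLA is comfortably met.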

In this section, we discuss the broad categories of metrics that we monitor to achieve these objectives.

Propagation delay between Oracle and Aurora

AWS DMS publishes metrics to Amazon CloudWatch to help measure propagation delay from source to target. In addition to that, it provides metrics that help diagnose the root cause of the latency from source to target. We use the following key metrics:

- Target latency – Target latency is a measure of total replication delay from source to target. It’s measured as the difference between the current timestamp and the timestamp of the first event waiting to be committed on the target. It’s published to CloudWatch every 5 minutes. If all events have been applied to the target by the end of the fifth minute, the target latency reported at that point can be zero. If the target latency rises beyond its normal range (in our case, p95 is 1 minute and p99 is 5 minutes), it’s likely that AWS DMS is not able to apply the changes at the same rate at which they are arriving from the source.

- Source latency – Source latency represents the propagation delay between the source database and the AWS DMS replication instance. It’s defined as the gap between the current system timestamp of the AWS DMS instance and the latest event timestamp read by AWS DMS. In this way, source latency is a sub-component of target latency, and therefore target latency is never lower than source latency. If the target latency is high and source latency is also high, you should look at the cause of source latency first. In our case, in some instances, we found that there was data replication lag between the primary database server and the read-only instance, from which AWS DMS reads redo logs.

- CDCIncomingChanges – This metric is the measure of changes that are accumulated on the AWS DMS replication instance and waiting to be applied to the target. This is not an exact measure of the number of incoming changes coming from the source. If too many changes are accumulated on the replication instance (when incoming changes are high), AWS DMS stops reading further from the source and waits for changes to be applied to the target. This causes spikes in source latency due to target contention. Therefore, you can use CDCIncomingChanges as an indicator to gauge how many changes are pending at a given point in time, and thereby estimate how long the target latency will take to normalize.

- CDCChangesMemoryTarget – This metric represents how many changes out of CDCIncomingChanges are in the memory of the AWS DMS replication instance.

- CDCChangesDiskTarget – This represents how many changes out of CDCIncomingChanges are on the disk of the AWS DMS replication instance. The metric shows how many of the incoming changes can’t be held in the memory of the AWS DMS replication instance. When a considerable number of changes have spilled to disk, it’s an indicator that target latency will increase further. AWS DMS performance degrades when the records are fetched from disk, compared to when they are fetched from memory.
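The reasoning in the preceding list can be sketched as a small triage helper. This is an illustration under stated assumptions, not AWS DMS behavior: the 60-second "normal" threshold reuses the p95 figure quoted above for both latencies, and the drain estimate assumes constant apply and arrival rates, which real workloads rarely have. All function and parameter names are hypothetical.

```python
def diagnose_latency(source_latency_s, target_latency_s, changes_on_disk,
                     normal_latency_s=60):
    """Suggest where to look first when target latency is elevated."""
    if target_latency_s <= normal_latency_s:
        return "healthy"
    # Source latency is a sub-component of target latency, so check it first.
    if source_latency_s > normal_latency_s:
        return "investigate source"
    # Source is keeping up, so the delay is on the apply side.
    if changes_on_disk > 0:
        return "investigate target (changes spilling to disk)"
    return "investigate target"


def estimate_drain_seconds(pending_changes, apply_rate_per_s, arrival_rate_per_s):
    """Rough time for the backlog (for example, CDCIncomingChanges) to clear.

    Returns None when the backlog is not shrinking.
    """
    net_rate = apply_rate_per_s - arrival_rate_per_s
    if net_rate <= 0:
        return None
    return pending_changes / net_rate


# Hypothetical readings: low source latency, high target latency, spill to disk
verdict = diagnose_latency(source_latency_s=10, target_latency_s=650,
                           changes_on_disk=5000)
drain = estimate_drain_seconds(pending_changes=120000,
                               apply_rate_per_s=500, arrival_rate_per_s=300)
```

In this example, 120,000 pending changes with a net apply rate of 200 changes per second would take about 10 minutes to drain.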

AWS DMS replication instance metrics

We monitor system performance through CPU and memory utilization, storage consumption, and IOPS. These metrics show whether the replication instance is operating within predefined thresholds. They also help identify the root cause of task failures or task latency when the thresholds are breached.

The following are some important metrics that are published by AWS DMS:

- CPUUtilization – This is the percentage of CPU utilization of the AWS DMS replication instance. It is proportional to the number of tasks running and the load they are processing at any time. We observed that replication performance degrades when CPUUtilization stays above 50% for a sustained 5-minute interval.

- FreeableMemory – This is a combination of main memory components like buffer and cache in use that can be freed later. If FreeableMemory is too low, the replication instance can become a bottleneck in the replication process.

- FreeStorageSpace – Storage space is not part of the replication instance itself and can be provisioned independently of the replication instance size. The replication task logs and instance CloudWatch logs are stored on Amazon EBS gp2 network-attached storage attached to the replication instance. If it fills up, you have to delete the logs using the AWS DMS console. In some instances, when the task logging levels are set to debug or detailed debug, the storage space can fill up very quickly. It’s an important metric to monitor, and it can be used to invoke automated cleanup based on predefined criteria.
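As an illustration of alerting on these metrics, the following sketch builds the parameters for a CloudWatch alarm that fires when CPUUtilization stays above 50% for one 5-minute period. The alarm name, the instance identifier, and the choice of the `Average` statistic are assumptions made for the example; the post does not describe the team's actual alarm configuration.

```python
def build_cpu_alarm(replication_instance_id, threshold_pct=50.0, period_s=300):
    """Build keyword arguments for a CloudWatch alarm on DMS CPUUtilization."""
    return {
        "AlarmName": f"dms-{replication_instance_id}-cpu-high",  # hypothetical name
        "Namespace": "AWS/DMS",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "ReplicationInstanceIdentifier",
             "Value": replication_instance_id},
        ],
        "Statistic": "Average",       # assumption: average over the period
        "Period": period_s,           # one 5-minute evaluation window
        "EvaluationPeriods": 1,
        "Threshold": threshold_pct,
        "ComparisonOperator": "GreaterThanThreshold",
    }


# "my-replication-instance" is a placeholder identifier
params = build_cpu_alarm("my-replication-instance")

# With boto3 installed and credentials configured, the dictionary could be
# passed to CloudWatch like this (not executed here):
#   boto3.client("cloudwatch").put_metric_alarm(**params)
```

Keeping the parameter construction separate from the API call makes the thresholds easy to review and unit test without AWS access.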

Aurora system metrics

Aurora offers monitoring on system metrics like CPU utilization, read and write IOPS, and disk queue depth. We have set alarms on 12 such metrics, as listed in the following table.

| Metric | Unit | Threshold |
| --- | --- | --- |
| CPU utilization | Percentage | 30% |
| DB connections | Count | 50 |
| Write IOPS | Count/Second | 150,000 |
| Write latency | Seconds | 0.002 (2 milliseconds) |
| Read IOPS | Count/Second | 10,000 |
| Read latency | Seconds | 0.004 (4 milliseconds) |
| Replica lag | Milliseconds | 2,000 |
| Buffer-cache hit ratio | Percentage | < 80% |
| DiskQueueDepth | Count | 100 |
| FreeableMemory | Bytes | < 200 GB |
| FreeLocalStorage | Bytes | < 1 TB |
| Deadlocks | Count | 1 |
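The table can be expressed as data and evaluated against a set of observed values. The sketch below is only an illustration: the table marks three thresholds with `<`, and for the remaining metrics it assumes an alarm when the observed value is greater than or equal to the threshold, which the post does not state. Latencies and replica lag are in milliseconds, and memory and storage are in GB.

```python
import operator

# (comparison, threshold); ">=" is an assumption for rows without an explicit "<"
THRESHOLDS = {
    "CPU utilization": (">=", 30.0),         # percent
    "DB connections": (">=", 50),            # count
    "Write IOPS": (">=", 150_000),           # count/second
    "Write latency": (">=", 2.0),            # milliseconds
    "Read IOPS": (">=", 10_000),             # count/second
    "Read latency": (">=", 4.0),             # milliseconds
    "Replica lag": (">=", 2_000),            # milliseconds
    "Buffer-cache hit ratio": ("<", 80.0),   # percent
    "DiskQueueDepth": (">=", 100),           # count
    "FreeableMemory": ("<", 200),            # GB
    "FreeLocalStorage": ("<", 1_000),        # GB (1 TB)
    "Deadlocks": (">=", 1),                  # count
}

COMPARATORS = {">=": operator.ge, "<": operator.lt}


def breached_metrics(observed):
    """Return the sorted names of observed metrics that cross their threshold."""
    breached = []
    for name, value in observed.items():
        if name not in THRESHOLDS:
            continue  # ignore metrics without a configured threshold
        comparison, limit = THRESHOLDS[name]
        if COMPARATORS[comparison](value, limit):
            breached.append(name)
    return sorted(breached)


# Hypothetical snapshot of observed values
snapshot = {
    "CPU utilization": 45.0,
    "Buffer-cache hit ratio": 92.0,
    "Deadlocks": 0,
    "Replica lag": 2_500,
}
alerts = breached_metrics(snapshot)
```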

Custom metrics

We built a custom monitoring framework to integrate custom metrics to CloudWatch that aren’t available out of the box, such as data validation errors, lag report between the Oracle primary and read-only instance, and number of table scans compared to index scans.
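To show what publishing a custom metric could look like, the following sketch builds a CloudWatch `put_metric_data` payload from per-table row counts on the source and target. The post does not describe how its validation checks work, so the row-count comparison, the `Custom/DataReplication` namespace, the `RowCountMismatch` metric name, and the table names are all assumptions made for illustration.

```python
def build_validation_metrics(source_counts, target_counts,
                             namespace="Custom/DataReplication"):
    """Build a put_metric_data payload with one mismatch datapoint per table."""
    metric_data = []
    for table in sorted(source_counts):
        # A table missing on the target counts as fully mismatched
        mismatch = abs(source_counts[table] - target_counts.get(table, 0))
        metric_data.append({
            "MetricName": "RowCountMismatch",  # hypothetical metric name
            "Dimensions": [{"Name": "TableName", "Value": table}],
            "Value": float(mismatch),
            "Unit": "Count",
        })
    return {"Namespace": namespace, "MetricData": metric_data}


# Hypothetical row counts gathered from the Oracle source and the Aurora target
source = {"punches": 1_000_000, "schedules": 250_000}
target = {"punches": 999_990, "schedules": 250_000}
payload = build_validation_metrics(source, target)

# With boto3 installed and credentials configured, the payload could be
# published like this (not executed here):
#   boto3.client("cloudwatch").put_metric_data(**payload)
```

An alarm on a nonzero `RowCountMismatch` value would then surface discrepancies on the same dashboard as the built-in metrics.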

All these metrics and those mentioned earlier are published on a dashboard. The following image shows a snapshot of the dashboard:

These metrics help engineers analyze and identify underlying issues related to replication lag. For example, “DMS Recon” provides a visual representation of reconciliation-related issues. Similarly, the “Source Lag” metric shows the lag from the source when overall replication lag is identified. This helps break down the causes of replication lag.

Conclusion

In this post, we discussed how we built and tested a fault-resilient framework for data replication using AWS DMS and Aurora PostgreSQL-Compatible. We also covered the key metrics that should be monitored to detect issues early and react to such situations in a controlled manner. These measures help us avoid data integrity-related issues and impacts to downstream systems.

In the next post in this series, we discuss mechanisms to recover from Oracle reset log scenarios without compromising data integrity between source and target.

About the Authors

Ujjawal Kumar is a Data Engineer with Amazon’s Timehub team. He has over 12 years of experience as a data professional working in domains such as finance, treasury, sales, HCM, and timekeeping. He is passionate about learning all things data and implementing end-to-end data pipelines for data analytics and reporting. He is based out of Dallas, Texas.

Amit Aman is a Data Engineering Manager with Amazon’s TimeHub team. He is passionate about building data solutions that help drive business outcomes. He has over 17 years of experience building data solutions for large enterprises across retail, airlines, and people experience. He is based out of Dallas, Texas.


Saikat Gomes is part of the Customer Solutions team in Amazon Web Services. He is passionate about helping enterprises succeed and realize benefits from cloud adoption. He is a strategic advisor to his customers for large-scale cloud transformations involving people, process, and technology. Prior to joining AWS, he held multiple consulting leadership positions and led large-scale transformation programs in the retail industry for over 20 years. He is based out of Los Angeles, California.
