FundApps, founded in 2010, is one of the pioneers in the Regulatory Technology (RegTech) space, which includes compliance monitoring and reporting. RegTech requires agility to process large volumes of data, generate reports, and automate operational efficiency to meet compliance requirements. Equipped with the insight that every asset manager needlessly struggles with the same tasks to comply with the same regulation, FundApps used the combination of cloud computing and regulatory expertise to launch the first compliance as a service (CaaS) product for financial institutions.

According to FundApps, they enable compliance teams to respond efficiently to regulatory change, increase certainty, and reduce complexity associated with compliance processes. FundApps serves the financial services industry (FSI), including investment banking, hedge funds, pension funds, and asset management. FundApps helps make compliance simple for over 140 financial institutions, and monitors over $20 trillion assets under management (AUM) on behalf of their clients.

FundApps decided to rearchitect their environment and transform it to a cloud-based architecture on AWS to better support the growth of their business. For more information, see Faster, cheaper, greener: Pick three — FundApps modernization journey. In this post, we focus on the persistence layer of the FundApps regulatory data service. You learn how FundApps improved the service scalability, reduced cost, and streamlined operations by migrating from SQL Server database to a cloud-centered solution combining Amazon Aurora Serverless v2 with Babelfish for Aurora PostgreSQL and Amazon Simple Storage Service (Amazon S3).

Background

To achieve its mission of making compliance simple, FundApps automates the collection and processing of large volumes of data relevant to financial regulation and compliance. This data spans over a decade with more than 60 million files. FundApps combines this extensive dataset with its clients’ positions in the FundApps Rule Engine to generate actionable results. These results might include disclosures that clients need to make to regulatory authorities, notifications to clients that they need to provide additional data, or information about disclosures that FundApps has automated on behalf of clients.

A key component of the FundApps compliance service is the Regulatory Data service. This service is responsible for ingesting thousands of files daily from various regulatory sources and processing the data for use by the FundApps Rule Engine. FundApps’s clients need accurate regulatory data for their disclosures. FundApps developed the original version of the Regulatory Data service in 2014 using a three-tier architecture with Windows-based Amazon Elastic Compute Cloud (Amazon EC2) instances and the F# framework. The service suffered from inefficient resources utilization and operational issues, and it cost a lot to run.

The Regulatory Data service ran its database on a static number of EC2 instances, overprovisioning resources to support peak load. FundApps shared the database with other services, causing noisy neighbor problems and additional overheads that complicated maintenance coordination. Engineers had to spend weekends and evenings patching and maintaining the databases. Lastly, SQL Server licensing made the service cost-inefficient.

Solution overview

Before we discuss the design choices for the new persistence layer, let’s discuss the data involved in the service (which SQL Server previously stored). At a high level, the data can be divided into three categories:

- Raw source data – This could be various file formats, such as PDF, HTML pages, CSV, XML, Excel spreadsheets, and JSON files

- Processed data – This is a binary version of the data parsed and processed by the service; the service has cleaned it and prepared it for use by other systems

- Metadata – This is broadly relational data that covers where source data has come from, when it was processed, hashes of the raw source data, links between the processed data and the raw data that produced it, and so on

The pattern of reads from the API layer to the database layer varies inconsistently because it depends on when clients decide to process their positions. Also, the background ingestion services write lots of data into the database in bulk at various points throughout the day, leading to very spiky loads on the database layer.

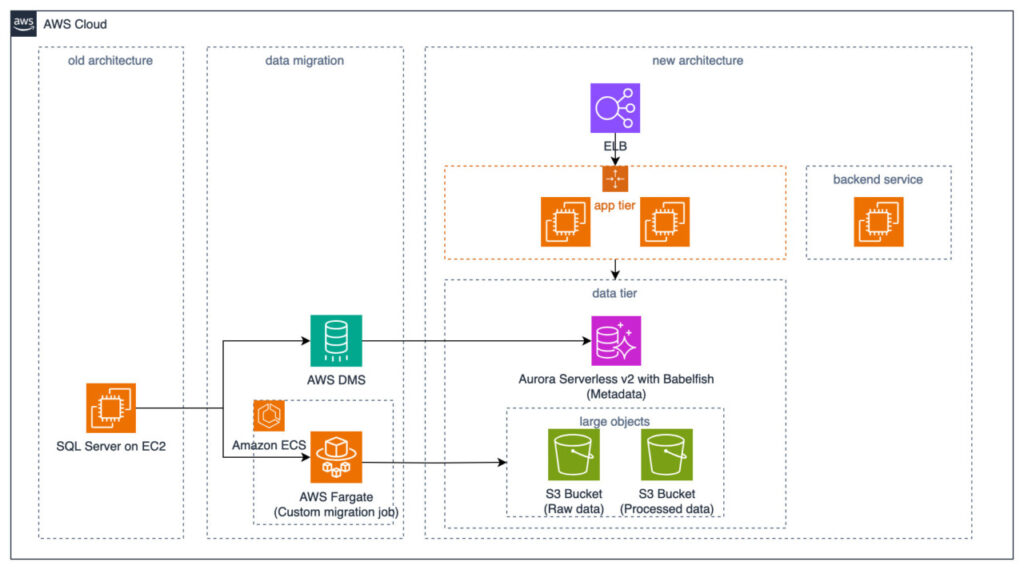

The following diagram depicts the AWS services used to build a new architecture for the Regulatory Data service. It also depicts the AWS services used for migrating data from the old persistence layer to the new persistence layer.

Data service modernization

SQL Server stores approximately 60 million files of raw source data and processed data as BLOBs. FundApps quickly landed on Amazon S3 as a storage service for the raw source data and the processed data. Amazon S3 is able to dynamically change the storage tier, replicate to other AWS Regions, and tag data with metadata. Coupled with FundApps’s familiarity with the service, Amazon S3 was a fairly simple choice.

As mentioned earlier, their metadata is highly relational, so FundApps wanted to continue storing it on a relational database, but at the same time break-free from legacy database solutions and the associated cost inefficiency. FundApps decided to use Amazon Aurora—a cost-efficient relational database management system (RDBMS) built for the cloud that provides enterprise-grade performance and availability.

Previously, FundApps had to overprovision resources to make sure that the persistence layer could handle peak load without performance degradation; this resulted in a lot of waste and cost inefficiency. The migration to Aurora allowed FundApps to use the serverless feature that provides an on-demand auto scaling configuration, where the database automatically scales capacity up or down based on the application’s needs. Additionally, FundApps no longer needed to undertake a majority of the traditional undifferentiated maintenance work associated with relational databases. This means engineers have more time to invest in building valuable new products.

The service is written in the F# framework, one of the old and complex components of the original environment. Therefore, rewriting the code to work with a cloud-centered database service creates higher developer burden and risk. To address this challenge, FundApps decided to use Babelfish for Aurora PostgreSQL, a feature for Amazon Aurora PostgreSQL-Compatible Edition that enables Aurora to understand commands from applications written for SQL Server.

The Babelfish Compass tool helped them generate compatibility assessment reports, which identified 90% of the SQL commands were compatible with Babelfish for Aurora PostgreSQL. FundApps addressed the remaining commands with simple workarounds, completing the migration within weeks.

With Babelfish, FundApps did not have to rewrite large portions of the application and change their current database connectivity drivers, which would have been required for a traditional SQL migration. Using this approach, the team completed the necessary code changes in just 1 week, compared to the 3 months that a SQL dialect conversion would have taken—approximately a 13-fold reduction in effort. This acceleration allowed the team to focus their time on new product innovation instead of the migration.

Data migration approach

FundApps used AWS Database Migration Service (AWS DMS) to migrate metadata from SQL Server to Aurora and Babelfish. AWS DMS did all the heavy lifting, allowing FundApps to run 10 migration tasks to read 10 million rows each from the SQL Server database into the new Aurora Serverless cluster. Using multiple AWS DMS tasks allowed for different settings on each task, customized for the needs of the table and thereby optimizing performance and reliability.

FundApps had specific partitioning and file naming requirements for the raw and processed data migrating to Amazon S3. Additionally, the URLs of the S3 objects had to be persisted in Aurora. To meet these requirements, FundApps developed custom code to handle this part of the data migration.

FundApps used Amazon Elastic Container Service (Amazon ECS), a fully managed container orchestration service, to run the migration custom code as a container. They used AWS Fargate, a serverless compute service for containers, for hosting the container, and this approach freed FundApps from managing the underlying container infrastructure. The application was then updated to read from Amazon S3.

Solution benefits

The new architecture for the Regulatory Data service and the data migration approach delivered several benefits:Â

- Streamlined migration and reduced risk – By using Compass assessment and Babelfish for Aurora PostgreSQL, the migration from SQL Server to Aurora PostgreSQL-Compatible was assessed and accomplished with minimal changes to the existing code base. This allowed most SQL scripts to remain intact, significantly reducing migration efforts and risk.

- Auto scaling – The adoption of Aurora Serverless v2 and Fargate enabled the solution to automatically scale up and down based on fluctuating workloads. This dynamic scaling capability optimized resource utilization, operational effort, and cost-efficiency.

- Enhanced price-performance efficiency – The solution delivered improved price-performance efficiency through several key factors:

- Cloud-based databases – Migrating from a traditional on-premises relational database to cloud-based solutions like Aurora PostgreSQL-Compatible provided the full manageability, availability, and performance of a commercial-grade database at a significantly lower cost.

- Optimized data storage – Offloading large BLOB data from the relational database to the more scalable and cost-effective Amazon S3 further enhanced the overall efficiency.

- Reduced maintenance overhead – By using fully managed AWS services, the solution offloaded the burden of database management and maintenance to AWS. It allowed the organization to focus on core business activities and innovation rather than infrastructure upkeep.

Conclusion

The migration from a traditional, proprietary database to an Aurora PostgreSQL database has yielded impressive operational improvements. By taking advantage of the elasticity and autoscaling capabilities of Aurora Serverless, FundApps was able to achieve significant cost optimizations, scalability enhancements, and performance improvements for their workloads. Additionally, by offloading large raw and processed files to Amazon S3, where access patterns didn’t require a relational database engine, FundApps was able to reduce the load on the relational database and enhance cost-efficiency. The use of Babelfish proved invaluable; it significantly reduced the need for changes in the application layer, streamlining the overall migration process. The transition to the Aurora PostgreSQL database, combined with the strategic use of complementary AWS services and tools, has delivered tangible operational excellence for FundApps with Babelfish and Aurora PostgreSQL-Compatible.

We welcome any questions, comments, or suggestions you may have regarding this successful migration and modernization journey.

About the authors

Elliot Greenwood is a Senior Software Engineer at FundApps with experience in distributed systems, data processing, and architecture design. At FundApps, he helps push forward the capabilities of their data processing platforms to support the needs of some of the largest financial institutions. Outside of work, Elliot is an avid board game player and puzzle solver.

Elliot Greenwood is a Senior Software Engineer at FundApps with experience in distributed systems, data processing, and architecture design. At FundApps, he helps push forward the capabilities of their data processing platforms to support the needs of some of the largest financial institutions. Outside of work, Elliot is an avid board game player and puzzle solver.

Islam Mahgoub is a Senior Solutions Architect at AWS with over 15 years of experience in application, integration, and technology architecture. At AWS, he helps customers build new cloud-centered solutions and modernize their legacy applications using AWS services. Outside of work, Islam enjoys walking, watching movies, and listening to music.

Islam Mahgoub is a Senior Solutions Architect at AWS with over 15 years of experience in application, integration, and technology architecture. At AWS, he helps customers build new cloud-centered solutions and modernize their legacy applications using AWS services. Outside of work, Islam enjoys walking, watching movies, and listening to music.

Raj Vaidyanath is a Principal WW Specialist for AWS Data and AI. He has over 20 years of experience across systems software and hardware technologies, product management, and business development. He helps customers create long-term value for their business, enabling customer success across modernization, Amazon Aurora, and generative AI vector database initiatives.

Raj Vaidyanath is a Principal WW Specialist for AWS Data and AI. He has over 20 years of experience across systems software and hardware technologies, product management, and business development. He helps customers create long-term value for their business, enabling customer success across modernization, Amazon Aurora, and generative AI vector database initiatives.

Shayon Sanyal is a Principal WW Specialist Solutions Architect for Data and AI and a Subject Matter Expert for Amazon’s flagship relational database, Amazon Aurora. He has over 15 years of experience managing relational databases and analytics workloads. Shayon’s relentless dedication to customer success allows him to help customers design scalable, secure, and robust cloud-based architectures. Shayon also helps service teams with the design and delivery of pioneering features, such as generative AI.

Shayon Sanyal is a Principal WW Specialist Solutions Architect for Data and AI and a Subject Matter Expert for Amazon’s flagship relational database, Amazon Aurora. He has over 15 years of experience managing relational databases and analytics workloads. Shayon’s relentless dedication to customer success allows him to help customers design scalable, secure, and robust cloud-based architectures. Shayon also helps service teams with the design and delivery of pioneering features, such as generative AI.

Source: Read More