TUI Group is one of the world’s leading global tourism services, providing 21 million customers with an unmatched holiday experience in 180 regions. TUI Group covers the end-to-end tourism chain with over 400 owned hotels, 16 cruise ships, 1,200 travel agencies, and 5 airlines covering all major holiday destinations around the globe. At TUI, crafting high-quality content is a crucial component of its promotional strategy.

The TUI content teams are tasked with producing high-quality content for its websites, including product details, hotel information, and travel guides, often using descriptions written by hotel and third-party partners. This content needs to adhere to TUI’s tone of voice, which is essential to communicating the brand’s distinct personality. But as its portfolio expands with more hotels and offerings, scaling content creation has proven challenging. This presents an opportunity to augment and automate the existing content creation process using generative AI.

In this post, we discuss how we used Amazon SageMaker and Amazon Bedrock to build a content generator that rewrites marketing content following specific brand and style guidelines. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI. Amazon SageMaker helps data scientists and machine learning (ML) engineers build FMs from scratch, evaluate and customize FMs with advanced techniques, and deploy FMs with fine-grain controls for generative AI use cases that have stringent requirements on accuracy, latency, and cost.

Through experimentation, we found that a two-phase approach worked best to make sure that the output aligned with TUI’s tone of voice requirements. The first phase fine-tuned a smaller large language model (LLM) on a large corpus of data. The second phase used a different LLM for post-processing. Fine-tuning on static data lets us generate content that mimics the TUI brand voice, which we could not capture through prompt engineering. Employing a second model with few-shot examples helped verify that the output adhered to specific formatting and grammatical rules. This second phase uses a more dynamic dataset, which we can use to adjust the output quickly in the future for different brand requirements. Overall, this approach resulted in higher quality content and allowed TUI to produce it at a higher velocity.

Solution overview

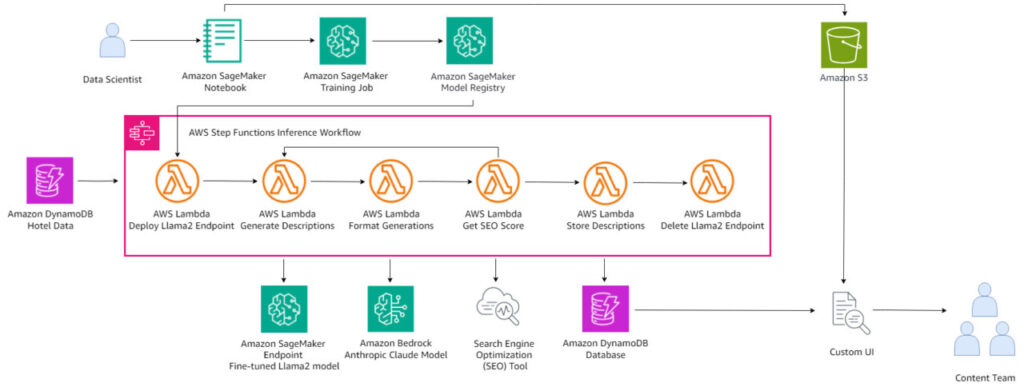

The architecture consists of a few key components:

- LLMs – We evaluated different approaches and found that a two-model solution performed the best. This consists of a fine-tuned Meta Llama 2 model to generate a description for the given hotel and Anthropic’s Claude 2 model to reformat its output. Fine-tuning and hosting the Meta Llama 2 model was done on Amazon SageMaker, and Anthropic’s Claude 2 was consumed from Amazon Bedrock through API calls.

- Orchestration – We created a state machine using AWS Step Functions to make calls in a batch format to the two LLMs and fetch the search engine optimization (SEO) score for the generated content from a third-party API. If the SEO content score is above a defined threshold (80%), the generated content is stored in an Amazon DynamoDB table and can later be reviewed by the content team directly in the front-end UI. Through this process, we maintain and monitor content quality at scale.

- Human-in-the-loop feedback – We developed a custom React front-end application to gather feedback from the content team to facilitate continuous improvement and future model fine-tuning. You can use the feedback to fine-tune a base model on SageMaker using reinforcement learning from human feedback (RLHF) to improve performance.

The preceding diagram shows the high-level architecture of the solution.
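The orchestration step described in the component list can be sketched as a plain Python function. This is a minimal illustration only, not the actual Step Functions state machine: the four callables are hypothetical stand-ins for the SageMaker endpoint, the Amazon Bedrock call, the third-party SEO API, and the DynamoDB write, and the behavior for content at or below the threshold (it is simply skipped) is an assumption, because the post does not say what happens to it.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# The post states that content is stored when its SEO score is above 80%.
SEO_THRESHOLD = 80.0


@dataclass
class GeneratedContent:
    hotel_id: str
    description: str
    seo_score: float


def process_hotel(
    hotel_id: str,
    source_text: str,
    generate: Callable[[str], str],             # hypothetical: fine-tuned Llama 2 endpoint
    refine: Callable[[str], str],               # hypothetical: Claude 2 reformatting call
    score_seo: Callable[[str], float],          # hypothetical: third-party SEO API
    store: Callable[[GeneratedContent], None],  # hypothetical: DynamoDB write
) -> Optional[GeneratedContent]:
    """Run one hotel through both models and store the result if it passes the SEO gate."""
    draft = generate(source_text)
    final = refine(draft)
    score = score_seo(final)
    if score <= SEO_THRESHOLD:
        # Assumption: content that does not clear the threshold is not stored.
        return None
    item = GeneratedContent(hotel_id, final, score)
    store(item)
    return item
```

Passing the four services in as callables keeps the gate logic testable without any AWS access; in a batch run, the function would be applied once per hotel.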

Prompt engineering

We started by crafting prompts that include the most common issues identified by the TUI content team, including tone of voice, accuracy, length, and grammar. We provided the LLM with a few examples of curated content within the context window. Although the generated output followed the guidance, the writing style didn’t meet TUI’s tone of voice requirements.

Example prompt:

Here is an example of the results we received.

| Original text | Generated text |
| --- | --- |
| Boutique interiors and a great location combine at this small but perfectly formed hotel. It may be small but this 15-room hotel packs a punch with its interior design. The immaculate pool is set in an open atrium area. And there’s funky cocktail bar and lounge, too. The location is every bit as impressive as the styling. It’s about a 10-minute walk to the beach and less than half that to the centre of Thassos Town. | This petite hotel brings boutique style to Thassos Town. Its immaculate pool and funky cocktail bar create an oasis in the heart of the action. The beach is just a 10-minute walk away, so you can easily split your time between sunbathing and sightseeing. With only 15 rooms, the experience feels intimate yet worldly. The interiors marry modern design with traditional touches for a unique look. And the central location means popular attractions like the Archaeological Museum and shopping street are right on your doorstep. |

To evaluate these results, we generated a dataset of 150 hotel descriptions using two different prompt versions and asked our business teams to review it. They flagged 98% of the output as having significant issues. Anthropic’s Claude 2 struggled to adhere to TUI’s tone of voice and branding guidelines, frequently employed abbreviations, and favored American English over British English. It also failed to follow the SEO guidelines provided and sometimes generated false information regarding hotel facilities and locations. The following image shows a list of these challenges and how the LLM handled them. Of the six challenges, the LLM met only one.

Fine-tuning Llama 2 using PEFT on Amazon SageMaker JumpStart

These issues and poor feedback led us to conclude that prompt engineering alone would not adequately address the problem. As a result, we decided to pursue an alternative approach: fine-tuning a smaller large language model to rewrite the text in accordance with TUI’s tone of voice. We used a curated set of hotel descriptions written by TUI copywriters so that the model would have better alignment with our guidelines.

We selected Meta Llama 2, one of the top open source LLMs available at the time, through Amazon SageMaker JumpStart, and chose the 13B parameter version for parameter-efficient fine-tuning (PEFT), specifically quantized low-rank adaptation (QLoRA). This technique quantizes the pre-trained model to 4 bits and adds small low-rank adapters, which are the only weights trained during fine-tuning. We fine-tuned the model on a single ml.g5.4xlarge instance in about 20 hours using a relatively small dataset of around 4,500 hotels. We also tested the Llama 2 7B and 70B models. We found that the 7B model didn’t perform well enough, and the 70B model had much higher costs without a significant improvement.
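To give a feel for why QLoRA makes a 13B model trainable on a single GPU instance, the following back-of-the-envelope sketch counts the adapter weights and estimates the size of the quantized base weights. The hidden size (5120) and layer count (40) are the published Llama 2 13B dimensions; the adapter rank of 8 and the choice to adapt two attention projections per layer are assumptions for illustration, because the post does not state the settings that were used.

```python
def lora_trainable_params(hidden_size: int, num_layers: int,
                          rank: int, adapted_matrices_per_layer: int) -> int:
    """Count the weights added by low-rank adapters on square projection matrices.

    Each adapted (hidden x hidden) matrix gets two small factors:
    A with shape (rank x hidden) and B with shape (hidden x rank).
    """
    per_matrix = rank * (hidden_size + hidden_size)
    return per_matrix * adapted_matrices_per_layer * num_layers


def quantized_weight_gib(num_params: float, bits: int) -> float:
    """Approximate storage for the base weights at a given bit width, in GiB."""
    return num_params * bits / 8 / 2**30


# Llama 2 13B dimensions; rank=8 and two adapted matrices per layer are assumed.
adapter_params = lora_trainable_params(
    hidden_size=5120, num_layers=40, rank=8, adapted_matrices_per_layer=2
)
print(f"trainable adapter weights: {adapter_params:,}")  # 6,553,600
print(f"share of 13B base weights: {adapter_params / 13e9:.4%}")
print(f"base weights at 4 bits:  {quantized_weight_gib(13e9, 4):.1f} GiB")
print(f"base weights at 16 bits: {quantized_weight_gib(13e9, 16):.1f} GiB")
```

Under these assumptions, the trainable adapters are a tiny fraction of the base model, and the 4-bit base weights take roughly a quarter of the space of their 16-bit form. The estimate ignores activations, optimizer state, and quantization overhead, so it is a lower bound on real memory use.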

We used common natural language processing (NLP) evaluation metrics, such as perplexity, for evaluation and monitoring during training, and established daily feedback loops with the content team to refine the test set. The output from the fine-tuned Meta Llama 2 13B model effectively mimicked the TUI tone of voice and adhered to most SEO guidelines, even those not specified in the prompt.
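For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood the model assigns to each token of a held-out text; lower values mean the model finds the text less surprising. The following is a minimal sketch of that definition, assuming per-token natural-log probabilities are already available. It is not the evaluation code used in the project.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities of a held-out text."""
    if not token_log_probs:
        raise ValueError("at least one token log-probability is required")
    avg_negative_log_likelihood = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_negative_log_likelihood)


# A model that gives every token probability 0.25 is as uncertain as a
# uniform choice among four options, so its perplexity is about 4.
print(perplexity([math.log(0.25)] * 4))
```

Tracking this value on a validation set during training gives an early signal of whether the fine-tuned model is fitting the copywriters' style or starting to overfit.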

For more information, refer to Fine-tune Llama 2 for text generation on Amazon SageMaker Jumpstart.

| Original text | Generated text |
| --- | --- |
| The NH München City Süd hotel, formerly known as NH München am Ring, is located southeast of Munich. The location is ideal for reaching the highway towards Salzburg and provides good access to the airport and Exhibition Center. You can also get to the city center in 20 minutes by hopping on the S-Bahn at the Karl-Preis-Platz station. The peaceful residential location offers an excellent opportunity to relax in one of the 163 rooms. These are decorated in white with red accents. Rooms feature free Wi-Fi and electronic safes that are large enough for your laptop. If you need to work, there are spacious desks in every room and apartments with seperate living rooms are available for longer term guests. The suites also come with separate living rooms and foldaway beds are available upon request. The breakfast will get you ready for a busy day with a large spread of cold cuts, cheeses, fresh pastries, and fruit. These come accompanied with freshly brewed coffee, tea, and fresh fruit juices. For lunch and dinner, you will find many restaurant options in close proximity to the hotel. There is a sauna for relaxation and you can park your car in the garage. | Make yourself at home in Munich at the München City Süd. This former NH München am Ring sits just southeast of the Bavarian capital, putting both the highway to Salzburg and the airport within easy reach. The S-Bahn station Karl-Preis-Platz is a quick walk away too, so you can be in the city center in just 20 minutes. The neighborhood here is peaceful, giving you the chance to properly relax during your stay. The hotel’s 163 rooms are decorated in calming white and red hues. Free Wi-Fi and laptop-sized safes come as standard, while spacious desks make working easy. For longer visits, apartments with separate living areas are available. The suites take things up a notch with their own living spaces and foldaway beds on request. Fuel up each morning with a vast breakfast spread of cold cuts, cheeses, pastries and fruit. All washed down with coffee, tea and fresh juices. You’ll find many dining options close by for other meals. After a busy day, unwind in the sauna or park up in the garage. |

The following image shows a list of the challenges and how the LLM handled them. Of the six challenges, the LLM met four.

Integrating Anthropic’s Claude 2 for further improvement

To further improve the output, we introduced Anthropic’s Claude 2 using Amazon Bedrock as a final refinement step. This included converting American spelling to British spelling, writing numbers one through nine in words and larger numbers in digits, correcting typos and capitalization errors, minimizing the use of banned words, incorporating essential TUI branding words, and adding missing hotel information. We also implemented a feedback mechanism in the UI to collect data for ongoing fine-tuning in production. By using Anthropic’s Claude 2, we make sure that the final output applies the remaining formatting rules.
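A refinement prompt of this kind can be assembled from a list of rules and a handful of before/after pairs. The sketch below is a hypothetical illustration, not TUI's actual prompt: the rule wording, the XML-style tag names, and the function name are all assumptions. It uses the `Human:` / `Assistant:` turn format that the Claude 2 text-completions interface expects.

```python
from typing import Sequence, Tuple

# Hypothetical rule wording, paraphrased from the refinement steps listed above.
DEFAULT_RULES: Tuple[str, ...] = (
    "Use British English spelling.",
    "Write the numbers one to nine in words and larger numbers in digits.",
    "Correct typos and capitalisation errors.",
)


def build_refinement_prompt(
    draft: str,
    examples: Sequence[Tuple[str, str]],
    rules: Sequence[str] = DEFAULT_RULES,
) -> str:
    """Build a few-shot prompt asking the model to reformat a generated draft.

    `examples` holds (before, after) pairs that show the rules being applied.
    """
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    shots = "\n\n".join(
        f"<example>\n<before>{before}</before>\n<after>{after}</after>\n</example>"
        for before, after in examples
    )
    return (
        "\n\nHuman: Rewrite the hotel description inside <draft> so that it "
        "follows these rules:\n"
        f"{rule_lines}\n\n"
        f"{shots}\n\n"
        f"<draft>{draft}</draft>\n\n"
        "Assistant:"
    )


prompt = build_refinement_prompt(
    draft="The hotel has 3 pools and a colorful lobby.",
    examples=[("There are 2 bars.", "There are two bars.")],
)
print(prompt)
```

With Amazon Bedrock, a string like this would typically be sent as the `prompt` field of the JSON request body, alongside `max_tokens_to_sample`, when invoking Claude 2. Because the rules and examples live in data rather than in model weights, they can be changed per brand without retraining.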

| Original text | Generated text |
| --- | --- |
| As its name suggests, Vienna Sporthotel has loads lined up for sports fans. There are 10 tennis courts and four golf simulators here, plus, you can book a session with a coach to brush up on your skills. On top of that, there’s an outdoor pool, a well-stocked fitness room and a big sauna. To fuel up for all that exercise, you can grab a plate at the breakfast buffet. Location-wise, this place is set up for sightseeing. Hop on a bus and you can reach the likes of The Hofburg, Belvedere Palace and Karlskirche in less than half an hour. You’re only a 10-minute stroll away from the nearest restaurants and cafes, too. | With a name like Vienna Sporthotel, you can expect this place to serve up plenty for fans of sport and activity. Brush up your skills on one of 10 tennis courts or in the four golf simulators, then recharge in the outdoor pool or well-equipped gym. There’s even a coach on hand to help perfect your technique. When you’re ready to refuel, tuck into the breakfast buffet. Then get set for sightseeing – a bus ride whisks you to top Vienna attractions like The Hofburg, Belvedere Palace and Karlskirche in under 30 minutes. You’re also just a short stroll from local eateries and coffee shops. |

The following image shows a list of the challenges and how the LLM handled them. The LLM met all six challenges.

Key outcomes

The final architecture consists of a fine-tuned Meta Llama 2 13B model and Anthropic’s Claude 2, using the strengths of each model. In a blind test on a sample of 50 hotels, the generated descriptions were rated higher than the human-written ones 75% of the time. We also integrated a third-party API to calculate SEO scores for the generated content, and we observed up to a 4% uplift in SEO scores compared to human-written descriptions. Most significantly, the content generation process is now five times faster, enhancing our team’s productivity without compromising quality or consistency. We can generate a vast number of hotel descriptions in just a few hours, a task that previously took months.

Takeaways

Moving forward, we plan to explore how this technology can address current inefficiencies and quality gaps, especially for hotels that our team hasn’t had the capacity to curate. We plan to expand this solution to more brands and regions within the TUI portfolio, including producing content in various languages and tailoring it to meet the specific needs of different audiences.

Throughout this project, we learned a few valuable lessons:

- Few-shot prompting is cost-effective and sufficient when you have limited examples and specific guidelines for responses. Fine-tuning can help significantly improve model performance when you need to tailor content to match a brand’s tone of voice, but can be resource intensive and is based on static data sources that can get outdated.

- Fine-tuning the Llama 2 70B model was much more expensive than fine-tuning Llama 2 13B and did not result in a significant improvement.

- Incorporating human feedback and maintaining a human-in-the-loop approach is essential for protecting brand integrity and continuously improving the solution. The collaboration between TUI engineering, content, and SEO teams was crucial to the success of this project.

Although Meta Llama 2 and Anthropic’s Claude 2 were the latest state-of-the-art models available at the time of our experiment, since then we have seen the launch of Meta Llama 3 and Anthropic’s Claude 3.5, which we expect can significantly improve the quality of our outputs. Amazon Bedrock also now supports fine-tuning for Meta Llama 2, Cohere Command Light, and Amazon Titan models, making it simpler and faster to test models without managing infrastructure.

About the Authors

Nikolaos Zavitsanos is a Data Scientist at TUI, specialized in developing customer-facing Generative AI applications using AWS services. With a strong background in Computer Science and Artificial Intelligence, he leverages advanced technologies to enhance user experiences and drive innovation. Outside of work, Nikolaos plays water polo and competes at a national level. Connect with Nikolaos on LinkedIn.

Hin Yee Liu is a Senior Prototyping Engagement Manager at Amazon Web Services. She helps AWS customers to bring their big ideas to life and accelerate the adoption of emerging technologies. Hin Yee works closely with customer stakeholders to identify, shape and deliver impactful use cases leveraging Generative AI, AI/ML, Big Data, and Serverless technologies using agile methodologies. In her free time, she enjoys knitting, travelling and strength training. Connect with Hin Yee on LinkedIn.