TL;DR: LLM web agents are designed to predict a sequence of actions to complete a user-specified task. Most existing agents are built on top of general-purpose, proprietary models like GPT-4 and rely heavily on prompt engineering. We demonstrate that fine-tuning open-source LLMs using a large set of high-quality, real-world workflow data can improve performance while using a smaller LLM backbone, which can reduce serving costs.

As large language models (LLMs) continue to advance, a pivotal question arises when applying them to specialized tasks: should we fine-tune the model or rely on prompting with in-context examples? While prompting is straightforward and widely adopted, our recent work demonstrates that fine-tuning with in-domain data can significantly enhance performance over prompting in web navigation. In this blog post, we introduce the paper “ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data”, where we show that fine-tuning a 7B open-source LLM on large-scale, high-quality, real-world web workflow data can surpass closed-source models such as GPT-4 and o1-preview on web navigation tasks. This result underscores the potential of specialized fine-tuning in tackling complex reasoning tasks.

Background: LLM Web Agents and the Need for Fine-Tuning

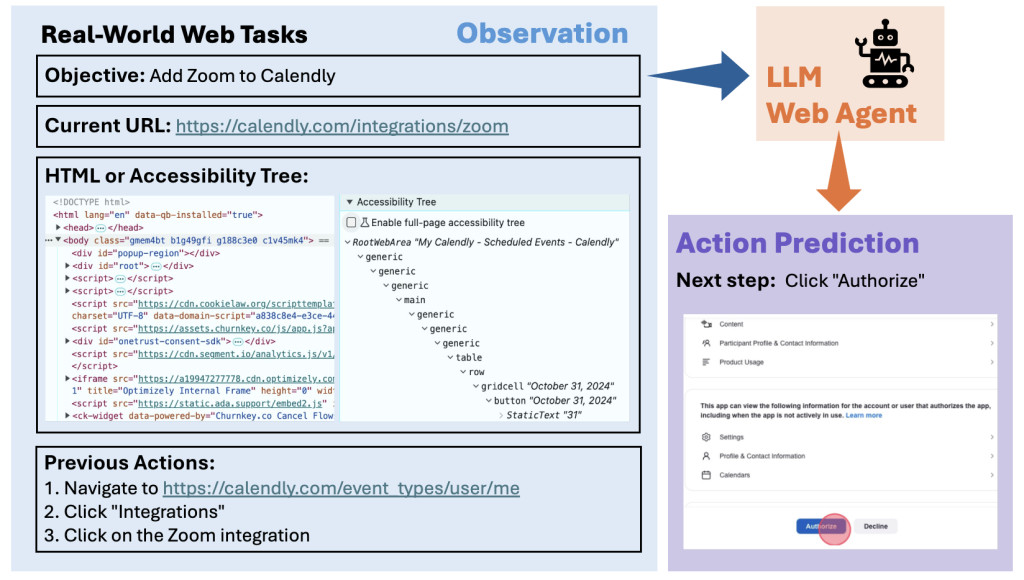

LLM-powered automated agents have emerged as a significant research domain, with “web agents” being one popular direction. These agents can navigate websites to solve real-world tasks. To do so, the user first defines a high-level objective. The agent then outputs step-by-step actions based on the user’s goal, current observation, and interaction history. For text-only agents, the observation typically includes the website’s URL, the webpage itself, and possibly the accessibility tree used by assistive technologies (see the introduction figure). The agent can then perform actions such as keyboard and mouse operations.
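To make the loop concrete, here is a minimal sketch of how an agent of this kind might be driven. All names (`Observation`, `Action`, `run_agent`, and the `observe`/`execute` callbacks) are hypothetical and chosen for illustration; they are not taken from the paper or from any particular framework.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    url: str   # current page URL
    html: str  # page content as seen by a text-only agent


@dataclass
class Action:
    kind: str         # e.g. "click", "type", or "stop" (hypothetical vocabulary)
    target: str = ""  # hypothetical identifier of the element acted on
    text: str = ""    # text to type, if any


# A policy maps (objective, current observation, history) to the next action.
Policy = Callable[[str, Observation, List[Action]], Action]


def run_agent(
    objective: str,
    policy: Policy,
    observe: Callable[[], Observation],
    execute: Callable[[Action], None],
    max_steps: int = 20,
) -> List[Action]:
    """Ask the policy for one action at a time until it stops or runs out of steps."""
    history: List[Action] = []
    for _ in range(max_steps):
        obs = observe()
        action = policy(objective, obs, history)
        if action.kind == "stop":
            break
        execute(action)
        history.append(action)
    return history
```

In a prompting-based agent, `policy` would wrap a call to a general-purpose LLM; in a fine-tuned agent, it would wrap the specialized model.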

Existing web agents rely heavily on prompting general-purpose, proprietary LLMs like GPT-4. To leverage LLMs for web navigation, previous research explores various prompting techniques:

- Better planning ability: Several studies employ advanced search strategies to enable agents to plan ahead and select the optimal action in the long term (e.g., SteP, Tree Search).

- Better reasoning ability: Techniques like self-feedback and iterative refinement allow agents to improve their own actions iteratively (e.g., AdaPlanner, Bagel). Incorporating external evaluators provides an additional layer of oversight (e.g., Agent Eval & Refine).

- Memory usage: By employing memory databases, agents can retrieve past trajectories to use as demonstrations for current tasks. This helps agents learn from previous interactions (e.g., AWM, Synapse).

While these approaches are effective, the resulting agents perform significantly below human levels on standard benchmarks, such as Mind2Web and WebArena. This occurs because of the following challenges:

- Lack of web-specific knowledge: General-purpose LLMs are not specifically trained to interpret web-specific languages like HTML.

- Limited planning and exploration ability: LLMs are not developed to perform sequential reasoning over a long horizon, where the agent must remember past actions, understand the evolving state of the environment, perform active exploration, and plan several steps ahead to achieve a goal.

- Practical constraints: Reliance on proprietary models can lead to increased costs and dependency on a single provider. Real-time web interaction can require a large number of API calls. Any changes in the provider’s service terms, pricing, or availability can affect the agent’s functionality.

Fine-tuning open-source LLMs offers an appealing way to address these challenges (Figure 1). However, fine-tuning comes with its own set of important questions. For example, how can we obtain sufficient domain-specific datasets to train the model effectively? How should we formulate the input prompts and outputs to align with the pre-trained model and the web navigation tasks? Which models should we fine-tune? Addressing these questions is crucial to unlocking the full potential of open-source LLMs for web navigation.

Introducing ScribeAgent: Fine-Tuning with In-Domain Data

ScribeAgent adapts open-source LLMs to web navigation by fine-tuning them on in-domain data rather than relying on prompting-based methods. Two components make this fine-tuning successful: (1) constructing a large-scale, high-quality dataset and (2) fine-tuning LLMs to leverage this data.

Step 1: Crafting a Large-Scale, High-Quality Dataset

We collaborated with Scribe, an AI workflow documentation software that streamlines the creation of step-by-step guides for web-based tasks. Scribe allows users to record their web interactions via a browser extension, converting them into well-annotated instructions for specific business needs. See Figure 2 for an example scribe.

This collaboration provided access to a vast database of real-world, high-quality web workflows annotated by actual users. These workflows cover a variety of web domains, including social platforms like Facebook and LinkedIn; shopping sites like Amazon and Shopify; productivity tools like Notion and Calendly; and many others. Each workflow features a high-level user objective and a sequence of steps to achieve the task. Each step contains (1) the current web page’s URL, (2) raw HTML, (3) a natural language description of the action performed, (4) the type of action, like click or type, and (5) the HTML element that is the target of the action.
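As an illustration only, the five fields of a step could be represented with a record like the one below. The class and field names are our own assumptions for this sketch, not the schema used by Scribe or in the paper, and the example values are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WorkflowStep:
    url: str             # (1) the current web page's URL
    raw_html: str        # (2) raw HTML of the page
    description: str     # (3) natural language description of the action
    action_type: str     # (4) type of action, e.g. "click" or "type"
    target_element: str  # (5) HTML element that is the target of the action


@dataclass
class Workflow:
    objective: str  # high-level user objective
    steps: List[WorkflowStep]


# A made-up, single-step workflow for illustration.
example = Workflow(
    objective="Create a new page",
    steps=[
        WorkflowStep(
            url="https://example.com/workspace",
            raw_html="<html><body><button id='new-page'>New page</button></body></html>",
            description='Click "New page"',
            action_type="click",
            target_element="<button id='new-page'>New page</button>",
        )
    ],
)
```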

The raw HTML data of real-world websites can be exceedingly long, often ranging from 10K to 100K tokens, surpassing the context window of most open-source LLMs. To make the data manageable for fine-tuning, we implemented a pruning algorithm that retains essential structure and content while eliminating redundant elements. Finally, we reformat the dataset into a next-step prediction task: The input consists of the user objective, the current web page’s URL, the processed HTML, and the previous actions. The agent is expected to generate the next action based on the input. We highlight the following characteristics for the resulting dataset:

- Scale: Covers over 250 domains and 10,000 subdomains.

- Task length: Average 11 steps per task.

- Training tokens: Approximately 6 billion.

This dataset’s scale and quality are unparalleled in prior web agent research.
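The preprocessing described above can be sketched roughly as follows. This is a simplified stand-in, not the pruning algorithm from the paper: the dropped tags, the kept attributes, and the prompt layout are all assumptions made for illustration. It uses only Python's standard `html.parser` module.

```python
import html
from html.parser import HTMLParser

# Hypothetical pruning rules; the paper's actual algorithm may differ.
DROP_TAGS = {"script", "style", "svg", "noscript"}
KEEP_ATTRS = {"id", "name", "type", "href", "aria-label", "placeholder", "value"}
VOID_TAGS = {"br", "img", "input", "hr", "meta", "link"}


class Pruner(HTMLParser):
    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.out = []
        self.skip_depth = 0  # > 0 while inside a dropped subtree

    def handle_starttag(self, tag, attrs):
        if tag in DROP_TAGS:
            self.skip_depth += 1
            return
        if self.skip_depth:
            return
        kept = "".join(
            f' {k}="{html.escape(v, quote=True)}"'
            for k, v in attrs
            if k in KEEP_ATTRS and v
        )
        self.out.append(f"<{tag}{kept}>")

    def handle_endtag(self, tag):
        if tag in DROP_TAGS:
            self.skip_depth = max(0, self.skip_depth - 1)
            return
        if not self.skip_depth and tag not in VOID_TAGS:
            self.out.append(f"</{tag}>")

    def handle_data(self, data):
        text = " ".join(data.split())  # collapse whitespace
        if text and not self.skip_depth:
            self.out.append(html.escape(text, quote=False))
    # Comments are dropped because handle_comment is not overridden.


def prune_html(raw: str) -> str:
    """Keep structure, text, and a small attribute whitelist; drop the rest."""
    parser = Pruner()
    parser.feed(raw)
    parser.close()
    return "".join(parser.out)


def next_step_examples(objective, steps):
    """Turn one workflow into (input, target) pairs, one pair per step.

    `steps` is a list of dicts with hypothetical keys "url", "html", "action".
    """
    examples, previous = [], []
    for step in steps:
        past = "; ".join(previous) or "none"
        prompt = (
            f"Objective: {objective}\n"
            f"URL: {step['url']}\n"
            f"HTML: {prune_html(step['html'])}\n"
            f"Previous actions: {past}\n"
            "Next action:"
        )
        examples.append((prompt, step["action"]))
        previous.append(step["action"])
    return examples
```

Each workflow of n steps yields n training examples, and the target of each example becomes part of the action history for the next one.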

Step 2: Fine-Tuning Open-Source LLMs

After obtaining the dataset, we faced two critical decisions: which model to fine-tune and how to fine-tune it. To answer these questions, we used the dataset to run a series of ablation studies over the following factors:

- LLM backbone: Mistral, Qwen, LLaMA

- Model size: small (<10B parameters), medium (10–30B parameters), large (>30B parameters)

- Context window: 32K tokens vs. 65K tokens

- Fine-tuning method: Full fine-tuning vs. LoRA

We fine-tuned each model variant on the same training dataset and evaluated their performance on a test set. The detailed results are available in our paper and Figure 3, but the key takeaways are:

- The Qwen family significantly outperformed Mistral and LLaMA models, both before and after fine-tuning.

- Increasing the model size and context window length consistently led to improved performance.

- Full fine-tuning yields a slight performance gain over parameter-efficient fine-tuning, but it requires considerably more GPU resources, memory, and time. LoRA, by contrast, reduces computational requirements while giving up only that slight gain.

Based on the ablation study results, we develop two versions of ScribeAgent by fine-tuning open-source LLMs using LoRA:

- ScribeAgent-Small: Based on Qwen2 Instruct 7B; cost-effective and efficient for inference.

- ScribeAgent-Large: Based on Qwen2.5 Instruct 32B; superior performance in internal and external evaluations.
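To give a sense of why LoRA is cheaper, recall that it freezes a pretrained weight matrix of shape d_out × d_in and trains only a low-rank update BA, where B has shape d_out × r and A has shape r × d_in. The back-of-the-envelope calculation below compares trainable parameter counts for a single matrix. The layer size and rank are illustrative assumptions, not the configuration used in the paper.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for the low-rank factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_in + d_out)


# Illustrative values only; not taken from the paper.
d_in = d_out = 4096
rank = 16

full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
# prints: full: 16,777,216  LoRA: 131,072  ratio: 0.78%
```

Under these assumed sizes, the adapter trains under 1% of the parameters of the matrix it modifies, which is where the savings in optimizer state and gradient memory come from.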

Empirical Results: Fine-Tuned Models Surpass GPT-4-Based Agents

We evaluated ScribeAgent on three datasets: our proprietary test set, derived from the real-world workflows we collected; the text-based Mind2Web benchmark; and the interactive WebArena.

On our proprietary dataset, we observed that ScribeAgent significantly outperforms proprietary models like GPT-4o, GPT-4o mini, o1-mini, and o1-preview, showcasing the benefits of specialized fine-tuning over general-purpose LLMs (Figure 4). Notably, ScribeAgent-Small has only 7B parameters and ScribeAgent-Large has 32B parameters, neither requiring additional scaling during inference. In contrast, these proprietary baselines are typically larger and demand more computational resources at inference time, making ScribeAgent a better choice in terms of accuracy, latency, and cost. In addition, while the non-fine-tuned Qwen2 model performs extremely poorly, fine-tuning it with our dataset boosts its performance nearly sixfold, highlighting the importance of domain-specific data.

As for Mind2Web, we followed the benchmark setup and tested our agents in two settings: multi-stage QA and direct generation. The multi-stage QA setting leverages a pretrained element-ranking model to select the most likely candidate elements from the full HTML and asks the agent to choose one option from the candidate list. The direct generation setting is much more challenging and requires the agent to directly generate an action based on the full HTML. To evaluate ScribeAgent’s generalization performance, we did not fine-tune it on the Mind2Web training data, so the evaluation is zero-shot.

Our results highlight that, for multi-stage evaluation, ScribeAgent-Large achieves the best overall zero-shot performance. Its element accuracy and step success rate metrics are also competitive with the best fine-tuned baseline, HTML-T5-XL, on cross-website and cross-domain tasks. In the direct generation setting, ScribeAgent-Large outperforms all existing baselines, with step success rates 2-3 times higher than those achieved by the fine-tuned Flan-T5.

The primary failure cases of our models result from the distribution mismatch between our training data and the synthetic Mind2Web data. For instance, our agent might predict an element that is functionally identical to the ground-truth element but is a different node in the page. It also decomposes typing actions into a click followed by a typing action, whereas Mind2Web expects a single type action. These issues can be addressed by improving the evaluation procedure. After resolving these problems, we observed an average 8% increase in task success rate and element accuracy for ScribeAgent.
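One way such an evaluation adjustment could look, for the click-then-type case, is sketched below. This is an assumption about the kind of normalization involved, not the procedure used in the paper; the action dictionaries and their keys (`type`, `target`, `text`) are hypothetical.

```python
def merge_click_then_type(actions):
    """Collapse a click immediately followed by a type on the same target
    into the single type action, so both conventions compare equal."""
    merged = []
    for act in actions:
        prev = merged[-1] if merged else None
        if (
            act["type"] == "type"
            and prev is not None
            and prev["type"] == "click"
            and prev["target"] == act["target"]
        ):
            merged[-1] = act  # the preceding click only focused the field
        else:
            merged.append(act)
    return merged
```

Clicks on other elements are left untouched, so only the redundant focusing click is removed before predictions are compared with the ground truth.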

Evaluation on WebArena is more complicated. First, WebArena expects actions specified in the accessibility tree format, whereas ScribeAgent outputs actions in HTML format. Second, the interactive nature of WebArena requires the agent to decide when to terminate the task. To address these challenges, we developed a multi-agent system that leverages GPT-4o for action translation and task completeness evaluation.
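The overall control flow of such a system might resemble the sketch below. It is schematic: every callable is a placeholder we introduce for illustration (`propose` standing in for ScribeAgent, `translate` and `is_complete` standing in for the GPT-4o-backed components), and the actual prompts and interfaces are described in the paper, not here.

```python
def run_episode(objective, propose, translate, is_complete, env_step, observe, max_steps=30):
    """Drive one interactive episode.

    propose(objective, obs, history) -> action in the agent's own (HTML) format
    translate(action, obs)           -> action in the environment's format
    is_complete(objective, obs, history) -> True when the task looks finished
    env_step(env_action)             -> applies the action to the environment
    observe()                        -> current observation
    """
    history = []
    for _ in range(max_steps):
        obs = observe()
        if is_complete(objective, obs, history):
            return True, history
        html_action = propose(objective, obs, history)
        env_step(translate(html_action, obs))
        history.append(html_action)
    return False, history  # step budget exhausted without a completion signal
```

Keeping translation and termination outside the fine-tuned model means the model itself can keep predicting next actions in the format it was trained on.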

Compared to existing text-only agents, ScribeAgent augmented with GPT-4o achieved the highest task success rate across 4 of 5 domains in WebArena and improved the previous best total success rate by 7.3% (Figure 6). In domains more aligned with our training data, such as Reddit and GitLab, ScribeAgent demonstrated stronger generalization capabilities and higher success rates. We refer the readers to our paper for more experiment details on all three benchmarks.

Conclusion

In summary, ScribeAgent demonstrates that fine-tuning open-source LLMs with high-quality, in-domain data can outperform even the most advanced prompting methods. While our results are promising, there are limitations to consider. ScribeAgent was developed primarily to showcase the effectiveness of fine-tuning and does not incorporate external reasoning and planning modules; integrating these techniques could further improve its performance. Additionally, expanding ScribeAgent’s capabilities to handle multi-modal inputs, such as screenshots, can make it more versatile and robust in real-world web environments.

To learn more about ScribeAgent and explore our detailed findings, we invite you to read our full paper. The project’s progress, including future enhancements and updates, can be followed on our GitHub repository. Stay tuned for upcoming model releases!
