Today, we are excited to announce that Mistral-NeMo-Base-2407 and Mistral-NeMo-Instruct-2407, 12-billion-parameter large language models from Mistral AI that excel at text generation, are available for customers through Amazon SageMaker JumpStart. You can try these models with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms and models that can be deployed with one click for running inference. In this post, we walk through how to discover, deploy, and use the Mistral-NeMo-Instruct-2407 and Mistral-NeMo-Base-2407 models for a variety of real-world use cases.

Mistral-NeMo-Instruct-2407 and Mistral-NeMo-Base-2407 overview

Mistral NeMo, a powerful 12B parameter model developed through collaboration between Mistral AI and NVIDIA and released under the Apache 2.0 license, is now available on SageMaker JumpStart. This model represents a significant advancement in multilingual AI capabilities and accessibility.

Key features and capabilities

Mistral NeMo features a 128k token context window, enabling processing of extensive long-form content. The model demonstrates strong performance in reasoning, world knowledge, and coding accuracy. Both pre-trained base and instruction-tuned checkpoints are available under the Apache 2.0 license, making it accessible for researchers and enterprises. The model’s quantization-aware training facilitates optimal FP8 inference performance without compromising quality.

Multilingual support

Mistral NeMo is designed for global applications, with strong performance across multiple languages including English, French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi. This multilingual capability, combined with built-in function calling and an extensive context window, helps make advanced AI more accessible across diverse linguistic and cultural landscapes.

Tekken: Advanced tokenization

The model uses Tekken, a tokenizer based on tiktoken that was trained on over 100 languages. According to Mistral, Tekken compresses natural language text and source code more efficiently than the SentencePiece tokenizer used in earlier Mistral models.

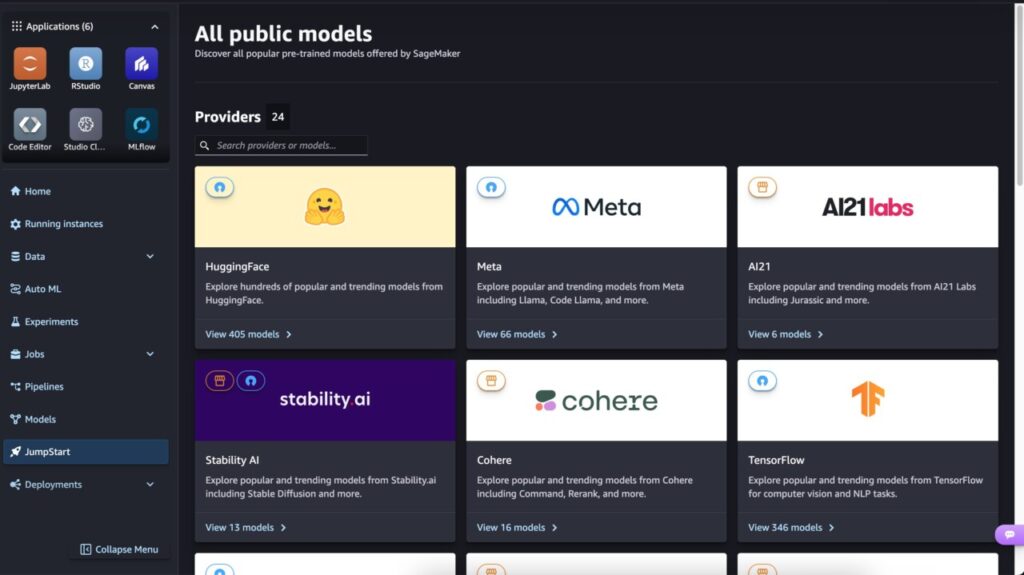

SageMaker JumpStart overview

SageMaker JumpStart is a fully managed service that offers state-of-the-art foundation models for various use cases such as content writing, code generation, question answering, copywriting, summarization, classification, and information retrieval. It provides a collection of pre-trained models that you can deploy quickly, accelerating the development and deployment of ML applications. One of the key components of SageMaker JumpStart is the Model Hub, which offers a vast catalog of pre-trained models, such as DBRX, for a variety of tasks.

You can now discover and deploy both Mistral NeMo models with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK, enabling you to derive model performance and machine learning operations (MLOps) controls with Amazon SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your virtual private cloud (VPC) controls, helping to support data security.

Prerequisites

To try out both NeMo models in SageMaker JumpStart, you will need the following prerequisites:

- An AWS account that will contain all your AWS resources.

- An AWS Identity and Access Management (IAM) role to access SageMaker. To learn more about how IAM works with SageMaker, see Identity and Access Management for Amazon SageMaker.

- Access to Amazon SageMaker Studio, a SageMaker notebook instance, or an interactive development environment (IDE) such as PyCharm or Visual Studio Code. We recommend using SageMaker Studio for straightforward deployment and inference.

- Access to accelerated instances (GPUs) for hosting the model.

- This model requires an ml.g6.12xlarge instance. SageMaker JumpStart provides a simplified way to access and deploy over 100 different open source and third-party foundation models. To launch an endpoint to host Mistral NeMo from SageMaker JumpStart, you may need to request a service quota increase to access an ml.g6.12xlarge instance for endpoint usage. You can request service quota increases through the console, AWS Command Line Interface (AWS CLI), or API to allow access to those additional resources.
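For the API route, the following is a minimal sketch using boto3. It assumes the Service Quotas client with the `sagemaker` service code, and that the quota name contains "ml.g6.12xlarge for endpoint usage" (the wording used elsewhere in this post). The helper names `find_quota` and `request_endpoint_quota` are hypothetical; confirm the exact quota name in your account before requesting an increase.

```python
def find_quota(quotas, name_fragment):
    """Return the first quota dict whose QuotaName contains name_fragment, else None."""
    fragment = name_fragment.lower()
    for quota in quotas:
        if fragment in quota.get("QuotaName", "").lower():
            return quota
    return None


def request_endpoint_quota(instance_type="ml.g6.12xlarge", desired_value=1.0):
    """Look up the endpoint-usage quota for instance_type and request an increase if needed."""
    import boto3  # imported lazily so find_quota works without AWS access

    client = boto3.client("service-quotas")

    # Collect every SageMaker quota; the list spans several pages.
    quotas = []
    paginator = client.get_paginator("list_service_quotas")
    for page in paginator.paginate(ServiceCode="sagemaker"):
        quotas.extend(page["Quotas"])

    # Assumed quota naming; adjust the fragment if your account differs.
    quota = find_quota(quotas, f"{instance_type} for endpoint usage")
    if quota is None:
        raise LookupError(f"No endpoint-usage quota found for {instance_type}")
    if quota["Value"] >= desired_value:
        return quota  # the current limit already covers the request

    return client.request_service_quota_increase(
        ServiceCode="sagemaker",
        QuotaCode=quota["QuotaCode"],
        DesiredValue=desired_value,
    )
```

Calling `request_endpoint_quota()` with valid AWS credentials submits a request only when the current limit is below the desired value.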

Discover Mistral NeMo models in SageMaker JumpStart

You can access NeMo models through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an integrated development environment (IDE) that provides a single web-based visual interface where you can access purpose-built tools to perform ML development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, see Amazon SageMaker Studio.

In SageMaker Studio, you can access SageMaker JumpStart by choosing JumpStart in the navigation pane.

Then choose HuggingFace.

From the SageMaker JumpStart landing page, you can search for NeMo in the search box. The search results will list Mistral NeMo Instruct and Mistral NeMo Base.

You can choose the model card to view details about the model such as license, data used to train, and how to use the model. You will also find the Deploy button to deploy the model and create an endpoint.

Deploy the model in SageMaker JumpStart

Deployment starts when you choose the Deploy button. After deployment finishes, you will see that an endpoint is created. You can test the endpoint by passing a sample inference request payload or by selecting the testing option using the SDK. When you select the option to use the SDK, you will see example code that you can use in the notebook editor of your choice in SageMaker Studio.

Deploy the model with the SageMaker Python SDK

To deploy using the SDK, we start by selecting the Mistral NeMo Base model, specified by the model_id with the value huggingface-llm-mistral-nemo-base-2407. You can deploy your choice of the selected models on SageMaker with the following code. Similarly, you can deploy NeMo Instruct using its own model ID.
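The deployment step can be sketched as follows. This is a minimal example rather than the exact code from the original notebook: it uses `JumpStartModel` from the SageMaker Python SDK with the two model IDs named in this post, and the wrapper function `deploy_mistral_nemo` and its `variant` argument are hypothetical conveniences.

```python
# JumpStart model IDs named in this post.
MODEL_IDS = {
    "base": "huggingface-llm-mistral-nemo-base-2407",
    "instruct": "huggingface-llm-mistral-nemo-instruct-2407",
}


def deploy_mistral_nemo(variant="base", accept_eula=True):
    """Deploy the chosen Mistral NeMo variant with JumpStart defaults and return a predictor."""
    if variant not in MODEL_IDS:
        raise ValueError(f"variant must be one of {sorted(MODEL_IDS)}")

    # Imported here so this module loads even where the SageMaker SDK is absent.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=MODEL_IDS[variant])
    # This call creates a real, billable endpoint on the default instance type.
    return model.deploy(accept_eula=accept_eula)


# Uncomment to deploy from a notebook with SageMaker permissions:
# predictor = deploy_mistral_nemo("base")
```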

This deploys the model on SageMaker with default configurations, including the default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. The EULA value must be explicitly set to True to accept the end-user license agreement (EULA). Also make sure that your account-level service quota for ml.g6.12xlarge for endpoint usage is at least one instance. You can follow the instructions in AWS service quotas to request a service quota increase. After the model is deployed, you can run inference against the endpoint through the SageMaker predictor:
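A minimal sketch of that inference call follows. It assumes the predictor returned by JumpStart serializes a Python dictionary to JSON and returns a parsed dictionary in the chat completions shape (`choices[0].message.content`). The helper names and the default parameter values are illustrative, not taken from the original notebook.

```python
def build_chat_payload(prompt, max_tokens=256, temperature=0.3, top_p=0.9):
    """Build a chat-completions-style request body for a single user message."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
    }


def extract_text(response):
    """Pull the generated text out of a chat-completions-style response."""
    return response["choices"][0]["message"]["content"].strip()


def ask(predictor, prompt, **params):
    """Send one prompt to the endpoint behind predictor and return the reply text."""
    return extract_text(predictor.predict(build_chat_payload(prompt, **params)))


# Example, given a deployed predictor:
# print(ask(predictor, "Hello"))
```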

An important thing to note here is that we’re using the djl-lmi v12 inference container, so we’re following the large model inference chat completions API schema when sending a payload to both Mistral-NeMo-Base-2407 and Mistral-NeMo-Instruct-2407.

Mistral-NeMo-Base-2407

You can interact with the Mistral-NeMo-Base-2407 model like other standard text generation models, where the model processes an input sequence and outputs predicted next words in the sequence. In this section, we provide some example prompts and sample output. Keep in mind that the base model is not instruction fine-tuned.

Text completion

Tasks involving predicting the next token or filling in missing tokens in a sequence:
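As an illustration, a completion-style request might look like the following. The prompt and parameter values are hypothetical stand-ins, and `predictor` is assumed to be the object returned by the deployment step.

```python
# An open-ended sentence for the base model to continue (illustrative prompt).
payload = {
    "messages": [{"role": "user", "content": "The capital of France is"}],
    "max_tokens": 10,      # keep the continuation short
    "temperature": 0.3,
    "top_p": 0.9,
}

# With a deployed endpoint:
# response = predictor.predict(payload)
# print(response["choices"][0]["message"]["content"].strip())
```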

The following is the output:

Mistral NeMo Instruct

The Mistral-NeMo-Instruct-2407 model is a quick demonstration that the base model can be fine-tuned to achieve compelling performance. You can follow the steps provided to deploy the model and use the model_id value of huggingface-llm-mistral-nemo-instruct-2407 instead.

The instruction-tuned NeMo model can be tested with the following tasks:

Code generation

Mistral NeMo Instruct demonstrates benchmarked strengths for coding tasks. Mistral states that their Tekken tokenizer for NeMo is approximately 30% more efficient at compressing source code. For example, see the following code:
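A code generation request could be sketched like this. The prompt is an illustrative stand-in for the one in the original notebook, and `predictor` is assumed to point at an endpoint running the instruct model.

```python
# Illustrative coding prompt for the instruction-tuned model.
code_prompt = "Write a Python function that returns the factorial of a non-negative integer."

payload = {
    "messages": [{"role": "user", "content": code_prompt}],
    "max_tokens": 512,     # leave room for the code plus a short explanation
    "temperature": 0.2,    # lower temperature for more deterministic code
    "top_p": 0.9,
}

# With a deployed instruct endpoint:
# response = predictor.predict(payload)
# print(response["choices"][0]["message"]["content"].strip())
```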

The following is the output:

The model demonstrates strong performance on code generation tasks, with the completion_tokens offering insight into how the tokenizer’s code compression effectively optimizes the representation of programming languages using fewer tokens.
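To inspect the token count yourself, you can read it from the response. This sketch assumes the response carries a `usage` object with a `completion_tokens` field, as chat-completions-style responses commonly do. The helper name is hypothetical, and it returns `None` if the field is absent.

```python
def completion_tokens(response):
    """Return the completion token count reported in a response, or None if missing."""
    usage = response.get("usage") or {}
    return usage.get("completion_tokens")


# With a deployed endpoint and a request payload:
# response = predictor.predict(payload)
# print("completion tokens:", completion_tokens(response))
```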

Advanced math and reasoning

Mistral also reports strengths in mathematical and reasoning accuracy for the model. For example, see the following code:
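A reasoning request might be set up as follows. The word problem is an illustrative prompt, and `predictor` is again assumed to be a deployed instruct endpoint.

```python
# Illustrative multi-step word problem that asks for the reasoning before the answer.
math_prompt = (
    "I bought an ice cream for 6 kids. Each cone was $1.25 and I paid with a $10 bill. "
    "How many dollars did I get back? Explain first before answering."
)

payload = {
    "messages": [{"role": "user", "content": math_prompt}],
    "max_tokens": 512,
    "temperature": 0.2,
    "top_p": 0.9,
}

# With a deployed instruct endpoint:
# response = predictor.predict(payload)
# print(response["choices"][0]["message"]["content"].strip())
```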

The following is the output:

Language translation

In this task, let’s test Mistral’s new Tekken tokenizer. Mistral states that the tokenizer is two times and three times more efficient at compressing Korean and Arabic, respectively.

Here, we use some text for translation:

We set our prompt to instruct the model on the translation to Korean and Arabic:

We can then set the payload:
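The three steps above (sample text, prompt, and payload) can be sketched together as follows. The sample text and prompt wording are hypothetical stand-ins for those in the original notebook.

```python
# Step 1: sample English text to translate (illustrative).
text = (
    "Customer service agents should greet each caller politely and confirm "
    "the order number before making any changes."
)

# Step 2: prompt instructing the model to translate into both languages.
prompt = (
    "Translate the following text into Korean and then into Arabic. "
    "Label each translation with its language.\n\n"
    f"Text: {text}"
)

# Step 3: chat-completions-style payload carrying the prompt.
payload = {
    "messages": [{"role": "user", "content": prompt}],
    "max_tokens": 1024,
    "temperature": 0.3,
    "top_p": 0.9,
}

# With a deployed instruct endpoint:
# response = predictor.predict(payload)
# print(response["choices"][0]["message"]["content"].strip())
```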

The following is the output:

The translation results demonstrate how the number of completion_tokens used is significantly reduced, even for tasks that are typically token-intensive, such as translations involving languages like Korean and Arabic. This improvement is made possible by the optimizations provided by the Tekken tokenizer. Such a reduction is particularly valuable for token-heavy applications, including summarization, language generation, and multi-turn conversations. By enhancing token efficiency, the Tekken tokenizer allows for more tasks to be handled within the same resource constraints, making it an invaluable tool for optimizing workflows where token usage directly impacts performance and cost.

Clean up

After you’re done running the notebook, make sure to delete all resources that you created in the process to avoid additional billing. Use the following code:
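A minimal cleanup sketch, assuming `predictor` is the object returned by `model.deploy(...)`. Both methods exist on SageMaker predictors; the wrapper function `clean_up` is a hypothetical convenience.

```python
def clean_up(predictor):
    """Delete the model and the endpoint behind a SageMaker predictor."""
    predictor.delete_model()
    predictor.delete_endpoint()


# With a deployed endpoint:
# clean_up(predictor)
```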

Conclusion

In this post, we showed you how to get started with Mistral NeMo Base and Instruct in SageMaker Studio and deploy the model for inference. Because foundation models are pre-trained, they can help lower training and infrastructure costs and enable customization for your use case. Visit SageMaker JumpStart in SageMaker Studio now to get started.

For more Mistral resources on AWS, check out the Mistral-on-AWS GitHub repository.

About the authors

Niithiyn Vijeaswaran is a Generative AI Specialist Solutions Architect with the Third-Party Model Science team at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a Bachelor’s degree in Computer Science and Bioinformatics.

Preston Tuggle is a Sr. Specialist Solutions Architect working on generative AI.

Shane Rai is a Principal Generative AI Specialist with the AWS World Wide Specialist Organization (WWSO). He works with customers across industries to solve their most pressing and innovative business needs using the breadth of cloud-based AI/ML services provided by AWS, including model offerings from top tier foundation model providers.
