Valkey is an open-source, distributed, in-memory key-value data store that offers high-performance data retrieval and storage, making it well suited for scalable, low-latency modern applications. Valkey originated as a fork of Redis OSS following recent licensing changes. It maintains compatibility with its predecessor while giving developers a high-performance, open-source alternative. Valkey provides a rich set of data structures that can be used for a wide range of use cases, including caching, session management, real-time analytics, and messaging systems. Its ability to process millions of requests per second with extremely low latency makes it an ideal choice for high-performance applications.

Amazon ElastiCache is a fully managed, Valkey-compatible, in-memory caching service that delivers real-time, cost-optimized performance for modern applications. It allows developers to easily deploy and manage in-memory caches without manual administration, supporting millions of operations per second with microsecond response times. ElastiCache is designed to enhance the performance of modern applications while providing enterprise-grade security and reliability.

For applications with unpredictable traffic patterns, ElastiCache provides a serverless caching option that can be deployed in under a minute and instantly scales to match workload demand, removing the need for capacity planning and manual infrastructure management. This service follows a pay-as-you-go pricing model based on actual usage, making it cost-effective for variable workloads.

In this post, we explore some of the most common use cases of ElastiCache for Valkey and demonstrate how its capabilities enable faster, more scalable, and more resilient applications across various industries. We focus on Valkey's flexible data structures and how to use them to implement solutions for common use cases.

Common use cases implemented using ElastiCache for Valkey

The following are common use cases for ElastiCache for Valkey:

- Caching – ElastiCache for Valkey is extensively used as a caching layer to improve application performance by storing frequently accessed data in memory. This reduces the load on databases and improves response times. Popular examples include:

    - Web application caching – ElastiCache for Valkey can cache user sessions, HTML fragments, or API responses to speed up web applications across retail, media, gaming, healthcare, and financial services.

    - Database query caching – ElastiCache for Valkey can cache the results of expensive database queries, reducing the load on the database and improving response times. For example, e-commerce platforms can use Valkey to cache product catalogs and search results.

- Session management – ElastiCache for Valkey is commonly used to store and manage user session data in web applications. Its fast read/write operations and built-in key expiration make it ideal for this use case. Popular examples include:

    - Shopping cart data – E-commerce platforms can use ElastiCache for Valkey to store shopping cart data, providing a seamless user experience across multiple requests.

    - User preferences and authentication – Social media platforms and online forums can use ElastiCache for Valkey to store user preferences, authentication tokens, and other session-related data.

- Real-time analytics – ElastiCache for Valkey's ability to handle high-speed data ingestion and real-time processing makes it suitable for real-time analytics applications. Popular use cases include:

    - Real-time leaderboards – Online gaming platforms can use ElastiCache for Valkey to maintain real-time leaderboards and rankings for their games.

    - Real-time recommendations – E-commerce and media streaming platforms can use ElastiCache for Valkey to store user activity data and provide real-time recommendations based on user behavior.

- Messaging and queuing – ElastiCache for Valkey's built-in data structures, such as lists, streams, and sorted sets, make it suitable for implementing messaging and queuing systems. Popular examples include:

    - Task queues – Web applications and backend services use ElastiCache for Valkey to manage task queues for asynchronous job processing, such as sending emails, processing uploads, or handling other background tasks.

    - Pub/Sub messaging – ElastiCache for Valkey's built-in publish/subscribe functionality can be used to implement real-time messaging systems for applications like chat servers, real-time collaboration tools, and notification systems.

- Rate limiting – Rate limiting controls the rate at which a system processes requests, preventing it from being overwhelmed by excessive traffic or abuse. It is a common use case for ElastiCache for Valkey, particularly for web applications and APIs. You can integrate ElastiCache for Valkey with load balancers, API gateways, or service meshes to enforce rate limits at different levels (such as per client, per API endpoint, or per service).

- Media streaming – Media streaming platforms require efficient handling of high-throughput, real-time data to deliver content, track user interactions, and ensure seamless playback. Valkey Streams, which manage ordered logs of events and support high-throughput, real-time processing, are well suited to these requirements. Examples include:

    - Live event streaming analytics – Collect and process real-time metrics such as viewer count, engagement (such as likes and comments), and playback quality.

    - Live chat and audience interaction – Manage real-time messaging for live-streamed events (such as Q&A sessions or audience chat during sports events).

    - Real-time recommendations – Provide personalized recommendations during live streams, such as suggesting related content or advertisements.
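One common way to enforce the per-client limits described in the rate limiting use case is a fixed-window counter: each request increments a counter key for the current client and time window, and the key expires on its own after the window has passed. The following is a minimal sketch, assuming a valkey-py client (valkey.Valkey); the key format ratelimit:{client_id}:{window}, the default limit, and the window length are hypothetical choices for illustration.

```python
import time


def allow_request(client, client_id, limit=100, window_seconds=60, now=None):
    """Fixed-window rate limiter: return True if client_id may make another request.

    `client` is assumed to be a valkey.Valkey instance (anything exposing the
    same pipeline(), incr(), and expire() methods works).
    """
    now = time.time() if now is None else now
    # Every request in the same window shares one counter key (hypothetical naming scheme)
    window = int(now // window_seconds)
    key = f"ratelimit:{client_id}:{window}"

    # INCR and EXPIRE are sent together in one MULTI/EXEC transaction,
    # so the counter always gets an expiration and old windows are cleaned up
    pipe = client.pipeline(transaction=True)
    pipe.incr(key)
    pipe.expire(key, window_seconds)
    count, _ = pipe.execute()
    return count <= limit
```

For example, an API handler could call allow_request(cache, api_key) and return HTTP 429 when it returns False. A fixed window permits bursts of up to twice the limit around a window boundary; sliding-window variants, often built on sorted sets, smooth this out at the cost of more memory per client.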

Flexible data structures

Valkey is not just a simple key-value store; it's a versatile datastore that offers a wide array of in-memory data structures. These built-in data types, such as strings, hashes, lists, sets, sorted sets, streams, and geospatial indexes, give developers the flexibility to model complex data relationships and build efficient, high-performance applications. Each data structure in Valkey is optimized for specific access patterns, so developers can choose the best tool for the job without compromising performance.

For example, lists are ideal for implementing queues, sets support fast membership checks, and streams are perfect for handling real-time event data. This flexibility allows Valkey to serve as a backbone for a variety of applications, from social media feeds and leaderboards to financial transaction processing and Internet of Things (IoT) data collection.
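As a sketch of the list-based queue mentioned above, producers can push jobs onto the head of a list with LPUSH, and workers can pop them from the tail with BRPOP, which blocks until a job is available or a timeout expires. This assumes a valkey-py client (valkey.Valkey); the queue name jobs:email and the JSON job format are hypothetical.

```python
import json


def enqueue(client, queue, job):
    """Serialize a job as JSON and push it onto the head of the list (LPUSH)."""
    return client.lpush(queue, json.dumps(job))


def dequeue(client, queue, timeout=5):
    """Wait up to `timeout` seconds for a job at the tail of the list (BRPOP).

    BRPOP returns a (queue_name, value) pair, or None if the timeout expires.
    """
    item = client.brpop([queue], timeout=timeout)
    if item is None:
        return None
    _queue_name, payload = item
    # json.loads accepts both str and bytes, so decode_responses is optional
    return json.loads(payload)
```

Because LPUSH adds to the head and BRPOP removes from the tail, jobs are processed in FIFO order, for example enqueue(cache, "jobs:email", {"to": "user@example.com"}). A job popped by a worker that crashes before finishing is lost; for at-least-once delivery, consider BLMOVE into a processing list or Valkey Streams with consumer groups.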

The following table provides a summary of Valkey data structures and their common use cases.

| Data Structure | Description | Common Use Cases |
| --- | --- | --- |
| String | Simple key-value storage | Caching, session tokens, counters, configuration values, user authentication, and basic data storage |
| Hash | Field-value pairs stored under a single key | User profiles, metadata storage, product information, and storing structured data like JSON objects |
| List | Ordered collection of strings | Task queues, message queues, latest news feeds, comments on posts, and storing recent activities |
| Set | Unordered collection of unique values | Tags, tracking unique views or actions (such as upvotes and followers), and managing user permissions |
| Sorted Set | Ordered collection of unique values with a score for each | Leaderboards, ranking systems, time-based event sorting, and priority queues for messaging |
| Stream | Log-like data structure for ordered sequences of entries | Real-time data processing, event sourcing, live chat, IoT data ingestion, and activity tracking |
| Bitmap | Compact storage of bits (0s and 1s) | User activity tracking (such as daily check-ins), feature usage toggles, and tracking online statuses |
| HyperLogLog | Probabilistic data structure for cardinality estimation | Counting unique users, tracking unique page views, and estimating large sets without high memory use |
| Geospatial | Store and query geographic coordinates | Location-based services, proximity search, ride-hailing services, and social media check-ins |
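To make the Sorted Set row of the table concrete, the following sketch implements a leaderboard: ZINCRBY adds points to a player's score, ZREVRANGE with scores reads the top entries in descending order, and ZREVRANK gives a player's position. It assumes a valkey-py client (valkey.Valkey) created with decode_responses=True so that member names come back as str; the board key and player names are hypothetical.

```python
def add_points(client, board, player, points):
    """Add points to a player's score; ZINCRBY creates the member if it doesn't exist."""
    return client.zincrby(board, points, player)


def top_players(client, board, n=10):
    """Return up to n (player, score) pairs, highest score first."""
    return client.zrevrange(board, 0, n - 1, withscores=True)


def player_rank(client, board, player):
    """Return the player's 1-based rank, or None if the player has no score."""
    rank = client.zrevrank(board, player)  # 0-based, or None for unknown members
    return None if rank is None else rank + 1
```

Because the sorted set keeps members ordered by score on every write, reading the top N is a range query rather than a sort at read time, which is what makes real-time leaderboards cheap to serve.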

Here, we focus on the string data structure and how to use it for a caching use case.

Strings are the simplest and most commonly used data type in Valkey. Strings are binary safe, so a single string value (up to 512 MB) can hold any type of data, including text, numbers, or binary data such as serialized objects, images, or videos. Strings support basic operations such as setting and getting values and atomically incrementing and decrementing numeric values, all performed with extremely low latency because of Valkey's in-memory architecture.
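The following sketch shows the string operations described above with the valkey-py client: SET with an expiration for a JSON-serialized object, GET to read it back, and INCR/DECR for an atomic counter. It assumes a valkey.Valkey client; the config: and views: key prefixes are hypothetical.

```python
import json


def cache_config(client, name, config, ttl_seconds=300):
    """Store a JSON-serialized object as a string that expires after ttl_seconds."""
    # SET key value EX ttl: the write and the expiration happen in a single command
    return client.set(f"config:{name}", json.dumps(config), ex=ttl_seconds)


def read_config(client, name):
    """Return the cached object, or None if the key is missing or has expired."""
    raw = client.get(f"config:{name}")
    return None if raw is None else json.loads(raw)


def record_view(client, page_id):
    """Atomically increment a page-view counter; INCR treats a missing key as 0."""
    return client.incr(f"views:{page_id}")


def undo_view(client, page_id):
    """Atomically decrement the same counter."""
    return client.decr(f"views:{page_id}")
```

Because INCR and DECR run atomically on the server, many application instances can update the same counter concurrently without read-modify-write races.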

One of the most popular use cases for Valkey is caching frequently accessed data to improve the performance and responsiveness of web applications. By storing data in-memory, Valkey can serve requests much faster than querying a traditional database, significantly reducing latency and server load.

Imagine an e-commerce website that displays product details such as images, prices, descriptions, and reviews. When a user browses product pages, the website must fetch this information to render each page. If this data is retrieved from a traditional database every time a user requests it, the process can become slow and resource-intensive, especially during peak traffic. Caching the same data in an in-memory data store like Valkey can significantly reduce application latency and thereby improve the customer experience.

ElastiCache for Valkey as a database cache

ElastiCache for Valkey can be used as a caching layer to reduce database load and speed up response times. By storing frequently accessed data in Valkey, web applications can quickly retrieve cached content without having to query the database. This reduces the time required to serve a request, increases scalability, and decreases the load on the underlying database.

ElastiCache for Valkey is a great choice for implementing a highly available, distributed, and secure in-memory cache: it serves frequently requested items with sub-millisecond response times and, by doing so, eases the load on your relational or NoSQL databases and applications. Because Valkey stores all data in memory, it can handle millions of operations per second with microsecond response times. In addition, Valkey uses an asynchronous I/O threading model that runs I/O operations in parallel with command processing, maximizing throughput and minimizing bottlenecks. Valkey also supports asynchronous replication, which you can use to build highly available deployments with consistent performance and reliability.

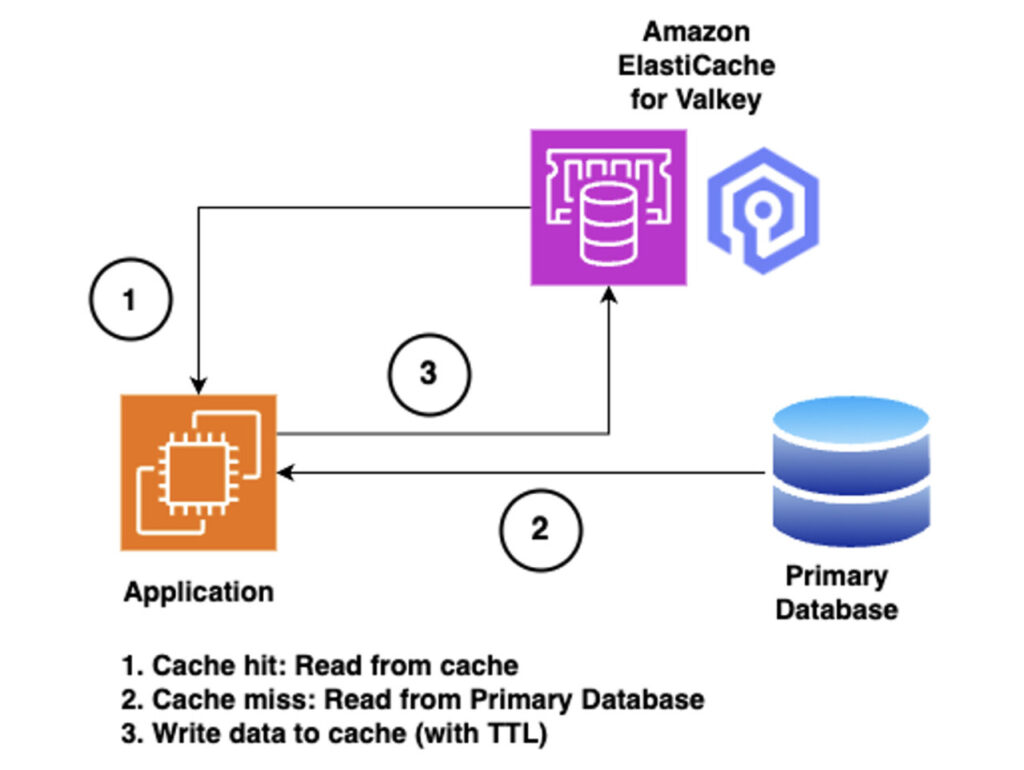

The following diagram illustrates an example architecture and workflow.

Lazy loading is a popular caching strategy where data is loaded into a cache only when it is requested for the first time (on a cache miss). When an application or system tries to access a particular piece of data that is not yet in the cache, the data is fetched from the underlying data source (such as a database), stored in the cache, and then returned to the requester. Subsequent requests for the same data can then be served directly from the cache, avoiding the need to query the data source again.

For example, e-commerce platforms commonly apply this strategy by caching product catalog data in an in-memory data store like ElastiCache for Valkey, providing a seamless experience with minimal latency.

By using Valkey as a caching layer, the website can improve its performance as follows:

- Initial request – When a user visits a product page for the first time, the application queries the database to retrieve the product information. The retrieved data is then stored in Valkey under a unique key that corresponds to the product ID (for example, product:12345), along with an expiration time (TTL) to keep the data fresh.

- Subsequent requests – For future requests to the same product page, the application first checks Valkey for the cached data using the key product:12345. Depending on whether the data is present, it takes one of the following actions:

    - If the data is present in the cache (a cache hit), Valkey returns the data almost instantly, eliminating the need for a database query and significantly reducing load times.

    - If the data is not found in Valkey (a cache miss), the application retrieves it from the database, stores it in Valkey, and serves it to the user.

- Cache expiration and invalidation – Cached product data can be set to expire after a certain period (for example, 10 minutes) so that users see the latest information, or it can be invalidated when updates occur (for example, price changes or inventory updates).
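The expiration and invalidation step can be sketched as follows: reads use the product:{id} key with a 10-minute TTL, and every database write deletes the cached entry so that the next read reloads fresh data. The load_product and save_product callables stand in for your own database access code and are hypothetical; client is assumed to be a valkey.Valkey instance.

```python
import json

PRODUCT_TTL_SECONDS = 600  # 10 minutes, matching the example above


def get_product(client, product_id, load_product):
    """Lazy loading: return the cached product, loading it from the database on a miss."""
    key = f"product:{product_id}"
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)  # cache hit
    product = load_product(product_id)  # cache miss: query the database
    if product is not None:
        client.set(key, json.dumps(product), ex=PRODUCT_TTL_SECONDS)
    return product


def update_product(client, product_id, changes, save_product):
    """Write to the database first, then invalidate the cached copy."""
    save_product(product_id, changes)
    # Deleting (rather than overwriting) the key lets the next read repopulate it
    client.delete(f"product:{product_id}")
```

Deleting the key after the database write is simpler than updating the cache in place, and the TTL still bounds how long a stale entry can survive if an invalidation is missed or a concurrent read repopulates the cache with old data.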

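The following sample Python code implements lazy loading for user records. The get_user function first checks whether the user data is in the cache. If it isn't, the function queries a MySQL database and stores the result in the cache for future use. The Valkey credentials are read from AWS Secrets Manager.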

```python
import json
import os
from typing import Tuple, Union

import boto3
import cachetools.func
import pymysql
import valkey


class DB:
    """Thin wrapper around a PyMySQL connection that returns rows as dictionaries."""

    def __init__(self, **params):
        params.setdefault("charset", "utf8mb4")
        params.setdefault("cursorclass", pymysql.cursors.DictCursor)
        self.mysql = pymysql.connect(**params)

    def cursor(self):
        return self.mysql.cursor()


class SecretsManagerProvider(valkey.CredentialProvider):
    """Supplies Valkey credentials stored in AWS Secrets Manager."""

    def __init__(self, secret_id, version_id=None, version_stage="AWSCURRENT"):
        self.sm_client = boto3.client("secretsmanager")
        self.secret_id = secret_id
        self.version_id = version_id
        self.version_stage = version_stage
        # Cache the secret for 24 hours so that new connections don't call Secrets Manager every time
        self._get_secret = cachetools.func.ttl_cache(maxsize=1, ttl=24 * 60 * 60)(self._fetch_secret)

    def _fetch_secret(self):
        kwargs = {"SecretId": self.secret_id}
        if self.version_id:
            kwargs["VersionId"] = self.version_id
        else:
            kwargs["VersionStage"] = self.version_stage
        secret = self.sm_client.get_secret_value(**kwargs)
        return json.loads(secret["SecretString"])

    def get_credentials(self) -> Union[Tuple[str], Tuple[str, str]]:
        creds = self._get_secret()
        return creds["username"], creds["password"]


# Time to live for cached data, in seconds (10 minutes)
TTL = 600

# Connect to Valkey. For ElastiCache, replace localhost with your cache endpoint and
# pass ssl=True, because authentication requires in-transit encryption
my_secret_id = os.environ.get("EC_SECRETS_ID")
creds_provider = SecretsManagerProvider(secret_id=my_secret_id)
cache = valkey.Valkey(host="localhost", port=6379, credential_provider=creds_provider)
cache.ping()

# Connect to the MySQL database (replace the placeholders with your own values)
database = DB(host="<hostname>", user="<username>", password="<password>", database="<database>")
cursor = database.cursor()


def get_user_from_db(user_id):
    """Query the database for user information based on user_id."""
    query = "SELECT name, email FROM users WHERE id = %s"
    cursor.execute(query, (user_id,))
    # DictCursor returns a dict such as {"name": ..., "email": ...}, or None if no row matches
    return cursor.fetchone()


def get_user(user_id):
    """Retrieve user data, using Valkey as a cache."""
    cache_key = f"user:{user_id}"

    # Check if the data is in the cache
    cached = cache.get(cache_key)
    if cached is not None:
        print("Cache hit!")
        return json.loads(cached)  # Deserialize the JSON string back into a dict

    print("Cache miss! Querying the database...")
    user_data = get_user_from_db(user_id)
    if user_data:
        # Store the result as JSON with a TTL (10 minutes)
        cache.setex(cache_key, TTL, json.dumps(user_data))
    return user_data


if __name__ == "__main__":
    user_id = 12345  # Example user ID

    # First call: cache miss, should query the database and cache the result
    user = get_user(user_id)
    print(f"User Data: {user}")

    # Subsequent calls: should retrieve the result from the cache
    user = get_user(user_id)
    print(f"User Data: {user}")
```

Adding a cache using ElastiCache for Valkey offers numerous advantages that can significantly improve the performance, scalability, and reliability of an application:

- Improved application performance – Valkey is an in-memory datastore, meaning it can retrieve data much faster than traditional disk-based databases. This dramatically reduces the time it takes to access frequently used data, leading to lower latency for end-users.

- Handling high throughput – Valkey can handle a high number of read and write operations per second, making it suitable for applications with high traffic and large volumes of data requests.

- Reduced database load – By caching frequently accessed data, Valkey reduces the number of direct queries to the primary database, which can prevent the database from becoming a bottleneck and reduce the overall load on backend systems.

- Reduced load times – By caching content that needs to be delivered to users quickly, such as user sessions, API responses, or frequently accessed webpages, Valkey can help reduce load times and improve the overall user experience.

- Reduced infrastructure costs – Valkey can deliver high throughput with a smaller hardware footprint than disk-based systems. Serving data from a Valkey cache can also be much cheaper than repeatedly querying a primary database, especially for read-heavy workloads.

Summary

ElastiCache for Valkey is an in-memory data store designed for managing data in real-time applications. It supports a wide range of data structures, including strings, hashes, lists, sets, sorted sets, and geospatial indexes, making it suitable for diverse use cases such as caching, session management, task queues, real-time analytics, and location-based services. Whether used as a high-performance database, message broker, or real-time analytics engine, ElastiCache for Valkey is a strong choice for building reliable, low-latency solutions across various industries. Its ability to handle high-throughput workloads makes it well suited for developers seeking speed, scalability, and simplicity in their applications.

With ElastiCache Serverless for Valkey, you can create a cache in under a minute and get started for as little as $6 per month. Try out ElastiCache for Valkey for your own use case, and share your feedback in the comments.

About the Author

Siva Karuturi is a Sr. Specialist Solutions Architect for In-Memory Databases. Siva specializes in various database technologies (both Relational & NoSQL) and has been helping customers implement complex architectures and providing leadership for In-Memory Database & analytics solutions including cloud computing, governance, security, architecture, high availability, disaster recovery and performance enhancements. Off work, he likes traveling and tasting various cuisines Anthony Bourdain style!
