In any database management system (DBMS), concurrency control plays a crucial role in making sure multiple transactions can occur simultaneously without leading to inconsistencies or corrupt data. It refers to the mechanisms that manage how transactions interact, particularly when they access shared resources. A distributed database management system (D-DBMS) introduces complexity due to data being spread across multiple nodes, leading to challenges like data replication, network latency, and maintaining consistency, which heightens the importance of concurrency control.

In designing distributed systems that scale efficiently, avoiding constant coordination becomes crucial. By doing so, components must make assumptions about the state of other components, because they can’t continuously check in. These assumptions can be categorized as optimistic or pessimistic. Optimistic assumptions, central to optimistic concurrency control (OCC), presume conflicts are rare, allowing transactions to proceed without locks and resolving conflicts only when they occur. This approach is especially useful in distributed environments for enhancing scalability, but it requires robust conflict detection and resolution to maintain ACID properties—maintaining atomicity, consistency, isolation, and durability across the system. Pessimistic assumptions, which are the basis of pessimistic concurrency control (PCC), assume conflicts are common and enforce locks on resources during transactions to prevent interference. Although this provides consistency, it can limit scalability and performance. The choice between OCC and PCC comes down to whether your system benefits more from avoiding early coordination and addressing conflicts later or from taking control upfront to prevent any transactional conflicts.

In this post, we dive deep into concurrency control, providing valuable insights into crafting efficient transaction patterns and presenting examples that demonstrate effective solutions to common concurrency challenges. We also include a sample code that illustrates how to implement retry patterns for seamlessly managing concurrency control exceptions in Amazon Aurora DSQL (DSQL).

When to use PCC or OCC

When choosing between OCC and PCC, it’s crucial to align the choice with your workload and database environment.

PCC is ideal for single-instance databases that can optimize lock management, excelling in high-contention scenarios with frequent updates on small key ranges, such as continuously updating the same stock ticker. Although this pattern works well on a single-instance RDBMS, it’s less effective in distributed systems.

OCC, on the other hand, performs best in low-contention environments by allowing transactions to proceed without locking resources, only checking for conflicts at the commit stage. This reduces execution overhead and blocking delays, making OCC suitable for workloads with minimal conflicts or where non-blocking execution is critical. Although OCC can be more complex to manage under high contention scenarios, it effectively supports distributed systems with scalable key ranges and patterns like append-only operations that benefit from horizontal scalability.

Benefits of OCC for Aurora DSQL

Aurora DSQL employs optimistic concurrency, a technique that is more commonly used in non-relational databases than in relational databases. With optimistic concurrency, the transaction logic is run with little consideration for other transactions that may be attempting to update the same rows. Upon completion of the transaction, an attempt is made to commit the changes to the database. Aurora DSQL then checks to see if writes from other concurrent transactions have interfered with the transaction. If not, the transaction successfully commits; otherwise, the database reports an error to the transaction. In such cases, users must then decide how to proceed, just as they would in a database relying on pessimistic concurrency control. For most use cases, the best approach is to retry the transaction.

In Aurora DSQL, a single slow client or long-running query doesn’t impact or slow down other transactions because contention is handled at commit time on the server side, rather than while SQL is running. In contrast, locking-based designs allow a client to acquire exclusive locks on rows or even entire tables when starting a transaction. If the client then stops unexpectedly, these locks can be held indefinitely, potentially blocking other operations. This prevents other clients from making progress, keeping them in a queue of indeterminate length. In contrast, OCC immediately informs the client of any contention that provides a deterministic outcome, allowing for immediate retry or abort. Lock-based systems typically only exercise retry or cancel logic after reaching a timeout period. This makes Aurora DSQL more robust to the realistic failures and faults that come with building large distributed applications, especially across AWS Regions.

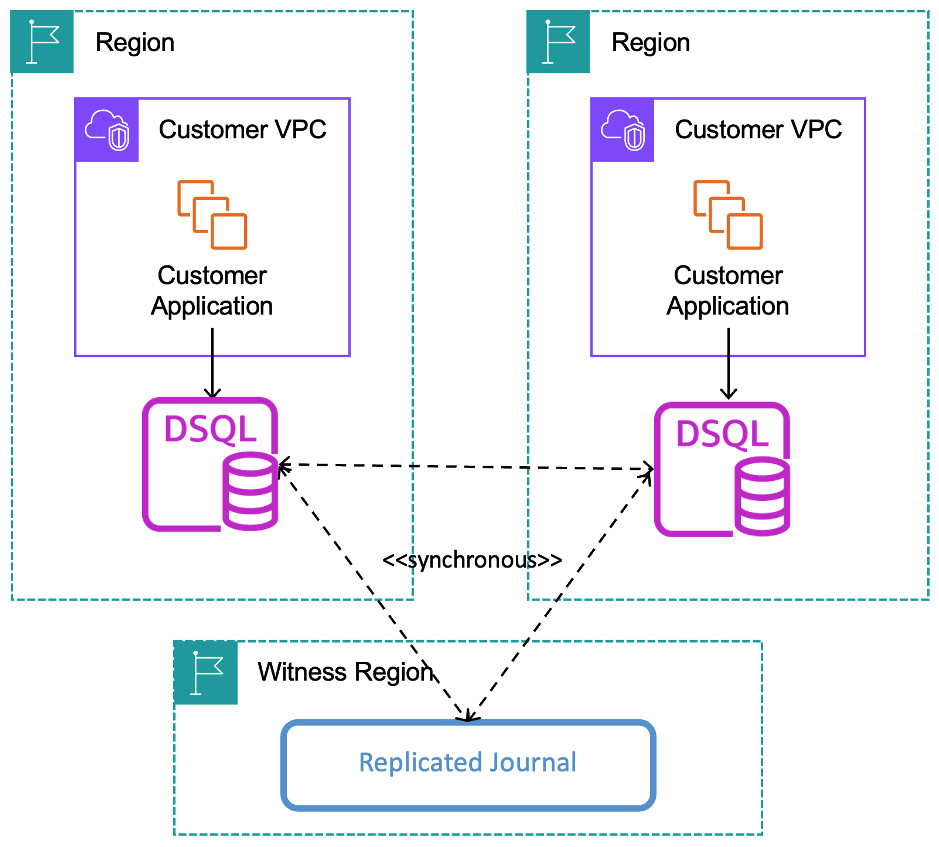

With multi-Region clusters, when users submit a transaction, the SQL operation is executed against the local storage in the region where the transaction was submitted (Region 1). Once the full transaction is completed a post image, along with the keys involved in that transaction are sent to the other region (Region 2). In Region 2, there is a transactional processor leader that is aware of all the current changes in flight in that region. When it receives the post image from Region 1, along with the list of keys involved in that transaction, it will check that against all keys that are being actively changed in the region local to it. If there are no conflicts, it will send a confirmation back for the commit.

If there are conflicts, the earliest transaction to commit will succeed, and any remaining transactions will need to be retried, resulting in a concurrency control conflict.

Aurora DSQL enables business continuity for organizations with multi-Region active-active availability. OCC improves efficiency for multi-Region transactions with synchronous replication. Aurora DSQL processes read and write transactions locally without cross-Region communication, checking for concurrency control across Regions only when a transaction commit is requested. OCC eliminates the need for lock negotiation, allowing Aurora DSQL to preprocess the full post-image and check it against the Multi-AZ and multi-Region quorum. This approach results in lower latency for synchronous transactions across Availability Zones and Regions, because Aurora DSQL can process them without lock overhead.

The following diagram illustrates a multi-Region cluster (active-active).

Optimistic concurrency control and isolation levels

Compared to other distributed SQL databases which support serializable isolation, Aurora DSQL supports strong snapshot isolation, which is equivalent to repeatable read isolation in PostgreSQL, where a transaction reads data from a snapshot of the database at the time the transaction started. This is especially helpful in case of read-only transactions because they don’t need to queue up and are less prone to OCC.

Guidance for transaction patterns

Concurrent database transactions updating the same records risk collisions. Although good data modeling can reduce these risks, collisions remain inevitable and must be handled. Databases offer concurrency management features that developers should understand and implement effectively in their applications.

Aurora DSQL uses optimistic concurrency, which may require a different programming approach, particularly for use cases where the rate of concurrent updates to the same key is very high. After you’ve done your work, you attempt to commit the transaction. Aurora DSQL checks to see if other update transactions have interfered with your transaction. If not, the transaction succeeds. Otherwise, the database reports an error. You must then decide what to do: either reattempt the transaction immediately or introduce exponential backoff and jitter to reduce the likelihood of subsequent collisions.

Although OCC always helps transactions progress in the database, it can still perform quite poorly under high contention. Therefore, there are some good transaction patterns to be followed as guidelines:

- Assume transactions may fail and design transactions so that they can always be retried.

- Implement timeout logic for each transaction to minimize contention on row-level conflicts or data update contention. The value of the timeout that is set needs to factor in the maximum query duration in order to avoid unnecessary transaction cancellations.

- In cases where high contention and retry rates are likely, implement exponential backoff and jitter during the retry attempt to make sure the failed transactions have more chances of running successfully when tried at different random intervals.

- In a system with a high volume of updates to existing keys (updates and upserts), it’s important to keep the scope of transactions small to minimize the chance of concurrency collisions.

Let’s explore some use cases where OCC exceptions might occur in Aurora DSQL, along with code examples.

Example 1: Data conflicts in cross-Region transactions

In distributed SQL systems like Aurora DSQL, a common scenario where OCC exceptions arise is when multiple Regions attempt to update the same data concurrently. For this example, let’s imagine we have a cluster with two linked Regions: us-east-1 and us-east-2, both operating on the same account data:

- Transaction A in

us-east-1reads the balance and version of an account, preparing to update it - At the same time, Transaction B in

us-east-2also reads the same account’s balance and version, preparing to perform its own update - Transaction B successfully updates the account balance and increments the version

- When Transaction A later tries to commit its update using the old version, an OCC exception occurs because the version has been updated by Transaction B

This scenario demonstrates how concurrent transactions on the same data in different Regions can lead to OCC exceptions.

Now, let’s break this down with a code example.

- Create the table and insert a record:

- Transaction A reads the account balance and version:

- Transaction B reads the same data, updates it, and increments the version:

- Transaction A tries to update using the old version of the account, triggering an OCC conflict:

In this scenario, Transaction A would fail because the version number has been changed by Transaction B, simulating an OCC exception:

Let’s dive into the details of what actually happened.

Transaction A is a read/write transaction, so during the read phase, the query processor parses through the SELECT and consults the shard maps to find the storage nodes that have this data and retrieves the data from those nodes. Transaction B is also a read/write transaction, and the query processor creates the read set and a write set as part of the UPDATE statement. As Transaction B issues a commit after the update, the query processor packages the read set and the write set and sends it to the transaction processors. Now each transaction processor will check if there are any modifications to the read set by any other transactions. If there are no changes, then it will read and write the pre- and post-images to the commit layer, making the commit durable and the version is not incremented. Transaction A issues an UPDATE statement. Because the query processor already has all the data it needs for the update, it creates the write set and passes the read and write sets to the transaction processors. Because the data changed for the read set, transaction processors will then reject the changes and give an OCC exception. At this point, the client can retry the same transaction with the updates made by Transaction B.

Example 2: SELECT FOR UPDATE to manage write skew

We mentioned earlier that Aurora DSQL doesn’t normally perform concurrency checks on read records. SELECT FOR UPDATE changes this behavior and flags the read rows for concurrency checks.

This is how you manage write skew in Aurora DSQL.

In a write skew two concurrent transactions can read a common data set and each make updates that change the common data set but don’t overlap with each other. Since they don’t overlap (don’t modify the same data), they don’t trigger concurrency protections.

In Aurora DSQL, the FOR UPDATE clause modifies the typical behavior of Optimistic Concurrency Control (OCC) by introducing additional checks on the flagged rows. This adjustment prevents anomalies that could occur when transactions interact with the same data set concurrently. Unlike traditional Pessimistic Concurrency Control (PCC), which relies on locking mechanisms to manage conflicts, OCC handles potential write conflicts differently. The following example demonstrates how the FOR UPDATE clause enforces concurrency checks in this context.

Let’s look at a real-world example of how this situation can occur.

- First, create an

Orderstable and insert a couple of rows: - Transaction A begins a read/write transaction using a

FOR UPDATEclause but hasn’t yet executed the update or committed: - Transaction B starts its own read/write transaction and commits:

- Transaction A then attempts to update the

accountstable within the same transaction:

When Transaction A issues the commit, the following OCC exception occurs:

The OCC exception occurred even though the update wasn’t on the orders table, which had the read set that was changed.

Let’s break down what actually happened.

In this scenario, Transaction A creates a read set with details of order_id=1. Meanwhile, Transaction B, also a read/write transaction, reads and updates the same order_id. Later, when Transaction A attempts to update the balance in the accounts table, it encounters an OCC error (OC000). Here’s what happens: when Transaction A is initiated, the query processor creates the read set and waits to generate the write set before committing. However, while Transaction A is still in progress, Transaction B updates and commits changes to the same order_id in Transaction A’s read set. When Transaction A proceeds to update the accounts table, the query processor creates the write set and hands both the read and write sets to the transaction processor for validation. During this phase, the transaction processor notices that the data in the read set has a new version due to Transaction B’s changes, causing it to reject the transaction, forcing Transaction A to retry.

This example demonstrated how in cases where you wanted to manage write skew in Aurora DSQL, using for update is an efficient way to handle it.

Note FOR UPDATE only functions within read/write transactions. Attempting to use FOR UPDATE in a read-only transaction will result in a warning and not report updates on the rows read:

Also, the SELECT FOR UPDATE filter will need to include the primary key of the table you’re select from. In our case, the Primary Key for the table orders is order_id, so this select for update will fail:

but this one will succeed because the filter includes the Primary key:

Example 3: Catalog out of sync exceptions

Although data conflicts are a major cause of OCC exceptions, catalog out-of-sync issues can also trigger these errors. These occur when concurrent transactions modify or access the database schema, such as creating or altering tables, during an active session. For instance, if one transaction creates a table while another transaction attempts to read from or write to it, an OCC error (like OC001) may occur because the session’s view of the catalog is outdated.

Retrying the operation often resolves the issue, because the database catalog is refreshed after the initial failure. To minimize the risk of such errors in production, it’s recommended to avoid performing DDL operations in multi-threaded manner. Simultaneous schema modifications can cause race conditions, failed transactions, and rollbacks. Managing DDL changes in a controlled, single-threaded context is a more reliable way to reduce concurrency conflicts and maintain smoother database operations.

Let’s see a real-world example of how this can happen.

- Transaction A creates the table:

- Transaction B, which already has an open session, tries to write a record into the table:

In this example, Transaction A creates a table and Transaction B tries to write a record into the same table and receives an OCC, but the subsequent retry is successful.

The following are a few other scenarios where you can encounter an OCC exception (OC001), which can typically be resolved with a retry:

- Transaction A is adding a column to an existing table, while Transaction B is attempting to read from or write to the table. Transaction B will encounter an OCC exception.

- Transaction A drops a table, and Transaction B subsequently tries to access the same table.

- Transaction A adds a column to the table, and Transaction B simultaneously tries to add a column with a different name.

- Any catalog changes that conflict with ongoing transactions.

In summary, OCC exceptions (OC001) often arise due to concurrent modifications to database schemas or catalog changes, but they can generally be resolved by implementing appropriate retry mechanisms.

Handling OCC exceptions with retry mechanisms

In OCC, implementing backoff and jitter is a best practice for managing retries when transactions conflict, avoiding synchronized retries that could potentially lead to further conflicts or system overload. Backoff makes sure that after a conflict, retries are not immediate but spaced out with progressively longer delays, helping reduce system load. Jitter introduces randomness to these delays. Together, backoff and jitter reduce contention and enhance the retry logic’s efficiency in distributed systems employing OCC. For a deeper dive, refer to Exponential Backoff And Jitter. The below code sample is available through this repo.

Let’s walk through a scenario where we simulate an OCC exception in a high-transaction environment and manage retries using backoff and jitter strategies.

- First, use the

create.pyscript to create an order schema and two tables:accountsandorders: - Generate LoadRun the

load_generator.pyscript to generate load for the database, inserting data into theorderstable: - To introduce an OCC condition, alter the

accountstable by adding a new column in another PostgreSQL session:After the schema is updated, the

load_generator.pyscript fails with the following error: -  Now, let’s integrate backoff and jitter into the retry logic by running the

retry_backoff_jitter.pyscript, an enhanced version of theload_generator.pyscript with built-in retry mechanisms: - Now, introduce another schema change in the

accountstable:

As the retry logic kicks in, you’ll see the script handling the OCC exception with retries:

This can be fine-tuned based on the retry strategy. In this case, we are using seconds for the delay.

Effectively managing OCC exceptions in distributed systems requires a comprehensive retry strategy that can incorporate backoff and jitter in the case that very high contention areas of the application key space (hot keys) can’t be avoided. A well-considered approach can help provide predictability at scale, maximize throughput, and increase stability for areas of a workload that naturally demonstrates a high contention rate (hot keys). Beyond retry logic, building applications to minimize contention from the start—favoring append-only patterns over update-in-place—is key. Most modern application designs and distributed databases like Aurora DSQL benefit from horizontal scaling, where introducing new keys rather than updating existing ones supports optimal system scalability. Additionally, implementing idempotency makes sure retries don’t create duplicate operations or data inconsistencies, and a dead letter queue for persistent failures allows for escalation and manual intervention, further enhancing system reliability.

Conclusion

Concurrency control is a critical aspect of any database management system, and it becomes even more important when dealing with distributed databases. Using OCC with Aurora DSQL is well-suited due to its distributed architecture, because it allows for higher throughput and system efficiency by avoiding the need for resource locking during transaction execution.

The key advantages of OCC in Aurora DSQL are:

- Resilience to slow clients or long-running queries – With OCC, a single slow transaction doesn’t impact or slow down other transactions, because contention is handled at the commit stage rather than during query execution

- Scalable query processing – The backward validation approach in Aurora DSQL, which compares the current transaction with committed transactions, allows the query processing layer to scale out without the need for coordination

- Robust to realistic failures – OCC makes Aurora DSQL more resilient to the failures and faults that come with building large distributed applications, because it doesn’t rely on locking mechanisms that can lead to deadlocks or performance bottlenecks

Although OCC provides significant benefits, it also requires careful consideration of transaction patterns to achieve optimal performance. Principles like assuming transactions may fail, implementing timeouts and exponential backoff with jitter, and managing write skew using SELECT FOR UPDATE are important guidelines for developers working with Aurora DSQL.

By understanding the concurrency control mechanisms and best practices employed by Aurora DSQL, you can use the system’s distributed capabilities while maintaining data consistency and availability, even in the face of complex, high-transaction workloads. To learn more about Aurora DSQL refer to to the documentation.

About the authors

Rajesh Kantamani is a Senior Database Specialist SA. He specializes in assisting customers with designing, migrating, and optimizing database solutions on Amazon Web Services, ensuring scalability, security, and performance. In his spare time, he loves spending time outdoors with family and friends.

Rajesh Kantamani is a Senior Database Specialist SA. He specializes in assisting customers with designing, migrating, and optimizing database solutions on Amazon Web Services, ensuring scalability, security, and performance. In his spare time, he loves spending time outdoors with family and friends.

Prasad Matkar is Database Specialist Solutions Architect at AWS based in the EMEA region. With a focus on relational database engines, he provides technical assistance to customers migrating and modernizing their database workloads to AWS.

Prasad Matkar is Database Specialist Solutions Architect at AWS based in the EMEA region. With a focus on relational database engines, he provides technical assistance to customers migrating and modernizing their database workloads to AWS.

Source: Read More