Retrieval Augmented Generation (RAG) enhances AI responses by combining a generative AI model’s capabilities with information from external data sources, rather than relying solely on the model’s built-in knowledge. In this post, we look at two capabilities in Amazon Bedrock Knowledge Bases that make it easier to build RAG workflows with Amazon Aurora Serverless v2 as the vector store. The first lets you create a knowledge base backed by an Aurora Serverless v2 vector store with little manual setup, and the second lets you automate deploying your RAG workflow across environments.

First, a new Quick create a new vector store option in Amazon Bedrock Knowledge Bases lets you set up Aurora Serverless v2 as a vector store with minimal setup work. Quick create automatically provisions and configures the Aurora Serverless v2 cluster as the vector store, creates the necessary table and index, and sets up the knowledge base, so you don't need to set up and configure the vector database manually. When you ingest data, Amazon Bedrock Knowledge Bases chunks it, converts it into embeddings using your chosen Amazon Bedrock model, and stores everything in Aurora Serverless v2. This automation applies to both newly created and existing databases, so you can focus on building AI applications instead of orchestrating data chunking, embedding generation, or vector store provisioning and indexing.

Second, we explore how to automate your setup using AWS CloudFormation templates. When you create an Aurora Serverless v2 vector store using the quick create option, you get a CloudFormation template containing the configurations of the deployed resources. You can use this template to replicate your setup, modify parameters such as DB ports and parameter groups, and deploy through the AWS Management Console, AWS Command Line Interface (AWS CLI), or AWS SDKs. This makes it straightforward to automate and standardize RAG workflow deployments across environments.

Amazon Aurora PostgreSQL-Compatible Edition overview

Amazon Aurora PostgreSQL-Compatible Edition is a fully managed relational database that delivers high performance and availability at global scale while maintaining the simplicity and cost-effectiveness of open source databases. Aurora PostgreSQL-Compatible supports the pgvector extension, which enables efficient storage and similarity search of high-dimensional vectors and makes Aurora well suited as a vector database for generative AI applications. Amazon Aurora Serverless v2 adds on-demand auto scaling, including the ability to scale down to 0 Aurora Capacity Units (ACUs) during periods of inactivity. Its pricing is based on the database capacity you use, and it integrates vector operations without added complexity. A relational database such as Aurora is a strong fit for generative AI applications whose queries combine filters on relational data with a similarity search over vectorized data, such as documents or images.

Amazon Bedrock overview

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI. Amazon Bedrock Knowledge Bases allows organizations to build fully managed RAG pipelines that augment prompts with contextual information from private data sources to deliver more relevant, accurate, and customized responses. The information retrieved from Amazon Bedrock Knowledge Bases is provided with citations to improve transparency and minimize hallucinations.

Why choose Aurora Serverless v2 and Amazon Bedrock for RAG?

The combination offers four key advantages:

- Simple setup – Deploy Aurora Serverless v2 as your vector store in Amazon Bedrock Knowledge Bases in minutes using quick create

- Built-in scaling – Handle growing workloads with Aurora Serverless v2 auto scaling and Amazon Bedrock serverless infrastructure

- Secure – Build secure RAG applications faster with Aurora Serverless v2 consolidated storage for both tabular data and embeddings, minimizing cross-service data movement while maintaining both data and embeddings in a single, queryable environment

- Cost control – Save costs with the Aurora Serverless v2 scale-to-zero capability and pay for the database capacity you consume

Using this integration, you can efficiently store and search vector embeddings in Aurora Serverless v2 while using Amazon Bedrock FMs for embedding creation and response generation. The result is a complete system for building scalable, cost-effective AI applications without managing complex infrastructure.

Solution overview: Build a generative AI customer support tool with RAG

For this post, we implement a RAG architecture for customer support analytics using Amazon Bedrock Knowledge Bases and Aurora Serverless v2. The solution enables real-time analysis of customer feedback through vector embeddings and large language models (LLMs).

Implementation flow

The implementation follows these high-level steps:

- Data source setup – Configure an Amazon Simple Storage Service (Amazon S3) bucket for data storage

- Amazon Bedrock Knowledge Bases setup – Create a knowledge base in Amazon Bedrock using the quick create a new vector store option, which automatically provisions and sets up Aurora Serverless v2 as the vector store

- Data ingestion – Perform a data sync to ingest the data into the Aurora Serverless v2 vector store, using an Amazon Bedrock embedding model to create embeddings

- Test the knowledge base – Evaluate customer feedback analysis using the knowledge base

Technical components

- Vector store – Aurora Serverless v2 with the pgvector extension
  - Enables semantic search using hierarchical navigable small world (HNSW) indexing and the Euclidean distance measure
  - Scales automatically to handle variable workloads, optimizing costs by scaling to 0 ACUs during inactivity

- LLM and embedding service – Amazon Bedrock
  - Generates and manages embeddings automatically and provides LLMs for text generation
  - RAG enhances LLM responses with relevant customer support data

- Dataset – Bitext Gen AI Chatbot Customer Support Dataset from Kaggle
  - Contains production customer support dialogues spanning multiple products and services
  - Enables LLMs to classify support issues, identify recurring query patterns, and analyze customer satisfaction trends through natural language understanding

- Knowledge base – Amazon Bedrock Knowledge Bases
  - Automates the end-to-end process of creating and configuring the Aurora Serverless v2 vector store using the quick create capability
  - Streamlines data chunking, embedding generation, and vector indexing
  - Simplifies the workflow from data ingestion to query processing

This architecture uses AWS serverless capabilities to minimize operational overhead while maintaining high performance for vector search operations and natural language processing tasks.
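The vector store ranks results with an HNSW index and the Euclidean (L2) distance measure. To make that distance concrete, the following plain-Python sketch computes exact L2 nearest neighbors by brute force. It uses toy 2-dimensional vectors as an assumption for readability (the Titan Text Embeddings V2 vectors configured later have 1,024 dimensions), and it is not how pgvector implements search: HNSW approximates this same ranking without comparing the query to every stored vector.

```python
import math
from typing import List, Sequence, Tuple


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2) distance between two vectors of equal dimension."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbors(query: Sequence[float],
                      vectors: Sequence[Sequence[float]],
                      k: int = 3) -> List[Tuple[int, float]]:
    """Return (index, distance) for the k closest vectors, closest first.

    This is an exact scan over every vector. An HNSW index answers the
    same question approximately by walking a layered proximity graph.
    """
    scored = [(i, l2_distance(query, v)) for i, v in enumerate(vectors)]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]
```

For example, `nearest_neighbors([0.0, 0.0], [[0.0, 0.0], [3.0, 4.0], [1.0, 1.0]], k=2)` ranks index 0 first and index 2 second, because a smaller L2 distance means a closer match.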

Solution walkthrough

To build a generative AI customer support tool with RAG, use the instructions in the following sections.

Configure an S3 bucket

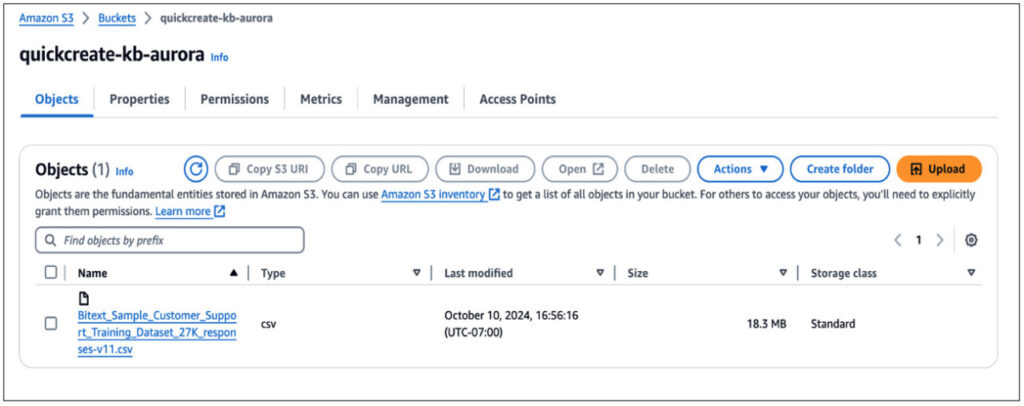

Start by setting up an S3 bucket to store the dataset. The bucket serves as the data source that Amazon Bedrock reads from when it builds the knowledge base. Follow these steps:

- Download the Bitext Gen AI Chatbot Customer Support Dataset from Kaggle.

- On the Amazon S3 console, choose Create bucket, give it a unique name, and select the appropriate AWS Region

- To upload the dataset, navigate to the Objects tab and choose Upload to select the downloaded file as shown in the following screenshot

- Modify the bucket policy or permissions settings as necessary to meet your security requirements
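If you prefer to script the bucket setup instead of using the console, the following is a minimal sketch with the AWS SDK for Python (Boto3). It assumes you pass a Boto3 S3 client; the bucket name, Region, and local file name are placeholders, and blocking all public access is one reasonable default for the security step, not a requirement stated in this post.

```python
from pathlib import Path
from typing import Optional


def upload_dataset(s3, bucket: str, region: str, file_path: str,
                   key: Optional[str] = None) -> str:
    """Create a private S3 bucket if needed, then upload the dataset file.

    `s3` is expected to be a Boto3 S3 client. Any object with the same
    methods works, which keeps the sketch testable offline.
    """
    owned = {b["Name"] for b in s3.list_buckets().get("Buckets", [])}
    if bucket not in owned:
        if region == "us-east-1":
            # us-east-1 is the default location and rejects an explicit constraint.
            s3.create_bucket(Bucket=bucket)
        else:
            s3.create_bucket(
                Bucket=bucket,
                CreateBucketConfiguration={"LocationConstraint": region},
            )
    # Block all public access; adjust to your own security requirements.
    s3.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration={
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
    )
    object_key = key or Path(file_path).name
    # upload_file(Filename, Bucket, Key) switches to multipart uploads for large files.
    s3.upload_file(file_path, bucket, object_key)
    return f"s3://{bucket}/{object_key}"
```

For example, `upload_dataset(boto3.client("s3", region_name="us-west-2"), "amzn-s3-demo-bucket", "us-west-2", "customer_support.csv")` uploads a local file (the file name here is hypothetical) and returns its S3 URI.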

After the dataset is uploaded, proceed to create and automate the knowledge base using Amazon Bedrock.

Create a knowledge base in Amazon Bedrock

To create a knowledge base in Amazon Bedrock, follow these steps:

- On the Amazon Bedrock console, in the left navigation pane under Builder tools, choose Knowledge Bases

- To initiate knowledge base creation, on the Create dropdown menu, choose Knowledge Base with vector store, as shown in the following screenshot

- In the Provide knowledge base details pane, enter a unique Knowledge base name and a Knowledge base description

- Under IAM permissions, choose the default option, Create and use a new service role, and optionally provide a Service role name, as shown in the following screenshot

- On the Choose data source pane, select Amazon S3 as the data source where your dataset is stored

- Choose Next, as shown in the following screenshot

- On the Configure data source pane, enter a Data source name

- Choose Browse S3, as shown in the following screenshot, and select the dataset you uploaded previously

- Under Parsing strategy, select Amazon Bedrock default parser and for Chunking strategy, choose Default chunking. Choose Next, as shown in the following screenshot

- On the Select embeddings model and configure vector store pane, for Embeddings model, choose Titan Text Embeddings v2. For Embeddings type, choose Floating-point vector embeddings. For Vector dimensions, select 1024, as shown in the following screenshot

Make sure you have requested and received access to the chosen FM in Amazon Bedrock. To learn more, refer to Add or remove access to Amazon Bedrock foundation models.

- On the Vector database pane, select Quick create a new vector store option and choose the new Amazon Aurora PostgreSQL Serverless option as the vector store

- (Optional) If you want to use custom AWS Key Management Service (AWS KMS) keys for your Aurora Serverless v2 vector store or for your vector store credentials, enable them under Additional configurations, as shown in the following screenshot. For this walkthrough, retain the default settings by skipping this step

- On the next screen, review your selections. To finalize the setup, choose Create

- After you start the creation, a progress tracker appears at the top of the console. To view status and progress, choose View details, as shown in the following screenshot

A pop-up will show you the status and progress of the following three sub-events as shown in the following image:

- Aurora cluster creation

- Seeding Aurora Cluster

- Knowledge base creation

The Seeding Aurora cluster sub-event configures the newly provisioned Aurora Serverless v2 vector store by creating the pgvector extension, schema, roles and required tables. It also sets up a limited-privilege database user for secure Amazon Bedrock interactions with the Aurora Serverless v2 cluster.
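Quick create runs this seeding step for you. For readers who want to see roughly what it sets up, or who prepare an existing Aurora PostgreSQL cluster themselves, the following sketch generates comparable SQL. The column names (id, embedding, chunks, metadata) and the index name are assumptions loosely modeled on the manual Aurora setup described in the Amazon Bedrock documentation, not the exact statements quick create executes, and the sketch omits the limited-privilege database user.

```python
from typing import List


def seed_statements(schema: str = "bedrock_integration",
                    table: str = "bedrock_knowledge_base",
                    dimensions: int = 1024) -> List[str]:
    """Return SQL that prepares a pgvector table, roughly like the seeding step.

    Column and index names are illustrative assumptions.
    """
    if not (schema.isidentifier() and table.isidentifier()):
        raise ValueError("schema and table must be simple identifiers")
    if not isinstance(dimensions, int) or dimensions < 1:
        raise ValueError("dimensions must be a positive integer")
    qualified = f"{schema}.{table}"
    return [
        "CREATE EXTENSION IF NOT EXISTS vector;",
        f"CREATE SCHEMA IF NOT EXISTS {schema};",
        # One row per chunk: its embedding, the chunk text, and its metadata.
        f"CREATE TABLE IF NOT EXISTS {qualified} ("
        f"id uuid PRIMARY KEY, embedding vector({dimensions}), "
        "chunks text, metadata json);",
        # HNSW index with the L2 (Euclidean) operator class, matching the default distance.
        f"CREATE INDEX IF NOT EXISTS {table}_embedding_hnsw ON {qualified} "
        "USING hnsw (embedding vector_l2_ops);",
    ]
```

You could run the returned statements in order with psql or the Aurora query editor. The vector dimension must match the embeddings model setting (1024 in this walkthrough).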

Within a few minutes, the console displays your newly created knowledge base and the Aurora Serverless v2 database cluster. Choose the CloudFormation link, as shown in the following image, to view the resources and configurations that were deployed when the knowledge base was created.
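Quick create is a console workflow. If you later attach a knowledge base to an existing Aurora cluster from code, the embeddings and vector store choices from the preceding steps map onto a CreateKnowledgeBase request for the Boto3 bedrock-agent client roughly as follows. This is a hedged sketch: the fieldMapping column names are assumptions that must match your table's actual columns, and the ARNs, database name, and role are placeholders you copy from the console.

```python
from typing import Any, Dict


def knowledge_base_request(name: str, role_arn: str, region: str,
                           cluster_arn: str, secret_arn: str,
                           database: str, table: str,
                           dimensions: int = 1024) -> Dict[str, Any]:
    """Build keyword arguments for bedrock-agent create_knowledge_base.

    `table` is the schema-qualified name, for example
    "bedrock_integration.bedrock_knowledge_base".
    """
    embedding_model_arn = (
        f"arn:aws:bedrock:{region}::foundation-model/amazon.titan-embed-text-v2:0"
    )
    return {
        "name": name,
        "roleArn": role_arn,
        "knowledgeBaseConfiguration": {
            "type": "VECTOR",
            "vectorKnowledgeBaseConfiguration": {
                "embeddingModelArn": embedding_model_arn,
                # Floating-point embeddings with 1024 dimensions, as chosen in the console.
                "embeddingModelConfiguration": {
                    "bedrockEmbeddingModelConfiguration": {
                        "dimensions": dimensions,
                        "embeddingDataType": "FLOAT32",
                    }
                },
            },
        },
        "storageConfiguration": {
            "type": "RDS",
            "rdsConfiguration": {
                "resourceArn": cluster_arn,
                "credentialsSecretArn": secret_arn,
                "databaseName": database,
                "tableName": table,
                # Assumed column names; check them against your table.
                "fieldMapping": {
                    "primaryKeyField": "id",
                    "vectorField": "embedding",
                    "textField": "chunks",
                    "metadataField": "metadata",
                },
            },
        },
    }
```

With a Boto3 client, you would pass the result as `boto3.client("bedrock-agent").create_knowledge_base(**knowledge_base_request(...))`.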

Perform a data sync

After the knowledge base becomes available, we perform a data sync to make sure that all of the data is properly ingested, processed, and stored in the vector database. The data sync ingests our customer support dataset, chunks the data based on our specified strategy, generates embeddings using our chosen Amazon Bedrock embedding model, and stores these embeddings in the newly created Aurora Serverless v2 vector store. Running the sync again after the source data changes keeps the knowledge base current and ready for efficient similarity searches. This data sync step is essential before testing the knowledge base.

Select the newly created knowledge base and, in the Data source section, choose the data source. Choose Sync, as shown in the following screenshot.

You will observe a progress tracker on top of the console until the sync process is finished, as shown in the following screenshot. It might take several minutes depending on the amount of source data that you have.

After the data source sync completes, the Last sync time column in the Data source section shows the current time, as shown in the following screenshot.
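The Sync button starts an ingestion job. A minimal sketch of doing the same from code, assuming a Boto3 bedrock-agent client and the knowledge base and data source IDs shown in the console, starts the job with start_ingestion_job and polls get_ingestion_job until the job reaches a terminal status. The poll interval and timeout are arbitrary defaults.

```python
import time

TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def sync_data_source(client, knowledge_base_id: str, data_source_id: str,
                     poll_seconds: float = 15.0, timeout_seconds: float = 3600.0,
                     sleep=time.sleep) -> dict:
    """Start an ingestion job and wait until it completes, fails, or stops."""
    job = client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id, dataSourceId=data_source_id
    )["ingestionJob"]
    job_id = job["ingestionJobId"]
    deadline = time.monotonic() + timeout_seconds
    while job["status"] not in TERMINAL_STATUSES:
        if time.monotonic() > deadline:
            raise TimeoutError(f"ingestion job {job_id} is still {job['status']}")
        sleep(poll_seconds)
        job = client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job_id,
        )["ingestionJob"]
    if job["status"] != "COMPLETE":
        raise RuntimeError(f"ingestion job {job_id} ended as {job['status']}")
    return job
```

The `sleep` parameter is injectable so the loop can be tested without waiting. The returned dictionary is the final ingestion job record, which you can inspect if the sync reports problems.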

Test the knowledge base

After completing the data sync, you can test the knowledge base directly from the Amazon Bedrock Knowledge Bases console to verify that the RAG workflow functions correctly.

On the Amazon Bedrock Knowledge Bases console, choose the newly created knowledge base, and then choose Test to open the Test Knowledge Base section, as shown in the following screenshot.

Choose Select Model and select a model of your choice. For this walkthrough, select Claude 3 Sonnet and choose Apply, as shown in the following screenshot.

Make sure you have requested and received access to the chosen FM in Amazon Bedrock. To learn more, refer to Add or remove access to Amazon Bedrock foundation models.

The knowledge base performs vector similarity search in Aurora Serverless v2 to find semantically relevant content for generating accurate, factual responses.
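To inspect the similarity search on its own, without a generated answer, you can call the Retrieve API. The following sketch assumes a Boto3 bedrock-agent-runtime client and returns the score and text of the top k chunks; k=5 is an arbitrary choice.

```python
from typing import List, Optional, Tuple


def top_chunks(client, knowledge_base_id: str, query: str,
               k: int = 5) -> List[Tuple[Optional[float], str]]:
    """Return (score, text) for the k chunks the vector search ranks highest."""
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": k}},
    )
    return [
        (result.get("score"), result["content"]["text"])
        for result in response.get("retrievalResults", [])
    ]
```

Looking at the raw chunks this way can help you judge whether a weak answer comes from retrieval or from generation.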

The following image shows the source dataset’s raw format.

The next section shows the results of testing the RAG implementation with customer support queries:

Question 1: What technical problems are customers reporting the most?

Question 2: Can you find the common causes of customer dissatisfaction related to shipping delays?

Question 3: How can customers reset their passwords if they’ve forgotten them?
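The console test panel combines retrieval and generation. A hedged sketch of running the same three questions from code uses the RetrieveAndGenerate API of a Boto3 bedrock-agent-runtime client with Claude 3 Sonnet. It assumes the on-demand model ID anthropic.claude-3-sonnet-20240229-v1:0 is available in your Region and that model access has been granted, and it returns the answer text together with the S3 URIs cited by the knowledge base.

```python
from typing import List, Tuple

QUESTIONS = [
    "What technical problems are customers reporting the most?",
    "Can you find the common causes of customer dissatisfaction related to shipping delays?",
    "How can customers reset their passwords if they've forgotten them?",
]


def claude_3_sonnet_arn(region: str) -> str:
    """Foundation model ARN for Claude 3 Sonnet (assumed on-demand model ID)."""
    return f"arn:aws:bedrock:{region}::foundation-model/anthropic.claude-3-sonnet-20240229-v1:0"


def ask(client, knowledge_base_id: str, model_arn: str,
        question: str) -> Tuple[str, List[str]]:
    """Retrieve from the knowledge base, generate an answer, and collect cited S3 URIs."""
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    )
    sources: List[str] = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return response["output"]["text"], sources
```

Looping `ask` over `QUESTIONS` reproduces the console test in a script, which is convenient for rerunning the same checks after each data sync.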

By using Amazon Bedrock Knowledge Bases with Aurora Serverless v2 as the vector store to set up the knowledge base and automate the customer support generative AI application, you can analyze customer issues and retrieve information relevant to customer inquiries. This approach shows how you can use these tools to enhance customer support and gain insights with minimal configuration.

Vector embeddings in Aurora Serverless v2

The Aurora Serverless v2 vector store created using the quick create option starts with default configurations, such as the virtual private cloud (VPC) and the minimum and maximum ACUs, which are listed in the Customize your knowledge base deployment section. The quick create option automatically creates a dedicated database, schema, and table with columns for storing the embeddings along with the metadata and text in the Aurora Serverless v2 vector store, as shown in the following screenshot.

If you want to view the vector embeddings stored in Aurora Serverless v2, you can use the query editor in the Amazon Relational Database Service (Amazon RDS) console. To retrieve the necessary database connection details, follow these steps:

- On the Amazon Bedrock Knowledge Bases console, select the knowledge base you just created and locate the database connection details in the Vector database section, as shown in the following screenshot. You need the Database name, Credential Secret ARN, Amazon Aurora DB Cluster ARN, and Table name to connect to the Aurora Serverless v2 database and view the table using the query editor

- On the Amazon RDS console, in the left-hand navigation pane, select Query Editor. Learn more about how to use the query editor in Using the Aurora query editor. Use the credential secret ARN to authenticate and connect to your Aurora Serverless v2 database. Choose Connect to database, as shown in the following screenshot

- After it’s connected, run a SELECT statement that returns a single row from the vector table displayed in the Amazon Bedrock Knowledge Bases console (by default, bedrock_integration.bedrock_knowledge_base), as shown in the following screenshot

This query displays a sample row from your vector embeddings table so you can observe the structure and contents of the stored embeddings. You can examine the embedding vectors and associated metadata to gain insights into how your data is represented and stored within the database.
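The Aurora query editor runs SQL through the RDS Data API, so you can run the same sample query from code. The following sketch assumes a Boto3 rds-data client, that the Data API is enabled on the cluster, and that you copied the Database name, Credential Secret ARN, and Amazon Aurora DB Cluster ARN from the knowledge base's Vector database section. It selects one row from the default bedrock_integration.bedrock_knowledge_base table and returns it as a dictionary keyed by column name.

```python
from typing import Any, Dict, List


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def sample_row_sql(schema: str, table: str, limit: int = 1) -> str:
    """Build a SELECT that returns `limit` rows from schema.table."""
    if not isinstance(limit, int) or limit < 1:
        raise ValueError("limit must be a positive integer")
    return f"SELECT * FROM {quote_ident(schema)}.{quote_ident(table)} LIMIT {limit};"


def fetch_sample_rows(client, cluster_arn: str, secret_arn: str, database: str,
                      schema: str = "bedrock_integration",
                      table: str = "bedrock_knowledge_base",
                      limit: int = 1) -> List[Dict[str, Any]]:
    """Run the sample query through the RDS Data API and return rows as dicts."""
    response = client.execute_statement(
        resourceArn=cluster_arn,
        secretArn=secret_arn,
        database=database,
        sql=sample_row_sql(schema, table, limit),
        includeResultMetadata=True,
    )
    columns = [col["name"] for col in response.get("columnMetadata", [])]
    rows = []
    for record in response.get("records", []):
        # Each field is a one-key dict such as {"stringValue": "..."} or {"isNull": True}.
        values = [None if field.get("isNull") else next(iter(field.values()))
                  for field in record]
        rows.append(dict(zip(columns, values)))
    return rows
```

Quoting the identifiers keeps the query safe if a schema or table name ever contains unusual characters; because quoted identifiers are case-sensitive, pass the names exactly as the console shows them.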

Customize your knowledge base deployment

The quick create option provisions your knowledge base infrastructure through a CloudFormation stack. This stack deploys the required resources, including the Aurora Serverless v2 database cluster, security groups, and AWS Identity and Access Management (IAM) roles. The following screenshot shows the resources created in the CloudFormation console:

The Aurora Serverless v2 vector store is created with several default configurations, including:

- Default VPC

- Default subnet group

- Default security group

- Aurora Standard Storage configuration

- Aurora PostgreSQL-Compatible engine with pgvector extension

- Minimum capacity of 0 ACUs (the cluster scales to zero when not in use) and maximum capacity of 16 ACUs. Aurora Serverless v2 capacity is measured in Aurora Capacity Units (ACUs); to learn more, refer to the Aurora Serverless v2 capacity documentation

- Database Name: Bedrock_Knowledge_Base_Cluster

- Schema Name: bedrock_integration

- Table Name: bedrock_knowledge_base

- HNSW index with L2 Euclidean distance measure

You have several options for customizing your knowledge base infrastructure. You can customize new Amazon Bedrock Knowledge Bases deployments with an Aurora Serverless v2 vector store using the provided AWS CloudFormation template, which lets you specify VPC configurations, subnet placements, and IAM roles, among other settings. Deploy your customized template using the AWS Management Console, AWS CLI, or AWS SDKs to align your infrastructure with organizational requirements.
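One way to replicate the quick create setup in another environment is to read the template from the existing stack and create a new stack from it with your overrides. This is a sketch under assumptions: it expects a Boto3 cloudformation client, the parameter key DBPort used in the test is hypothetical (use the keys your template actually declares, or edit the template if it declares none), and CAPABILITY_NAMED_IAM is passed because the stack creates IAM roles.

```python
import json
from typing import Dict, List


def template_body(cfn, stack_name: str) -> str:
    """Return the template of an existing stack as a string."""
    body = cfn.get_template(StackName=stack_name)["TemplateBody"]
    # Boto3 decodes JSON templates into a dict but returns YAML templates as text.
    return body if isinstance(body, str) else json.dumps(body)


def to_parameters(overrides: Dict[str, object]) -> List[Dict[str, str]]:
    """Convert {"Key": value} into CloudFormation's Parameters list format."""
    return [{"ParameterKey": k, "ParameterValue": str(v)}
            for k, v in sorted(overrides.items())]


def deploy_copy(cfn, source_stack: str, new_stack: str,
                overrides: Dict[str, object]) -> str:
    """Create `new_stack` from the template of `source_stack` and return its ID."""
    response = cfn.create_stack(
        StackName=new_stack,
        TemplateBody=template_body(cfn, source_stack),
        Parameters=to_parameters(overrides),
        # The stack includes IAM roles, so IAM capabilities must be acknowledged.
        Capabilities=["CAPABILITY_NAMED_IAM"],
    )
    return response["StackId"]
```

If the template exceeds the 51,200-byte limit for TemplateBody, upload it to S3 and pass TemplateURL instead. Before deploying a copy into the same account, also check the template for hardcoded resource names that would collide with the original stack.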

After creating the knowledge base, you can modify several configurations of your Aurora Serverless v2 cluster, such as parameter groups, security group rules, the Aurora Capacity Unit (ACU) scaling range, and maintenance windows. Any setting that you can change on an existing Aurora Serverless v2 cluster can be changed here, and the usual restrictions on settings that can't be changed still apply. For example, you can't use the Modify option on the Amazon RDS console to change the VPC of an Aurora Serverless v2 cluster.
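For example, changing the ACU scaling range after creation is a single ModifyDBCluster call. The following sketch builds the keyword arguments for a Boto3 rds client's modify_db_cluster, assuming you know the cluster identifier. Its validation only checks half-ACU steps and that the minimum does not exceed the maximum; it does not encode per-engine-version limits, so Amazon RDS remains the authority on which values it accepts.

```python
from typing import Any, Dict


def scaling_update(cluster_id: str, min_acu: float, max_acu: float,
                   apply_immediately: bool = True) -> Dict[str, Any]:
    """Build keyword arguments for rds.modify_db_cluster to change the ACU range.

    Only basic sanity checks are done here; Amazon RDS enforces the real limits.
    """
    for value in (min_acu, max_acu):
        # Aurora Serverless v2 capacity is set in 0.5 ACU steps.
        if value < 0 or value * 2 != int(value * 2):
            raise ValueError(f"{value} is not a non-negative multiple of 0.5 ACU")
    if max_acu <= 0 or min_acu > max_acu:
        raise ValueError("expected 0 <= min_acu <= max_acu and max_acu > 0")
    return {
        "DBClusterIdentifier": cluster_id,
        "ServerlessV2ScalingConfiguration": {
            "MinCapacity": float(min_acu),
            "MaxCapacity": float(max_acu),
        },
        "ApplyImmediately": apply_immediately,
    }
```

Keeping the minimum at 0 preserves the scale-to-zero behavior of the quick create defaults while raising the maximum gives the cluster more headroom under load.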

Managing and cleaning up resources with AWS CloudFormation

To manage your deployment:

- Locate your stack by its name (KnowledgeBaseQuickCreateAurora-XXX). You can find the CloudFormation stack name or stack ID in the Tags section of the newly created knowledge base

- Monitor and manage the resources through the AWS CloudFormation console

- Clean up the resources efficiently by deleting the AWS CloudFormation stack when no longer needed. For more information, see Deleting a stack from the CloudFormation console
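The lookup and cleanup steps can also be scripted. This sketch assumes a Boto3 bedrock-agent client for reading the knowledge base tags and a Boto3 cloudformation client for deletion. Because this post doesn't name the tag key that holds the stack name, the sketch scans tag values for the KnowledgeBaseQuickCreateAurora prefix instead of reading a specific key.

```python
from typing import Optional

QUICK_CREATE_PREFIX = "KnowledgeBaseQuickCreateAurora"


def find_quick_create_stack(agent, knowledge_base_arn: str) -> Optional[str]:
    """Return the first tag value that names the quick create stack, if any."""
    tags = agent.list_tags_for_resource(resourceArn=knowledge_base_arn).get("tags", {})
    for value in tags.values():
        # A stack name and a stack ID (ARN) both contain the stack name prefix.
        if QUICK_CREATE_PREFIX in value:
            return value
    return None


def delete_quick_create_stack(cfn, stack_name_or_id: str, wait: bool = True) -> None:
    """Delete the stack and optionally block until deletion finishes."""
    cfn.delete_stack(StackName=stack_name_or_id)
    if wait:
        cfn.get_waiter("stack_delete_complete").wait(StackName=stack_name_or_id)
```

Deleting the stack removes everything the stack created, so confirm on the stack's Resources tab what it owns before you run the deletion.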

Conclusion

Amazon Bedrock Knowledge Bases with Aurora Serverless v2 simplifies RAG workflow implementation through its quick create capability and automated CloudFormation deployments. It removes manual vector store setup, automates data processing and embedding generation, and scales automatically. The ability to replicate configurations across environments using CloudFormation templates promotes consistency while reducing deployment complexity.

By combining Aurora Serverless v2 with the pgvector extension, you get a secure, scalable vector storage capability to use with Amazon Bedrock. With this automated knowledge base management, developers can focus on building innovative AI applications rather than managing infrastructure. Whether you’re prototyping or deploying production workloads, this integrated solution provides a robust foundation for building next-generation RAG-based applications.

We’d love to hear about your experience with Amazon Bedrock Knowledge Bases and Aurora Serverless v2 – share your insights or questions in the comments below.

About the authors

Rinisha Marar is a Database Specialist Solutions Architect at AWS with deep expertise in Amazon RDS and Amazon Aurora. She brings extensive experience in relational database technologies. Through architectural guidance and technical expertise, Rinisha helps organizations through complex database migrations, performance tuning, and the adoption of cutting-edge features. She is passionate about enabling customers to build resilient, scalable database solutions while following AWS best practices.

Shayon Sanyal is a Principal Database Specialist Solutions Architect and a Subject Matter Expert for Amazon’s flagship relational database, Amazon Aurora. He has over 15 years of experience managing relational databases and analytics workloads. Shayon’s relentless dedication to customer success allows him to help customers design scalable, secure and robust cloud native architectures. Shayon also helps service teams with design and delivery of pioneering features, such as Generative AI.
