Large language models (LLMs) perform well on a wide range of tasks and have demonstrated several kinds of reasoning capability. Spatial reasoning in particular matters for progress toward Artificial General Intelligence (AGI) and for applications in robotics and navigation. It includes quantitative aspects (e.g., distances, angles) and qualitative aspects (e.g., relative positions like “near” or “inside”). While humans excel at these tasks, LLMs often struggle with spatial reasoning, which is an essential part of reasoning and inference and requires understanding complex relationships between objects in space.

Traditional approaches rely only on free-form prompting in a single LLM call to elicit spatial reasoning. These approaches have notable limitations and tend to fail on challenging datasets such as StepGame or SparQA, which require multi-step planning. To enhance reasoning, researchers have developed strategies like Chain of Thought (CoT) prompting and newer approaches like Visualization of Thought. More recent work that uses external tools, or that combines fact extraction with logical reasoning through neural-symbolic methods such as Answer Set Programming (ASP), offers better results. However, challenges remain: testing on limited datasets, underuse of the available methods, and weak feedback mechanisms. These problems show that more effective and better-integrated approaches are needed to improve spatial reasoning in LLMs.

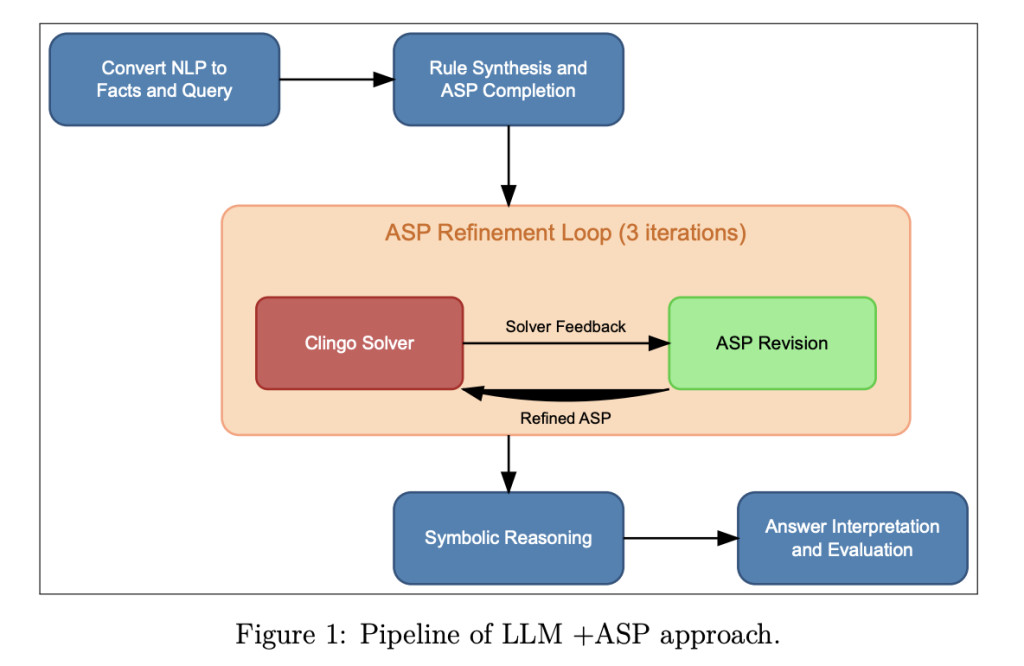

To address this, researchers from the University of Stuttgart proposed a systematic neural-symbolic framework that enhances the spatial reasoning abilities of LLMs by combining strategic prompting with symbolic reasoning. The approach integrates feedback loops and ASP-based verification to improve performance on complex tasks, and it generalizes across different LLM architectures.
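The feedback loop with verification can be pictured as a generate, check, retry cycle. The sketch below is only an illustration under stated assumptions: `solve_with_feedback`, `fake_llm`, and `fake_checker` are hypothetical names, the LLM and the ASP solver are replaced by toy stand-ins, and nothing here is taken from the paper's implementation.

```python
from typing import Callable, List, Optional, Tuple


def solve_with_feedback(
    question: str,
    generate: Callable[[str, Optional[str]], str],
    verify: Callable[[str], Tuple[bool, str]],
    max_rounds: int = 3,
) -> Tuple[Optional[str], List[str]]:
    """Ask `generate` for a candidate program, check it, and retry with the error."""
    feedback: Optional[str] = None
    errors: List[str] = []
    for _ in range(max_rounds):
        candidate = generate(question, feedback)
        ok, message = verify(candidate)
        if ok:
            return candidate, errors
        errors.append(message)
        feedback = message  # the error is passed into the next attempt
    return None, errors


# Toy stand-ins (assumptions) so the sketch runs without an LLM or a solver.
def fake_llm(question: str, feedback: Optional[str]) -> str:
    # The first attempt omits the trailing period; the retry adds it.
    return "left(a, b)." if feedback else "left(a, b)"


def fake_checker(program: str) -> Tuple[bool, str]:
    if program.strip().endswith("."):
        return True, ""
    return False, "syntax error: fact must end with '.'"


program, log = solve_with_feedback(
    "Where is A relative to B?", fake_llm, fake_checker
)
print(program)
print(log)
```

In a real pipeline the checker would be a call to a symbolic solver and the generator a call to an LLM; the point of the sketch is only that verification errors become input to the next generation round.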

The study explored methods to improve spatial reasoning in LLMs using two datasets: StepGame, with synthetic spatial questions involving up to 10 reasoning steps, and SparQA, which features complex text-based questions with diverse formats and 3D spatial relationships. Three approaches were tested: ASP for logical reasoning, an LLM+ASP pipeline combining symbolic reasoning with DSPy optimization, and a “Fact + Logical Rules” method that embeds rules in prompts to simplify computation. Tools like Clingo, DSPy, and LangChain supported the implementation, and models such as DeepSeek and GPT-4.0 mini were evaluated using metrics like micro-F1 scores, which showed that the methods adapt across models.
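For readers unfamiliar with the micro-F1 metric mentioned above, here is a minimal sketch of how it can be computed. It assumes that each answer is a set of relation labels, which the article does not specify; the function name and the sample data are made up for illustration.

```python
from typing import Iterable, Set


def micro_f1(gold: Iterable[Set[str]], pred: Iterable[Set[str]]) -> float:
    """Micro-averaged F1: pool true/false positives and false negatives
    over all questions before computing a single F1 value."""
    tp = fp = fn = 0
    for g, p in zip(gold, pred):
        tp += len(g & p)   # labels predicted and correct
        fp += len(p - g)   # labels predicted but wrong
        fn += len(g - p)   # correct labels that were missed
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Made-up example: three questions with gold and predicted relation labels.
gold = [{"left", "above"}, {"inside"}, {"near"}]
pred = [{"left"}, {"inside"}, {"far"}]
score = micro_f1(gold, pred)
print(round(score, 3))
```

Because the counts are pooled before the ratio is taken, questions with many labels weigh more than questions with one label, which is the main difference from macro-averaging.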

The “LLM + ASP” approach improved accuracy on the SparQA dataset, especially for “Finding Relation” and “Finding Block” questions, with GPT-4.0 mini performing best. “Yes/No” questions, however, were answered better with direct prompting. Error analysis revealed problems with grounding and parsing, which required optimizations specific to each model. The “Facts + Rules” method outperformed direct prompting, with an accuracy improvement of over 5% on SparQA. This method translates natural language into structured facts and then applies logical rules, and it worked especially well with Llama3 70B on extended reasoning. Overall, the neural-symbolic methods improved accuracy on both datasets, exceeding 80% on StepGame and reaching about 60% on SparQA. This is a significant improvement over baseline prompting, with accuracy increasing by 40-50% on StepGame and 3-13% on SparQA.
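The idea of turning text into structured facts and then applying rules over several hops can be sketched in plain Python. This is not the paper's rule set and it does not use Clingo: the facts are written by hand where an LLM would extract them, each relation is assumed to be a unit step on a grid, and all names are hypothetical.

```python
from collections import deque

# Assumed unit offsets for each relation ("a is <rel> of b").
OFFSETS = {"left": (-1, 0), "right": (1, 0), "above": (0, 1), "below": (0, -1)}


def relative_position(facts, source, target):
    """Return the (dx, dy) offset of `source` relative to `target`, or None."""
    edges = {}
    for rel, a, b in facts:
        dx, dy = OFFSETS[rel]
        edges.setdefault(b, []).append((a, dx, dy))     # a relative to b
        edges.setdefault(a, []).append((b, -dx, -dy))   # inverse rule
    # Breadth-first search from the target, summing offsets along each hop.
    seen = {target: (0, 0)}
    queue = deque([target])
    while queue:
        node = queue.popleft()
        x, y = seen[node]
        for nxt, dx, dy in edges.get(node, []):
            if nxt not in seen:
                seen[nxt] = (x + dx, y + dy)
                queue.append(nxt)
    return seen.get(source)


def describe(offset):
    """Map an offset to a qualitative label such as 'above-left'."""
    if offset is None:
        return "unknown"
    dx, dy = offset
    horizontal = "left" if dx < 0 else "right" if dx > 0 else ""
    vertical = "above" if dy > 0 else "below" if dy < 0 else ""
    return "-".join(part for part in (vertical, horizontal) if part) or "overlap"


# Hand-written facts standing in for what an LLM would extract from a story.
facts = [("left", "A", "B"), ("above", "B", "C"), ("right", "D", "C")]
answer = describe(relative_position(facts, "A", "D"))
print(answer)  # above-left
```

The question "Where is A relative to D?" needs three hops (A to B, B to C, C to D), which is the kind of chaining that single-call prompting tends to get wrong and that a rule-based step handles mechanically.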

The key factors for success were the separation of semantic parsing from logical reasoning, clearly defined spatial relationships, and the handling of multi-hop reasoning. Accordingly, the methodology performed much better in the simpler, well-defined StepGame environment than on the more complex, natural-language SparQA dataset.

In summary, the proposed framework boosts LLMs’ spatial reasoning capability. The experimental results improve on conventional neural-symbolic systems and raise performance on difficult spatial reasoning tasks across several different LLMs. While the approach achieved over 80% accuracy on StepGame, it averaged about 60% on the more complex SparQA, so there is room for further improvement. This work lays a foundation for future research on spatial reasoning in AI and can serve as a baseline for other researchers.


The post This AI Paper Proposes a Novel Neural-Symbolic Framework that Enhances LLMs’ Spatial Reasoning Abilities appeared first on MarkTechPost.
