Developing effective AI models is central to deep learning research, but finding optimal model architectures remains challenging and costly. Traditional manual and automated approaches rarely expand the design space beyond established architectures such as Transformers or hybrids, and the high cost of exploring a comprehensive search space limits model improvement. Manual optimization demands significant expertise and resources, while automated methods are often restricted to narrow search spaces, which hinders substantial progress across tasks.

To address these challenges, Liquid AI has developed STAR (Synthesis of Tailored Architectures), a framework aimed at automatically evolving model architectures to improve efficiency and performance. STAR reimagines the model-building process by creating a novel search space for architectures based on the theory of linear input-varying systems (LIVs). Unlike traditional methods that iterate on a limited set of known patterns, STAR provides a new way to represent model structures, enabling exploration at different hierarchical levels through what Liquid AI terms “STAR genomes.”

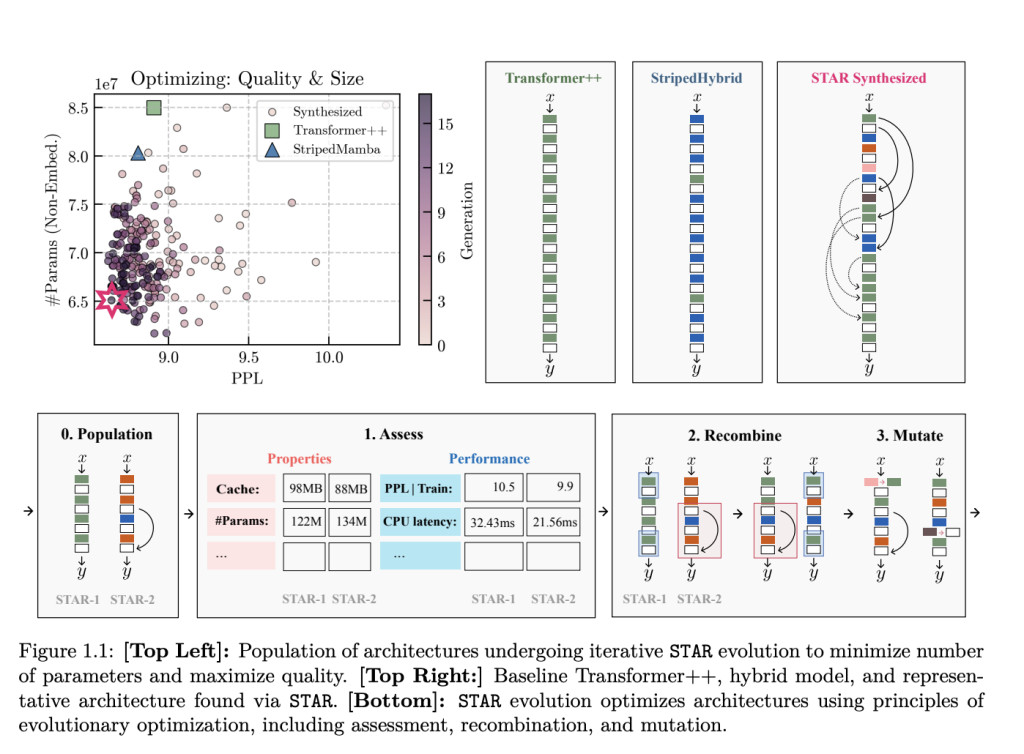

These genomes serve as a numerical encoding of architecture designs, which STAR evolves using principles from evolutionary optimization. STAR compiles and evaluates the genomes iteratively, then recombines and mutates them, so that designs are refined from one generation to the next. The core idea is to treat model architectures as dynamic entities that can evolve over generations, optimizing for metrics such as quality, efficiency, size, and inference cache size, all of which matter in modern AI applications.

Technical Insights: STAR’s Architecture and Benefits

The technical foundation of STAR lies in its representation of model architectures as hierarchical numeric sequences, or “genomes,” that define computational units and their interconnections. The search space is inspired by LIV systems, which generalize many common components of deep learning architectures, such as convolutional layers, attention mechanisms, and recurrent units. The STAR genome is composed of several levels of abstraction, including the backbone, operator, and featurizer genomes, which together determine the structure and properties of the computational units used in a model.
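To make the idea of a hierarchical numeric genome more concrete, here is a minimal Python sketch. Only the three level names (backbone, operator, featurizer) come from the description above; every field name and integer code is a hypothetical stand-in and should not be read as STAR's actual encoding.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical layout: the level names follow the article, but all fields
# and integer codes below are invented purely for illustration.

@dataclass
class FeaturizerGenome:
    token_mixing: int    # made-up code, e.g. 0 = none, 1 = diagonal, 2 = dense
    channel_mixing: int  # made-up code with the same meaning across channels

@dataclass
class OperatorGenome:
    operator_class: int  # made-up code, e.g. 0 = attention-like, 1 = convolution-like
    featurizer: FeaturizerGenome

@dataclass
class BackboneGenome:
    operators: List[OperatorGenome]  # one entry per computational unit, in order
    shares_with: List[int]           # index of the unit whose weights are reused
                                     # (a unit pointing at itself shares nothing)

def flatten(genome: BackboneGenome) -> List[int]:
    """Serialize the hierarchy into one flat integer sequence."""
    flat: List[int] = []
    for op, share in zip(genome.operators, genome.shares_with):
        flat.extend([
            op.operator_class,
            op.featurizer.token_mixing,
            op.featurizer.channel_mixing,
            share,
        ])
    return flat

genome = BackboneGenome(
    operators=[
        OperatorGenome(0, FeaturizerGenome(2, 1)),
        OperatorGenome(1, FeaturizerGenome(1, 1)),
    ],
    shares_with=[0, 1],
)
print(flatten(genome))  # -> [0, 2, 1, 0, 1, 1, 1, 1]
```

A flat integer sequence of this kind is what makes generic operations such as crossover and point mutation straightforward to apply, whatever the integers happen to mean.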

STAR optimizes these genomes through a combination of evolutionary algorithms. The process involves a series of operations: assessment, recombination, and mutation, which refine the population of architectures over time. Each architecture in the population is evaluated based on its performance on specific metrics, and the best-performing ones are recombined and mutated to form a new generation of architectures.
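The assess, recombine, and mutate cycle described above can be sketched as a toy evolutionary loop. This is a generic illustration, not STAR's implementation: the genome length, the number of choices per position, the one-point crossover, and the stand-in cost function (a simple sum, where a real system would compile, train, and measure a model) are all assumptions.

```python
import random

GENOME_LENGTH = 8  # hypothetical number of genome positions
NUM_CHOICES = 4    # hypothetical number of options per position

def assess(genome):
    # Stand-in cost (lower is better). A real system would build the
    # architecture from the genome, train it, and measure its metrics.
    return sum(genome)

def recombine(parent_a, parent_b, rng):
    # One-point crossover: prefix from one parent, suffix from the other.
    cut = rng.randrange(1, GENOME_LENGTH)
    return parent_a[:cut] + parent_b[cut:]

def mutate(genome, rng, rate=0.1):
    # Resample each position independently with probability `rate`.
    return [rng.randrange(NUM_CHOICES) if rng.random() < rate else g
            for g in genome]

def evolve(generations=20, population_size=16, seed=0):
    rng = random.Random(seed)
    population = [[rng.randrange(NUM_CHOICES) for _ in range(GENOME_LENGTH)]
                  for _ in range(population_size)]
    history = []  # best cost seen at the start of each generation
    for _ in range(generations):
        ranked = sorted(population, key=assess)
        history.append(assess(ranked[0]))
        parents = ranked[: population_size // 2]  # keep the better half
        children = [
            mutate(recombine(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return min(population, key=assess), history

best, history = evolve()
print(best, history[0], history[-1])
```

Because the better half of each generation is carried over unchanged, the best cost in `history` can never get worse from one generation to the next, which is the "refinement over time" the paragraph refers to.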

This approach enables STAR to generate diverse architectural designs. By breaking architectures down into manageable components and systematically optimizing them, STAR can design models that are efficient in both computational requirements and quality. For instance, STAR-generated architectures have shown improvements over manually tuned models such as Transformers and hybrid designs, especially when evaluated on criteria such as model size, efficiency, and inference cache requirements.

The implications of STAR are notable, especially given the challenges of scaling AI models while balancing efficiency and quality. Liquid AI’s results show that when optimizing for both quality and parameter size, STAR-evolved architectures consistently outperformed Transformer++ and hybrid models on downstream benchmarks. Specifically, STAR achieved a 13% reduction in parameter counts while maintaining or improving overall quality, measured by perplexity, across a variety of metrics and tasks.

The reduction in cache size is another important feature of STAR’s capabilities. When optimizing for quality and inference cache size, STAR-evolved models were found to have cache sizes up to 90% smaller than those of Transformer architectures while matching or surpassing them in quality. These improvements suggest that STAR’s approach of using evolutionary algorithms to synthesize architecture designs is viable and effective, particularly when optimizing for multiple metrics simultaneously.
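When two metrics such as quality and cache size are optimized together, there is usually no single best candidate, only a set of trade-offs. The article does not say which selection rule STAR uses, so the sketch below shows one common option, Pareto dominance, under that assumption. The candidate names and numbers are made up for illustration and are not results from Liquid AI.

```python
from typing import List, NamedTuple

class Candidate(NamedTuple):
    name: str
    perplexity: float  # lower is better
    cache_mb: float    # lower is better

def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is no worse than `b` on both metrics and better on one."""
    no_worse = a.perplexity <= b.perplexity and a.cache_mb <= b.cache_mb
    strictly_better = a.perplexity < b.perplexity or a.cache_mb < b.cache_mb
    return no_worse and strictly_better

def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]

# Made-up values, for illustration only.
candidates = [
    Candidate("A", 10.0, 100.0),
    Candidate("B", 10.0, 20.0),
    Candidate("C", 9.5, 60.0),
    Candidate("D", 11.0, 80.0),
]
front = pareto_front(candidates)
print([c.name for c in front])  # -> ['B', 'C']
```

Here B matches A's perplexity with a much smaller cache, so A drops out, while B and C both survive because each wins on a different metric.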

Furthermore, STAR’s ability to identify recurring architecture motifs—patterns that emerge during the evolution process—provides valuable insights into the design principles that underlie the improvements observed. This analytical capability could serve as a tool for researchers looking to understand why certain architectures perform better, ultimately driving future innovation in AI model design.
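One simple way to look for recurring motifs is to count short runs of operators that appear across evolved architectures. The article does not describe how STAR performs this analysis, so the following is only a sketch under that assumption, with hypothetical operator labels.

```python
from collections import Counter

def count_motifs(architectures, length=2):
    """Count every contiguous run of `length` operators across all designs."""
    counts = Counter()
    for ops in architectures:
        for i in range(len(ops) - length + 1):
            counts[tuple(ops[i:i + length])] += 1
    return counts

# Hypothetical operator sequences, for illustration only.
evolved = [
    ["conv", "attn", "conv", "attn"],
    ["rec", "conv", "attn", "mlp"],
    ["conv", "attn", "mlp", "rec"],
]
motifs = count_motifs(evolved)
print(motifs.most_common(1))  # -> [(('conv', 'attn'), 4)]
```

A pair that shows up far more often than chance would suggest, like the convolution-then-attention pair in this toy data, is the kind of pattern a researcher might then inspect more closely.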

Conclusion

STAR represents an important advancement in how we approach designing AI architectures. By leveraging evolutionary principles and a well-defined search space, Liquid AI has created a tool that can automatically generate tailored architectures optimized for specific needs. This framework is particularly valuable for addressing the need for efficient yet high-quality models capable of handling the diverse demands of real-world AI applications. As AI systems continue to grow in complexity, STAR’s approach offers a promising path forward—one that combines automation, adaptability, and insight to push the boundaries of AI model design.


The post Liquid AI Introduces STAR: An AI Framework for the Automated Evolution of Tailored Architectures appeared first on MarkTechPost.
