Prompt engineering refers to the practice of writing instructions to get the desired responses from foundation models (FMs). You might have to spend months experimenting and iterating on your prompts, following the best practices for each model, to achieve your desired output. Furthermore, these prompts are specific to a model and task, and performance isn’t guaranteed when they are used with a different FM. This manual effort required for prompt engineering can slow down your ability to test different models.

Today, we are excited to announce the availability of Prompt Optimization on Amazon Bedrock. With this capability, you can now optimize your prompts for several use cases with a single API call or a click of a button on the Amazon Bedrock console.

In this post, we show how to get started with this new feature through an example use case, and we discuss some performance benchmarks.

Solution overview

At the time of writing, Prompt Optimization on Amazon Bedrock supports Anthropic’s Claude 3 Haiku, Claude 3 Sonnet, Claude 3 Opus, and Claude 3.5 Sonnet models; Meta’s Llama 3 70B and Llama 3.1 70B models; Mistral’s Large model; and Amazon’s Titan Text Premier model. Prompt optimization can result in significant improvements for generative AI tasks. We ran example performance benchmarks on several tasks and discuss them later in this post.

In the following sections, we demonstrate how to use the Prompt Optimization feature. For our use case, we want to optimize a prompt that looks at a call or chat transcript, and classifies the next best action.

Use automatic prompt optimization

To get started with this feature, complete the following steps:

- On the Amazon Bedrock console, choose Prompt management in the navigation pane.

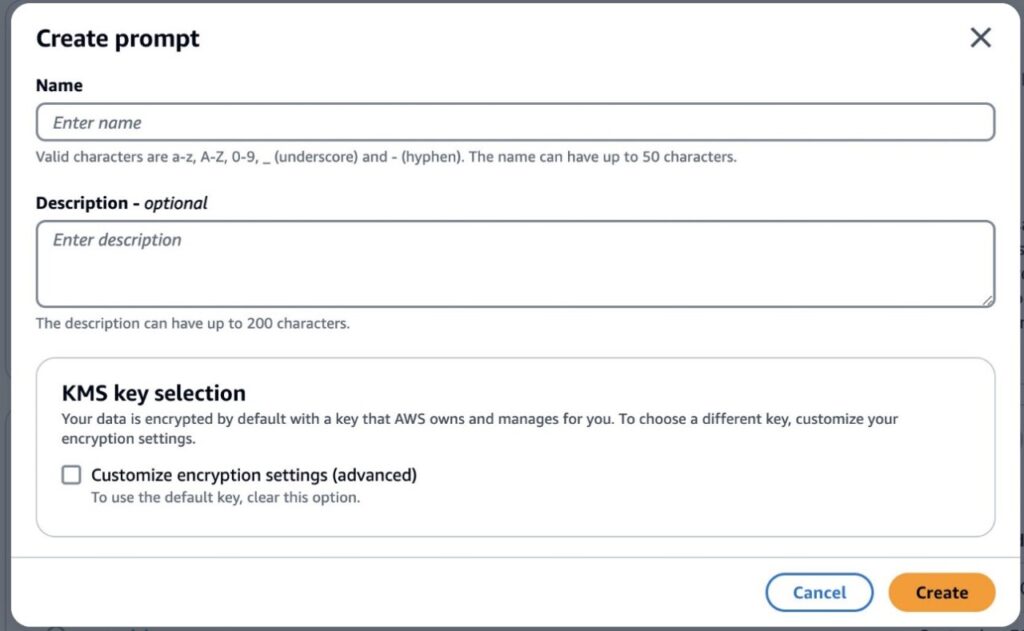

- Choose Create prompt.

- Enter a name and optional description for your prompt, then choose Create.

- For User message, enter the prompt template that you want to optimize.

For example, we want to optimize a prompt that looks at a call or chat transcript and classifies the next best action as one of the following:

- Wait for customer input

- Assign agent

- Escalate

The following screenshot shows what our prompt looks like in the prompt builder.

- In the Configurations pane, for Generative AI resource, choose Models and choose your preferred model. For this example, we use Anthropic’s Claude 3.5 Sonnet.

- Choose Optimize.

A pop-up appears that indicates that your prompt is being optimized.

When optimization is complete, you should see a side-by-side view of the original and the optimized prompt for your use case.

- Add values to your test variables (in this case, transcript) and choose Run.

You can then see the output from the model in the desired format.
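The console steps above have an API counterpart. The following is a minimal sketch, assuming the boto3 `bedrock-agent-runtime` client and its `optimize_prompt` operation are available in your SDK version and Region. The prompt text, the model ID, and the helper names (`build_request`, `extract_optimized_prompt`, `optimize`) are illustrative assumptions, not the exact prompt or code used in this post.

```python
from typing import Any, Dict, Iterable, Optional

# Hypothetical prompt for the next-best-action use case.
# {{transcript}} is the variable filled in at test time.
PROMPT_TEMPLATE = (
    "Read the following call or chat transcript and classify the next best "
    "action as one of: Wait for customer input, Assign agent, Escalate.\n\n"
    "Transcript: {{transcript}}"
)

# Assumed model ID for Claude 3.5 Sonnet; confirm the ID offered in your Region.
TARGET_MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def build_request(prompt_text: str, target_model_id: str) -> Dict[str, Any]:
    """Build the keyword arguments for the optimize_prompt call."""
    return {
        "input": {"textPrompt": {"text": prompt_text}},
        "targetModelId": target_model_id,
    }


def extract_optimized_prompt(events: Iterable[Dict[str, Any]]) -> Optional[Any]:
    """Return the payload of the last optimizedPromptEvent in the stream, if any.

    The stream can also carry analyzePromptEvent entries, which are skipped here.
    """
    optimized = None
    for event in events:
        if "optimizedPromptEvent" in event:
            optimized = event["optimizedPromptEvent"]
    return optimized


def optimize(prompt_text: str, target_model_id: str) -> Optional[Any]:
    """Call the service; requires AWS credentials and Amazon Bedrock access."""
    import boto3  # imported lazily so the helpers above work without AWS access

    client = boto3.client("bedrock-agent-runtime")
    response = client.optimize_prompt(**build_request(prompt_text, target_model_id))
    return extract_optimized_prompt(response["optimizedPrompt"])
```

Calling `optimize(PROMPT_TEMPLATE, TARGET_MODEL_ID)` from an environment with Amazon Bedrock access should return the optimized prompt payload, which you can then paste back into the prompt builder or store programmatically.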

In the console example above, the optimized prompt is more explicit, with clear instructions on how to process the original transcript provided as a variable. This results in the correct classification, in the required output format. After a prompt has been optimized, you can deploy it into an application by creating a version, which takes a snapshot of its configuration. You can store multiple versions to switch between prompt configurations for different use cases. See prompt management for more details on prompt version control and deployment.
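Saving a prompt and creating a version can also be scripted. The sketch below assumes the boto3 `bedrock-agent` client with its `create_prompt` and `create_prompt_version` operations. The prompt name, description, variant name, and helper functions are hypothetical and chosen only for illustration.

```python
from typing import Any, Dict


def build_create_prompt_request(
    name: str, template_text: str, model_id: str
) -> Dict[str, Any]:
    """Build the keyword arguments for create_prompt with one text variant."""
    variant_name = "optimized"  # hypothetical variant name
    return {
        "name": name,
        "description": "Next-best-action classifier (optimized prompt)",
        "defaultVariant": variant_name,
        "variants": [
            {
                "name": variant_name,
                "modelId": model_id,
                "templateType": "TEXT",
                "templateConfiguration": {
                    "text": {
                        "text": template_text,
                        # Declares the {{transcript}} variable used in the template.
                        "inputVariables": [{"name": "transcript"}],
                    }
                },
            }
        ],
    }


def save_and_version(request: Dict[str, Any]) -> str:
    """Create the prompt, snapshot it as a version, and return the version label.

    Requires AWS credentials and Amazon Bedrock access.
    """
    import boto3  # imported lazily so the request builder works without AWS access

    client = boto3.client("bedrock-agent")
    prompt = client.create_prompt(**request)
    version = client.create_prompt_version(promptIdentifier=prompt["id"])
    return version["version"]
```

Keeping the request builder separate from the service call makes it easy to review the payload, or to build several variants for different models, before anything is created in your account.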

Performance benchmarks

We ran the Prompt Optimization feature on several open source datasets. We are excited to share the improvements seen in a few important and common use cases that we see our customers working with: summarization, dialog continuation, and function calling.

To measure performance improvement with respect to the baseline prompts, we use ROUGE-2 F1 for the summarization use case, HELM-F1 for the dialog continuation use case, and HELM-F1 and JSON matching for function calling. We saw a performance improvement of 18% on the summarization use case, 8% on dialog continuation, and 22% on function calling benchmarks. The following table contains the detailed results.

| Use Case | Original Prompt | Optimized Prompt | Performance Improvement |
| --- | --- | --- | --- |
| Summarization | First, please read the article below. {context} Now, can you write me an extremely short abstract for it? | <task>Your task is to provide a concise 1-2 sentence summary of the given text that captures the main points or key information.</task><context>{context}</context><instructions>Please read the provided text carefully and thoroughly to understand its content. Then, generate a brief summary in your own words that is much shorter than the original text while still preserving the core ideas and essential details. The summary should be concise yet informative, capturing the essence of the text in just 1-2 sentences.</instructions><result_format>Summary: [WRITE YOUR 1-2 SENTENCE SUMMARY HERE]</result_format> | 18.04% |
| Dialog continuation | Functions available:{available_functions}Examples of calling functions:Input:Functions: [{"name": "calculate_area", "description": "Calculate the area of a shape", "parameters": {"type": "object", "properties": {"shape": {"type": "string", "description": "The type of shape (e.g. rectangle, triangle, circle)"}, "dimensions": {"type": "object", "properties": {"length": {"type": "number", "description": "The length of the shape"}, "width": {"type": "number", "description": "The width of the shape"}, "base": {"type": "number", "description": "The base of the shape"}, "height": {"type": "number", "description": "The height of the shape"}, "radius": {"type": "number", "description": "The radius of the shape"}}}}, "required": ["shape", "dimensions"]}}]Conversation history: USER: Can you calculate the area of a rectangle with a length of 5 and width of 3?Output:{"name": "calculate_area", "arguments": {"shape": "rectangle", "dimensions": {"length": 5, "width": 3}}}Input:Functions: [{"name": "search_books", "description": "Search for books based on title or author", "parameters": {"type": "object", "properties": {"search_query": {"type": "string", "description": "The title or author to search for"}}, "required": ["search_query"]}}]Conversation history: USER: I am looking for books by J.K. Rowling. Can you help me find them?Output:{"name": "search_books", "arguments": {"search_query": "J.K. Rowling"}}Input:Functions: [{"name": "calculate_age", "description": "Calculate the age based on the birthdate", "parameters": {"type": "object", "properties": {"birthdate": {"type": "string", "format": "date", "description": "The birthdate"}}, "required": ["birthdate"]}}]Conversation history: USER: Hi, I was born on 1990-05-15. Can you tell me how old I am today?Output:{"name": "calculate_age", "arguments": {"birthdate": "1990-05-15"}}Current chat history:{conversation_history}Respond to the last message. Call a function if necessary. | | 8.23% |
| Function Calling | <task_description>You are an advanced question-answering system that utilizes information from a retrieval augmented generation (RAG) system to provide accurate and relevant responses to user queries.</task_description><instructions>1. Carefully review the provided context information:<context>Domain: Restaurant Entity: THE COPPER KETTLE Review: My friend Mark took me to the copper kettle to celebrate my promotion. I decided to treat myself to Shepherds Pie. It was not as flavorful as I'd have liked and the consistency was just runny, but the servers were awesome and I enjoyed the view from the patio. I may come back to try the strawberries and cream come time for Wimbledon..Highlight: It was not as flavorful as I'd have liked and the consistency was just runny, but the servers were awesome and I enjoyed the view from the patio.Domain: Restaurant Entity: THE COPPER KETTLE Review: Last week, my colleagues and I visited THE COPPER KETTLE that serves British cuisine. We enjoyed a nice view from inside of the restaurant. The atmosphere was enjoyable and the restaurant was located in a nice area. However, the food was mediocre and was served in small portions.Highlight: We enjoyed a nice view from inside of the restaurant.</context>2. Analyze the user's question:<question>user: Howdy, I'm looking for a British restaurant for breakfast.agent: There are several British restaurants available. Would you prefer a moderate or expensive price range?user: Moderate price range please.agent: Five restaurants match your criteria. Four are in Centre area and one is in the West. Which area would you prefer?user: I would like the Center of town please.agent: How about The Copper Kettle?user: Do they offer a good view? | | 22.03% |

The consistent improvements across different tasks highlight the robustness and effectiveness of Prompt Optimization in enhancing prompt performance for various natural language processing (NLP) tasks. They also show that Prompt Optimization can save you considerable time and effort while achieving better outcomes, because you can test models with optimized prompts that implement the best practices for each model.
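For readers unfamiliar with the summarization metric named above, ROUGE-2 F1 measures bigram overlap between a generated summary and a reference summary. The following is a simplified sketch that assumes lowercased whitespace tokenization with no stemming. It illustrates the idea only and is not necessarily the exact scoring setup behind the reported numbers.

```python
from collections import Counter


def bigrams(text: str) -> Counter:
    """Count adjacent word pairs after lowercasing and whitespace tokenization."""
    tokens = text.lower().split()
    return Counter(zip(tokens, tokens[1:]))


def rouge2_f1(reference: str, candidate: str) -> float:
    """Harmonic mean of bigram precision and recall (simplified ROUGE-2 F1)."""
    ref, cand = bigrams(reference), bigrams(candidate)
    # Counter intersection keeps the minimum count of each shared bigram.
    overlap = sum((ref & cand).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```

Scoring the outputs of the original and optimized prompts against the same reference summaries, then averaging over a dataset, gives the kind of baseline comparison described in this section.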

Conclusion

Prompt Optimization on Amazon Bedrock helps you improve your prompt’s performance across a wide range of use cases with a single API call or a few clicks on the Amazon Bedrock console. The substantial improvements demonstrated on open source benchmarks for tasks like summarization, dialog continuation, and function calling underscore this new feature’s capability to streamline the prompt engineering process significantly. Prompt Optimization on Amazon Bedrock enables you to easily test many different models for your generative AI application, following the best prompt engineering practices for each model. The reduced manual effort will greatly accelerate the development of generative AI applications in your organization.

We encourage you to try out Prompt Optimization with your own use cases and reach out to us for feedback and collaboration.

About the Authors

Shreyas Subramanian is a Principal Data Scientist and helps customers by using generative AI and deep learning to solve their business challenges using AWS services. Shreyas has a background in large-scale optimization and ML and in the use of ML and reinforcement learning for accelerating optimization tasks.

Chris Pecora is a Generative AI Data Scientist at Amazon Web Services. He is passionate about building innovative products and solutions while also focusing on customer-obsessed science. When not running experiments and keeping up with the latest developments in generative AI, he loves spending time with his kids.

Zhengyuan Shen is an Applied Scientist at Amazon Bedrock, specializing in foundational models and ML modeling for complex tasks including natural language and structured data understanding. He is passionate about leveraging innovative ML solutions to enhance products or services, thereby simplifying the lives of customers through a seamless blend of science and engineering. Outside work, he enjoys sports and cooking.

Shipra Kanoria is a Principal Product Manager at AWS. She is passionate about helping customers solve their most complex problems with the power of machine learning and artificial intelligence. Before joining AWS, Shipra spent over 4 years at Amazon Alexa, where she launched many productivity-related features on the Alexa voice assistant.
