Natural neural systems have inspired innovations in machine learning and neuromorphic circuits designed for energy-efficient data processing. However, implementing the backpropagation algorithm, a foundational tool in deep learning, on neuromorphic hardware remains challenging: it requires bidirectional synapses and gradient storage, and the spikes that neuromorphic neurons emit are nondifferentiable. These issues make it difficult to achieve the precise weight updates required for learning. As a result, neuromorphic systems often depend on off-chip training, where networks are pre-trained on conventional systems and only used for inference on neuromorphic chips. This limits their adaptability, reducing their ability to learn autonomously after deployment.

To address these challenges, researchers have developed alternative learning mechanisms tailored for spiking neural networks (SNNs) and neuromorphic hardware. Techniques like surrogate gradients and spike-timing-dependent plasticity (STDP) offer biologically inspired solutions, while feedback networks and symmetric learning rules mitigate issues such as weight transport. Other approaches include hybrid systems, compartmental neuron models for error propagation, and random feedback alignment to relax weight symmetry requirements. Despite progress, these methods face hardware constraints and limited computational efficiency. Emerging strategies, including spiking backpropagation and STDP variants, promise to enable adaptive learning directly on neuromorphic systems.

Researchers from the Institute of Neuroinformatics at the University of Zurich and ETH Zurich, Forschungszentrum Jülich, Los Alamos National Laboratory, London Institute for Mathematical Sciences, and Peking University have developed the first fully on-chip implementation of the exact backpropagation algorithm on Intel’s Loihi neuromorphic processor. Leveraging synfire-gated synfire chains (SGSCs) for dynamic information coordination, this method enables SNNs to classify MNIST and Fashion MNIST datasets with competitive accuracy. The streamlined design integrates Hebbian learning mechanisms and achieves an energy-efficient, low-latency solution, setting a baseline for evaluating future neuromorphic training algorithms on modern deep learning tasks.

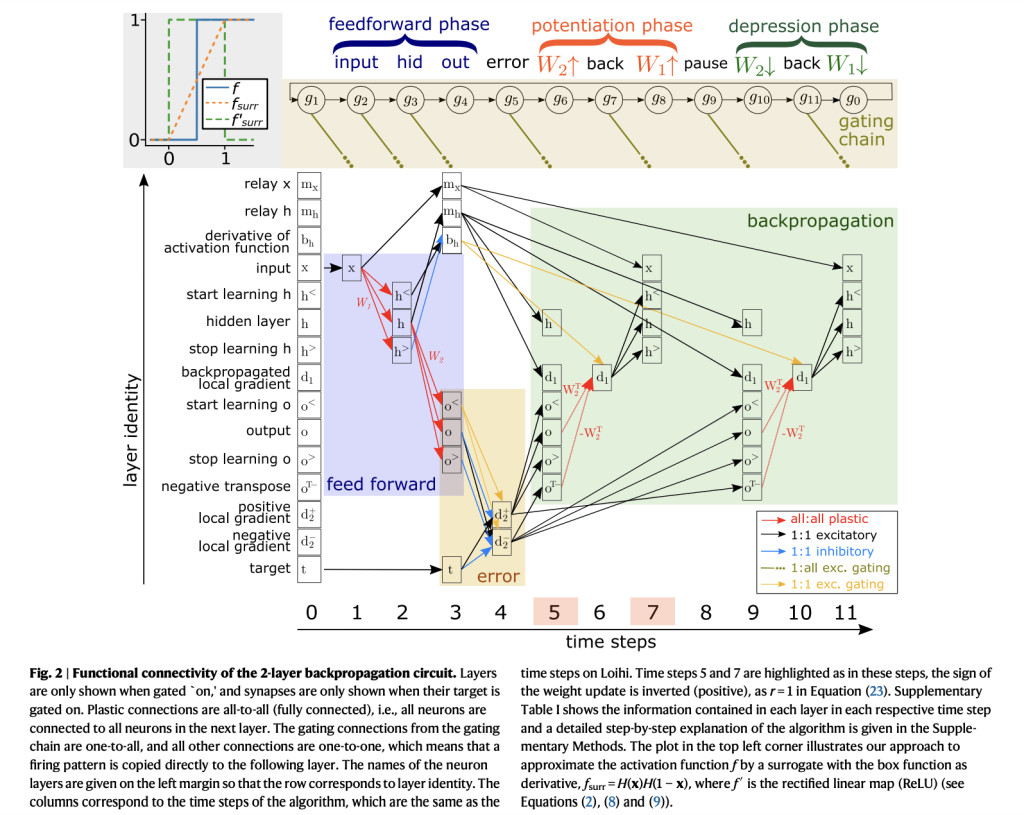

The methods section outlines the system at three levels: computation, algorithm, and hardware. A binarized backpropagation model computes network inference using weight matrices and activation functions, minimizing errors via recursive weight updates. Because the threshold function is non-differentiable, a surrogate ReLU stands in for it during the backward pass. Weights are initialized with He initialization, while MNIST data preprocessing involves cropping, thresholding, and downsampling. A spiking neural network implements these computations using a leaky integrate-and-fire neuron model on Intel’s Loihi chip. Synfire gating ensures autonomous spike routing. Learning employs a modified Hebbian rule with supervised updates controlled by gating neurons and reinforcement signals for precise temporal coordination.
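To make the computational level concrete, here is a minimal sketch of one backpropagation step with binary activations and a surrogate derivative. It is an illustration under stated assumptions, not the authors' implementation: the function names, the 0.5 firing threshold, the boxcar window of (0, 1) for the surrogate derivative, and the learning rate are all hypothetical choices, and the sketch uses floating-point weights rather than the discrete weights used on the chip.

```python
import math
import random


def he_init(n_in, n_out, rng):
    """He-style initialization: zero-mean Gaussian with std sqrt(2 / n_in)."""
    std = math.sqrt(2.0 / n_in)
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]


def binary_act(a, theta=0.5):
    """Forward pass: hard threshold, so each unit outputs 0 or 1 (assumed theta)."""
    return 1.0 if a > theta else 0.0


def surrogate_grad(a, lo=0.0, hi=1.0):
    """Backward pass: boxcar derivative (slope of a ReLU clipped to [lo, hi])."""
    return 1.0 if lo < a < hi else 0.0


def matvec(W, x):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def train_step(W1, W2, x, target, lr=0.1):
    """One backpropagation step on a single sample; updates W1 and W2 in place."""
    a1 = matvec(W1, x)
    h = [binary_act(a) for a in a1]
    a2 = matvec(W2, h)
    y = [binary_act(a) for a in a2]

    # Output error, gated by the surrogate derivative of the output units.
    d2 = [(yi - ti) * surrogate_grad(ai) for yi, ti, ai in zip(y, target, a2)]
    # Hidden error: transpose of W2 applied to d2, gated again.
    # This is computed before W2 is modified.
    d1 = [
        sum(W2[k][j] * d2[k] for k in range(len(d2))) * surrogate_grad(a1[j])
        for j in range(len(a1))
    ]

    # Gradient-descent updates: outer products of local error and input activity.
    for k, row in enumerate(W2):
        for j in range(len(row)):
            row[j] -= lr * d2[k] * h[j]
    for j, row in enumerate(W1):
        for i in range(len(row)):
            row[i] -= lr * d1[j] * x[i]
    return y
```

Each weight update is a product of a presynaptic activity and a postsynaptic error term, which is the form that a gated Hebbian rule can realize with locally available signals. A network could be set up with, for example, `W1 = he_init(4, 3, random.Random(0))` and trained by calling `train_step` once per sample.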

The binarized neuromorphic backpropagation (nBP) model was implemented on Loihi hardware, extending a previous architecture with new mechanisms. Each neural network unit was represented by a spiking neuron using the current-based leaky integrate-and-fire (CUBA) model. The network was a three-layer feedforward MLP with binary activations and discrete weights. Synfire gating controlled the information flow, enabling precise Hebbian weight updates. Training on MNIST achieved 95.7% accuracy while consuming 0.6 mJ per sample. On the Fashion MNIST dataset, the model reached 79% accuracy after 40 epochs. The network demonstrated inherent sparsity due to its spiking nature, with reduced energy use during inference.
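The current-based leaky integrate-and-fire dynamics mentioned above can be sketched in a few lines of discrete-time Python. This is a simplified floating-point illustration, not Loihi's fixed-point implementation: the function name, the decay parameters `du` and `dv`, the threshold `v_th`, and the reset-to-zero behavior are assumptions chosen for readability.

```python
def simulate_cuba_lif(input_spikes, weight=1.0, du=0.5, dv=0.25, v_th=1.0):
    """Simulate one current-based LIF neuron driven by a single input spike train.

    input_spikes: iterable of 0/1 values, one per time step.
    weight:       synaptic weight added to the current on each input spike.
    du, dv:       per-step decay fractions of synaptic current and membrane voltage.
    v_th:         firing threshold; the voltage resets to 0 after a spike.
    Returns a list of 0/1 output spikes, one per time step.
    """
    u = 0.0  # synaptic current
    v = 0.0  # membrane voltage
    out = []
    for s in input_spikes:
        # The current decays, then integrates the weighted input spike.
        u = u * (1.0 - du) + weight * s
        # The voltage decays, then integrates the current.
        v = v * (1.0 - dv) + u
        if v >= v_th:
            out.append(1)
            v = 0.0  # hard reset after firing
        else:
            out.append(0)
    return out
```

Calling `simulate_cuba_lif([1, 1, 1, 0, 0], weight=0.5, du=0.5, dv=0.5)` shows the two-stage filtering: input spikes charge the current, the current charges the voltage, and the neuron fires only once the voltage crosses the threshold. With no input the neuron stays silent, which is the source of the sparsity noted above.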

The study successfully implements the backpropagation algorithm in neuromorphic form (nBP) on Intel’s Loihi chip. The approach resolves key issues like weight transport, backward computation, gradient storage, differentiability, and hardware constraints through techniques like symmetric learning rules, synfire-gated chains, and surrogate activation functions. Evaluated on the MNIST and Fashion MNIST datasets, the algorithm reached 95.7% and 79% accuracy, respectively, at an energy cost of 0.6 mJ per sample on MNIST. This implementation highlights the potential for efficient, low-latency deep learning applications on neuromorphic processors. However, further work is needed to scale to deeper networks, convolutional models, and continual learning while addressing computational overhead.


The post On-Chip Implementation of Backpropagation for Spiking Neural Networks on Neuromorphic Hardware appeared first on MarkTechPost.
