We are excited to announce Amazon Timestream for LiveAnalytics as a newly supported target endpoint for AWS Database Migration Service (AWS DMS). This addition allows you to move time-series data from an AWS DMS supported source database to Timestream.

Timestream is a fully managed, scalable, and serverless time series database service that makes it straightforward to store and analyze trillions of events per day. Customers across a broad range of industry verticals have adopted Timestream to derive real-time insights, monitor critical business applications, and analyze millions of real-time events across websites and applications. With the migration capabilities of AWS DMS, you can now migrate your existing time series data and replicate ongoing time series data to Timestream with reduced downtime. To take advantage of the service’s high throughput ingestion capabilities, Timestream also supports AWS DMS Parallel Load and Parallel Apply features so you can migrate a large volume of data in parallel, allowing for significantly faster migrations.

In this post, we show you how to use Timestream as a target for an example PostgreSQL source endpoint in AWS DMS.

Solution overview

When creating your migration, the source database is where your data currently resides, and the target database is where it will be transferred to. In this post, we migrate data from a PostgreSQL source database to a Timestream target database. The replication instance is the component that runs the migration task, and it needs access to both the source and target within your VPC. This post guides you through setting up your Timestream database and assigning the required AWS Identity and Access Management (IAM) permissions for AWS DMS to migrate your data. When the setup is complete, you can run your task and verify that the data is migrated to your Timestream database.

Note that the AWS DMS Timestream target endpoint supports only relational database (RDBMS) sources.

Prerequisites

You should have a basic understanding of how AWS DMS works. If you’re just getting started with AWS DMS, review the AWS DMS documentation. You should also have a supported AWS DMS source endpoint and a Timestream target to perform the migration.

Set up IAM resources for Timestream

It is straightforward to set up a Timestream database as an AWS DMS target and start migrating data. To get started, you first need to create an IAM role with the necessary privileges.

- On the IAM console, choose Policies in the navigation pane.

- Choose Create policy.

- Use the following code to create your IAM policy. Update the AWS Region, account ID, and database name accordingly. For this post, we name the policy dms-timestream-access. This policy encompasses three key permissions for Timestream:

- Describe endpoints permission – Grants the ability to describe Timestream endpoints. This is crucial for using the DescribeDatabase function, which is used in conjunction with the other Timestream access permissions.

- Database description and table listing access – Allows AWS DMS to describe a specific database to verify that it exists and is accessible. It also permits listing tables within that database, which is important for identifying existing tables and creating new ones as necessary.

- Data insertion and table management capabilities – Essential for enabling AWS DMS to insert data into Timestream and to update tables, especially when the memory or magnetic store durations need to change.
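The following Python sketch builds an IAM policy document that grants the three permission groups described above. The exact action list (for example, including timestream:DescribeTable) and the way each statement is scoped to a database or table ARN are our assumptions, so compare them against the AWS DMS documentation for Timestream targets before use. The Region, account ID, and database name are placeholders.

```python
import json


def build_dms_timestream_policy(region: str, account_id: str, database: str) -> dict:
    """Build an IAM policy document for AWS DMS writing to one Timestream database.

    The action list and resource scoping are assumptions based on the three
    permission groups described in the text.
    """
    db_arn = f"arn:aws:timestream:{region}:{account_id}:database/{database}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Describe endpoints: endpoint discovery is not tied to one resource.
                "Sid": "AllowDescribeEndpoints",
                "Effect": "Allow",
                "Action": ["timestream:DescribeEndpoints"],
                "Resource": "*",
            },
            {
                # Describe the target database and list the tables it contains.
                "Sid": "AllowDescribeDatabaseAndListTables",
                "Effect": "Allow",
                "Action": ["timestream:DescribeDatabase", "timestream:ListTables"],
                "Resource": db_arn,
            },
            {
                # Insert records and create or update tables (for example, retention changes).
                "Sid": "AllowWriteAndManageTables",
                "Effect": "Allow",
                "Action": [
                    "timestream:WriteRecords",
                    "timestream:CreateTable",
                    "timestream:DescribeTable",
                    "timestream:UpdateTable",
                ],
                "Resource": f"{db_arn}/table/*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder Region, account ID, and database name.
    print(json.dumps(build_dms_timestream_policy("us-east-1", "111122223333", "sensor_db"), indent=2))
```

You can paste the printed JSON into the JSON tab of the IAM policy editor.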

Next, create the IAM role that AWS DMS will assume, and attach your IAM policy to it.


- On the IAM console, choose Roles in the navigation pane.

- Choose Create role.

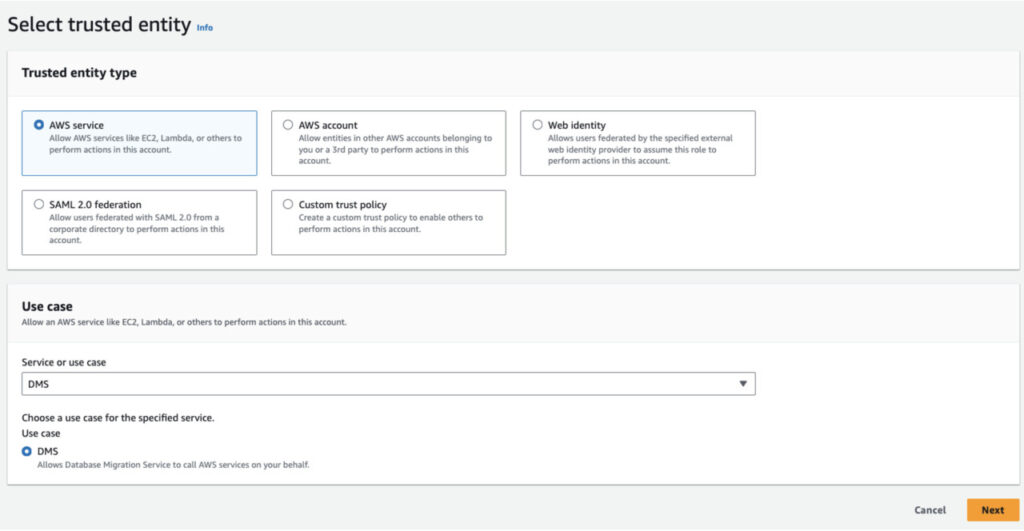

- For Trusted entity type, select AWS service.

- For Service or use case, choose DMS.

- Choose Next.

- Give the role a name (for this post, we name it dms-timestream-role).

- Attach the policy you created.

- Create the role.
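Choosing DMS as the trusted service gives the role a trust policy that names the AWS DMS service principal. The sketch below shows that trust policy, assuming the standard principal dms.amazonaws.com, along with a hypothetical helper, trusts_dms, that you could use for the trust-relationship check described later under Error handling. The function names are illustrative, not part of any AWS SDK.

```python
import json


def dms_trust_policy() -> dict:
    """Trust policy that lets the AWS DMS service principal assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "dms.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_role_request(role_name: str) -> dict:
    """Keyword arguments for boto3's iam.create_role (hypothetical helper)."""
    return {"RoleName": role_name, "AssumeRolePolicyDocument": json.dumps(dms_trust_policy())}


def trusts_dms(trust_policy: dict) -> bool:
    """Return True if any Allow statement names dms.amazonaws.com as a service principal."""
    statements = trust_policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given without a list
        statements = [statements]
    for stmt in statements:
        principal = stmt.get("Principal", {})
        if stmt.get("Effect") != "Allow" or not isinstance(principal, dict):
            continue
        services = principal.get("Service", [])
        if isinstance(services, str):
            services = [services]
        if "dms.amazonaws.com" in services:
            return True
    return False


# Usage (assumes boto3 and AWS credentials):
#   iam = boto3.client("iam")
#   iam.create_role(**create_role_request("dms-timestream-role"))
#   iam.attach_role_policy(RoleName="dms-timestream-role", PolicyArn=policy_arn)
```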

Create a Timestream database

To create a Timestream database, complete the following steps:

- On the Timestream console, choose Databases in the navigation pane.

- Choose Create database.

- For Choose a configuration, select Standard database.

- For Name, enter a name for your database.

- For KMS key, choose a key ID.

- Choose Create database.

Create a target Timestream endpoint

To set up the Timestream endpoint with AWS DMS, complete the following steps:

- On the AWS DMS console, choose Endpoints in the navigation pane.

- Choose Create endpoint.

- For Endpoint type, select Target endpoint.

- For Endpoint identifier, enter a name for your endpoint (for this post, we use timestream-target).

- For Target engine, choose Amazon Timestream.

- For Amazon Resource Name (ARN) for service access role, enter the ARN of the role you created earlier (dms-timestream-role).

- For Memory store retention, set the retention period according to your use case.

The memory store retention period in AWS DMS is measured in hours, with a supported range of 1–8,736 hours. The memory store is optimized for high-speed ingestion. As a best practice, set the memory store retention period long enough to cover the majority of the data you’re migrating.

- For Magnetic store retention, set the duration according to your use case.

The magnetic store retention period in AWS DMS is measured in days, with a supported range of 1–73,000 days. By default, AWS DMS doesn’t write to the magnetic store, but you can enable writes by setting EnableMagneticStoreWrites to true in the endpoint settings. With this setting enabled, records that are older than the memory store retention period, but within the magnetic store retention period, are written to the magnetic store for longer-term storage. The magnetic store is designed for updating historical data and doesn’t support large-volume ingestion. If you intend to load historical data into the magnetic store, we recommend using AWS DMS to migrate the data to Amazon Simple Storage Service (Amazon S3) in CSV format and then using batch load in Timestream. Records older than the magnetic store retention period are automatically deleted, so consider your retention period setting carefully.

- Optionally, you can configure CdcInsertsAndUpdates and EnableMagneticStoreWrites under Endpoint settings.

CdcInsertsAndUpdates is a Boolean field that defaults to false. When true, AWS DMS skips deletes from the source database during change data capture (CDC). When false, AWS DMS applies deletes from the source database as updates in Timestream. This is because, as of this writing, Timestream doesn’t support deleting records. Instead, AWS DMS nulls out the record by writing 0 for numeric types, NULL for VARCHAR strings, and False for Boolean values, so you can tell that the record no longer contains data. If you want to keep the record as it was before the delete, set this field to true.

EnableMagneticStoreWrites is also a Boolean field that defaults to false. When true, AWS DMS writes records that are outside the memory store retention period, but within the magnetic store retention period, directly to the magnetic store. This isn’t recommended for most use cases, because large volumes of such writes cause throttling. It’s intended for cases where some records fall outside the memory store retention period and you don’t want to skip them.

- Choose Create endpoint to create your target endpoint.
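The endpoint settings above can also be expressed as a request to the AWS DMS API. The following sketch assumes boto3's dms.create_endpoint with EngineName "timestream", a top-level ServiceAccessRoleArn, and a TimestreamSettings structure with the fields shown; verify these names against the AWS DMS API reference. It checks the retention values against the supported ranges stated in the text before building the request.

```python
# Supported retention ranges from the text.
MEMORY_HOURS_RANGE = (1, 8736)    # memory store retention, in hours
MAGNETIC_DAYS_RANGE = (1, 73000)  # magnetic store retention, in days


def _check_range(name: str, value: int, bounds: tuple) -> None:
    low, high = bounds
    if not low <= value <= high:
        raise ValueError(f"{name}={value} is outside the supported range {low}-{high}")


def timestream_endpoint_request(
    identifier: str,
    role_arn: str,
    database: str,
    memory_hours: int,
    magnetic_days: int,
    cdc_inserts_and_updates: bool = False,
    enable_magnetic_store_writes: bool = False,
) -> dict:
    """Keyword arguments for a boto3 DMS create_endpoint call (field names assumed)."""
    _check_range("memory_hours", memory_hours, MEMORY_HOURS_RANGE)
    _check_range("magnetic_days", magnetic_days, MAGNETIC_DAYS_RANGE)
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": "target",
        "EngineName": "timestream",
        "ServiceAccessRoleArn": role_arn,
        "TimestreamSettings": {
            "DatabaseName": database,
            "MemoryDuration": memory_hours,
            "MagneticDuration": magnetic_days,
            # False: source deletes are applied as nulled-out updates.
            "CdcInsertsAndUpdates": cdc_inserts_and_updates,
            # False: records outside the memory store window are not written to the magnetic store.
            "EnableMagneticStoreWrites": enable_magnetic_store_writes,
        },
    }


# Usage (assumes boto3 and AWS credentials):
#   boto3.client("dms").create_endpoint(**timestream_endpoint_request(...))
```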

Create a migration task

To create a migration task with your Timestream target endpoint, complete the following steps:

- On the AWS DMS console, choose Database migration tasks in the navigation pane.

- Choose Create database migration task.

- Specify the replication instance and source database endpoint that you’ll use with the Timestream target.

- Choose the Timestream endpoint you created as the target.

- For Migration type, choose the option that matches the type of migration you want to complete (full load, full load and CDC, or CDC only).

- In the Task settings section, select JSON editor and adjust the settings accordingly.

The code contains the following key parameters:

- ParallelLoadThreads – Determines the number of threads AWS DMS uses to initially load each table into a Timestream target, with a maximum of 32 threads

- ParallelLoadBufferSize – Sets the maximum number of records in the buffer used by parallel load threads for a Timestream target, with a default of 50 and a maximum of 1,000

- ParallelLoadQueuesPerThread – Defines the number of queues each thread accesses to process data records for batch loads to the target; the default is 1, and for Timestream targets the supported range is 5–512

- ParallelApplyThreads – Specifies the number of concurrent threads used by AWS DMS to push data to a Timestream target during a CDC load, with values ranging from 0–32

- ParallelApplyBufferSize – Indicates the maximum number of records each buffer queue can hold, used by concurrent threads to push data to a Timestream target during a CDC load, with a default of 100 and a maximum of 1,000

- ParallelApplyQueuesPerThread – Specifies the number of queues per thread for extracting data records for batch loads to a Timestream endpoint during CDC, with a range from 1–512

You can use these fields to speed up migrations by batching record writes:

- We recommend setting ParallelLoadBufferSize and ParallelApplyBufferSize to 100, because 100 is the maximum number of records per write to Timestream, so this gives you the best throughput

- Adjust the thread count depending on your throughput

- Scale the number of queues per thread with your load, but keep it relatively low (around 50) so that it doesn’t take up too much instance memory
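The following sketch merges the parallel load and apply settings into the TargetMetadata section of a task settings document, checking each value against the limits listed above. Where the text gives no minimum, we assume 0 for thread counts and 1 for buffer sizes. The starting values are illustrative: buffers of 100 match the 100-record maximum per Timestream write, queues per thread of 50 follow the guidance above, and the thread counts of 8 are placeholders you should tune.

```python
import copy
import json

# (min, max) per setting. Maximums come from the parameter list; the minimums
# for thread counts (0) and buffer sizes (1) are assumptions.
PARALLEL_LIMITS = {
    "ParallelLoadThreads": (0, 32),
    "ParallelLoadBufferSize": (1, 1000),
    "ParallelLoadQueuesPerThread": (5, 512),
    "ParallelApplyThreads": (0, 32),
    "ParallelApplyBufferSize": (1, 1000),
    "ParallelApplyQueuesPerThread": (1, 512),
}

# Illustrative starting values; thread counts are placeholders.
DEFAULTS = {
    "ParallelLoadThreads": 8,
    "ParallelLoadBufferSize": 100,
    "ParallelLoadQueuesPerThread": 50,
    "ParallelApplyThreads": 8,
    "ParallelApplyBufferSize": 100,
    "ParallelApplyQueuesPerThread": 50,
}


def apply_parallel_settings(task_settings: dict, **overrides: int) -> dict:
    """Return a copy of task_settings with validated parallel values in TargetMetadata."""
    values = {**DEFAULTS, **overrides}
    for name, value in values.items():
        if name not in PARALLEL_LIMITS:
            raise KeyError(f"unknown setting: {name}")
        low, high = PARALLEL_LIMITS[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside {low}-{high}")
    result = copy.deepcopy(task_settings)  # leave the caller's settings untouched
    result.setdefault("TargetMetadata", {}).update(values)
    return result


if __name__ == "__main__":
    print(json.dumps(apply_parallel_settings({}, ParallelApplyThreads=16), indent=2))
```

Other keys already present in TargetMetadata are preserved, so you can start from the settings shown in the JSON editor.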

The most important part when configuring an AWS DMS migration task with a Timestream target is how your source tables map to Timestream tables. Timestream uses its own kind of table-mapping rule when used as a target. Let’s explore how this works with some example data.

Say we have two tables, one called sensor_data and the other called sensors. The following screenshot shows example data in sensor_data.

The SQL command used to create this table is the following:

We populate the table with sample data using the following command:

The data in sensors isn’t important; this table is included only to show how table mappings affect Timestream migrations.

- In this example, configure table mappings in the following way:

In the preceding code, you map only the sensor_data table. You set timestream-dimensions to serial from the sample data, and set sensor_time as the timestamp name. Both timestream-dimensions and timestream-timestamp-name are required for each Timestream mapping rule. Additionally, only tables that have a Timestream mapping rule are migrated.
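As a sketch of what such a table mapping can look like, the following Python builds a mapping document with a selection rule for every table in a schema and a single object-mapping rule for sensor_data. The rule-action value map-record-to-record and the placement of the Timestream keys under mapping-parameters reflect our reading of the AWS DMS table-mapping documentation, and the schema name public (the PostgreSQL default) is an assumption; check both against your setup.

```python
import json


def timestream_table_mapping(schema, table, dimensions, timestamp_name,
                             multi_measure_name=None, hash_measure_name=False):
    """Build AWS DMS table mappings with one Timestream mapping rule (key placement assumed)."""
    params = {
        # Both keys are required for every Timestream mapping rule.
        "timestream-dimensions": list(dimensions),
        "timestream-timestamp-name": timestamp_name,
    }
    if multi_measure_name is not None:
        params["timestream-multi-measure-name"] = multi_measure_name
        params["timestream-hash-measure-name"] = hash_measure_name
    return {
        "rules": [
            {
                # Selects every table in the schema; only mapped tables are migrated.
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "1",
                "object-locator": {"schema-name": schema, "table-name": "%"},
                "rule-action": "include",
            },
            {
                "rule-type": "object-mapping",
                "rule-id": "2",
                "rule-name": "2",
                "rule-action": "map-record-to-record",
                "object-locator": {"schema-name": schema, "table-name": table},
                "mapping-parameters": params,
            },
        ]
    }


if __name__ == "__main__":
    mapping = timestream_table_mapping("public", "sensor_data", ["serial"], "sensor_time")
    print(json.dumps(mapping, indent=2))
```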

There are two types of mapping available:

- Single-measure mapping – In this case, each column that isn’t the timestamp or a dimension (serial and sensor_time) is mapped to its own record. For example, consider the table sensor_data. For a single record in the source database, there would be five records in Timestream. Each would have the same serial and sensor_time values, but there would be one record for Temperature, another for Humidity, another for Light, and so on.

- Multi-measure mapping – To use multi-measure mapping, you add a setting in the same section as "timestream-timestamp-name": "sensor_time", where you specify which source column to use as the measure name for the multi-measure record. In this case, let’s reuse serial: "timestream-multi-measure-name": "serial". This means that the value of serial is used as the measure name as well as the dimension in Timestream. The result is that for each record in the source database, there is a single record in Timestream with a dimension serial, a timestamp sensor_time, and a measure name equal to the value of serial (in the first-row example, this would be 1). This record then has a measure value for each of the remaining columns in the source table. When using multi-measure mapping, you should generally also set "timestream-hash-measure-name": true so that you don’t hit the Timestream limit of 8,192 unique measure names. For more information, refer to Recommendations for partitioning multi-measure records.

For this post, we use single-measure mapping. Because only sensor_data has a Timestream mapping rule, sensor_data is migrated and sensors is ignored, even if you include it in the first selection rule.

- Choose Create task to create your migration task with a Timestream target endpoint.
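The console steps for creating the task correspond to a single AWS DMS API call. This sketch assumes boto3's dms.create_replication_task, which takes the table mappings and task settings as JSON strings; the helper name and the ARNs you pass in are placeholders for the resources you created earlier.

```python
import json

# Migration types accepted by the AWS DMS API.
MIGRATION_TYPES = {"full-load", "cdc", "full-load-and-cdc"}


def replication_task_request(task_id, source_arn, target_arn, instance_arn,
                             migration_type, table_mappings, task_settings):
    """Keyword arguments for boto3's dms.create_replication_task (hypothetical helper)."""
    if migration_type not in MIGRATION_TYPES:
        raise ValueError(f"unsupported migration type: {migration_type}")
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": migration_type,
        # The API expects both documents serialized as JSON strings.
        "TableMappings": json.dumps(table_mappings),
        "ReplicationTaskSettings": json.dumps(task_settings),
    }


# Usage (assumes boto3 and AWS credentials):
#   boto3.client("dms").create_replication_task(**replication_task_request(...))
```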

Run your migration task

To start your task, complete the following steps:

- On the AWS DMS console, choose Database migration tasks in the navigation pane.

- Select the task you created.

- On the Actions menu, choose Start.

You can monitor the Timestream database to view the migration activity.
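You can also start and monitor the task programmatically. The sketch below assumes boto3's dms.start_replication_task (with StartReplicationTaskType "start-replication" for a first run) and dms.describe_replication_tasks filtered by the replication-task-arn filter; the helper names are hypothetical, and summarize_task simply reads the task status and full-load progress from the describe response.

```python
def start_task_request(task_arn: str) -> dict:
    """Keyword arguments for dms.start_replication_task on a task's first run."""
    return {"ReplicationTaskArn": task_arn, "StartReplicationTaskType": "start-replication"}


def describe_task_request(task_arn: str) -> dict:
    """Keyword arguments for dms.describe_replication_tasks limited to one task."""
    return {"Filters": [{"Name": "replication-task-arn", "Values": [task_arn]}]}


def summarize_task(describe_response: dict):
    """Return (status, full-load progress percent) for the first task in the response."""
    task = describe_response["ReplicationTasks"][0]
    stats = task.get("ReplicationTaskStats", {})
    return task["Status"], stats.get("FullLoadProgressPercent")


# Usage (assumes boto3 and AWS credentials):
#   dms = boto3.client("dms")
#   dms.start_replication_task(**start_task_request(task_arn))
#   print(summarize_task(dms.describe_replication_tasks(**describe_task_request(task_arn))))
```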

Validate the migration

To verify your data is in Timestream, complete the following steps:

- On the Timestream console, under Management tools in the navigation pane, choose Query editor.

- For Choose a database to query, choose the database you’re migrating to.

- To see the number of records migrated, enter the following query:

- To see some sample data, enter the following query:

Assuming you have at least 10 rows migrated, the results should show you 10 rows of the records in your selected table, as shown in the following screenshot.
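The validation queries can also be run outside the console. This sketch builds a row-count query and a 10-row sample query in Timestream SQL, quoting the database and table names with double quotes; it assumes the Timestream table is named after the source table (sensor_data here), and it simply rejects names that contain a double quote rather than escaping them. The queries can be sent with boto3's timestream-query client.

```python
def _quote(identifier: str) -> str:
    # Reject rather than escape embedded double quotes, to keep the sketch simple.
    if '"' in identifier:
        raise ValueError("identifier must not contain double quotes")
    return f'"{identifier}"'


def count_query(database: str, table: str) -> str:
    """Number of records migrated into the table."""
    return f"SELECT COUNT(*) FROM {_quote(database)}.{_quote(table)}"


def sample_query(database: str, table: str, limit: int = 10) -> str:
    """The most recent records, newest first."""
    return f"SELECT * FROM {_quote(database)}.{_quote(table)} ORDER BY time DESC LIMIT {int(limit)}"


# Usage (assumes boto3 and AWS credentials):
#   boto3.client("timestream-query").query(QueryString=count_query("sensor_db", "sensor_data"))
```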

Error handling

The following are some common issues that you might face when migrating to Timestream:

- When creating table mappings, make sure that you include timestream-dimensions and timestream-timestamp-name. Also, make sure that timestream-timestamp-name refers to an actual column in your source database table, that the column is of type timestamp, and that the name has the correct case, because it’s case-sensitive. See the following code:

- Verify that the IAM role you attached the access policy to has AWS DMS as a trusted entity. To check this, navigate to your role on the IAM console and look under Trust relationships.

- When using parallel load or parallel apply, your replication instance uses more memory. If your task fails because it runs out of memory, scale up your replication instance. For instance sizing recommendations, see Choosing the right AWS DMS replication instance for your migration.
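The first check above can be automated before you start a task. The following hypothetical helper inspects a Timestream object-mapping rule against a dictionary of source column names and PostgreSQL type names (for example, as read from information_schema.columns). It flags missing required keys, a timestamp column that doesn’t exist with the exact case, a timestamp column whose type isn’t a timestamp type, and, as an extra check beyond the text, dimension columns that don’t exist. It assumes the mapping keys live under mapping-parameters.

```python
def check_timestream_mapping(rule: dict, source_columns: dict) -> list:
    """Return a list of problems with a Timestream mapping rule (empty if none found)."""
    problems = []
    params = rule.get("mapping-parameters", {})
    dims = params.get("timestream-dimensions")
    ts = params.get("timestream-timestamp-name")
    if not dims:
        problems.append("missing timestream-dimensions")
    if not ts:
        problems.append("missing timestream-timestamp-name")
    elif ts not in source_columns:
        # The lookup is case-sensitive; suggest a column that differs only by case.
        matches = [c for c in source_columns if c.lower() == ts.lower()]
        hint = f" (did you mean {matches[0]!r}?)" if matches else ""
        problems.append(f"timestamp column {ts!r} not found{hint}")
    elif not source_columns[ts].lower().startswith("timestamp"):
        problems.append(f"timestamp column {ts!r} has type {source_columns[ts]!r}, not timestamp")
    for d in dims or []:
        if d not in source_columns:
            problems.append(f"dimension column {d!r} not found")
    return problems
```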

Clean up

Now that the migration is complete, it’s time to clean up the AWS DMS, Timestream, and IAM resources:

- On the AWS DMS console, choose Database migration tasks in the navigation pane.

- Select the replication task you created and on the Actions menu, choose Delete.

- On the AWS DMS console, choose Endpoints in the navigation pane.

- Select the endpoint you created and on the Actions menu, choose Delete.

- On the AWS DMS console, choose Replication instances in the navigation pane.

- Select the replication instance you created and on the Actions menu, choose Delete.

- On the Timestream console, choose Databases in the navigation pane.

- Select the database you want to delete and choose Delete.

- On the IAM console, choose Roles in the navigation pane.

- Select the role you created and choose Delete.

- On the IAM console, choose Policies in the navigation pane.

- Select the policy you created and choose Delete.

After you finish these steps, all the resources you created for this post should be cleaned up!
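If you created the resources with scripts, you can clean them up the same way. This sketch produces an ordered list of API calls rather than calling AWS directly. It assumes the boto3 operations named in the plan, that all Timestream tables must be deleted before their database, and that a managed policy must be detached from a role before either can be deleted through the API. AWS DMS deletes the task asynchronously, so in practice you should wait for the task deletion to finish before deleting the endpoints and replication instance; that wait is not shown.

```python
def cleanup_plan(task_arn, endpoint_arns, instance_arn, database, tables, role_name, policy_arn):
    """Return (boto3 client name, operation, kwargs) tuples in a safe deletion order."""
    plan = [("dms", "delete_replication_task", {"ReplicationTaskArn": task_arn})]
    # Wait for the task to be fully deleted before running the next steps (not shown).
    plan += [("dms", "delete_endpoint", {"EndpointArn": arn}) for arn in endpoint_arns]
    plan.append(("dms", "delete_replication_instance", {"ReplicationInstanceArn": instance_arn}))
    # Tables first, then the database that contains them.
    plan += [("timestream-write", "delete_table", {"DatabaseName": database, "TableName": t})
             for t in tables]
    plan.append(("timestream-write", "delete_database", {"DatabaseName": database}))
    # Detach the policy before deleting the role and the policy.
    plan += [
        ("iam", "detach_role_policy", {"RoleName": role_name, "PolicyArn": policy_arn}),
        ("iam", "delete_role", {"RoleName": role_name}),
        ("iam", "delete_policy", {"PolicyArn": policy_arn}),
    ]
    return plan


# Usage (assumes boto3 and AWS credentials):
#   clients = {name: boto3.client(name) for name in ("dms", "timestream-write", "iam")}
#   for service, operation, kwargs in cleanup_plan(...):
#       getattr(clients[service], operation)(**kwargs)
```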

Conclusion

In this post, we showed you how to set up an AWS DMS migration by creating a Timestream database, giving AWS DMS the necessary permissions to migrate to it, and creating the AWS DMS replication instance, endpoints, and task. You then ran that task and verified that the data was successfully migrated to your Timestream database. You can now run this task on your own workload. The final steps (creating and running your migration task) are the same as for any other AWS DMS task, so you can run a full end-to-end migration from any supported relational database and have the data appear in your Timestream database.

Let us know in the comments section what you are going to build with this new migration capability!

About the author

Matthew Carroll is a Software Development Engineer with the AWS DMS team at AWS. He is dedicated to enhancing the versatility and scale of the tools used to migrate customer data across databases. He is the lead developer for the Amazon Timestream endpoint feature in AWS DMS.

