In recent years, there has been a growing demand for machine learning models that handle visual and language tasks effectively without relying on large, cumbersome infrastructure. The challenge lies in balancing performance with resource requirements, particularly on laptops, consumer GPUs, and mobile devices. Many vision-language models (VLMs) require significant computational power and memory, making them impractical for on-device applications. Models such as Qwen2-VL, although performant, require expensive hardware and substantial GPU RAM, which limits their accessibility and practicality for real-time, on-device tasks. This has created a need for lightweight models that provide strong performance with minimal resources.

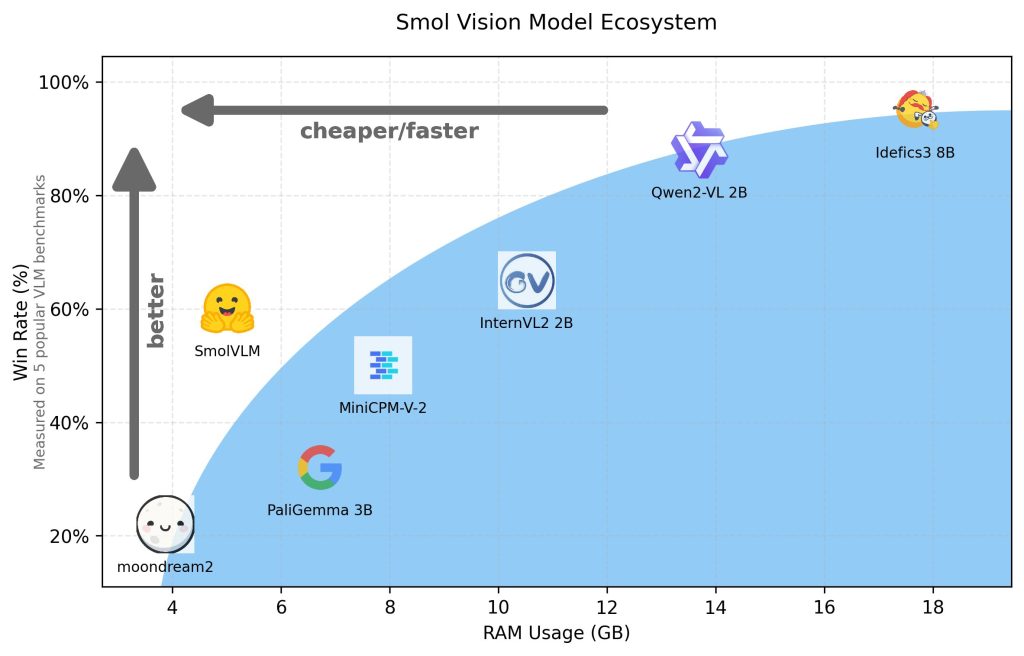

Hugging Face recently released SmolVLM, a 2B parameter vision-language model specifically designed for on-device inference. SmolVLM outperforms other models with comparable GPU RAM usage and token throughput. Its key feature is the ability to run effectively on smaller devices, including laptops and consumer-grade GPUs, without compromising performance. It strikes a balance between performance and efficiency that has been difficult to reach with models of similar size and capability. Compared with Qwen2-VL 2B, SmolVLM generates tokens 7.5 to 16 times faster, owing to an optimized architecture that favors lightweight inference. This efficiency translates into practical advantages for end users.

Technical Overview

From a technical standpoint, SmolVLM has an optimized architecture that enables efficient on-device inference. It can be fine-tuned easily using Google Colab, making it accessible for experimentation and development even to those with limited resources. It is lightweight enough to run smoothly on a laptop or to process millions of documents on a consumer GPU. One of its main advantages is its small memory footprint, which makes it feasible to deploy on devices that previously could not handle models of this size. The efficiency shows in its token generation throughput: SmolVLM generates tokens 7.5 to 16 times faster than Qwen2-VL. This gain comes primarily from SmolVLM’s streamlined architecture, which optimizes image encoding and inference speed. Although it has the same number of parameters as Qwen2-VL 2B, SmolVLM’s efficient image encoding keeps it from overloading devices, an issue that frequently causes Qwen2-VL to crash systems like the MacBook Pro M3.
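To make the throughput comparison concrete, here is a minimal Python sketch of how tokens per second and a relative speedup could be measured. It is not the benchmark Hugging Face ran: `tokens_per_second`, `speedup`, and the `generate` callable are hypothetical names introduced for illustration, and `generate` stands in for whatever function runs a model and returns its generated token IDs.

```python
import time
from typing import Callable, Sequence


def tokens_per_second(generate: Callable[[], Sequence[int]], runs: int = 3) -> float:
    """Average generated tokens per second over several timed runs.

    `generate` is a placeholder (an assumption, not a real API) for any
    zero-argument function that runs a model and returns the new token IDs.
    """
    if runs <= 0:
        raise ValueError("runs must be positive")
    total_tokens = 0
    total_seconds = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate()
        total_seconds += time.perf_counter() - start
        total_tokens += len(tokens)
    if total_seconds <= 0:
        raise ValueError("elapsed time too small to measure")
    return total_tokens / total_seconds


def speedup(candidate_tps: float, baseline_tps: float) -> float:
    """How many times faster the candidate is than the baseline."""
    if baseline_tps <= 0:
        raise ValueError("baseline throughput must be positive")
    return candidate_tps / baseline_tps
```

Under these assumptions, a model measured at 150 tokens per second against a baseline at 20 tokens per second would give `speedup(150.0, 20.0)`, that is 7.5, the lower end of the range quoted above. The numbers in this usage note are illustrative, not measurements.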

The significance of SmolVLM lies in its ability to provide high-quality vision-language inference without powerful hardware. This is an important step for researchers, developers, and hobbyists who wish to experiment with vision-language tasks without investing in expensive GPUs. In tests conducted by the team, SmolVLM was first evaluated on 50 frames from a YouTube video, and the results justified further testing on CinePile, a benchmark that assesses a model’s ability to understand cinematic visuals. There, SmolVLM scored 27.14%, placing it between two more resource-intensive models: InternVL2 (2B) and Video-LLaVA (7B). Notably, SmolVLM was not trained on video data, yet it performed comparably to models designed for such tasks, demonstrating its robustness and versatility. Moreover, SmolVLM achieves these efficiency gains while maintaining accuracy and output quality, showing that smaller models need not sacrifice performance.
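The article does not say how the 50 frames were chosen. As a rough sketch only, one common approach is to sample frames evenly across the video; the function below assumes that strategy, and `sample_frame_indices` is a hypothetical helper, not part of SmolVLM or any Hugging Face library.

```python
from typing import List


def sample_frame_indices(total_frames: int, num_samples: int = 50) -> List[int]:
    """Pick up to `num_samples` evenly spaced frame indices from a video.

    Assumption: uniform sampling. The source only states that 50 frames
    were used, not how they were selected.
    """
    if total_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= total_frames:
        # Short video: use every frame.
        return list(range(total_frames))
    step = total_frames / num_samples
    # Take the frame at the middle of each of the equal-width segments.
    return [int(step * i + step / 2) for i in range(num_samples)]
```

For a 3,000-frame clip, this yields 50 indices spaced 60 frames apart, starting at frame 30. The selected frames would then be decoded and passed to the model as a sequence of images.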

Conclusion

SmolVLM represents a significant advancement in the field of vision-language models. By enabling complex VLM tasks to run on everyday devices, Hugging Face has addressed an important gap in the current landscape of AI tools. SmolVLM competes well with other models in its class and often surpasses them in speed, efficiency, and practicality for on-device use. With its compact design and efficient token throughput, it should be a valuable tool for those who need robust vision-language processing without access to high-end hardware. As AI becomes more personalized and ubiquitous, models like SmolVLM have the potential to broaden the use of VLMs and make powerful machine learning accessible to a wider audience.

The post Hugging Face Releases SmolVLM: A 2B Parameter Vision-Language Model for On-Device Inference appeared first on MarkTechPost.
