Creating, editing, and transforming music and sounds present both technical and creative challenges. Current AI models often struggle with versatility, specializing in narrow tasks or lacking the ability to generalize effectively. This limits AI-assisted production and hinders creative adaptability. For AI to genuinely contribute to music and audio production, it must be versatile, compositional, and responsive to creative prompts, allowing artists to craft unique sounds. There is a clear need for a generalist model that can navigate the nuances of audio and text interaction, perform creative transformations, and deliver high-quality output.

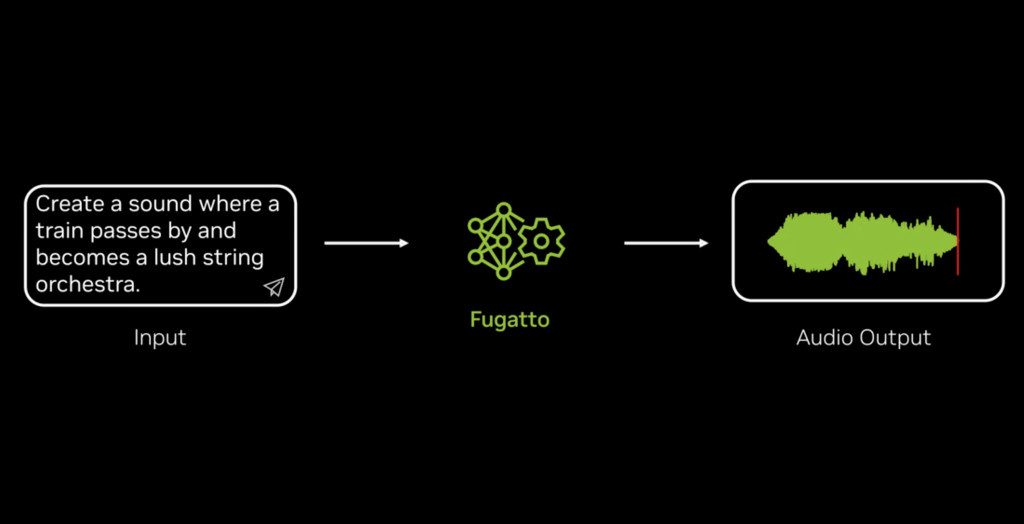

NVIDIA has introduced Fugatto, an AI model with 2.5 billion parameters designed for generating and manipulating music, voices, and sounds. Fugatto combines text prompts with audio synthesis, so existing sounds can be reshaped freely for creative experimentation, such as turning a piano line into a singing human voice or making a trumpet produce unexpected sounds.

The model supports both text and optional audio inputs, enabling it to create and manipulate sounds in ways that go beyond conventional audio generation models. This versatile approach allows for real-time experimentation, enabling artists and developers to generate new types of sounds or modify existing audio fluidly. NVIDIA’s emphasis on flexibility allows Fugatto to excel at tasks involving complex compositional transformations, making it a valuable tool for artists and audio producers.

Technical Details

Fugatto is trained with a data generation approach that extends beyond conventional supervised learning. Its training involved not just regular datasets but also a specialized dataset generation technique that creates a wide range of audio generation and transformation tasks. Large language models (LLMs) are used to enrich the generated instructions, which helps the model interpret the relationship between audio and textual prompts. This dataset enrichment strategy lets Fugatto learn from diverse contexts and gives it a robust foundation for multitask learning.

A key innovation is the Composable Audio Representation Transformation (ComposableART), an inference-time technique developed to extend classifier-free guidance to compositional instructions. This enables Fugatto to combine, interpolate, or negate different audio generation instructions smoothly, opening new possibilities in sound creation. ComposableART provides a high level of control over synthesis, allowing users to navigate Fugatto’s sonic palette with precision, blending different sounds and generating unique sonic phenomena.
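The idea of extending classifier-free guidance to several instructions can be sketched as follows. This is a minimal illustration of the generic weighted form (one unconditional prediction plus a weighted sum of conditional offsets), not NVIDIA's implementation; the function name `compose_guidance` and the toy vectors standing in for model outputs are assumptions made for the example.

```python
from typing import Sequence


def compose_guidance(
    uncond: Sequence[float],
    conds: Sequence[Sequence[float]],
    weights: Sequence[float],
) -> list[float]:
    """Combine several conditional predictions around one unconditional one.

    Computes: out = uncond + sum_k weights[k] * (conds[k] - uncond)
    With a single instruction this is ordinary classifier-free guidance.
    A negative weight pushes the result away from that instruction.
    """
    if len(conds) != len(weights):
        raise ValueError("need exactly one weight per conditional prediction")
    out = list(uncond)
    for cond, w in zip(conds, weights):
        if len(cond) != len(uncond):
            raise ValueError("all predictions must have the same length")
        for i, (c, u) in enumerate(zip(cond, uncond)):
            out[i] += w * (c - u)
    return out


# Toy stand-ins for what a model might predict under each prompt (hypothetical).
uncond = [0.0, 0.0]
piano = [1.0, 0.0]
voice = [0.0, 1.0]

blend = compose_guidance(uncond, [piano, voice], [0.5, 0.5])    # interpolate
negate = compose_guidance(uncond, [piano, voice], [1.0, -1.0])  # piano, not voice
```

In this sketch, combining, interpolating, and negating instructions all reduce to choosing the weights: equal positive weights blend two prompts, and a negative weight steers the output away from one.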

Fugatto’s architecture leverages Transformer models enhanced by specific modifications like Adaptive Layer Normalization, which helps maintain consistency across diverse inputs and supports compositional instructions better than existing models. This translates into a model capable of tasks like singing synthesis, sound transformations, and effects manipulations, making it suitable for a wide range of audio applications.
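For readers unfamiliar with Adaptive Layer Normalization, the general technique can be sketched as below: the input is layer-normalized, then scaled and shifted by values predicted from a conditioning vector. This shows the commonly used generic form only. How Fugatto wires it into its Transformer, and what it conditions on, is not described in this article, so every name and shape here is an assumption.

```python
import math
from typing import Sequence


def layer_norm(x: Sequence[float], eps: float = 1e-5) -> list[float]:
    """Normalize a feature vector to zero mean and (near) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def linear(
    weight: Sequence[Sequence[float]],
    bias: Sequence[float],
    c: Sequence[float],
) -> list[float]:
    """Plain affine map: one output per weight row."""
    return [sum(w * ci for w, ci in zip(row, c)) + b for row, b in zip(weight, bias)]


def adaptive_layer_norm(
    x: Sequence[float],
    cond: Sequence[float],
    w_scale: Sequence[Sequence[float]],
    b_scale: Sequence[float],
    w_shift: Sequence[Sequence[float]],
    b_shift: Sequence[float],
) -> list[float]:
    """Generic AdaLN: (1 + scale(cond)) * LayerNorm(x) + shift(cond)."""
    scale = linear(w_scale, b_scale, cond)  # per-feature scale from the condition
    shift = linear(w_shift, b_shift, cond)  # per-feature shift from the condition
    normed = layer_norm(x)
    return [(1.0 + s) * n + t for n, s, t in zip(normed, scale, shift)]


# Hypothetical sizes: 2 features, 3-dimensional conditioning vector.
zeros = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
x = [1.0, 3.0]
cond = [0.5, -0.5, 1.0]
plain = adaptive_layer_norm(x, cond, zeros, [0.0, 0.0], zeros, [0.0, 0.0])
```

With all projection weights and biases at zero, the function falls back to ordinary layer normalization, which makes the conditioning path easy to see: any non-zero scale or shift comes entirely from `cond`.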

Fugatto’s versatility comes from being a generalist. Specialized models have traditionally required manual intervention or narrowly defined tasks, and they often lack the flexibility needed for creative experimentation. Fugatto, by contrast, can be adapted to many purposes within a single model. Early tests show that it performs competitively with specialized models on common benchmarks, but its real strength lies in its emergent abilities.

The results have been promising: Fugatto’s evaluations indicate competitive or superior performance compared to specialized models for audio synthesis and transformation. When tasked with synthesizing new sounds or following compositional instructions, it matched or exceeded those models on several benchmarks. It has also demonstrated the ability to create novel sounds, such as a saxophone with unusual characteristics, and to generate speech that integrates smoothly with background soundscapes, tasks that were previously challenging for other models.

Furthermore, Fugatto’s ability to generate emergent sounds—sonic phenomena that go beyond typical training data—opens new possibilities for creative sound design. Its use of ComposableART for compositional synthesis means users can merge multiple attributes dynamically, making it a valuable tool for audio producers seeking creative control.

Conclusion

Fugatto is a notable advancement in generative AI for audio, offering capabilities that challenge traditional limits and enhance creative sound manipulation. NVIDIA has integrated large language models with the intricacies of sound and music, resulting in a tool that is both powerful and versatile. Fugatto’s ability to manage nuanced audio tasks, from straightforward sound generation to complex compositional modifications, makes it a valuable contribution to the future of creative AI tools. This model has significant implications not only for artists but also for industries such as gaming, entertainment, and education, where AI tools are increasingly supporting and inspiring human creativity.

Check out the Paper and NVIDIA Blog. All credit for this research goes to the researchers of this project.

The post NVIDIA AI Unveils Fugatto: A 2.5 Billion Parameter Audio Model that Generates Music, Voice, and Sound from Text and Audio Input appeared first on MarkTechPost.
