Diffusion models have emerged as transformative tools in machine learning, providing unparalleled capabilities for generating high-quality samples across domains such as image synthesis, molecule design, and audio creation. These models function by iteratively refining noisy data to match desired distributions, leveraging advanced denoising processes. With their scalability to vast datasets and applicability to diverse tasks, diffusion models are increasingly regarded as foundational in generative modeling. However, their practical application in conditional generation remains a significant challenge, especially when outputs must satisfy specific user-defined criteria.

A major obstacle in diffusion modeling lies in conditional generation, where models must tailor outputs to match attributes such as labels, energies, or features without additional retraining. Traditional methods, including classifier-based and classifier-free guidance, often involve training specialized predictors for each conditioning signal. While effective, these approaches are computationally intensive and lack flexibility, particularly when applied to novel datasets or tasks. The absence of unified frameworks or systematic benchmarks further complicates their broader adoption. This creates a critical need for more efficient and adaptable methods to expand the utility of diffusion models in real-world applications.

Existing methodologies in training-based guidance rely heavily on pre-trained conditional predictors embedded into the denoising process. For example, classifier-based guidance uses noise-conditioned classifiers, while classifier-free guidance incorporates conditioning signals directly into diffusion model training. While theoretically sound, these approaches require significant computational resources and retraining efforts for every new condition. Also, existing methods frequently need to catch up in handling complex or fine-grained conditions, as evidenced by their limited success on datasets like CIFAR10 or scenarios demanding out-of-distribution generalization. The need for methods that bypass retraining while maintaining high performance is evident.

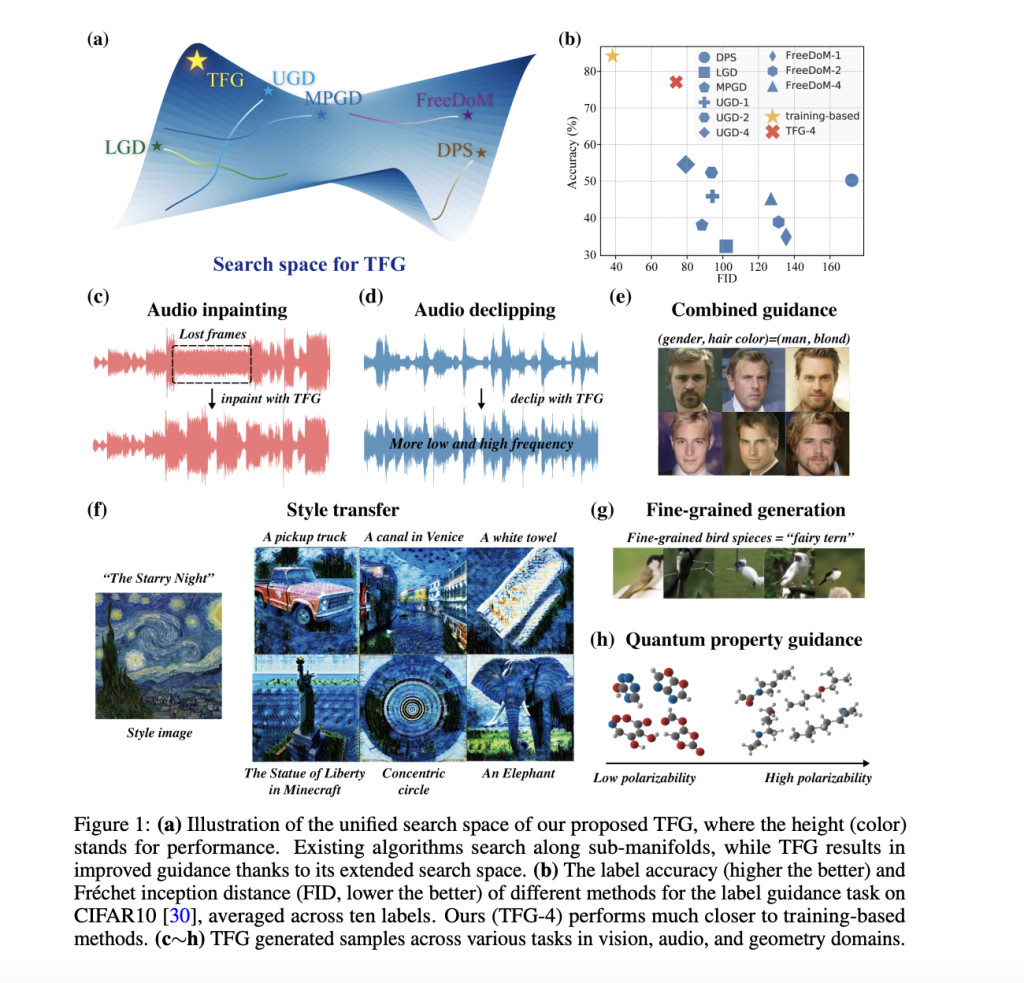

Researchers from Stanford University, Peking University, and Tsinghua University introduced a new framework called Training-Free Guidance (TFG). This algorithmic innovation unifies existing conditional generation methods into a single design space, eliminating the need for retraining while enhancing flexibility and performance. TFG reframes conditional generation as a problem of optimizing hyper-parameters within a unified framework, which can be applied seamlessly to various tasks. By integrating tools like mean guidance, variance guidance, and implicit dynamic modeling, TFG expands the design space available for training-free conditional generation, offering a robust alternative to traditional approaches.

TFG achieves its efficiency by guiding the diffusion process using hyper-parameters rather than specialized training. The method employs advanced techniques such as recurrent refinement, where the model iteratively denoises and regenerates samples to improve their alignment with target properties. Key elements like implicit dynamic modeling add noise to guidance functions to drive predictions toward high-density regions, while variance guidance incorporates second-order information to enhance gradient stability. By combining these features, TFG simplifies the conditional generation process and enables its application to previously inaccessible domains, including fine-grained label guidance and molecule generation.

The framework’s effectiveness was rigorously validated through comprehensive benchmarking across seven diffusion models and 16 tasks, encompassing 40 individual targets. TFG delivered an 8.5% average improvement in performance over existing methods. For instance, in CIFAR10 label guidance tasks, TFG achieved an accuracy of 77.1% compared to 52% for earlier approaches without recurrence. On ImageNet, TFG’s label guidance reached 59.8% accuracy, showcasing its superiority in handling challenging datasets. Its results in molecule property optimization were particularly notable, with improvements of 5.64% in mean absolute error over competing methods. TFG also excelled in multi-condition tasks, such as guiding facial image generation based on combinations of gender and age or hair color, outperforming existing models while mitigating dataset biases.

Key Takeaways from the Research:

- Efficiency Gains: TFG eliminates the need for retraining, significantly reducing computational costs while maintaining high accuracy across tasks.

- Broad Applicability: The framework demonstrated superior performance in diverse domains, including CIFAR10 (77.1% accuracy), ImageNet (59.8% accuracy), and molecule generation (5.64% improvement in MAE).

- Robust Benchmarks: Comprehensive testing on seven models, 16 tasks, and 40 targets sets a new standard for evaluating diffusion models.

- Innovative Techniques: This technique incorporates mean and variance guidance, implicit dynamic modeling, and recurrent refinement to enhance sample quality.

- Bias Mitigation: Successfully addressed dataset imbalances in multi-condition tasks, achieving 46.7% accuracy for rare classes such as “male + blonde hair.â€

- Scalable Design: The hyper-parameter optimization approach ensures scalability to new tasks and datasets without compromising performance.

In conclusion, TFG represents a significant breakthrough in diffusion modeling by addressing key limitations in conditional generation. Unifying diverse methods into a single framework streamlines the adaptation of diffusion models to various tasks without additional training. Its performance across vision, audio, and molecular domains highlights its versatility and potential as a foundational tool in machine learning. The study advances the state-of-the-art diffusion models and establishes a robust benchmark for future research, paving the way for more accessible and efficient generative modeling.

Check out the Paper here. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

[FREE AI VIRTUAL CONFERENCE] SmallCon: Free Virtual GenAI Conference ft. Meta, Mistral, Salesforce, Harvey AI & more. Join us on Dec 11th for this free virtual event to learn what it takes to build big with small models from AI trailblazers like Meta, Mistral AI, Salesforce, Harvey AI, Upstage, Nubank, Nvidia, Hugging Face, and more.

The post Training-Free Guidance (TFG): A Unified Machine Learning Framework Transforming Conditional Generation in Diffusion Models with Enhanced Efficiency and Versatility Across Domains appeared first on MarkTechPost.

Source: Read MoreÂ