Language and vision models have advanced rapidly since the introduction of the Transformer architecture. Models like BERT and GPT have reshaped natural language processing, while Vision Transformers have achieved strong results in computer vision tasks. The architecture has also been applied to recommendation systems through models like SASRec and BERT4Rec. Despite these academic results, deploying such models in large-scale industrial applications remains difficult, particularly on platforms like Kuaishou’s short-video recommendation system, where real-time adaptation and complex user behavior patterns demand more than the standard academic setups provide.

Industrial recommendation systems typically operate in two stages: retrieval and ranking. The retrieval stage efficiently selects candidate items from very large pools using lightweight dual-tower architectures, in which user and item features are processed separately. The ranking stage then applies heavier models to score this filtered subset. The field has evolved from traditional collaborative filtering to deep learning approaches, and sequential modeling has become a crucial component. Transformer-based models like SASRec and BERT4Rec capture user behavior patterns through self-attention, applied left to right in SASRec and bidirectionally in BERT4Rec.
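To make the dual-tower retrieval idea concrete, here is a minimal sketch. It assumes the two towers have already produced embeddings and that items are scored by inner product; the item ids, vectors, and the `retrieve_top_k` helper are hypothetical and are not taken from the KuaiFormer paper.

```python
import heapq


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def retrieve_top_k(user_vec, item_vecs, k):
    """Return the ids of the k items with the highest inner-product score."""
    scored = ((dot(user_vec, vec), item_id) for item_id, vec in item_vecs.items())
    return [item_id for _, item_id in heapq.nlargest(k, scored)]


# Hypothetical 3-dimensional outputs of the item tower.
item_vecs = {
    "video_a": [0.9, 0.1, 0.0],
    "video_b": [0.1, 0.8, 0.1],
    "video_c": [0.7, 0.2, 0.1],
    "video_d": [0.0, 0.1, 0.9],
}

# Hypothetical output of the user tower for one request.
user_vec = [1.0, 0.2, 0.0]

candidates = retrieve_top_k(user_vec, item_vecs, k=2)
print(candidates)
```

Because the towers never see each other's features, item vectors can be computed ahead of time and only the user vector needs to be produced per request. A production system would replace the exhaustive scan with an indexed nearest-neighbor search.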

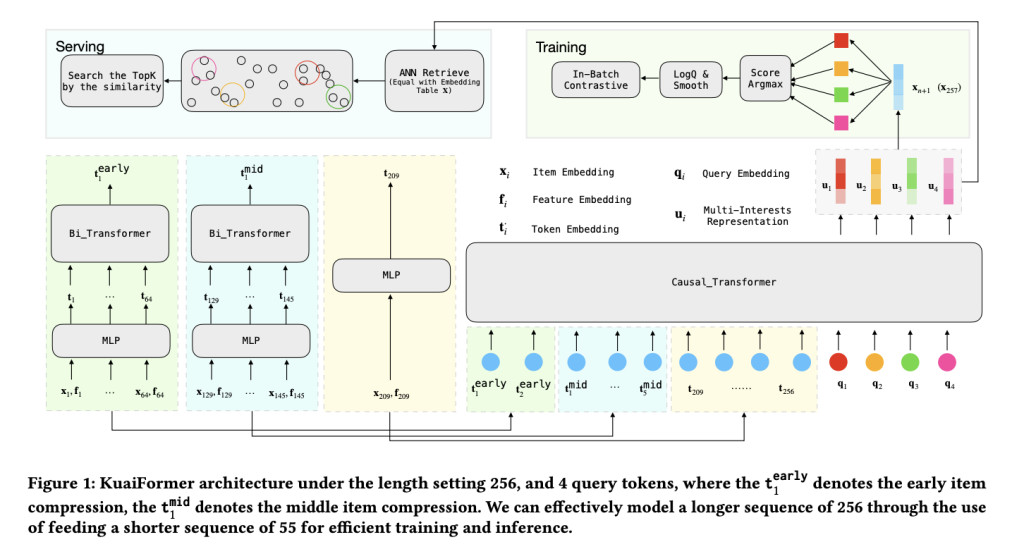

Researchers from Kuaishou Technology in Beijing, China, introduce KuaiFormer, a framework for large-scale content recommendation that departs from traditional score estimation methods and instead adopts a Transformer-driven Next Action Prediction approach. The framework is deployed in the Kuaishou App’s short-video recommendation system, which serves over 400 million daily active users. It is built for real-time interest acquisition and multi-interest extraction, and its deployment led to significant improvements in user engagement metrics. The deployment also offers practical insight into running Transformer models in industrial-scale recommendation systems, covering both technical and business challenges.

Short-video recommendation presents unique technical challenges in modeling user interests and predicting engagement. KuaiFormer processes user interaction data as sequences, where each interaction includes both the video ID and watching attributes such as viewing time, interaction labels, and category tags. The system uses these sequences to predict a user’s next likely engagement: training teaches the model to capture real-time interests, and inference uses the trained model to retrieve relevant content. Both discrete and continuous attributes are mapped to embeddings, and a Transformer-based backbone inspired by the Llama architecture processes the resulting sequence.
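The sketch below illustrates one common way to turn an interaction with discrete and continuous attributes into a single input token. It is an assumption-laden illustration, not KuaiFormer's actual embedding module: the bucket boundaries, table sizes, random initialization, and the choice to sum the attribute embeddings are all hypothetical.

```python
import bisect
import random

DIM = 4                  # hypothetical embedding width
rng = random.Random(0)   # fixed seed so the sketch is reproducible


def make_table(num_rows, dim=DIM):
    """A randomly initialized embedding table (a list of row vectors)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_rows)]


# Hypothetical bucket boundaries, in seconds, for the continuous watch time.
WATCH_TIME_BOUNDS = [3, 10, 30, 60]


def bucketize(value, bounds):
    """Map a continuous value to a discrete bucket index in 0..len(bounds)."""
    return bisect.bisect_right(bounds, value)


video_table = make_table(1000)    # video IDs are hashed into 1000 rows
category_table = make_table(20)   # one row per category tag
watch_table = make_table(len(WATCH_TIME_BOUNDS) + 1)


def embed_interaction(video_id, category_id, watch_seconds):
    """Sum the attribute embeddings of one interaction into one token vector."""
    rows = [
        video_table[video_id % len(video_table)],
        category_table[category_id],
        watch_table[bucketize(watch_seconds, WATCH_TIME_BOUNDS)],
    ]
    return [sum(values) for values in zip(*rows)]


# A hypothetical history of (video_id, category_id, watch_seconds) triples.
sequence = [(42, 3, 2.5), (977, 7, 45.0), (1042, 3, 12.0)]
tokens = [embed_interaction(*step) for step in sequence]
print(len(tokens), len(tokens[0]))
```

The resulting list of token vectors is what a sequence backbone would consume. Bucketizing is only one option for continuous attributes, and concatenating the attribute embeddings instead of summing them is an equally plausible design.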

KuaiFormer operates within an industrial streaming video recommendation infrastructure, serving as a component of Kuaishou’s retrieval system. The system processes user requests through multiple retrieval pathways, including established approaches like Swing, GNN, ComiRec, DimeRec, and GPRP, with KuaiFormer functioning as an additional pathway. Retrieved candidates then pass through a multi-stage ranking process, progressing from pre-ranking through cascade ranking to final full ranking. The system is updated continuously from real-time user feedback signals, including watch time and social interactions, and keeps serving efficient through dedicated embedding servers and GPU-accelerated retrieval algorithms like Faiss and ScaNN.
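As a rough illustration of how several retrieval pathways can feed one candidate pool, the sketch below merges ranked lists round-robin and removes duplicates. The article does not describe how Kuaishou actually combines its pathways, so the merge policy, the `merge_pathways` helper, and the video ids are all assumptions.

```python
from itertools import zip_longest


def merge_pathways(pathways, limit):
    """Interleave ranked candidate lists, skipping duplicates, up to `limit` items."""
    if limit <= 0:
        return []
    merged, seen = [], set()
    # Take the rank-1 item of every pathway, then every rank-2 item, and so on.
    for round_items in zip_longest(*pathways.values()):
        for item in round_items:
            if item is None or item in seen:
                continue
            seen.add(item)
            merged.append(item)
            if len(merged) == limit:
                return merged
    return merged


# Hypothetical ranked outputs of three retrieval pathways.
pathways = {
    "swing": ["v1", "v2", "v3"],
    "comirec": ["v2", "v4"],
    "kuaiformer": ["v5", "v1", "v6"],
}

candidates = merge_pathways(pathways, limit=5)
print(candidates)
```

Round-robin merging gives every pathway a share of the pool regardless of how its scores are scaled, which is why it is a common baseline when pathways produce incomparable scores. The merged pool is what the downstream pre-ranking stage would receive.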

Offline and online evaluations both favor KuaiFormer. In offline testing, KuaiFormer significantly outperformed established approaches like SASRec and ComiRec, showing a 25% improvement in hit rate compared to GPRP. Online A/B testing across Kuaishou’s major platforms showed video watch time increases of 0.360%, 0.126%, and 0.411% across different scenarios. Hyperparameter analysis identified the following configurations: sequence lengths beyond 64 showed diminishing returns, 6 query tokens provided the best balance of performance and efficiency, and 4 to 5 Transformer layers achieved the best accuracy. The item compression strategy proved particularly effective, matching or exceeding the performance of uncompressed sequences while keeping computation manageable.
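For readers unfamiliar with the offline metric, hit rate at k is the fraction of test cases whose true next item appears among the top k retrieved candidates. The sketch below computes it on made-up data; the exact evaluation protocol used in the paper may differ, and the lists here are purely illustrative.

```python
def hit_rate_at_k(retrieved, targets, k):
    """Fraction of users whose true next item is in their top-k retrieved list."""
    hits = sum(1 for items, target in zip(retrieved, targets) if target in items[:k])
    return hits / len(targets)


# Hypothetical ranked retrieval results for four users.
retrieved = [
    ["v1", "v2", "v3"],
    ["v4", "v5", "v6"],
    ["v7", "v8", "v9"],
    ["v1", "v5", "v9"],
]
# The item each user actually engaged with next.
targets = ["v2", "v9", "v7", "v9"]

hr_at_2 = hit_rate_at_k(retrieved, targets, k=2)
hr_at_3 = hit_rate_at_k(retrieved, targets, k=3)
print(hr_at_2, hr_at_3)
```

The fourth user counts as a hit only at k=3 because the target sits in third position, which shows how the metric depends on the cutoff.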

KuaiFormer is a significant step for industrial-scale recommendation systems, particularly for short-video content. The framework addresses key deployment challenges by combining multi-interest extraction, adaptive sequence compression, and robust training mechanisms. These techniques translated into measurable business impact, as shown by the improved user engagement metrics and hit rates across Kuaishou’s platform. The results indicate that Transformer-based architectures can be scaled to real-world applications that handle billions of requests while maintaining high performance, and they offer a reference point for future work on content recommendation systems.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post KuaiFormer: A Transformer-Based Architecture for Large-Scale Short-Video Recommendation Systems appeared first on MarkTechPost.
