In recent years, large language models (LLMs) and vision-language models (VLMs) have driven significant advances in artificial intelligence, enabling models to interact more intelligently with their environments. Despite these advances, existing models still struggle with tasks that require sustained reasoning, long-term planning, and adaptability in dynamic scenarios. Most benchmarks available today are effective at assessing specific language or multimodal capabilities but do not capture the complexities of real-world decision-making. The gap is especially noticeable when measuring how well LLMs can autonomously navigate complex environments, manage resources, and make sequential decisions. Evaluating these agentic capabilities, an area where traditional benchmarks often fall short, calls for new methodologies.

Meet BALROG

BALROG is a benchmark designed to assess the agentic capabilities of LLMs and VLMs through a diverse set of challenging games. BALROG addresses these evaluation gaps by incorporating environments that require not just basic language or multimodal comprehension but also sophisticated agentic behaviors. It aggregates six well-known game environments—BabyAI, Crafter, TextWorld, Baba Is AI, MiniHack, and the NetHack Learning Environment (NLE)—into one cohesive benchmark. These environments vary significantly in complexity, ranging from simple tasks that even novice humans can accomplish in seconds to extremely challenging ones that demand years of expertise. BALROG aims to provide a standardized testbed for evaluating the ability of AI agents to autonomously plan, strategize, and interact meaningfully with their surroundings over long horizons. Unlike other benchmarks, BALROG requires agents to demonstrate both short-term and long-term planning, continuous exploration, and adaptation, making it a rigorous test for current LLMs and VLMs.

Technical Overview

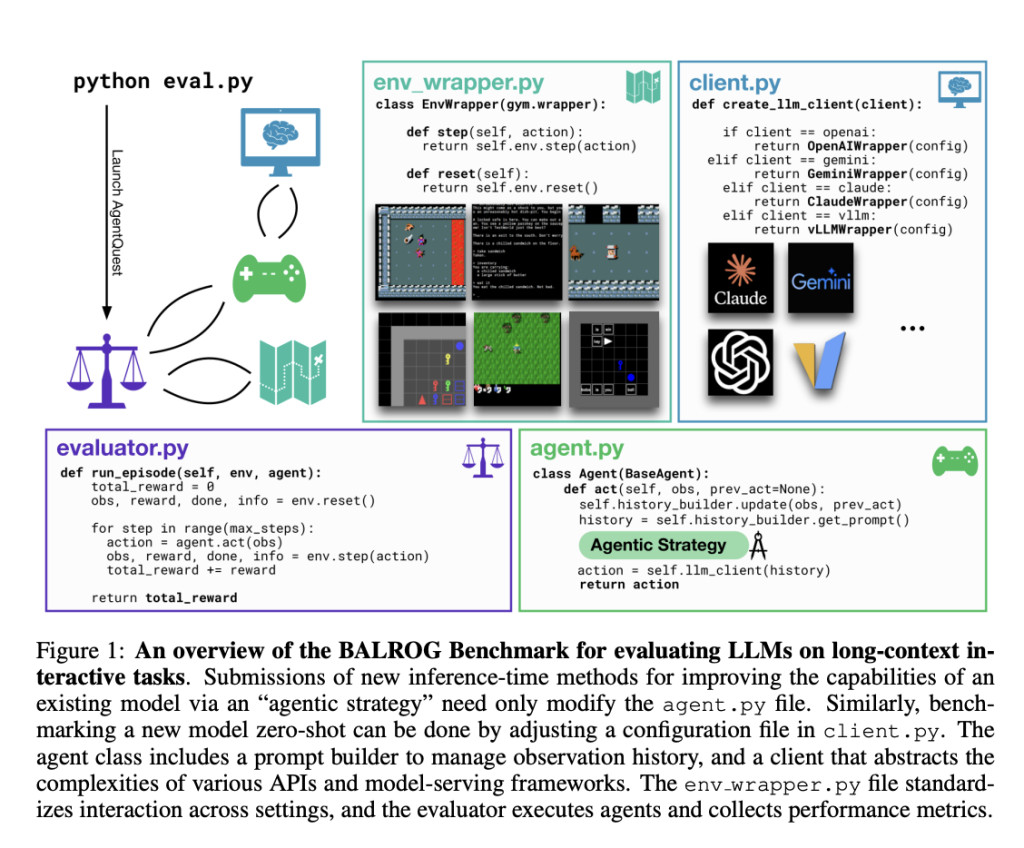

BALROG provides a detailed infrastructure that facilitates the implementation and assessment of agentic LLMs. It uses a fine-grained metric system to evaluate the performance of agents in different settings. For example, in BabyAI, agents must complete navigation tasks described in natural language, while in MiniHack and NLE, the challenges are significantly more complex, requiring advanced spatial reasoning and the ability to handle long-term credit assignment. The evaluation setup is consistent across environments, employing zero-shot prompting to ensure that the models are not specifically tuned for each game. Moreover, BALROG allows researchers to develop and test new inference-time prompting strategies or “agentic strategies” that could further enhance model capabilities during evaluations. This infrastructure makes BALROG not only a benchmark but also a development framework where new approaches to model prompting and interaction can be prototyped and tested in a controlled manner.
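The zero-shot evaluation loop described above can be pictured with a small sketch. Everything here is a hypothetical stand-in: `CorridorEnv`, `build_prompt`, `run_episode`, and `stub_model` are illustrative names, not BALROG's actual API or environments, and the stub replaces what would be a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class CorridorEnv:
    """Hypothetical toy environment (not part of BALROG): walk right to the goal."""
    length: int = 4
    max_steps: int = 10
    position: int = 0
    steps: int = 0

    def reset(self) -> str:
        self.position, self.steps = 0, 0
        return self._observe()

    def step(self, action: str) -> Tuple[str, bool]:
        self.steps += 1
        if action == "right":
            self.position = min(self.position + 1, self.length)
        elif action == "left":
            self.position = max(self.position - 1, 0)
        done = self.position == self.length or self.steps >= self.max_steps
        return self._observe(), done

    def _observe(self) -> str:
        return f"You are at cell {self.position}. The goal is at cell {self.length}."

    def progression(self) -> float:
        # Percentage of the corridor covered when the episode ends.
        return 100.0 * self.position / self.length


INSTRUCTIONS = "Valid actions: left, right. Reply with exactly one action."
VALID_ACTIONS = {"left", "right"}


def build_prompt(history: List[str], observation: str) -> str:
    # Zero-shot: task instructions plus the episode so far, no worked examples.
    return "\n".join([INSTRUCTIONS, *history, f"Observation: {observation}", "Action:"])


def run_episode(env: CorridorEnv, model: Callable[[str], str]) -> float:
    history: List[str] = []
    observation = env.reset()
    done = False
    while not done:
        reply = model(build_prompt(history, observation)).strip().lower()
        # Fall back to a default action when the model output is not valid.
        action = reply if reply in VALID_ACTIONS else "left"
        history.append(f"Observation: {observation}\nAction: {action}")
        observation, done = env.step(action)
    return env.progression()


def stub_model(prompt: str) -> str:
    # Stand-in for an LLM call; always moves right.
    return "right"


print(run_episode(CorridorEnv(), stub_model))
```

An inference-time "agentic strategy" in this picture would be a different `model` callable or a different `build_prompt`, for example one that asks for a short plan before the action, while the environment and scoring stay fixed.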

Evaluation Insights

The significance of BALROG lies in its ability to identify where current AI models fall short in their development toward becoming autonomous agents. Initial results from using BALROG have shown that even the most advanced LLMs struggle with tasks that involve multiple steps of reasoning or require interpreting visual cues. For example, in environments like MiniHack and NetHack, none of the current models has made significant progress, often failing at critical decision points such as managing in-game resources or avoiding common pitfalls. The models performed worse when images were added to the text-based observation, indicating that vision-based decision-making remains a major challenge for current VLMs. The evaluation results show an average performance drop when switching from language-only to vision-language formats, with GPT-4o, Claude 3.5, and Llama models all seeing lower progression. For language-only tasks, GPT-4o showed the best overall performance with an average progression rate of about 32%, while in vision-language settings, models like Claude 3.5 Sonnet maintained better consistency, highlighting a disparity in multimodal integration capabilities across models.
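As a rough illustration of how an average progression rate and a language-to-vision drop could be computed, here is a minimal sketch. The environment names and all numbers are placeholders invented for the example, not BALROG results, and the unweighted mean over environments is an assumption about the aggregation.

```python
from statistics import mean
from typing import Dict

# Placeholder progression percentages (0-100) per environment.
# These values are made up for illustration and are NOT BALROG results.
PLACEHOLDER_SCORES: Dict[str, Dict[str, float]] = {
    "language_only": {"env_a": 60.0, "env_b": 30.0, "env_c": 0.0},
    "vision_language": {"env_a": 45.0, "env_b": 18.0, "env_c": 0.0},
}


def average_progression(per_env: Dict[str, float]) -> float:
    # Unweighted mean across environments (assumed aggregation).
    return mean(list(per_env.values()))


def modality_drop(scores: Dict[str, Dict[str, float]]) -> float:
    # Compare only environments evaluated in both modalities.
    shared = scores["language_only"].keys() & scores["vision_language"].keys()
    text = mean([scores["language_only"][name] for name in shared])
    vision = mean([scores["vision_language"][name] for name in shared])
    return text - vision


text_avg = average_progression(PLACEHOLDER_SCORES["language_only"])
vision_avg = average_progression(PLACEHOLDER_SCORES["vision_language"])
print(f"language-only: {text_avg:.1f}%, vision-language: {vision_avg:.1f}%")
print(f"drop: {modality_drop(PLACEHOLDER_SCORES):.1f} points")
```

Restricting the comparison to shared environments keeps the drop from being distorted when an environment is only available in one modality.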

These insights provide a clear roadmap for what needs to be improved in current AI systems. The capability gaps identified by BALROG underscore the importance of developing stronger vision-language fusion techniques, more effective strategies for long-term planning, and new approaches to leveraging existing knowledge during decision-making. The “knowing-doing” gap, where models correctly identify dangerous or unproductive actions but fail to avoid them in practice, is another significant finding that suggests current architectures may need enhanced internal feedback mechanisms to align decision-making with knowledge effectively. BALROG’s open-source nature and detailed leaderboard provide a transparent platform for researchers to contribute, compare, and refine their agentic approaches, advancing what LLMs and VLMs can achieve autonomously.
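One way to make the knowing-doing gap concrete is to log, for each executed action, whether the model itself judged that action harmful when asked separately. The sketch below assumes such a log already exists; the `Step` record and `knowing_doing_rate` function are hypothetical and do not describe how the BALROG authors measured the gap.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    action: str           # action the agent actually executed
    judged_harmful: bool  # model's own answer when asked if this action is harmful


def knowing_doing_rate(trajectory: List[Step]) -> float:
    """Fraction of executed actions that the model itself had flagged as harmful."""
    if not trajectory:
        return 0.0
    flagged = sum(1 for step in trajectory if step.judged_harmful)
    return flagged / len(trajectory)


# Hypothetical logged episode, invented for illustration.
episode = [
    Step("move north", False),
    Step("eat unidentified item", True),
    Step("move east", False),
    Step("pick up key", False),
]
print(knowing_doing_rate(episode))
```

A rate above zero means the agent took actions it could identify as harmful, which is the mismatch between knowledge and behavior the paragraph describes.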

Conclusion

BALROG sets a new standard for evaluating the agentic capabilities of language and vision-language models. By providing a diverse set of long-horizon tasks, BALROG challenges models to go beyond simple question-answering or translation tasks and act as true agents capable of planning and adapting in complex environments. This benchmark is not just about evaluating current capabilities but also about guiding future research toward building AI systems that can perform effectively in real-world, dynamic situations.

Researchers interested in exploring BALROG further can visit balrogai.com or access the open-source toolkit available on GitHub. All credit for this research goes to the researchers of this project.

The post Meet ‘BALROG’: A Novel AI Benchmark Evaluating Agentic LLM and VLM Capabilities on Long-Horizon Interactive Tasks Using Reinforcement Learning Environment appeared first on MarkTechPost.
