Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI. With Amazon Bedrock, you can experiment with and evaluate top FMs for your use case, privately customize them with your data using techniques such as fine-tuning and Retrieval Augmented Generation (RAG), and build agents that run tasks using your enterprise systems and data sources. Because Amazon Bedrock is serverless, you don't have to manage any infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using the AWS services you are already familiar with.

In this post, we demonstrate how to use Amazon Bedrock with the AWS SDK for Python (Boto3) to programmatically incorporate FMs.

Solution overview

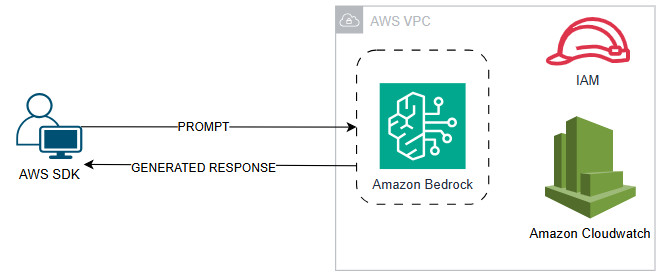

The solution uses an AWS SDK for Python script with features that invoke Anthropic’s Claude 3 Sonnet on Amazon Bedrock. By using this FM, it generates an output using a prompt as input. The following diagram illustrates the solution architecture.

Prerequisites

Before you invoke the Amazon Bedrock API, make sure you have the following:

- An AWS account that provides access to AWS services, including Amazon Bedrock

- The AWS Command Line Interface (AWS CLI) set up

- An AWS Identity and Access Management (IAM) user set up for the Amazon Bedrock API and appropriate permissions added to the IAM user

- The IAM user access key and secret key to configure the AWS CLI and permissions

- Access to FMs on Amazon Bedrock

- The latest Boto3 library

- Python 3.8 or later, configured in your integrated development environment (IDE)

Deploy the solution

After you complete the prerequisites, you can start using Amazon Bedrock. Begin by scripting with the following steps:

- Import the required libraries:

- Set up the Boto3 client to use the Amazon Bedrock runtime and specify the AWS Region:

- Define the model to invoke using its model ID. In this example, we use Anthropic's Claude 3 Sonnet on Amazon Bedrock:

- Assign a prompt, which is your message that will be used to interact with the FM at invocation:
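The four steps above can be sketched as follows. This is a minimal sketch rather than the post's original listing: the Region `us-east-1`, the constant names, and the helper `create_bedrock_client` are assumptions made for illustration. The prompt is the one used later in this post. Confirm the model ID against the Amazon Bedrock console for your account.

```python
import json  # used in later steps to serialize the payload and parse the response

# Assumption: us-east-1 is only an example. Use a Region where you have model access.
AWS_REGION = "us-east-1"

# Model ID for Anthropic's Claude 3 Sonnet on Amazon Bedrock.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"

# The prompt is the message sent to the FM at invocation.
PROMPT = "Hello, how are you?"


def create_bedrock_client(region_name=AWS_REGION):
    """Return a Boto3 client for the Amazon Bedrock runtime (hypothetical helper)."""
    # Imported here so the constants above can be loaded even without Boto3 installed.
    import boto3

    return boto3.client("bedrock-runtime", region_name=region_name)
```

Calling `create_bedrock_client()` uses the credentials you configured with the AWS CLI in the prerequisites.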

Prompt engineering techniques can improve FM performance and enhance results.

Before invoking the Amazon Bedrock model, we need to define a payload: the request body that carries your prompt together with the parameters that guide the model's generation process. The payload structure varies depending on the chosen model. In this example, we use the format for Anthropic's Claude 3 Sonnet on Amazon Bedrock. Let's break down the key elements within this payload:

- anthropic_version – This specifies the version of the Anthropic request format used on Amazon Bedrock. For Claude 3 models, the value must be bedrock-2023-05-31.

- max_tokens – This sets a limit on the total number of tokens the model can generate in its response. Tokens are the smallest meaningful unit of text (word, punctuation, subword) processed and generated by large language models (LLMs).

- temperature – This parameter controls the level of randomness in the generated text. Higher values lead to more creative and potentially unexpected outputs, and lower values promote more conservative and consistent results.

- top_k – This defines the number of most probable candidate tokens considered at each step during the generation process.

- top_p – This sets a cumulative probability cutoff for selecting the next token: the model samples only from the smallest set of most probable tokens whose combined probability reaches top_p. Lower values restrict the choice to the most likely tokens, whereas higher values allow for more diverse and potentially surprising choices.

- messages – This is an array containing individual messages for the model to process.

- role – Within each message, this defines the sender's role (user for the prompt you provide).

- content – Within each message, this array holds the actual prompt text, represented as a "text" type object.
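To make the three sampling parameters more concrete, here is a toy illustration of how temperature, top_k, and top_p can narrow a next-token distribution. It is not how Amazon Bedrock or Anthropic implement sampling: the function `filter_candidates`, the example scores, and the order in which the filters are applied are all assumptions chosen for clarity.

```python
import math


def filter_candidates(logits, temperature=1.0, top_k=None, top_p=1.0):
    """Toy sketch: return {token: probability} after temperature, top_k, and top_p."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")

    # Temperature rescales the raw scores: values below 1 sharpen the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}

    # Softmax turns the scaled scores into probabilities.
    peak = max(scaled.values())
    exps = {tok: math.exp(val - peak) for tok, val in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(
        ((tok, e / total) for tok, e in exps.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )

    # top_k keeps only the k most probable tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # top_p keeps the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break

    # Renormalize so the surviving candidates sum to 1.
    norm = sum(prob for _, prob in kept)
    return {tok: prob / norm for tok, prob in kept}
```

For example, with scores `{"a": 2.0, "b": 1.0, "c": 0.0, "d": -1.0}`, setting `top_k=2` leaves only `a` and `b` as candidates, and lowering `temperature` shifts more probability onto `a`.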

- Define the payload as follows:

- You have set the parameters and the FM you want to interact with. Now you send a request to Amazon Bedrock by providing the FM to interact with and the payload that you defined:

- After the request is processed, you can display the result of the generated text from Amazon Bedrock:
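The last three steps (define the payload, send the request, and display the result) might look like the following. Treat this as a sketch under stated assumptions: the function names are hypothetical, the parameter values are illustrative defaults rather than recommendations, and `client` is expected to be a Boto3 `bedrock-runtime` client.

```python
import json


def build_payload(prompt, max_tokens=2048, temperature=0.9, top_k=250, top_p=1.0):
    """Serialize a request body for Claude 3 (parameter values are illustrative)."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_k": top_k,
        "top_p": top_p,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    })


def invoke(client, model_id, payload):
    """Send the payload to the model and return the parsed JSON response body."""
    response = client.invoke_model(
        modelId=model_id,
        body=payload,
        contentType="application/json",
        accept="application/json",
    )
    # response["body"] is a stream; read() returns the raw JSON bytes.
    return json.loads(response["body"].read())


def generated_text(result):
    """Extract the first text block from a Claude 3 response."""
    return result["content"][0]["text"]
```

With a real client, `print(generated_text(invoke(client, model_id, build_payload(prompt))))` displays the generated text. Passing the client in as an argument also makes it easy to substitute a stub when testing without AWS access.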

Let's look at our complete script:
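The original listing is not reproduced here, so the following is a reconstruction from the steps described in this post, not the author's exact script. The Region, the parameter values, and the function name `ask_claude` are assumptions. The optional `client` argument is an addition that lets you pass in an existing or stubbed client.

```python
import json

# Assumption: example Region. Use one where you have access to the model.
AWS_REGION = "us-east-1"
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def ask_claude(prompt, client=None):
    """Invoke Claude 3 Sonnet on Amazon Bedrock and return the generated text."""
    if client is None:
        # Create the Amazon Bedrock runtime client only when one is not supplied.
        import boto3

        client = boto3.client("bedrock-runtime", region_name=AWS_REGION)

    # Request body for Claude 3; the parameter values are illustrative.
    payload = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 2048,
        "temperature": 0.9,
        "top_k": 250,
        "top_p": 1.0,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    }

    # Send the request to Amazon Bedrock.
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps(payload),
        contentType="application/json",
        accept="application/json",
    )

    # Parse the streamed JSON body and pull out the generated text.
    result = json.loads(response["body"].read())
    return result["content"][0]["text"]
```

To run it against Amazon Bedrock, call `print(ask_claude("Hello, how are you?"))` with your AWS credentials configured.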


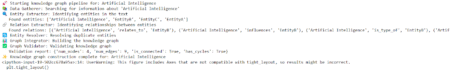

Invoking the model with the prompt “Hello, how are you?†will yield the result shown in the following screenshot.

![]()

Clean up

When you're done using Amazon Bedrock, clean up temporary resources like IAM users and Amazon CloudWatch logs to avoid unnecessary charges. Cost considerations depend on usage frequency, chosen model pricing, and resource utilization while the script runs. See Amazon Bedrock Pricing for pricing details and cost-optimization strategies like selecting appropriate models, optimizing prompts, and monitoring usage.
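As one way to monitor usage from the script itself, the parsed Claude 3 response body includes a `usage` object with `input_tokens` and `output_tokens`. The sketch below turns those counts into a rough cost estimate. The function name and the per-1,000-token prices are hypothetical parameters, not real prices: take actual values from the Amazon Bedrock Pricing page.

```python
def estimate_cost(result, input_price_per_1k, output_price_per_1k):
    """Rough cost estimate from a parsed Claude 3 response body.

    The prices are caller-supplied placeholders (cost per 1,000 tokens).
    """
    usage = result.get("usage", {})
    input_tokens = usage.get("input_tokens", 0)
    output_tokens = usage.get("output_tokens", 0)
    return (
        (input_tokens / 1000) * input_price_per_1k
        + (output_tokens / 1000) * output_price_per_1k
    )
```

Logging this value per request gives a simple running view of how prompt length and `max_tokens` affect spend.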

Conclusion

In this post, we demonstrated how to programmatically interact with Amazon Bedrock FMs using Boto3. We explored invoking a specific FM and processing the generated text, showcasing the potential for developers to use these models in their applications for a variety of use cases, such as:

- Text generation – Generate creative content like poems, scripts, musical pieces, or even code in different programming languages

- Code completion – Enhance developer productivity by suggesting relevant code snippets based on existing code or prompts

- Data summarization – Extract key insights and generate concise summaries from large datasets

- Conversational AI – Develop chatbots and virtual assistants that can engage in natural language conversations

Stay curious and explore how generative AI can revolutionize various industries. Explore the different models and APIs and run comparisons of how each model provides different outputs. Find the model that will fit your use case and use this script as a base to create agents and integrations in your solution.

About the Author

Merlin Naidoo is a Senior Technical Account Manager at AWS with over 15 years of experience in digital transformation and innovative technical solutions. His passion is connecting with people from all backgrounds and leveraging technology to create meaningful opportunities that empower everyone. When he's not immersed in the world of tech, you can find him taking part in active sports.
