Neural networks have traditionally been static: once trained, their structure and parameters are fixed, which limits how well they adapt to new or unforeseen scenarios. Deploying a model in varied environments therefore often means designing and training new configurations, a resource-intensive process. Flexible models and network pruning have been explored as remedies, but both have constraints: flexible models are confined to the configurations seen during training, and pruning often degrades performance and requires retraining. To overcome these issues, researchers aim to build neural networks that adapt dynamically to different configurations and generalize beyond their training setups.

Existing approaches to efficient neural networks include structural pruning, flexible neural architectures, and continuous deep learning methods. Structural pruning reduces network size by eliminating redundant connections, while flexible neural networks adapt to different configurations but are limited to the scenarios encountered during training. Continuous models, such as those employing neural ordinary differential equations or weight generation via hypernetworks, enable dynamic transformations but often require extensive training checkpoints or are limited to fixed-size weight predictions.
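Structural pruning comes in many variants. A common criterion ranks a layer's output neurons by the L1 norm of their incoming weights and discards the weakest. The minimal sketch below assumes dense layers stored as Python lists of rows; the function names are hypothetical, and it is not the pruning baseline used in the NeuMeta experiments. It also hints at why pruning usually needs retraining: removed neurons are simply dropped, and nothing adjusts the surviving weights to compensate.

```python
def l1_prune_rows(weight, keep_ratio):
    """Keep the output neurons (rows) with the largest L1 norms.

    Assumption: `weight` is a dense layer stored as one row per output neuron.
    Returns the pruned rows and the indices of the neurons that were kept.
    """
    n_keep = max(1, round(len(weight) * keep_ratio))
    norms = [sum(abs(w) for w in row) for row in weight]
    # Rank neurons by norm, keep the top n_keep, then restore original order.
    ranked = sorted(range(len(weight)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:n_keep])
    return [weight[i] for i in kept], kept


def drop_input_columns(next_weight, kept):
    """The following layer must drop the input columns of removed neurons."""
    return [[row[i] for i in kept] for row in next_weight]


W = [
    [0.9, -0.8, 0.7],
    [0.01, 0.02, -0.01],
    [0.5, 0.4, -0.6],
    [-0.03, 0.0, 0.02],
]
W_next = [[1, 2, 3, 4], [5, 6, 7, 8]]

pruned, kept = l1_prune_rows(W, keep_ratio=0.5)
print(kept)  # [0, 2]
print(drop_input_columns(W_next, kept))  # [[1, 3], [5, 7]]
```

Removing a neuron also removes its column in the next layer, which is why `drop_input_columns` is applied to the following weight matrix.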

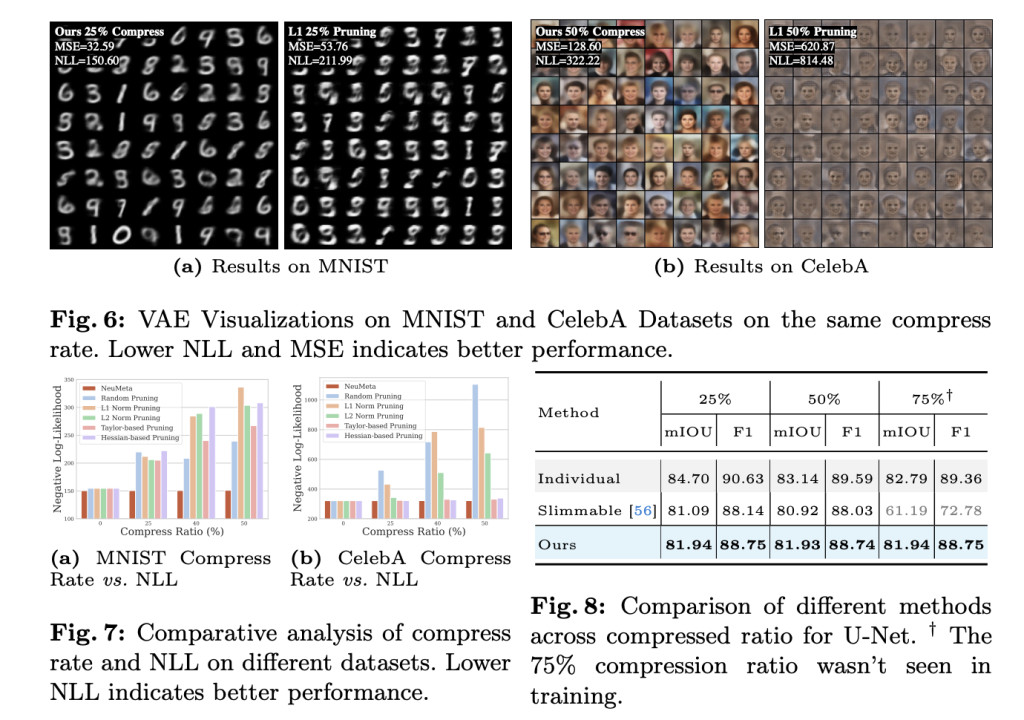

Researchers at the National University of Singapore introduced Neural Metamorphosis (NeuMeta), a learning paradigm that builds self-morphable neural networks by treating them as points on a continuous weight manifold. Using Implicit Neural Representations (INRs) as hypernetworks, NeuMeta generates weights for networks of any size directly from the manifold, including configurations unseen during training, without retraining. To make the manifold smoother, the authors permute weight matrices and add noise to the INR's inputs during training. NeuMeta achieves strong results in image classification and segmentation, maintaining full-size performance even at a 75% compression rate, demonstrating its adaptability and robustness.
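The hidden neurons of a layer can be reordered without changing what the network computes, as long as the next layer's input columns are reordered the same way. This freedom lets NeuMeta pick an ordering in which neighbouring weight indices hold similar values, which makes the weights a smoother function of their index and easier for an INR to fit. The sketch below illustrates the idea on a toy two-layer MLP. The greedy nearest-neighbour ordering, the `roughness` measure, and all function names are assumptions for illustration, not the paper's exact procedure.

```python
import math


def greedy_smooth_order(rows):
    """Greedy nearest-neighbour ordering: start at row 0, then repeatedly
    append the closest unvisited row (a cheap heuristic, assumed here)."""
    order = [0]
    remaining = set(range(1, len(rows)))
    while remaining:
        last = rows[order[-1]]
        nxt = min(remaining, key=lambda j: math.dist(last, rows[j]))
        order.append(nxt)
        remaining.remove(nxt)
    return order


def permute_hidden(w1, w2, order):
    """Reorder hidden neurons: rows of w1 and the matching columns of w2."""
    w1p = [w1[i] for i in order]
    w2p = [[row[i] for i in order] for row in w2]
    return w1p, w2p


def mlp(x, w1, w2):
    """Two-layer MLP with ReLU and no biases."""
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1]
    return [sum(w * hi for w, hi in zip(row, h)) for row in w2]


def roughness(rows):
    """Sum of distances between consecutive rows (lower means smoother)."""
    return sum(math.dist(a, b) for a, b in zip(rows, rows[1:]))


w1 = [[1.0, 0.0], [-1.0, 0.5], [0.9, 0.1], [-0.9, 0.6]]
w2 = [[1.0, 2.0, 3.0, 4.0]]

order = greedy_smooth_order(w1)  # [0, 2, 3, 1]
w1p, w2p = permute_hidden(w1, w2, order)
x = [0.3, -0.7]
print(mlp(x, w1, w2), mlp(x, w1p, w2p))  # same function, reordered weights
print(roughness(w1), roughness(w1p))     # roughness drops after reordering
```

The output is unchanged because the permutation is applied consistently to both layers; only the layout of the weights, and hence their smoothness along the index axis, changes.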

NeuMeta uses a neural implicit function to predict weights for diverse neural networks, exploiting the smoothness of the weight manifold. The framework models weights as a continuous function represented by an INR, which lets it generalize across architectures. Each weight's indices are normalized, encoded with Fourier features, and passed through a multi-layer perceptron that maps the coordinate in model space to a weight value. NeuMeta promotes smoothness within and across models by resolving weight-matrix permutations and perturbing the input coordinates during training. This makes optimization efficient and stable, and the INR generates weights for different configurations while minimizing task-specific and reconstruction losses.
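The pipeline described above (normalize indices, encode them with Fourier features, map them through an MLP to one weight value) can be sketched in plain Python. This is a minimal, untrained illustration under stated assumptions: the coordinate layout (layer index, output index, input index, relative width), the number of frequencies, the `tanh` hidden layer, and the names `TinyINR` and `generate_layer` are all hypothetical, and NeuMeta's actual coordinates and architecture may differ. The `noise_std` option mimics the coordinate perturbation used during training.

```python
import math
import random


def normalize(idx, size):
    """Map an integer index in [0, size) to the centre of its cell in (0, 1)."""
    return (idx + 0.5) / size


def fourier_features(coords, n_freqs=4):
    """Encode each coordinate c as sin/cos(2^k * pi * c) for k < n_freqs."""
    feats = []
    for c in coords:
        for k in range(n_freqs):
            feats.append(math.sin((2 ** k) * math.pi * c))
            feats.append(math.cos((2 ** k) * math.pi * c))
    return feats


class TinyINR:
    """Untrained toy MLP mapping encoded coordinates to one weight value.
    Hidden size, activation, and initialisation are illustrative assumptions."""

    def __init__(self, in_dim, hidden=16, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0, 1 / math.sqrt(in_dim)) for _ in range(in_dim)]
                   for _ in range(hidden)]
        self.w2 = [rng.gauss(0, 1 / math.sqrt(hidden)) for _ in range(hidden)]

    def __call__(self, feats):
        h = [math.tanh(sum(w * f for w, f in zip(row, feats))) for row in self.w1]
        return sum(w * hi for w, hi in zip(self.w2, h))


def generate_layer(inr, layer, n_layers, out_dim, in_dim, width, max_width,
                   noise_std=0.0, rng=None):
    """Query the INR once per (out, in) entry of a layer of any size.
    noise_std > 0 adds Gaussian noise to the coordinates, as in training."""
    if rng is None:
        rng = random.Random(0)
    weights = []
    for o in range(out_dim):
        row = []
        for i in range(in_dim):
            coords = [normalize(layer, n_layers), normalize(o, out_dim),
                      normalize(i, in_dim), width / max_width]
            if noise_std > 0:
                coords = [c + rng.gauss(0, noise_std) for c in coords]
            row.append(inr(fourier_features(coords)))
        weights.append(row)
    return weights


inr = TinyINR(in_dim=4 * 2 * 4)  # 4 coordinates x (sin, cos) x 4 frequencies
small = generate_layer(inr, 1, 3, 8, 8, width=8, max_width=32)
large = generate_layer(inr, 1, 3, 32, 32, width=32, max_width=32)
print(len(small), len(small[0]), len(large), len(large[0]))  # 8 8 32 32
```

The same INR produces an 8x8 and a 32x32 weight matrix from one set of parameters, which is the property that lets a single model serve many network sizes. In the real framework, the INR's own parameters would be trained against the task-specific and reconstruction losses; that training loop is omitted here.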

The experiments evaluate NeuMeta on classification, segmentation, and image generation, using MNIST, CIFAR, ImageNet, PASCAL VOC2012, and CelebA. NeuMeta outperforms pruning and flexible-model baselines, especially at high compression ratios, maintaining stability up to 40%. Ablation studies confirm that the weight-permutation strategy and manifold sampling improve accuracy and smoothness across network configurations. For image generation, NeuMeta achieves significantly better reconstruction metrics than traditional pruning. In semantic segmentation, it is more efficient than Slimmable networks, particularly at compression rates not seen during training. Overall, NeuMeta strikes an efficient balance between accuracy and parameter storage.
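The article reports results in terms of compression rate without defining it. Assuming it means the fraction of parameters removed, and that models are compressed by shrinking layer widths (both assumptions, not confirmed by the text above), the arithmetic below shows why compression rate and width reduction are not the same thing. A dense layer's weight count is the product of its input and output widths, so halving both removes 75% of that layer's weights, while layers tied to a fixed input or output size shrink only linearly. The helper names and the 784-256-256-10 MLP are hypothetical.

```python
def dense_params(widths, bias=True):
    """Parameter count of a chain of dense layers with the given widths."""
    total = 0
    for n_in, n_out in zip(widths, widths[1:]):
        total += n_in * n_out + (n_out if bias else 0)
    return total


def compression_ratio(full, compressed):
    """Fraction of parameters removed relative to the full model."""
    return 1 - compressed / full


# One square hidden layer: halving both its input and output width.
square = compression_ratio(dense_params([256, 256], bias=False),
                           dense_params([128, 128], bias=False))
print(square)  # 0.75

# Hypothetical MLP whose input (784) and output (10) sizes are fixed by the task.
full = dense_params([784, 256, 256, 10])
half = dense_params([784, 128, 128, 10])
print(round(compression_ratio(full, half), 3))  # 0.561
```

Halving the hidden widths of the whole network removes only about 56% of its parameters here, because the first and last layers keep one fixed dimension.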

In conclusion, the study introduces Neural Metamorphosis (NeuMeta), a framework for creating self-morphable neural networks. Instead of designing separate models for different architectures or sizes, NeuMeta learns a continuous weight manifold to generate tailored network weights for any configuration without retraining. Using neural implicit functions as hypernetworks, NeuMeta maps input coordinates to corresponding weight values while ensuring smoothness in the weight manifold. Strategies like weight matrix permutation and noise addition during training enhance adaptability. NeuMeta demonstrates strong performance in image classification, segmentation, and generation tasks, maintaining effectiveness even with a 75% compression rate.

Check out the Paper, Project Page, and GitHub Repo. All credit for this research goes to the researchers of this project.


The post NeuMeta (Neural Metamorphosis): A Paradigm for Self-Morphable Neural Networks via Continuous Weight Manifolds appeared first on MarkTechPost.
