Foundation models (FMs) and large language models (LLMs) are revolutionizing AI applications by enabling tasks such as text summarization, real-time translation, and software development. These technologies have powered the development of autonomous agents that can perform complex decision-making and iterative processes with minimal human intervention. However, as these systems tackle increasingly multifaceted tasks, they require robust observability, traceability, and compliance mechanisms. Ensuring their reliability has become critical, especially as the demand for FM-based autonomous agents grows across academia and industry.

A major hurdle for FM-based autonomous agents is the lack of consistent traceability and observability across their operational workflows. These agents rely on intricate processes that integrate tools, memory modules, and decision-making capabilities. When an agent produces a suboptimal output, that complexity makes the cause difficult to debug and correct. Regulatory frameworks such as the EU AI Act add another layer of complexity by demanding transparency and traceability in high-risk AI systems. Complying with such frameworks is vital for gaining trust and deploying AI systems ethically.

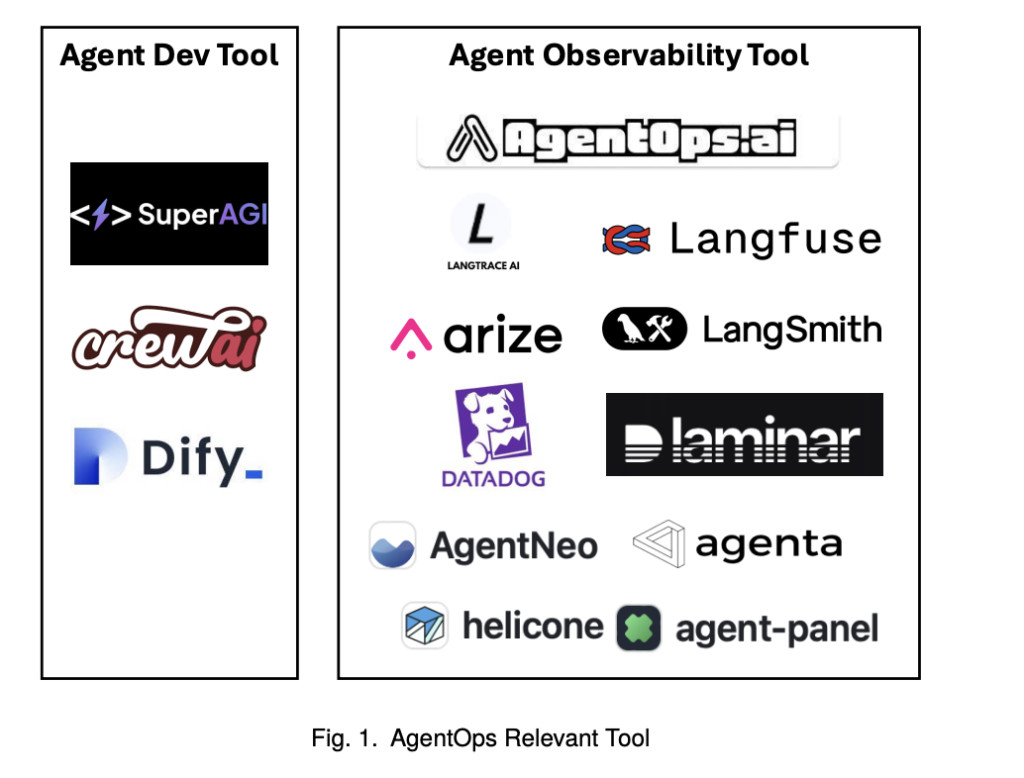

Existing tools and frameworks provide partial solutions but fall short of end-to-end observability. For instance, LangSmith and Arize offer features for monitoring agent costs and improving latency but do not address the broader life-cycle traceability required for debugging and compliance. Similarly, frameworks such as SuperAGI and CrewAI enable multi-agent collaboration and agent customization but lack robust mechanisms for monitoring decision-making pathways or tracing errors to their source. These limitations point to an urgent need for tools that provide comprehensive oversight throughout the agent production life cycle.

Researchers at CSIRO’s Data61, Australia, conducted a rapid review of tools and methodologies in the AgentOps ecosystem to address these gaps. Their study examined existing AgentOps tools and identified key features for achieving observability and traceability in FM-based agents. Based on their findings, the researchers proposed a comprehensive overview of observability data and traceable artifacts that span the entire agent life cycle. Their review underscores the importance of these tools in ensuring system reliability, debugging, and compliance with regulatory frameworks such as the EU AI Act.

The methodology employed in the study involved a detailed analysis of tools supporting the AgentOps ecosystem. The researchers identified observability and traceability as core components for enhancing the reliability of FM-based agents. AgentOps tools allow developers to monitor workflows, record LLM interactions, and trace external tool usage. Memory modules were highlighted as crucial for maintaining both short-term and long-term context, enabling agents to produce coherent outputs in multi-step tasks. Another important feature is the integration of guardrails, which enforce ethical and operational constraints to guide agents toward achieving their predefined objectives. Observability features like artifact tracing and session-level analytics were critical for real-time monitoring and debugging.
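To make the features above more concrete, here is a minimal sketch of how an agent run could be recorded as nested trace steps covering a guardrail check, an LLM interaction, and an external tool call. It is not the API of any tool named in this article: `Span`, `Tracer`, `run_agent`, the span kinds, and the banned-term guardrail are all hypothetical names chosen for illustration, and the "LLM call" is a hard-coded stand-in.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class Span:
    """One traced step. The kind labels used below are assumptions."""
    span_id: str
    session_id: str
    kind: str                       # "agent", "guardrail", "llm", or "tool"
    name: str
    parent_id: Optional[str] = None
    attributes: Dict[str, Any] = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0
    error: Optional[str] = None


class Tracer:
    """Hypothetical in-memory tracer; a real tool would export spans somewhere."""

    def __init__(self, session_id: str) -> None:
        self.session_id = session_id
        self.spans: List[Span] = []
        self._stack: List[str] = []  # ids of currently open spans

    @contextmanager
    def span(self, kind: str, name: str, **attributes: Any) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        s = Span(uuid.uuid4().hex, self.session_id, kind, name, parent, dict(attributes))
        s.start = time.perf_counter()
        self.spans.append(s)
        self._stack.append(s.span_id)
        try:
            yield s
        except Exception as exc:
            s.error = repr(exc)      # keep the failure attached to the step that raised
            raise
        finally:
            s.end = time.perf_counter()
            self._stack.pop()


def blocked_by_guardrail(text: str, banned: List[str]) -> bool:
    """Toy guardrail: refuse inputs that contain a banned term."""
    lowered = text.lower()
    return any(term in lowered for term in banned)


def run_agent(tracer: Tracer, user_input: str) -> str:
    with tracer.span("agent", "run", user_input=user_input) as root:
        with tracer.span("guardrail", "input_check") as guard:
            guard.attributes["blocked"] = blocked_by_guardrail(user_input, ["password"])
            if guard.attributes["blocked"]:
                root.attributes["output"] = "Request refused by guardrail."
                return root.attributes["output"]
        with tracer.span("llm", "plan", prompt=user_input) as llm:
            llm.attributes["completion"] = "call calculator"  # stand-in for a model call
        with tracer.span("tool", "calculator", expression="2 + 3") as tool:
            tool.attributes["result"] = 2 + 3
        root.attributes["output"] = f"The answer is {tool.attributes['result']}."
        return root.attributes["output"]
```

Calling `run_agent(Tracer("demo"), "What is 2 + 3?")` leaves four spans on the tracer, each child pointing at the root span through `parent_id`, so a developer can walk from the final output back through the tool call and the LLM step to the original input.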

The study's findings emphasize how AgentOps tools address the challenges of FM-based agents. By implementing comprehensive logging and monitoring, these tools help support compliance with Articles 12, 26, and 79 of the EU AI Act. Developers can trace every decision made by the agent, from initial user inputs through intermediate steps to final outputs. This level of traceability not only simplifies debugging but also enhances transparency in agent operations. Observability tools within the AgentOps ecosystem also enable performance optimization through session-level analytics and actionable insights, helping developers refine workflows and improve efficiency. The paper does not report specific numerical improvements, but it consistently emphasizes the ability of these tools to streamline processes and enhance system reliability.
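As a rough illustration of session-level analytics, the sketch below aggregates per-step log records into one summary per session and locates the first failing step for debugging. The record fields (`session_id`, `kind`, `latency_ms`, `tokens`, `ok`) and the function names are assumptions made for this example; the paper does not prescribe a schema, and the values in the usage note are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, TypedDict


class StepRecord(TypedDict):
    """One logged agent step. Field names are illustrative assumptions."""
    session_id: str
    kind: str          # e.g. "llm" or "tool"
    latency_ms: float
    tokens: int        # 0 for steps that do not call a model
    ok: bool


def summarize_sessions(records: Iterable[StepRecord]) -> Dict[str, Dict[str, float]]:
    """Roll step records up into step count, error count, latency, and tokens per session."""
    summary: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {"steps": 0, "errors": 0, "latency_ms": 0.0, "tokens": 0}
    )
    for record in records:
        session = summary[record["session_id"]]
        session["steps"] += 1
        session["errors"] += 0 if record["ok"] else 1
        session["latency_ms"] += record["latency_ms"]
        session["tokens"] += record["tokens"]
    return dict(summary)


def first_failure(records: List[StepRecord], session_id: str) -> Optional[StepRecord]:
    """Return the earliest failed step in a session, or None if every step succeeded."""
    for record in records:
        if record["session_id"] == session_id and not record["ok"]:
            return record
    return None
```

For example, given three records for session `"a"` (an LLM step of 120 ms and 50 tokens, a failed tool step of 30 ms, and an LLM step of 100 ms and 40 tokens), `summarize_sessions` reports 3 steps, 1 error, 250 ms total latency, and 90 tokens, and `first_failure` returns the tool step.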

The findings by CSIRO’s Data61 researchers provide a systematic overview of the AgentOps landscape and its potential to transform FM-based agent development. Their review offers valuable insights for developers and stakeholders looking to deploy reliable and compliant AI systems by focusing on observability and traceability. The study underscores the importance of integrating these capabilities into AgentOps platforms, which serve as a foundation for building scalable, transparent, and trustworthy autonomous agents. As the demand for FM-based agents continues to grow, the methodologies and tools outlined in this research set a benchmark for future advancements.

The post This AI Paper Explores AgentOps Tools: Enhancing Observability and Traceability in Foundation Model FM-Based Autonomous Agents appeared first on MarkTechPost.
