Large Language Models (LLMs) have advanced rapidly over the past decade. However, deploying and using them still poses challenges in three areas: computational cost, latency, and output accuracy. These challenges limit the accessibility of LLMs for smaller organizations, degrade the user experience in real-time applications, and risk misinformation or errors in critical domains like healthcare and finance. Addressing them is essential for broader adoption of, and trust in, LLM-powered solutions.

Existing approaches for optimizing LLMs include prompt engineering, few-shot learning, and hardware acceleration, yet each of these techniques tends to target a single aspect of optimization. While effective in certain scenarios, they may not comprehensively address the intertwined challenges of computational cost, latency, and accuracy.

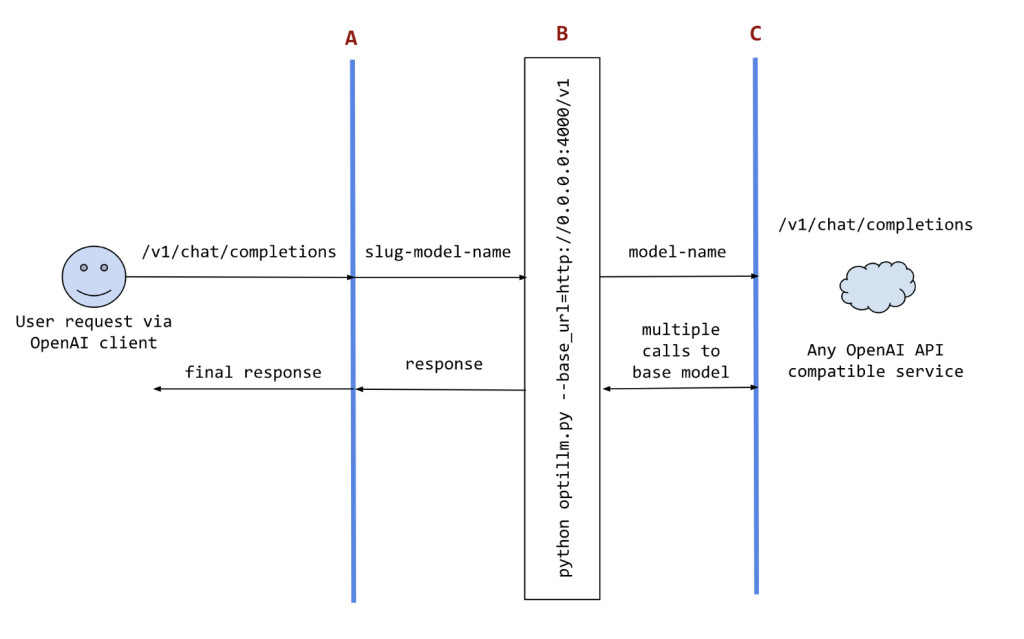

The proposed solution, Optillm, introduces a holistic framework that optimizes LLMs by integrating several strategies into a unified system. It builds on current practices and extends them along three key dimensions: prompt engineering, intelligent model selection, and inference optimization. It also incorporates a plugin system that adds flexibility and lets it integrate with other tools and libraries. This makes Optillm suitable for a wide range of applications, from specialized use cases requiring high accuracy to tasks that demand low-latency responses.
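The article does not describe what the plugin interface looks like, so the following is only a minimal sketch of how a plugin system of this kind could work in principle. Every name here (`register`, `run_pipeline`, and the two example plugins) is hypothetical and is not taken from Optillm's code.

```python
from typing import Callable, Dict, List

# Hypothetical plugin signature: takes the prompt text, returns a transformed prompt.
Plugin = Callable[[str], str]

# Illustrative in-memory registry; not Optillm's actual mechanism.
_REGISTRY: Dict[str, Plugin] = {}


def register(name: str) -> Callable[[Plugin], Plugin]:
    """Decorator that stores a plugin under the given name."""
    def decorator(func: Plugin) -> Plugin:
        _REGISTRY[name] = func
        return func
    return decorator


@register("strip_whitespace")
def strip_whitespace(prompt: str) -> str:
    """Collapse runs of whitespace into single spaces."""
    return " ".join(prompt.split())


@register("add_step_by_step")
def add_step_by_step(prompt: str) -> str:
    """Append a reasoning hint to the prompt."""
    return prompt + "\nThink through the problem step by step."


def run_pipeline(prompt: str, plugin_names: List[str]) -> str:
    """Apply the named plugins to the prompt in order."""
    for name in plugin_names:
        if name not in _REGISTRY:
            raise KeyError(f"unknown plugin: {name}")
        prompt = _REGISTRY[name](prompt)
    return prompt


result = run_pipeline("  What is   2 + 2? ", ["strip_whitespace", "add_step_by_step"])
print(result)
```

The point of a registry like this is that new behavior can be added by writing one function, without changing the code that runs the pipeline.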

Optillm adopts a multi-pronged methodology to tackle the challenges of LLM optimization. First, prompt optimization uses techniques like few-shot learning to guide LLMs toward more precise outputs. By refining how prompts are structured, Optillm aims to keep the generated responses closely aligned with the intended objectives. Second, Optillm applies task-specific strategies to choose the most suitable LLM for a given application. This model selection step balances accuracy, computational cost, and speed, with the goal of improving efficiency without compromising output quality.
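The two ideas above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Optillm's implementation: `ModelProfile`, `build_few_shot_prompt`, `select_model`, the model names, and all the numbers are hypothetical. The selection rule shown (cheapest model that meets an accuracy floor and a latency ceiling) is one simple way to balance the three metrics; the article does not say which rule Optillm uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model estimates; values are illustrative, not measured."""
    name: str
    accuracy: float            # expected task accuracy in [0, 1]
    cost_per_1k_tokens: float  # cost in USD
    latency_ms: float          # typical response latency


def build_few_shot_prompt(instruction, examples, query):
    """Prepend worked input/output pairs so the model can infer the expected format."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


def select_model(candidates, min_accuracy, max_latency_ms):
    """Return the cheapest candidate meeting both constraints, or None if none does."""
    eligible = [
        m for m in candidates
        if m.accuracy >= min_accuracy and m.latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda m: m.cost_per_1k_tokens, default=None)


# Made-up candidates for demonstration only.
CANDIDATES = [
    ModelProfile("small-model", 0.78, 0.10, 120.0),
    ModelProfile("medium-model", 0.86, 0.50, 350.0),
    ModelProfile("large-model", 0.93, 3.00, 900.0),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment as positive or negative.",
    [("I loved it", "positive"), ("Terrible service", "negative")],
    "The food was great",
)
chosen = select_model(CANDIDATES, min_accuracy=0.85, max_latency_ms=500.0)
print(prompt)
print(chosen.name if chosen else "no model satisfies the constraints")
```

Treating accuracy and latency as hard constraints and cost as the quantity to minimize keeps the trade-off explicit; a weighted score over all three metrics would be an equally valid alternative.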

Third, Optillm targets inference optimization through techniques such as hardware acceleration with GPUs and TPUs, alongside model quantization and pruning. Quantization and pruning reduce the model’s size and complexity, which lowers memory requirements and improves inference speed. The tool’s plugin system also lets developers customize Optillm and integrate it into their existing workflows, improving its usability across diverse projects. While still in development, Optillm’s comprehensive framework shows potential to address critical LLM deployment challenges. Its scope is broader than that of traditional tools because it offers an integrated solution rather than isolated methods.
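To make quantization and pruning concrete, here is a small, dependency-free sketch of the two techniques applied to a plain list of weights. It uses symmetric per-tensor int8 quantization and magnitude-based pruning, which are common textbook variants chosen here for illustration; the article does not specify which variants Optillm uses, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_indices = set(order[:num_to_prune])
    return [0.0 if i in pruned_indices else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.01, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, sparsity=0.5)

print(quantized)  # small integers that fit in one byte each
print(restored)   # close to the original weights, within half a quantization step
print(pruned)     # the two smallest-magnitude weights are now zero
```

Storing each weight in one byte instead of four cuts memory roughly fourfold at the cost of a bounded rounding error, while pruning trades some accuracy for sparsity that specialized kernels can exploit.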

Optillm represents a promising innovation for optimizing LLMs by addressing computational cost, latency, and accuracy challenges through a multi-faceted approach. By combining advanced prompt optimization, task-specific model selection, inference acceleration, and flexible plugins, it stands as a versatile tool for enhancing LLM deployment. Although in its early stages, Optillm’s holistic methodology could significantly improve the accessibility, efficiency, and reliability of LLMs, unlocking their full potential for real-world applications.

All credit for this research goes to the researchers of this project.

The post OptiLLM: An OpenAI API Compatible Optimizing Inference Proxy which Implements Several State-of-the-Art Techniques that can Improve the Accuracy and Performance of LLMs appeared first on MarkTechPost.
