Planning and decision-making in complex, partially observed environments is a significant challenge in embodied AI. Traditionally, embodied agents rely on physical exploration to gather more information, which can be time-consuming and impractical, especially in large-scale, dynamic environments. For instance, autonomous driving or navigation in urban settings often requires the agent to make quick decisions based on limited visual inputs. Moving to gather more information is not always feasible or safe, for example when the agent must respond to a sudden obstacle such as a stopped vehicle. Agents therefore need a way to form a clearer understanding of their environment without costly and risky physical exploration.

Introduction to Genex

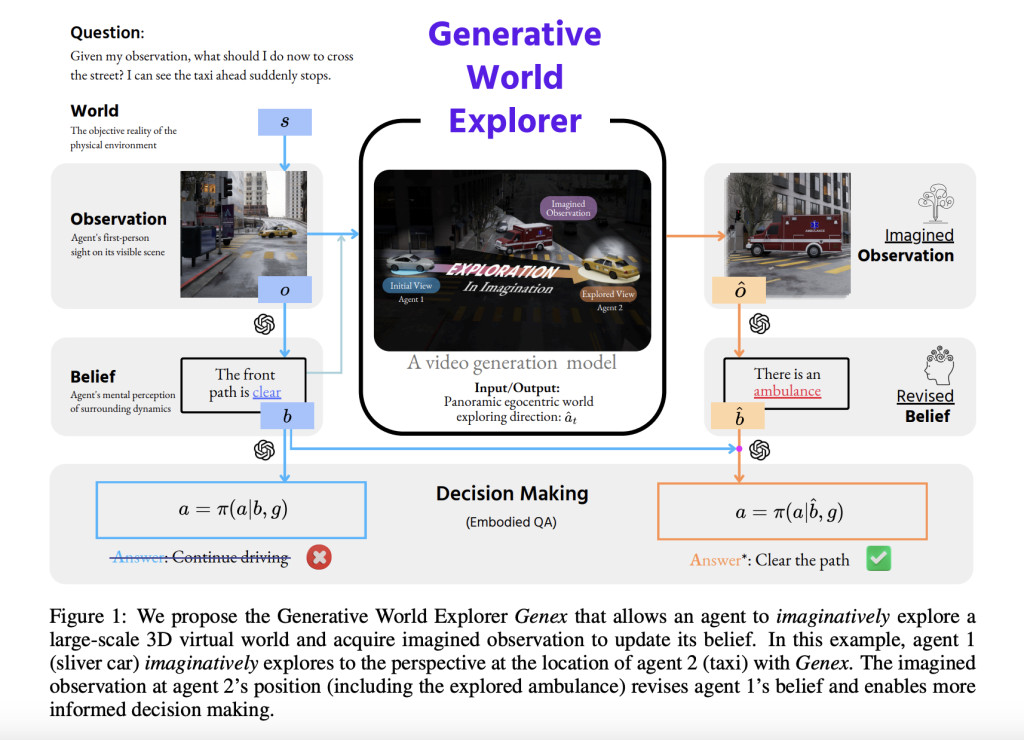

Johns Hopkins researchers introduced Generative World Explorer (Genex), a video generation model that enables embodied agents to imaginatively explore large-scale 3D environments and update their beliefs without physically moving. Inspired by how humans use mental models to infer unseen parts of their surroundings, Genex lets an agent imagine the unobserved parts of the environment, rather than navigating there to gather new observations, and adjust its understanding accordingly, so that it can make more informed decisions based on imagined scenarios. This capability could be particularly useful for autonomous vehicles, robots, and other AI systems that must operate in large-scale urban or natural environments.

To train Genex, the researchers created a synthetic urban scene dataset called Genex-DB, which includes diverse environments to simulate real-world conditions. Through this dataset, Genex learns to generate high-quality, consistent observations of its surroundings during prolonged exploration of a virtual environment. Beliefs updated from these imagined observations then inform existing decision-making models, enabling better planning without physical navigation.

Technical Details

Genex uses an egocentric video generation framework conditioned on the agent's current panoramic view, with the intended movement direction supplied as an action input. From these, the model generates future egocentric observations, akin to mentally exploring new perspectives. The researchers used a video diffusion model trained on panoramic representations to keep the generated output coherent and spatially consistent. This matters because an agent must maintain a consistent understanding of its environment even as it generates long-horizon observations.
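
Genex's own conditioning mechanism is not reproduced here. As a small illustration of why panoramas are a convenient representation, the sketch below assumes an equirectangular layout in which each column is a fixed azimuth increasing to the right and the last column wraps around to the first. Under that assumption, a pure change in heading is just a circular shift of columns. The function name `rotate_yaw` and the toy grayscale grid are hypothetical.

```python
from typing import List

# Toy equirectangular panorama: rows x columns of grayscale values.
# Assumption: column index increases with azimuth to the right and the
# last column wraps around to the first (360 degrees in total).
Panorama = List[List[float]]


def rotate_yaw(pano: Panorama, yaw_degrees: float) -> Panorama:
    """Return the panorama seen after turning by yaw_degrees (positive = right).

    Each column is a fixed azimuth, so a pure heading change is a circular
    shift: new[c] = old[(c + shift) % width].
    """
    width = len(pano[0])
    # Convert the angle to a whole number of columns; % keeps it in [0, width).
    shift = round(yaw_degrees / 360.0 * width) % width
    return [row[shift:] + row[:shift] for row in pano]
```

For example, turning 90 degrees on an 8-column panorama shifts the columns by 2, and a full 360-degree turn returns the original image. Actually moving through the scene has no such closed form, because it reveals previously occluded content; that is where a learned generator is needed.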

One of the core techniques introduced is spherical-consistent learning (SCL), which trains Genex to produce smooth transitions and continuity in panoramic observations. Unlike conventional video generation models, which typically generate frames from a single, limited viewpoint, Genex's panoramic approach captures the entire 360-degree view, so the generated video must remain consistent across all viewing directions. This makes Genex suitable for tasks like autonomous driving, where long-horizon prediction and spatial awareness are critical.
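
The exact SCL objective is not described in this article, so the following is only a hypothetical diagnostic for one property a spherically consistent panorama should have: in an equirectangular image the last column wraps around to the first, so the seam at 0/360 degrees should look like any other column boundary. `seam_discontinuity` is an illustrative name, and the toy grayscale grid stands in for real frames; this is not the paper's training loss.

```python
from typing import List

# Toy equirectangular panorama: rows x columns of grayscale values,
# where the last column is physically adjacent to the first.
Panorama = List[List[float]]


def mean_abs_column_diff(pano: Panorama, left: int, right: int) -> float:
    """Mean absolute difference between two columns, averaged over rows."""
    return sum(abs(row[left] - row[right]) for row in pano) / len(pano)


def seam_discontinuity(pano: Panorama) -> float:
    """Ratio of the jump across the 0/360-degree seam to the average jump
    between ordinary neighbouring columns (hypothetical diagnostic).

    About 1.0: the seam looks like any other column boundary.
    Much larger: a visible seam where the panorama wraps around.
    """
    width = len(pano[0])
    interior = sum(
        mean_abs_column_diff(pano, c, c + 1) for c in range(width - 1)
    ) / (width - 1)
    seam = mean_abs_column_diff(pano, width - 1, 0)
    if interior == 0:
        # Perfectly flat interior: any jump at the seam is infinitely worse.
        return float("inf") if seam > 0 else 1.0
    return seam / interior
```

A row whose values rise and fall smoothly around the circle, such as `[0, 1, 2, 3, 2, 1]`, scores 1.0, whereas `[0, 1, 2, 3, 4, 5]` jumps from 5 back to 0 at the seam and scores 5.0.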

Importance and Results

The introduction of imagination-driven belief revision is a significant step for embodied AI. With Genex, agents can generate a sequence of imagined views that simulate physical exploration and update their beliefs much as they would after actually navigating, but without the associated risks and costs. This ability is vital in scenarios such as autonomous driving, where safety and rapid decision-making are paramount.
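
In the usual POMDP framing, revising a belief after an observation follows Bayes' rule. The article describes Genex's imagined observations being handed to existing decision-making models rather than to an explicit filter, so the toy below is only an analogy: it treats an imagined view as if it were a real observation. The hidden states, the function name `bayes_update`, and all probabilities are made up for illustration.

```python
from typing import Dict


def bayes_update(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Posterior belief over hidden states after one observation.

    prior[s]      -- P(s) before the observation
    likelihood[s] -- P(observation | s); here the observation is an imagined view
    """
    unnormalised = {s: prior[s] * likelihood[s] for s in prior}
    total = sum(unnormalised.values())
    if total == 0:
        raise ValueError("observation is impossible under the prior")
    return {s: p / total for s, p in unnormalised.items()}


# Hypothetical scenario: is a pedestrian hidden behind a stopped vehicle?
prior = {"pedestrian_hidden": 0.2, "clear": 0.8}
# Made-up likelihoods of "the imagined view shows a pedestrian" under each state.
likelihood = {"pedestrian_hidden": 0.9, "clear": 0.1}
posterior = bayes_update(prior, likelihood)
```

With these illustrative numbers, the belief that a pedestrian is hidden rises from 0.2 to about 0.69, the kind of shift that could change a planner's decision without the vehicle moving.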

In experimental evaluations, Genex outperformed baseline models on several metrics, including video quality and exploration consistency. Notably, on the Imaginative Exploration Cycle Consistency (IECC) metric, Genex maintained a high level of coherence during long-range exploration, with mean squared error (MSE) consistently lower than that of competing models. These results indicate that Genex not only generates high-quality visual content but also maintains a stable understanding of the environment over extended periods of exploration. Furthermore, in multi-agent scenarios, Genex yielded a significant improvement in decision accuracy, highlighting its robustness in complex, dynamic settings.
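
Judging from its name, IECC checks whether a model returns to the same view after imaginatively exploring a closed loop. The sketch below assumes that reading: it rolls a generator through actions that end at the starting pose and reports the MSE between the first and last views. The generator interface, the rotation-only loop (chosen so that an exact reference generator exists), the pixel-space MSE, and all function names are assumptions; the paper's protocol may differ, for example in the paths sampled or the space in which MSE is computed.

```python
from typing import Callable, List

Panorama = List[List[float]]
# Hypothetical generator interface: (current view, yaw action in degrees) -> next view.
Generator = Callable[[Panorama, float], Panorama]


def mse(a: Panorama, b: Panorama) -> float:
    """Pixel-wise mean squared error between two equally sized panoramas."""
    sq = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(sq) / len(sq)


def cycle_consistency_error(generate: Generator, start: Panorama, actions: List[float]) -> float:
    """Imagine along a closed loop of actions, then compare the final view
    with the starting view. Lower means more consistent exploration."""
    residue = sum(actions) % 360
    if min(residue, 360 - residue) > 1e-6:
        raise ValueError("actions must return to the starting heading")
    view = start
    for action in actions:
        view = generate(view, action)
    return mse(start, view)


def ideal_rotation(pano: Panorama, yaw: float) -> Panorama:
    """Exact reference generator for pure rotations: a circular column shift."""
    width = len(pano[0])
    shift = round(yaw / 360.0 * width) % width
    return [row[shift:] + row[:shift] for row in pano]


def drifting_rotation(pano: Panorama, yaw: float) -> Panorama:
    """Flawed stand-in generator: rotates correctly but brightens every pixel by 0.1."""
    return [[v + 0.1 for v in row] for row in ideal_rotation(pano, yaw)]
```

On a loop of four 90-degree turns, the exact rotation generator scores 0, while the drifting one accumulates a brightness error of 0.4 per pixel and scores about 0.4² = 0.16, mirroring how small per-step errors compound over long-horizon exploration.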

Conclusion

In summary, the Generative World Explorer (Genex) represents a significant advancement in the field of embodied AI. By leveraging imaginative exploration, Genex allows agents to mentally navigate large-scale environments and update their understanding without physical movement. This approach not only reduces the risks and costs associated with traditional exploration but also enhances the decision-making capabilities of AI agents by allowing them to take into account imagined, rather than merely observed, possibilities. As AI systems continue to be deployed in increasingly complex environments, models like Genex pave the way for more robust, adaptive, and safe interactions in real-world scenarios. The model’s application to autonomous driving and its extension to multi-agent scenarios suggest a wide range of potential uses that could revolutionize how AI interacts with its surroundings.

Check out the Paper and Project Page. All credit for this research goes to the researchers of this project.

The post John Hopkins Researchers Introduce Genex: The AI Model that Imagines its Way through 3D Worlds appeared first on MarkTechPost.