In recent years, the development of large language models has significantly advanced natural language processing (NLP). These models, trained on extensive datasets, can generate, understand, and analyze human language with remarkable proficiency. However, building such models requires substantial amounts of data, and access to high-quality multilingual datasets remains a considerable challenge. The scarcity of openly available, large-scale, and diverse training datasets has hindered researchers and developers from creating more inclusive and robust language models, especially for less widely spoken languages. Language barriers and limited representation have prevented NLP systems from reaching their full potential. Addressing these challenges requires a new approach that prioritizes multilingualism and open access in language model training.

The Release of Common Corpus

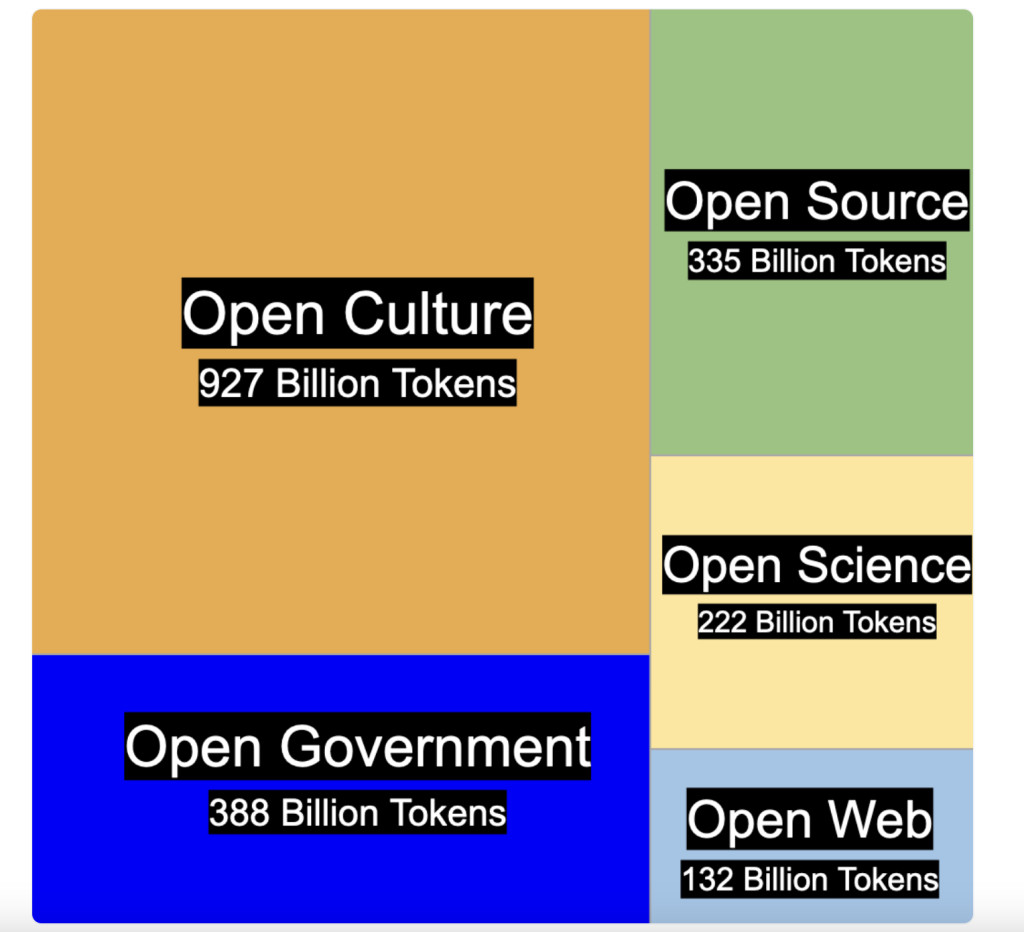

Pleias recently released the Common Corpus: the largest multilingual dataset for pretraining language models. This extensive dataset is a significant milestone for the NLP community, offering over two trillion tokens across dozens of languages, sourced from various open domains. Available on Hugging Face, the Common Corpus is part of the AI Alliance’s open dataset initiative, embodying a commitment to open-access data for research and innovation. Common Corpus is a collection that celebrates the diversity and breadth of the knowledge commons, containing five major categories of data: open culture, open government, open source, open science, and open web. From public reports to scientific publications, open culture resources like Wikipedia, and even permissively licensed code from GitHub, this dataset provides an unprecedented breadth of content for training multilingual models. The inclusion of these diverse data types makes it ideal for the pretraining of general-purpose language models that can understand and respond to nuanced, varied human communication.

Technical Details and Benefits

From a technical standpoint, the Common Corpus is an extraordinary achievement, serving as a multilingual data powerhouse. It includes curated data from open-access repositories like OpenAlex for scientific articles, government publications, GitHub for open-source software, and more. By leveraging multiple data domains, Pleias ensures that the dataset is not only vast but also represents a wide spectrum of real-world content. This diversity enables language models trained on Common Corpus to develop better contextual understanding and a deeper grasp of different genres and registers of language. Furthermore, its multilingual nature addresses the critical need for equitable representation across global languages, helping NLP researchers work toward a future where language technologies are not dominated by only English or a handful of widely spoken languages. The dataset, with its emphasis on open access, also helps in reducing the resource disparity between major research entities and independent or academic researchers, making advanced language technology more accessible.

Importance and Results

The release of the Common Corpus is a pivotal development for multiple reasons. The dataset not only sets a new benchmark in terms of size but also embodies a vision of shared knowledge, reproducibility, and inclusivity. It empowers researchers across the globe to develop language models that cater to a broader audience. By training on a rich multilingual dataset, future models can deliver more accurate, culturally aware, and contextually nuanced responses. Preliminary experiments have already shown promising results, with models trained on the Common Corpus exhibiting improved performance in zero-shot and few-shot settings across a variety of languages. This suggests that the scope of such a dataset can genuinely elevate language models beyond the typical monolingual or bilingual training paradigms, offering a real step forward for both academia and industry in tackling challenges like language preservation and ensuring the cultural inclusiveness of AI systems.

Conclusion

In conclusion, Pleias’ Common Corpus stands as a monumental contribution to the future of multilingual language modeling. By providing an open and comprehensive dataset, it addresses the challenges of data accessibility and diversity that have limited NLP development. With the dataset being openly available on platforms like Hugging Face, it also reflects a growing commitment within the AI community to prioritize collaboration and openness. As we move forward, resources like Common Corpus will be critical in shaping more democratic, fair, and inclusive AI systems that can truly serve a global audience.

Check out Common Corpus on HuggingFace. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

Why AI-Language Models Are Still Vulnerable: Key Insights from Kili Technology’s Report on Large Language Model Vulnerabilities [Read the full technical report here]

The post Pleias Introduces Common Corpus: The Largest Multilingual Dataset for Pretraining Language Models appeared first on MarkTechPost.

Source: Read MoreÂ