Large language models (LLMs) have revolutionized natural language processing by offering sophisticated abilities for a range of applications. However, these models face significant challenges. First, deploying these massive models on end devices, such as smartphones or personal computers, is extremely resource-intensive, making integration impractical for everyday applications. Second, current LLMs are monolithic, storing all domain knowledge in a single model, which often results in inefficient, redundant computations and potential conflicts when trying to address diverse tasks. Third, as the requirements of tasks and domains evolve, these models need efficient adaptation mechanisms to continually learn new information without retraining from scratch, a demand that grows harder to meet as the models grow larger.

The Concept of Configurable Foundation Models

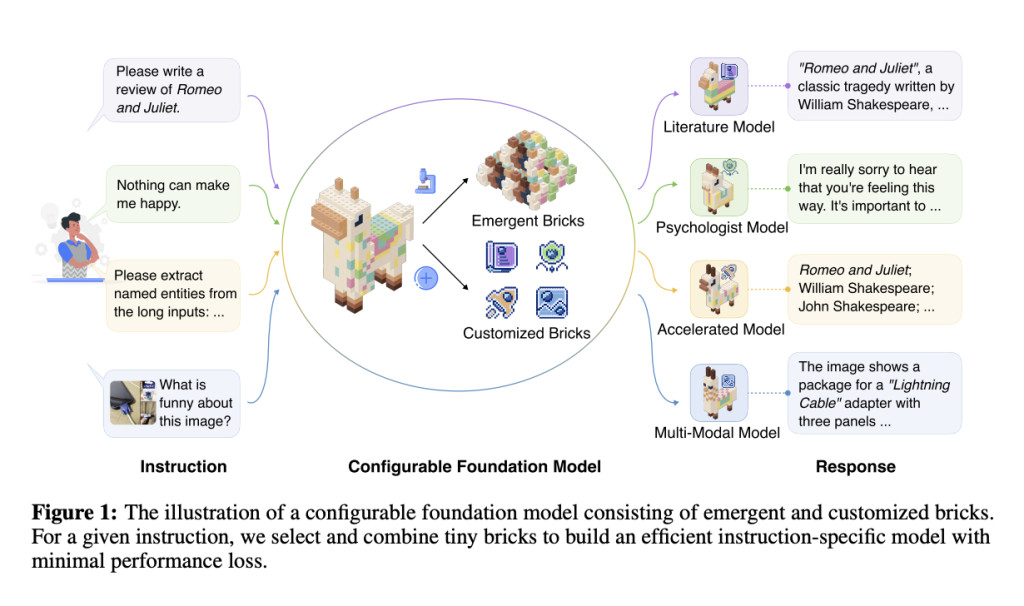

A new research study from Tsinghua University proposes a concept called Configurable Foundation Models, which is a modular approach to LLMs. Inspired by the modularity in biological systems, the idea is to break LLMs into multiple functional modules or "bricks." Each brick can be either an emergent brick that naturally forms during pre-training or a customized brick specifically designed post-training to enhance a model's capabilities. These bricks allow for flexible and efficient configuration, where only a subset of bricks can be dynamically activated to handle specific tasks or solve particular problems, thus optimizing resource utilization. Such modularization makes the models configurable, versatile, and adaptable, allowing them to function with fewer computational resources without a significant compromise in performance.

Technical Details and Benefits

Technically, bricks can be classified into emergent and customized types. Emergent bricks are functional modules that develop spontaneously during the pre-training process, often through the differentiation of neurons into specialized roles. Customized bricks, on the other hand, are designed to inject specific capabilities such as new knowledge or domain-specific skills after the initial training. These bricks can be updated, merged, or grown, allowing models to dynamically reconfigure based on the tasks at hand. One major benefit of this modularity is computational efficiency; rather than activating all model parameters for every task, only the relevant bricks need to be triggered, reducing redundancy. Furthermore, this modular approach makes it possible to introduce new capabilities by simply adding new customized bricks without retraining the entire model, thus allowing for continual scalability and flexible adaptation to new scenarios.
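To make the idea of selective activation concrete, here is a minimal sketch in Python. It is an illustration only, not the paper's implementation: the `Brick` and `ConfigurableModel` classes, the tag-based selection rule, and the string-transforming `apply` functions are all hypothetical stand-ins for real neural modules and a real routing mechanism.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Brick:
    """Hypothetical functional module; `tags` describe the tasks it serves."""
    name: str
    tags: Set[str]
    apply: Callable[[str], str]
    kind: str = "customized"  # or "emergent"


@dataclass
class ConfigurableModel:
    """Hypothetical container that activates only the bricks a task needs."""
    bricks: Dict[str, Brick] = field(default_factory=dict)

    def add_brick(self, brick: Brick) -> None:
        # "Growing" the model: register a new brick without touching the others.
        self.bricks[brick.name] = brick

    def select(self, task_tags: Set[str]) -> List[Brick]:
        # Assumed routing rule: keep bricks whose tags overlap with the task.
        return [b for b in self.bricks.values() if b.tags & task_tags]

    def run(self, text: str, task_tags: Set[str]) -> str:
        # Only the selected bricks are executed; the rest stay idle.
        for brick in self.select(task_tags):
            text = brick.apply(text)
        return text


model = ConfigurableModel()
model.add_brick(Brick("math", {"arithmetic"}, lambda s: s + " [math]"))
model.add_brick(Brick("legal", {"law"}, lambda s: s + " [legal]"))

result = model.run("2+2", {"arithmetic"})  # only the "math" brick runs
```

Adding a third brick later takes one more `add_brick` call and leaves the existing bricks untouched, which mirrors the claim that new capabilities can be introduced without retraining the whole model.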

Importance and Empirical Results

The importance of Configurable Foundation Models lies in their potential to bring LLMs to more practical, efficient deployments. The modular framework is intended to let LLMs run on devices with limited computational power, making advanced NLP capabilities more accessible. The empirical analysis, performed on two models (Llama-3-8B-Instruct and Mistral-7B-Instruct-v0.3), indicates that their feedforward layers inherently follow a modular pattern with functional specialization. For example, the analysis showed that neuron activation is highly sparse, meaning only a small subset of neurons is involved in processing any specific instruction. Moreover, it was found that these specialized neurons can be partitioned without impacting other model capabilities, supporting the concept of functional modularization. These findings suggest that configurable LLMs can maintain performance with fewer computational demands, which supports the brick-based approach.
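The two measurements described above, activation sparsity and functional specialization, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the study's methodology: the function names, the magnitude `threshold` used to decide whether a neuron counts as active, the `margin` used to call a neuron specialized, and the activation values are all made up for the example.

```python
from typing import Dict, List, Sequence


def active_fraction(activations: Sequence[float], threshold: float = 0.0) -> float:
    """Fraction of neurons whose activation magnitude exceeds `threshold`."""
    if not activations:
        return 0.0
    active = sum(1 for a in activations if abs(a) > threshold)
    return active / len(activations)


def firing_rates(samples: List[Sequence[float]], threshold: float = 0.0) -> List[float]:
    """Per-neuron share of samples in which the neuron is active."""
    n_neurons = len(samples[0])
    return [
        sum(1 for s in samples if abs(s[i]) > threshold) / len(samples)
        for i in range(n_neurons)
    ]


def specialized_neurons(
    per_task: Dict[str, List[Sequence[float]]],
    task: str,
    threshold: float = 0.0,
    margin: float = 0.5,
) -> List[int]:
    """Neurons that fire at least `margin` more often for `task` than for every other task."""
    rates = {t: firing_rates(s, threshold) for t, s in per_task.items()}
    others = [r for t, r in rates.items() if t != task]
    return [
        i for i, rate in enumerate(rates[task])
        if all(rate - other[i] >= margin for other in others)
    ]


# Made-up activations for a 4-neuron layer, two samples per task.
acts = {
    "code": [[0.9, 0.0, 0.0, 0.2], [0.8, 0.0, 0.0, 0.0]],
    "math": [[0.0, 0.7, 0.0, 0.3], [0.0, 0.6, 0.0, 0.0]],
}

sparsity = active_fraction(acts["code"][0])    # 2 of 4 neurons are active
code_neurons = specialized_neurons(acts, "code")
math_neurons = specialized_neurons(acts, "math")
```

In this toy data, neuron 0 fires only for "code" and neuron 1 only for "math", while neuron 3 fires equally often for both and so is not counted as specialized for either task.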

Conclusion

The Configurable Foundation Model introduces an innovative solution to some of the pressing issues in large language models today. Modularizing LLMs into functional bricks improves computational efficiency, scalability, and flexibility. It aims to let these models handle diverse and evolving tasks without the computational overhead typical of traditional monolithic LLMs. As AI continues to penetrate everyday applications, approaches like the Configurable Foundation Model could be instrumental in ensuring that these technologies remain both powerful and practical, pushing forward the evolution of foundation models in a more sustainable and adaptable direction.

The post How Modular Bricks are Revolutionizing the Efficiency of Large Language Models appeared first on MarkTechPost.
