This is the second post of a two-part series. In Part 1, we explored how you can use Automatic Data Optimization (ADO) and Oracle Information Lifecycle Management (ILM) policies for data compression. In this post, we demonstrate how to use Heat Map statistics to monitor data usage and integrate this information with hybrid partitioning and ILM data movement policies to move data to more cost-effective storage solutions.

Hybrid partitioning based on data access pattern

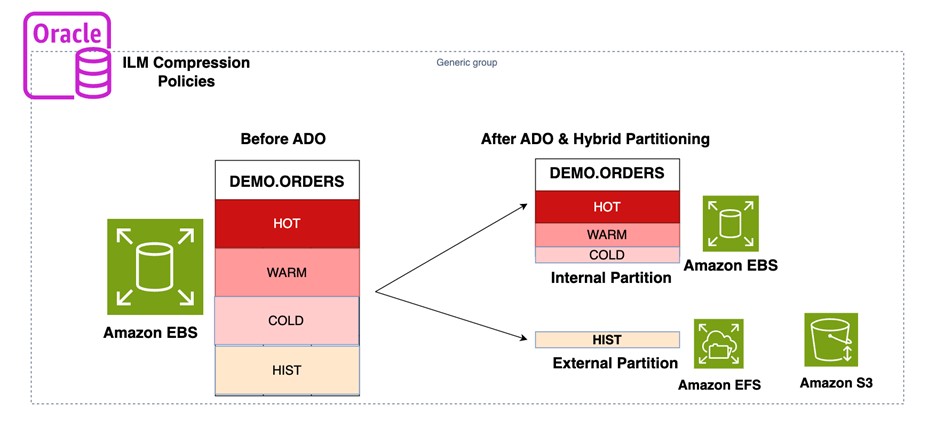

In this use case, you use Heat Map data tracking and hybrid partitioning to efficiently move data to external storage based on its age and usage patterns. To accomplish this, you use Heat Map statistics alongside a custom procedure. For Amazon Relational Database Service (Amazon RDS) for Oracle, you need to integrate your instance with Amazon Elastic File System (Amazon EFS). Then you create a custom procedure to migrate an internal partition to an external partition on Amazon EFS based on the condition you define. The following diagram illustrates this architecture.

In this example, you migration partitions that haven’t been accessed in the last 6 months using a condition such as FULL_SCAN < (sysdate - 180), where the FULL_SCAN column in the user_heat_map_segment view represents the latest timestamp when the corresponding segment (or partition, in this example) underwent a full table scan.

For Amazon RDS for Oracle, the implementation steps are as follows:

- Create an EFS file system and integrate Amazon EFS with the RDS for Oracle instance.

- Create an accessory Amazon Elastic Compute Cloud (Amazon EC2) instance that you will use as part of these instructions. Also, create a security group inbound rule to allow communication between the EC2 instance with Amazon EFS.

- On the AWS Management Console, from Amazon EC2, mount Amazon EFS by running the following code (Replace

fs-0cac1d632b3bf7f1c.efs.us-east-1.amazonaws.comwith your EFS identifier): - As a user with execute privilege on rdsadmin.rdsadmin_util, create a directory for the EFS file system in Amazon RDS for Oracle (replace p_path_on_efs with correct EFS identifier):

If above directory is created as other user, then grant read-write access to demo.

Use this directory to keep the files related to external partitions. You can keep files for multiple external partitions in the same Oracle directory.

Internal partitioned tables combined with Oracle external partitioned tables form a more general partitioning method called hybrid partitioned tables. You can either use a hybrid partitioned table or create an external table to move your aged partition to an external partition. In this example, we use a hybrid partitioned table.

- If there are existing ILM policies defined on the table, delete them before converting to a hybrid partitioned table:

- Convert the table to a hybrid partitioned table by adding external partition attributes to the

ORDERStable: - Optionally, define ILM compression policies on the hybrid partitioned table, as explained in Part 1 of this series. For this use case, we assume that these ILM compression policies have already been applied, and the data is compressed based on its lifecycle.

- To identify suitable partitions for conversion to external partitions, evaluate your specific requirements and data access patterns. Use Heat Map statistics to pinpoint the last modification and last access dates for each partition. For example, you can find partitions that haven’t been accessed in the last 6 months with the following SQL condition:

- For testing, access data from specific partitions to simulate actual access patterns for hot data by updating their access timestamps in the Heat Map. This will prevent those partitions from being flagged for migration to external storage based on their last access date.

- Create a procedure to move the internal partition to an external partition based on the Heat Map tracking data. The following pseudocode outlines the logic of the

move_to_extpartitionprocedure, which automates the process of identifying and moving older partitions to external partitions based on the Heat Map tracking data:The following is example code:

- To run the procedure, you need to pass the owner, table name, and

NUM_OF_DAYS, which specifies the number of days a partition has gone without access: - Schedule this procedure to run periodically for migrating older partitions. For testing, we run this manually and set a condition value of 0.01 days (approximately 15 minutes) for rapid testing:

After an internal partition is successfully converted to an external partition, it should no longer appear in the

USER_SEGMENTSview. Additionally, theNUM_ROWScolumn in theuser_tab_partitionsshould display a null value, as shown in the following code: - Verify that the external partition was created in Amazon EFS:

- Verify that you are able to access data from both the internal and external partitions:

- Check the size of table again after converting the partition to an external partition and running the ILM policies:

After implementing hybrid partitioning, the size of the table DEMO.ORDERS further reduced from 1,363 MB to 208 MB.

In RDS Custom for Oracle and self-managed databases running on Amazon EC2, you can create external partitions directly in Amazon Simple Storage Service (Amazon S3) using DBMS_CLOUD, which will further reduce the cost. For more details, see Use the DBMS_CLOUD package in Amazon RDS Custom for Oracle for direct Amazon S3 integration.

External partitions will be read-only partitions and won’t get backed up as a part of Amazon RDS automated and manual snapshots. You should take a backup of your EFS and S3 directories separately. When restoring an RDS for Oracle instance from a snapshot or performing point-in-time recovery (PITR), the external partitions stored in Amazon EFS won’t be automatically restored. However, if you apply the same option group as the source database during the restore process, and the option group is configured to mount the same EFS file system containing the external partition data, the restored RDS instance will have access to the external partitions.

Storage tiering based on data access pattern

ILM data movement policies allow you to automatically move data between different types of storage tiers based on the data’s age, activity level, and importance. This helps optimize storage costs and improve performance by storing frequently accessed, high-value data on high-performance, more expensive storage like io2 or io1, and moving less frequently accessed data or cold data to lower-cost storage like gp3 or gp2. The following diagram illustrates this architecture.

At the time of writing this post, storage tiering is not supported in Amazon RDS for Oracle and RDS Custom for Oracle. Storage tiering-based data management can only be used in self-managed databases running on Amazon EC2.

The steps to implement storage tiering-based ADO are as follows:

- Assuming that your database is already using an io2 or io1 EBS volume, add another EBS volume (gp3 or gp2) and attach it to the database EC2 instance.

- Create a separate logical volume or ASM data diskgroup for the newly attached EBS volume to store your cold data or less frequently accessed data. You should not add this volume to your existing ASM data diskgroup.

- Create two separate tablespaces using different storage tiers. For example:

- Assign an appropriate quota on the tablespaces to the user:

- Create a table and partitions in the

HOT_DATAtablespace: - Add an ILM policy to add storage tiering. The policy will be applied to the partitions of the table.

According to the defined ILM policy, the ADO decision to move segments to different storage tiers will depend on 90 days of no access.

- To test the policy, invoke the ILM policy by setting the ILM evaluation time to seconds as shown in previous examples, flush the Heat Map information, and wait more than 90 seconds:

For the purposes of this post, we can’t wait for the maintenance window to invoke the ADO policy jobs. Instead, we invoke it manually as the table owner.

- Access data from some of the partitions to update the access timestamp of these partitions in the Heat Map. This makes sure the policy is not applied to these partitions.

- Check the status of the submitted job:

- After you run the preceding policy, check the tablespace for partitions that aren’t accessed for more than 90 seconds (90 days in reality):

Two of the partitions moved to the COLD_DATA tablespace.

Clean up

You can disable and delete all ADO policies in a table with the following code:

Use the following commands to clean up the EFS directory DATA_PUMP_DIR_EFS:

Delete all resources that you created to test this solution, including the EC2 instance, RDS instance, EFS storage and EBS storage. Drop related data diskgroups and tablespaces.

Conclusion

In this post, we showed how to implement hybrid partitioning and ADO to move data to cost-effective storage based on its access patterns, addressing the challenges of growing databases. By applying hybrid partitioning and ILM policies, we reduced the table size by more than 80%. This leads to significant cost savings, particularly for RDS instances running in Multi-AZ configurations or with multiple copies.

Leave your feedback in the comments section.

About the Authors

Archana Sharma is a Sr. Database Specialist Solutions Architect, working with Worldwide Public Sector customers. She has years of experience in relational databases, and is passionate about helping customers in their journey to the AWS Cloud with a focus on database migration and modernization.

Archana Sharma is a Sr. Database Specialist Solutions Architect, working with Worldwide Public Sector customers. She has years of experience in relational databases, and is passionate about helping customers in their journey to the AWS Cloud with a focus on database migration and modernization.

Ramesh Pathuri is a Senior Database Consultant with AWS Worldwide Public Sector ProServe. His extensive experience in databases, along with a passion for guiding customers through their innovative transition to the AWS Cloud, enables them to unlock new possibilities through database migration and modernization, and optimize their data and analytics solutions for increased innovation, efficiency, and impact.

Ramesh Pathuri is a Senior Database Consultant with AWS Worldwide Public Sector ProServe. His extensive experience in databases, along with a passion for guiding customers through their innovative transition to the AWS Cloud, enables them to unlock new possibilities through database migration and modernization, and optimize their data and analytics solutions for increased innovation, efficiency, and impact.

Source: Read More