In this two-part series, we demonstrate how to optimize storage for Oracle database workloads on AWS by using Oracle’s built-in features, such as Heat Map, Automatic Data Optimization (ADO), and hybrid partitioning. These features help classify data by its lifecycle stage and automate data management tasks to significantly reduce storage costs, while enhancing database performance, especially for growing datasets.

In this series, we outline three different approaches to effectively manage the data lifecycle. You can apply these strategies individually or in combination, depending on the specific needs of your data environment:

- Automatic data compression based on data access pattern – Use Oracle’s Heat Map, ADO, and Oracle Information Lifecycle Management (ILM) policies to apply compression on tables or partitions that are less frequently accessed, helping reduce storage costs while improving performance

- Hybrid partitioning based on data access pattern – Use Heat Map data to identify aged or rarely accessed data and move it to external partitions, freeing up high-performance storage for more active workloads while still retaining access to older data

- Storage tiering based on data access pattern – Use Heat Map, ADO, and data movement ILM policies to move data to colder, less expensive storage tiers as data ages and becomes less frequently used

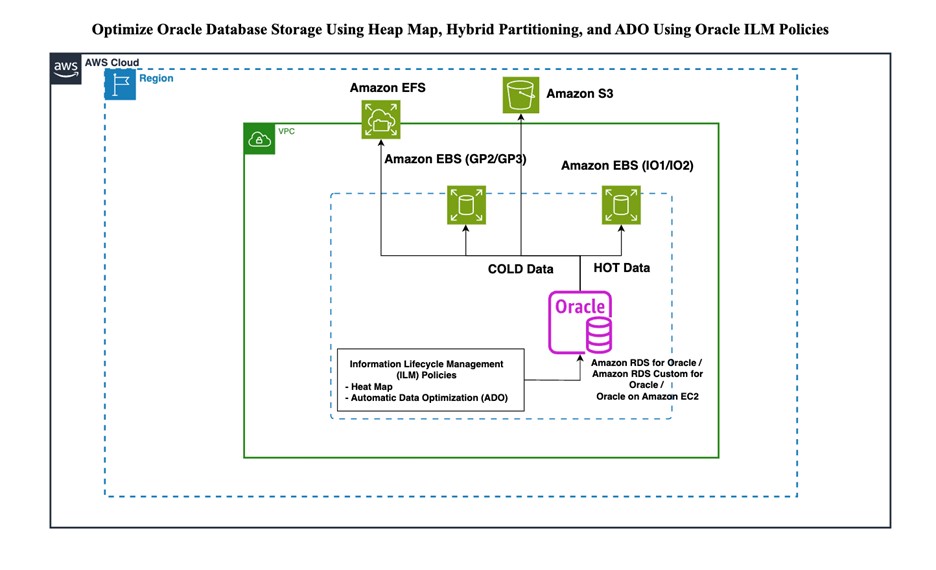

The first two use cases are applicable across all deployment options for Oracle Database Enterprise Edition on AWS, including Amazon Relational Database Service (Amazon RDS) for Oracle, Amazon RDS Custom for Oracle, and a self-managed database running on Amazon Elastic Compute Cloud (Amazon EC2). The third use case is limited to self-managed databases on Amazon EC2, because tiered storage is currently not supported in RDS instances (as of this writing).

In this post, we explore how to use ADO and Oracle ILM policies to automatically compress data based on usage patterns. In Part 2, we take a deeper look at using Heat Map statistics to manage both active and inactive data through hybrid partitioning and ADO, enabling the automatic movement of data to different storage tiers as it becomes less frequently accessed.

Solution overview

Oracle ILM helps you manage data based on its usage patterns over time. It allows you to define data classes and uses Heat Map and ADO to automate data compression and movement across different storage tiers based on user-defined policies and data access patterns.

Heat Map tracks data usage at a granular level, providing insights into data activity and helping ADO make informed decisions about when to compress or move data to appropriate storage tiers, providing efficient data management throughout its lifecycle.

ADO is a feature within Oracle Database that automates the management of data storage. It constantly assesses the value and usage patterns of your data and takes proactive steps to make sure everything is stored in the most efficient manner. ADO operates based on the ILM policies you define. These policies set the rules for when and how data should be compressed, moved, or otherwise optimized.

Here’s how it breaks down:

- Heat Map tracking – ADO uses Oracle’s Heat Map feature to monitor data usage at a granular level. It tracks how often each row and segment is accessed and modified, giving a detailed picture of data activity over time.

- Policy-based actions – Based on the insights from Heat Map, ADO implements the policies you’ve set up. For example, you can create a policy to compress data that hasn’t been accessed in the last 30 days, or to move seldom-used data to a cheaper storage tier.

- Automated execution – After these policies are in place, ADO takes care of the rest. It automatically compresses, moves, and optimizes data without manual intervention, making sure your database remains high performing and cost-efficient.

The following diagram illustrates this architecture.

The high-level steps to implement this solution are as follows:

- Enable Heat Map at the database level. This feature tracks data access and modifications at both the segment and row levels, providing a detailed, real-time map of how your data is being used.

- Create ADO policies tailored to your business needs. You can define these policies at various levels, such as tablespace, group, segment, or row. Policies can also be based on different types of operations, such as creation, access, and modification. You can specify conditions for when these policies take effect, such as based on a time period (for example, after 3 days, 1 week, or 1 year) or a tablespace usage threshold, in which you invoke policies when usage exceeds a certain threshold. The actions specified in these policies can include compression, moving to a different storage tier, or both.

- Segment-level ADO policies are evaluated daily during the auto-task maintenance window by default, but DBAs can create custom schedules. Row-level policies are evaluated every 15 minutes by the Manageability Monitor (MMON)

- Use the USER_ILMEVALUATIONDETAILS and USER_ILMRESULTS views to check the execution details of ADO policies, confirming that everything is working as intended.

- Enable hybrid partitioning and convert partitions based on access patterns.

- Verify that segments are compressed or moved according to the policies. This confirmation step makes sure your data is optimally managed and stored.

Licensing

You must be licensed for the Oracle Advanced Compression or Oracle Database In-Memory option to use Oracle ADO, as explained in the Oracle documentation. You should also be licensed to use Oracle partitioning when using hybrid partitioning. Refer to the Oracle licensing documentation for the latest information.

Prerequisites

In this section, we discuss the prerequisites to complete before you get started. You will need Oracle EE running on AWS and a client to connect to the database. For this post, we use the SQL*Plus client and Amazon RDS for Oracle deployment.

Enable Heat Map tracking

Before you can start using ADO, you need to enable activity tracking. You can enable this by setting the initialization parameter HEAT_MAP. In Amazon RDS for Oracle, you can set this parameter by creating a custom parameter group. In RDS Custom for Oracle and a self-managed database running on Amazon EC2, you can use the following command by connecting to the pluggable database (PDB) as a user with ALTER SYSTEM privileges:

The preceding parameter enables the database to track read and write activities at the database level. Enabling Heat Map enables tracking both DML and access at the segment level and stores these details in a relevant table in the SYSAUX tablespace. The activities are not tracked for objects in the SYSTEM and SYSAUX tablespaces.

Tracking details of segments are available in real time in the V$HEAP_MAP_SEGMENT view. As soon as the session accesses some objects in the database, real-time segment access information will be available immediately in this view. Data from V$HEAP_MAP_SEGMENT is persisted into the SYS.HEAT_MAP_STAT$ table in the SYSAUX tablespace by the DBMS_SCHEDULER job at a regular period of time. This data is available in dictionary views like DBA_HEAT_MAP_SEGMENT and DBA_HEAT_MAP_SEG_HISTOGRAM.

After enabling Heat Map tracking, we set the tracking start time back 5 days to make sure statistics logged after this time are valid and considered by ADO:

You can also customize parameters that control ILM. You can check the values of the parameters from the dba_ilmparameters view:

For ILM to be active, the ENABLED parameter must be set to 1. The parameter POLICY TIME determines if ADO policies are specified in seconds or days. Values are 1 for seconds or 0 for days (default). We set POLICY TIME to seconds. This allows us to test policies without having to wait for long periods of time.

This will change the POLICY TIME value in dba_ilmparameters to 1.

Create a sample table with partitions and insert dummy data into the table

Use the following code to create a partitioned table called DEMO.ORDERS and load it with dummy data. We use this table throughout this post to demonstrate various use cases.

Check if table is getting tracked in the Heat Map:

Check the current size and compression status of the table and its partitions:

The size of the uncompressed DEMO.ORDERS table is around 1.36 GB.

Automatic data compression based on data access pattern

In this example, we show how you can automate the data compression level based on the data access pattern and the age of the data. The following diagram illustrates this architecture.

In Oracle, the following compression levels are available:

ROW STORE COMPRESS BASICROW STORE COMPRESS ADVANCEDCOLUMN STORE COMPRESS FOR QUERY LOW OR HIGHCOLUMN STORE COMPRESS FOR ARCHIVE LOW OR HIGH

In this post, we only test ROW STORE COMPRESS BASIC and ROW STORE COMPRESS ADVANCED.

You can define the ILM policy at the tablespace, group, segment, and row level. For this example, we create and enable an ADO row store compression policy on the DEMO.ORDERS table at the segment and row levels.

Segment-level compression policies

In segment-level compression policies, the entire segment properties get changed. These are most useful for partitioned tables where partitions have become unused or are infrequently used. The segment-level policy is run only one time and is disabled afterwards.

By issuing the following statements, we add ILM policies to automatically compress the partition at the segment level depending on the defined condition and age of the data:

In this example, we have defined ILM policies to perform advanced compression on two of the older partitions and basic compression on one of the partitions. Let’s verify the policies as follows:

To test the policy, we flush the Heat Map statistics from memory to disk and add a pause of more than 60 seconds so we know that the segment wasn’t modified in the last 60 seconds. We set the dbms_ilm_admin.policy_time parameter to seconds earlier to allow us to simulate 60 days in just 60 seconds of real clock for quick testing.

Instead of waiting for the MMON background process to invoke the policy job, we run the policy manually:

Check the status of the task:

After the JOB_STATE column value changes to COMPLETED SUCCESSFULLY, check if the table partitions were compressed based on the defined policy:

Because compression is at the segment level, all rows in the segment should be compressed. Let’s verify for one of the compressed partitions:

The ADO segment-level compression policy gets disabled after the object is already compressed. Its status will be changed to NO, as shown in the following example:

If you insert new rows into the partitions where segment-level compression policies are already run, new inserted rows won’t get compressed. If segment-level compression is being used in an active segment where data continues to be added, it’s recommended to re-enable the ADO segment level compression policy.

Let’s check the size of table after running the ADO segment-level policies:

Row-level compression policies

In a row-level policy, blocks where all the rows in the blocks meet the policy are compressed. The row-level policy continues to run after its first run. Row-level compression policies can be created based only on modification time. They can’t be created based on creation time or access time. Also, the only compression type available for row-level compression policies is ROW STORE COMPRESS ADVANCED.

Let’s test a row-level compression ADO policy on the ORDERS_HOT partition:

Verify that the policy is enabled:

Run the policy manually:

After the policy job is complete, check both the segment-level compression as well as row-level compression for the ORDERS_HOT partition:

The segment compression attributes haven’t been modified, but some rows (721,863) have been compressed and others (12,526) have not. Only blocks where all the rows meet the policy criteria are compressed.

Check the status of the ADO policy after the first ADO evaluation:

Because the ADO compression policy is set at the row level, the ADO compression policy is still useful to verify whether further updated or new inserted rows in blocks need to be compressed. This will reduce the size of the table further.

After implementing the defined ILM data compression policies, the size of the table was reduced from 1.36 GB to 360 MB, resulting in nearly a 70% reduction in storage space.

Conclusion

In this post, we demonstrated how ILM compression policies and ADO enhance storage management based on data age. You can significantly reduce your storage usage while maintaining cost-efficiency and effective data access.

In Part 2, we show you how to use Heat Map statistics to monitor data usage and integrate this information with hybrid partitioning and ILM data movement policies to move data to more cost-effective storage solutions.

Leave your feedback in the comments section.

About the Authors

Archana Sharma is a Sr. Database Specialist Solutions Architect, working with Worldwide Public Sector customers. She has years of experience in relational databases, and is passionate about helping customers in their journey to the AWS Cloud with a focus on database migration and modernization.

Archana Sharma is a Sr. Database Specialist Solutions Architect, working with Worldwide Public Sector customers. She has years of experience in relational databases, and is passionate about helping customers in their journey to the AWS Cloud with a focus on database migration and modernization.

Ramesh Pathuri is a Senior Database Consultant with AWS Worldwide Public Sector ProServe. His extensive experience in databases, along with a passion for guiding customers through their innovative transition to the AWS Cloud, enables them to unlock new possibilities through database migration and modernization, and optimize their data and analytics solutions for increased innovation, efficiency, and impact.

Ramesh Pathuri is a Senior Database Consultant with AWS Worldwide Public Sector ProServe. His extensive experience in databases, along with a passion for guiding customers through their innovative transition to the AWS Cloud, enables them to unlock new possibilities through database migration and modernization, and optimize their data and analytics solutions for increased innovation, efficiency, and impact.

Source: Read More