PostgreSQL’s ability to handle concurrent access while maintaining data consistency relies heavily on its locking mechanisms, particularly at the row level. When multiple transactions attempt to lock the same row simultaneously, PostgreSQL turns to a specialized structure called MultiXact IDs. While MultiXacts provide an efficient way to manage multiple locks on a single row, they can also introduce performance challenges, such as unexpected slowdowns or delays during vacuum operations.

In this post, we dive deep into the inner workings of MultiXacts, exploring how they function, where they are commonly used, and the potential side effects they can have on your database performance. You’ll learn how to monitor MultiXacts, identify bottlenecks, and implement strategies to mitigate their impact on your system. Whether you’re dealing with high-concurrency workloads, foreign keys, or savepoints, understanding MultiXacts is key to maintaining a performant and reliable PostgreSQL database. By the end of this post, you will have the tools and insights to proactively manage MultiXacts, optimize your database, and prevent issues like wraparound and excessive storage usage.

Understanding MultiXacts in PostgreSQL

To understand MultiXacts, we must first describe how locking information is stored in PostgreSQL. In this section we describe row locking, MultiXact storage and show examples of MultiXact locking behavior.

Row-level locking, XMAX, and MultiXact IDs

When a transaction locks a row in PostgreSQL, the transaction ID (XID) is stored in the row’s header under the XMAX field. This form of row-level locking is the most common form of locking, for example, during an UPDATE or DELETE transaction. Row-level locks in PostgreSQL don’t interfere with read operations; they only prevent other transactions from modifying or acquiring conflicting locks on the same row. These locks remain in place until the transaction completes or a savepoint is rolled back, similar to how table-level locks behave.

When multiple transactions need to lock the same row, PostgreSQL replaces the XID with a MultiXact ID to manage the concurrent locks efficiently. A MultiXact ID is a secondary data structure that tracks multiple transactions holding locks on the same row. Because the row header has limited space for lock information, MultiXact IDs provide an efficient way to manage multiple transaction locks by maintaining a list of transaction IDs. The MultiXact ID appears in the row header, and the list is stored separately in files in the pg_multixact subdirectory under PGDATA:

├── pg_multixact

│ ├── members

│ └── offsets

Example of MultiXact locking behavior

Let’s look at an example of MultiXact locking behavior. To do this, we create a demo database, create the pgrowlocks extension and install a small pgbench schema. If you follow along with these examples on your own instance, we recommend using a non-production instance and remember to remove the demo database when completed.

We will use 3 sessions in this example to demonstrate the Multixact locking behaviour.

In session 1, we check the current XID and then perform an operation with a shared lock on the pgbench_accounts relation (this will be the next XID). In this example we will use the SELECT…FOR SHARE mode which will allow other types of shared locks but prevent other exclusive locks. This is similar to locking used by a foreign key relationship, which we will describe further in this post.

In session 2, we check CTID and XMAX and see the XID of session 1. We will reference CTID here as it will become useful in queries used later in this post. As described earlier, a single transaction performing a row lock will have its XID show up in the XMAX field of the row itself. Now let’s examine the result of another session performing a locking operation:

We will use the pgrowlocks extension to show more details about a table’s locking information. Although it’s useful for the purposes of this post, the pgrowlocks extension performs a full scan of the entire relation and will therefore be slower on large relations. It’s not recommended to use this extension while a heavy workload is running because it can take a significant amount of time to complete as well as use database resources causing other queries to degrade.

We can see that there is one single XID recorded as a locker and that the column multi is false—indicating that this is not a MultiXact, rather just a regular transaction with an XID:

In session 3, we perform an operation with another shared lock:

Back in session 2, we check XMAX and see this is now converted to a MultiXact because both sessions 1 and 3 are performing an operation that takes a shared lock and replaces XMAX with the current MultiXact ID:

Using pgrowlocks, we can now specifically see that the locker column is represented by the MultiXact ID and the column multi is true. We can see both PIDs that are participating in this MultiXact.

We issue a commit in the sessions holding the locks (Sessions 1 and 3):

In session 2, we can see that the output from pgrowlocks provides real-time row locking information for a specified table and because there is no longer a lock, it displays no rows:

Example of MultiXact with higher concurrency

The preceding section presents a simple example with just a few transactions to describe what is happening. If we add a session 4 to this example, which also runs the SELECT…FOR SHARE command, PostgreSQL wouldn’t just add it to the existing MultiXact (670526 in that example); it would have to create a new MultiXact with all three sessions holding the lock.

This is important to understand because your workload may in fact have tens or hundreds of sessions performing this type of lock, which will quickly generate a long list of MultiXacts.

Using pgbench, let’s now simulate 50 sessions doing the same steps and watch the behavior. We will look at the XMAX field and then look deeper at how many MultiXact members this creates when all of our sessions are performing a SELECT…FOR SHARE on the same row. This time, we use a built-in function to show how many MultiXact members this uses and display the mode.

This is the script we will be using:

Let’s run this script and simulate 50 sessions:

$ pgbench -c 50 -T 30 demo -n -f pgbench_share.sql

As we mentioned, PostgreSQL doesn’t just append the new XID to the list; instead it has to create an entirely new MultiXact ID with a list of members. If we look at the previous MultiXact IDs as the workload ramped up to 50 sessions, we can see the MultiXact ID bloat that is left behind:

For additional details on how PostgreSQL implements row locking and MultiXact IDs, refer to the PostgreSQL source documentation.

Now that we have seen an example of what MultiXacts are, let’s understand which operations in the PostgreSQL database will lead to Multixact usage. The next section lists a few examples of such operations.

Operations using MultiXacts

In this section, we discuss some operations that use MultiXacts:

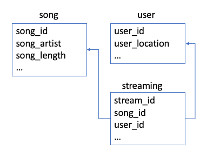

- Foreign keys – MultiXacts occur when multiple transactions acquire a shared lock on a parent record. For example, consider a music streaming schema where the system tracks current streams with foreign key references to a song and user table. When a popular song is released and millions of users start streaming it simultaneously, the system inserts entries in the streaming PostgreSQL uses a SELECT…FOR KEY SHARE on the table referenced by the foreign key. This can be surprising, because you are inserting in to the streaming table, but the rows in the song table are locked, and under concurrent usage those locks are converted to MultiXacts. The following figure illustrates this use case.

- ..FOR SHARE – MultiXact is used when the SELECT…FOR SHARE clause is applied to prevent updates and deletions on rows, while allowing other processes to acquire a shared lock on the same row. This behavior was discussed in the example earlier in this post.

- Sub-transactions from explicit savepoints – Creating explicit savepoints within a transaction creates additional sub-transactions that have separate XIDs. If you lock or modify the same row in these different sub-transactions, then the multixact mechanism comes in to play.

- Sub-transactions from PL/pgSQL EXCEPTION clauses – Each EXCEPTION clause that you write in your PL/pgSQL functions or procedures creates a savepoint internally.

- Drivers, ORMs, and abstraction layers – Some database drivers, object-relational mappers (ORMs), and abstraction layers automatically wrap operations with savepoints, potentially generating numerous MultiXact wait events. The PostgreSQL JDBC driver’s autosave option is one example. Similarly, the PostgreSQL ODBC driver offers protocol options that can cause MultiXact behavior.

MultiXact wraparound and vacuuming thresholds

Like transaction IDs, MultiXact IDs are implemented as a 32-bit counter, which require intentional management to avoid wraparound issues. We will now examine the factors which trigger autovacuum to avoid MultiXact wraparound. We will delve deeper into the impact of a manual VACUUM FREEZE on the size of the pg_multixact directories, particularly the physical storage space occupied by them.

The following is an example of the errors that PostgreSQL emits when MultiXact wraparound occurs:

To prevent MultiXact wraparound, the autovacuum daemon invokes an autovacuum worker based on one of the following conditions (whichever happens first):

- If the MultiXact IDs consumed reach the threshold autovacuum_multixact_freeze_max_age – The autovacuum_multixact_freeze_max_age parameter defaults to 400 million. Changing this static parameter requires an instance restart after modification.

- If the amount of disk storage occupied by the MultiXact directories crosses an internal threshold – In PostgreSQL 14 and later, improvements were made such that aggressive VACUUMs are invoked when the storage occupied by MultiXacts exceeds 2 GB. This allows the directories to stay relatively small and avoid some of the performance issues we describe in this post. In versions prior to 14, it occurs when MultiXact storage exceeds 50% of the addressable space. This allowed the directories to grow quite large, requiring lookups to traverse several files to satisfy the query. You can read more about these processes in the official PostgreSQL documentation for version 14 and above and version 13.

The primary purpose for the above thresholds is to trigger autovacuum workers way before the actual storage or multixact ID limit is hit. Let’s now understand the impact of Vacuum Freeze, to see what actions can be taken manually, along with autovacuum doing its job on busier tables.

VACUUM FREEZE

The VACUUM command is primarily used for garbage collection in PostgreSQL. There are different options which you can use with the standard VACUUM command – which includes FULL (re-writes the table and needs an exclusive lock), FREEZE (freezes tuples to release transaction IDs for re-use – for both XIDs and MXIDs).

For MultiXacts, a standard VACUUM command will only release MultiXact IDs older than vacuum_multixact_freeze_min_age and remove their corresponding files from the pg_multixact directories. However, running the VACUUM FREEZE command releases all MultiXact IDs that are completed and is used to prevent a MultiXact wraparound. Continuing the previous example where we saw the usage of MultiXacts, the XMAX field will be cleared only after a VACUUM FREEZE cleanup is performed. While it will use resources, this is an essential maintenance operation that must be performed when MultiXacts are heavily used in your workload.

Continuing from the previous example, we demonstrate that even though the sessions are complete, the MultiXact information still exists and needs to be cleaned up. A normal VACUUM won’t release anything in this example because these transactions aren’t old enough to breach vacuum_multixact_freeze_min_age. Issuing a VACUUM FREEZE is required to clean up the completed MultiXact, as shown in the example below:

This VACUUM removes the old files from the pg_multixact/members and pg_multixact/offsets subdirectories, which store the MultiXact ID information. It also updates the dataminmxid field in the pg_database_table:

Now that we have discussed the usage on MultiXacts and the impact on vacuum on them, the next two sections focus on how to effectively monitor their usage, and the actions to take if there is a problem observed. The next section describes some of the ways to monitor the usage of Multixacts through some queries you run against the database, and some using Performance Insights. The following one will discuss some of the ways to manage the MultiXact usage.

Key metrics to monitor

In this section, we examine several metrics that PostgreSQL provides so that you can monitor your database health.

MultiXact ID age

The age of the oldest MultiXact ID is a key indicator of when an aggressive autovacuum may be necessary. You can monitor the MultiXact age per database using the following query :

Find the databases that are holding the highest MultiXact age (from the preceding query), then connect to that database to find the tables with the oldest MultiXact age:

If your workload isn’t generating MultiXact IDs, then the mxid_age(relminmxid) value for your tables will be zero.

Storage size

As discussed earlier, the disk space usage for the pg_multixact directories is another threshold that cause aggressive autovacuum operations. For Amazon Aurora PostgreSQL-Compatible Edition, you can use the aurora_stat_utils extension to examine the storage occupied by the pg_multixact/members and pg_multixact/offsets subdirectories:

Simple LRU buffer activity

Monitoring the eviction and read activity of MultiXact buffers from the Simple LRU (SLRU) can help identify performance bottlenecks. Starting with PostgreSQL 13, the monitoring view pg_stat_slru was introduced. This view provides insights into the number of reads, writes, and flushes for the SLRU buffers used by MultiXact. Although it won’t tie to which sessions are using MultiXacts, you can confirm the fact that MultiXacts are being used and monitor the rate of usage.

Wait events

Using pg_stat_activity, if you sort and count by the wait_event column, you can observe if any MultiXact lightweight wait events such as LWLock:MultiXactMemberBuffer, LWLock:MultiXactMemberSLRU, LWLock:MultiXactOffsetBuffer, or LWLock:MultiXactOffsetSLRU are near the top of the list. Similarly, Amazon RDS Performance Insights will display these wait events, as shown in the following screenshot. These wait events (checked through pg_stat_activity or Performance Insights) can indicate if database workload activity is using the MultiXact mechanism heavily. You will sometimes see these waits also when an aggressive vacuum is run to clean up for MultiXacts.

Managing MultiXacts

You can use the monitoring steps we discussed to first confirm that your database is using MultiXacts, and if there are significant wait events, you can identify the queries associated with the wait events. Knowing how MultiXacts are used by PostgreSQL, coupled with understanding your data model, you can determine which tables are involved. In this section, we discuss some steps you can take to reduce the impact of MultiXact on your database.

Perform VACUUM FREEZE on specific tables

If the wait events described earlier spike suddenly and affects your production environment, you can use the following temporary methods to reduce its count:

- Use VACUUM FREEZE on the affected table or table partition to resolve the issue immediately. For more information, see VACUUM.

- Use the VACUUM (FREEZE, INDEX_CLEANUP FALSE) clause to perform a quick vacuum by skipping the indexes. For more information, see Vacuuming a table as quickly as possible.

The above commands can be executed using a scheduler, such as pg_cron, to perform the VACUUM instead of adjusting autovacuum at the instance level as described next.

Adjust table level settings so that autovacuum will clean up MultiXacts more frequently

Another approach you can use is to modify specific table settings so that autovacuum will perform cleanup more often. You can lower the value of autovacuum_multixact_freeze_max_age from the default of 400,000,000 to something like 200,000,000. This will cause autovacuum to run Multixact clean up more frequently against this specific table.

Increase memory parameters

You can use more of the instance RAM to store the MultiXact structure in memory before spilling to disk. In all versions of Aurora PostgreSQL-Compatible and starting in version 17 of RDS for PostgreSQL, you can increase the following parameters in your RDS parameter group :

- multixact_offsets_cache_size to 128

- multixact_members_cache_size to 256

You must reboot the instance for the parameter change to take effects, as these are static parameter. We recommend not setting these values so high that you run out of memory.

Reduce long-running transactions

Long-running transactions affect vacuum operations in two ways: 1. For active transactions: Vacuum must retain its information until the transaction is committed. 2. For read-only transactions: Vacuum must retain its information until the transaction is closed. This retention requirement applies to both standard transaction IDs and MultiXacts. As a result, vacuum may struggle to clean up these IDs effectively. To prevent potential issues, we strongly recommend proactively monitoring and managing long-running transactions in your database.

For more information, see Database has long running idle in transaction connection. Try to modify your application to avoid or minimize your use of long-running transactions. Some approaches to limit this may involve usage of a connection pooler (with certain timeouts for long-running transactions), or using the idle_in_transaction_session_timeout parameter.

Conclusion

In this post, you learned what MultiXacts are and how their usage introduces certain challenges, particularly in terms of performance and maintenance. Managing MultiXacts effectively is crucial, especially in high-concurrency environments, as failing to do so can lead to issues like MultiXact ID wraparound and storage bloat in the `pg_multixact` directories. Regular maintenance, such as running `VACUUM FREEZE`, is necessary to prevent these problems and ensure that the database remains healthy and performant.

By implementing proactive strategies like consistent vacuuming, optimizing memory settings, and minimizing long-running transactions, you can mitigate the potential performance bottlenecks caused by MultiXacts. Monitoring key metrics and using PostgreSQL’s available tools to track MultiXact usage enables early identification of issues, helping maintain optimal database performance. Effectively managing MultiXacts ensures smooth database operations, even under heavy transactional loads, while preventing performance degradation and costly interruptions.

MultiXacts play an essential role in PostgreSQL’s ability to handle concurrent row-level locking efficiently and require specific maintenance considerations. Reach out to share your feedback and questions in the comments section.

About the Authors

Shawn McCoy is a Senior Database Engineer for RDS & Aurora PostgreSQL. After being an Oracle DBA for many years he became one of the founding engineers for the launch of RDS PostgreSQL in 2013. Since then he has been improving the service to help customers succeed and scale their applications.

Shawn McCoy is a Senior Database Engineer for RDS & Aurora PostgreSQL. After being an Oracle DBA for many years he became one of the founding engineers for the launch of RDS PostgreSQL in 2013. Since then he has been improving the service to help customers succeed and scale their applications.

Divya Sharma is a Senior RDS PostgreSQL Specialist Solutions Architect at AWS. She has helped many customers successfully migrate from commercial database engines to AWS RDS PostgreSQL and Aurora PostgreSQL. In her day-to-day role, she also assists customers in understanding the intricacies of the PostgreSQL engine and optimize their database workloads for better performance and scalability.

Divya Sharma is a Senior RDS PostgreSQL Specialist Solutions Architect at AWS. She has helped many customers successfully migrate from commercial database engines to AWS RDS PostgreSQL and Aurora PostgreSQL. In her day-to-day role, she also assists customers in understanding the intricacies of the PostgreSQL engine and optimize their database workloads for better performance and scalability.

Source: Read More