Retrieving information efficiently from documents that are rich in both visuals and text has been a persistent challenge for researchers and developers alike. Think about it: how often do you need to dig through slides, figures, or long PDFs that contain essential images intertwined with detailed textual explanations? Existing approaches often struggle with such documents: they require complex document parsing and rely on multimodal models that fail to truly integrate textual and visual features. The difficulty of accurately searching and understanding these rich data formats has held back the promise of seamless Retrieval-Augmented Generation (RAG) and semantic search.

Voyage AI Introduces voyage-multimodal-3

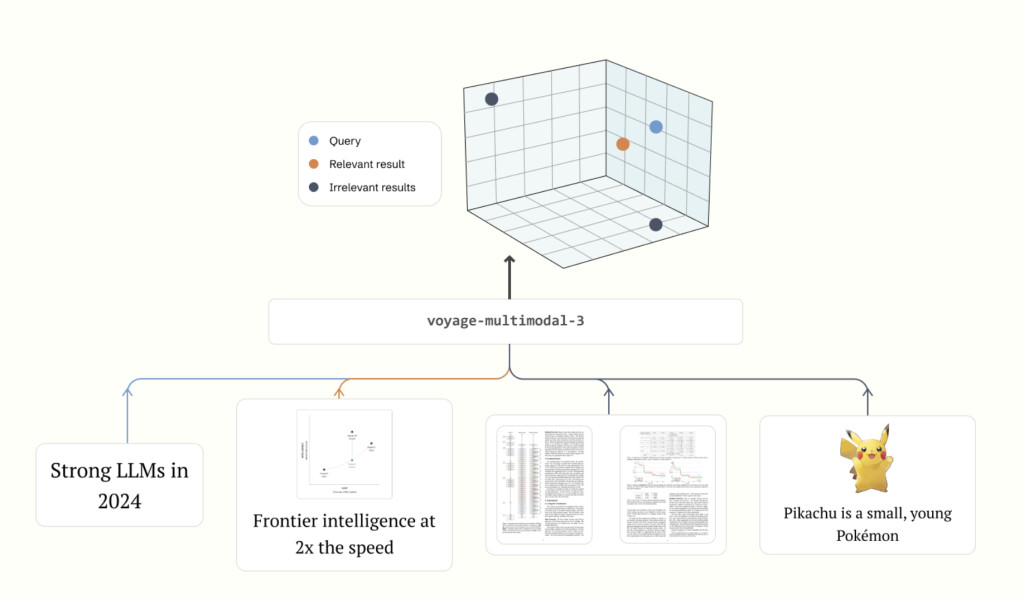

Voyage AI aims to bridge this gap with voyage-multimodal-3, a model that raises the bar for multimodal embeddings. Unlike traditional models that struggle with documents containing both images and text, voyage-multimodal-3 is designed to vectorize interleaved text and images together, capturing the dependencies between them. This removes the need for complex parsing of documents that contain screenshots, tables, figures, and similar visual elements. By focusing on these integrated features, voyage-multimodal-3 offers a more natural representation of the multimodal content found in everyday documents such as PDFs, presentations, or research papers.

Technical Insights and Benefits

What sets voyage-multimodal-3 apart is its ability to capture the nuanced interaction between text and images. The model combines Transformer-based vision encoders with state-of-the-art natural language processing techniques to produce an embedding that represents visual and textual content cohesively. This lets voyage-multimodal-3 provide robust support for tasks like retrieval-augmented generation and semantic search, where understanding the relationship between text and images is crucial.

A core benefit of voyage-multimodal-3 is its efficiency. With the ability to vectorize combined visual and textual data in one go, developers no longer have to spend time and effort parsing documents into separate visual and textual components, analyzing them independently, and then recombining the information. The model can now directly process mixed-media documents, leading to more accurate and efficient retrieval performance. This greatly reduces the latency and complexity of building applications that rely on mixed-media data, which is especially critical in real-world use cases such as legal document analysis, research data retrieval, or enterprise search systems.
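To make the "one vector per mixed document" idea concrete, here is a minimal sketch of the retrieval flow it enables: each document is a single interleaved list of text and image parts, it is embedded once, and candidates are ranked by cosine similarity. Everything named here is an assumption for illustration. In particular, `toy_embed` is a hypothetical stand-in that hashes its input and carries no semantic meaning; it is not Voyage AI's model or API, and in a real application it would be replaced by a call to a multimodal embedding service.

```python
import hashlib
import math
from typing import List, Sequence, Union

# One document part: a text passage (str) or raw image bytes (bytes).
Part = Union[str, bytes]

DIM = 8  # toy dimensionality; real embedding models use far more


def toy_embed(parts: Sequence[Part]) -> List[float]:
    """HYPOTHETICAL placeholder embedder, not a real model.

    Hashes the interleaved parts, in order, into one unit-length vector,
    so the whole mixed document gets a single embedding.
    """
    h = hashlib.sha256()
    for part in parts:
        is_text = isinstance(part, str)
        data = part.encode("utf-8") if is_text else part
        h.update(b"T" if is_text else b"I")     # record the modality
        h.update(len(data).to_bytes(8, "big"))  # record part boundaries
        h.update(data)
    # Map the first DIM digest bytes to non-zero floats, then normalize.
    vec = [b - 127.5 for b in h.digest()[:DIM]]
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; inputs are assumed to be unit vectors."""
    return sum(x * y for x, y in zip(a, b))


def rank(query_vec: Sequence[float], doc_vecs: Sequence[Sequence[float]]) -> List[int]:
    """Return document indices ordered from most to least similar."""
    scores = [cosine(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


# Each document is one interleaved sequence; no separate text/image pipelines.
docs = [
    ["Q3 revenue by region", b"fake-chart-image-bytes", "Revenue grew in all regions."],
    ["System architecture overview", b"fake-diagram-image-bytes"],
    ["Plain-text memo about the travel policy."],
]
doc_vecs = [toy_embed(d) for d in docs]

# With a hash-based stand-in, only an identical query matches meaningfully.
query_vec = toy_embed(docs[1])
print(rank(query_vec, doc_vecs))
```

The point of the sketch is the shape of the pipeline rather than the embedder: there is no step that splits a document into text and image tracks and merges the results afterwards. With a real multimodal model in place of the stand-in, semantically related queries, not just identical ones, would score highly.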

Why voyage-multimodal-3 Is a Game Changer

The significance of voyage-multimodal-3 lies in its performance and practicality. Across three major multimodal retrieval tasks spanning 20 datasets, voyage-multimodal-3 achieved an average accuracy improvement of 19.63% over the next best-performing multimodal embedding model. The datasets cover PDFs, figures, tables, and mixed content, the kinds of documents that typically pose substantial retrieval challenges for current embedding models. An increase of this size suggests that the model effectively understands and integrates visual and textual content, a crucial capability for seamless retrieval and search experiences.

These results are a significant step forward for retrieval-based AI tasks such as retrieval-augmented generation (RAG), where presenting the right information in context can drastically improve the quality of generated output. By improving the embedded representation of text and image content, voyage-multimodal-3 helps lay the groundwork for more accurate and contextually enriched answers, which benefits use cases like customer support systems, documentation assistance, and educational AI tools.

Conclusion

Voyage AI’s latest model, voyage-multimodal-3, sets a new benchmark for multimodal embeddings. By vectorizing interleaved text and image content without the need for complex document parsing, it offers an elegant solution to longstanding problems in semantic search and retrieval-augmented generation. With an average accuracy improvement of 19.63% over the next best-performing multimodal embedding model, voyage-multimodal-3 advances the capabilities of multimodal embeddings and paves the way for more integrated, efficient, and powerful AI applications. As multimodal documents continue to dominate various domains, voyage-multimodal-3 is poised to be a key enabler in making these rich sources of information more accessible and useful.


The post Voyage AI Introduces voyage-multimodal-3: A New State-of-the-Art for Multimodal Embedding Model that Improves Retrieval Accuracy by an Average of 19.63% appeared first on MarkTechPost.
