The field of AI-driven image generation and understanding has seen rapid progress, but significant challenges hinder the development of a seamless, unified approach. Currently, models that excel in image understanding often struggle to generate high-quality images and vice versa. The need to maintain separate architectures for each task not only increases complexity but also limits efficiency, making it cumbersome to handle tasks requiring both understanding and generation. Moreover, many existing models rely heavily on architectural modifications or pre-trained components to perform either function effectively, which results in performance trade-offs and integration challenges.

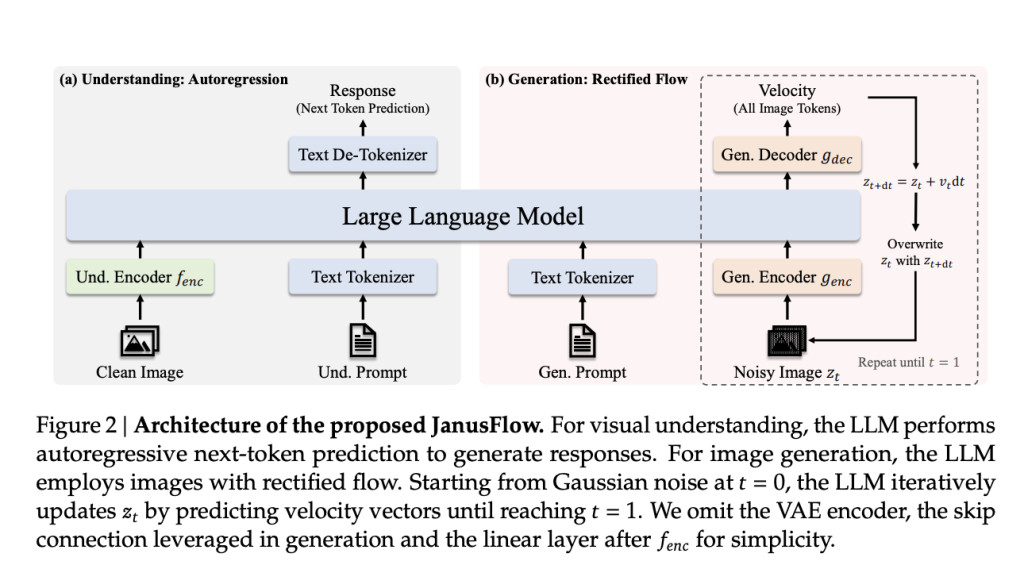

DeepSeek AI has released JanusFlow, a framework that unifies image understanding and generation in a single model. It addresses the inefficiencies described above with a minimalist design that combines an autoregressive language model with rectified flow, a state-of-the-art generative modeling method. By removing the need for a separate generative component alongside the LLM, JanusFlow achieves more cohesive functionality while reducing architectural complexity. It introduces a dual encoder-decoder structure that decouples the understanding and generation tasks and aligns their representations, so the two stay coherent under a unified training scheme.

Technical Details

JanusFlow integrates rectified flow with a large language model (LLM) in a lightweight and efficient manner. The architecture consists of separate vision encoders for both understanding and generation tasks. During training, these encoders are aligned to improve semantic coherence, allowing the system to excel in both image generation and visual comprehension tasks. This decoupling of encoders prevents task interference, thereby enhancing each module’s capabilities. The model also employs classifier-free guidance (CFG) to control the alignment of generated images with text conditions, resulting in improved image quality. Compared to traditional unified systems that utilize diffusion models as external tools or use vector quantization techniques, JanusFlow provides a simpler and more direct generative process with fewer limitations. The architecture’s effectiveness is evident in its ability to match or even exceed the performance of many task-specific models across multiple benchmarks.
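To make the generation path more concrete, here is a minimal sketch of rectified-flow sampling with classifier-free guidance. It is not JanusFlow's implementation: `toy_velocity` is a hypothetical stand-in for the velocity the LLM would predict, the "images" are small vectors, and the guidance rule shown (a weighted mix of conditional and unconditional velocities) is one common formulation that is assumed here rather than taken from the article.

```python
import numpy as np


def toy_velocity(z, t, target):
    """Hypothetical stand-in for the model's velocity prediction.

    For a straight-line path that reaches `target` at t = 1, the velocity
    at position z and time t < 1 is (target - z) / (1 - t).
    """
    return (target - z) / (1.0 - t)


def guided_velocity(z, t, cond_target, uncond_target, guidance_scale):
    """Classifier-free guidance: mix conditional and unconditional velocities.

    guidance_scale = 1 uses only the conditional prediction; larger values
    push the sample further away from the unconditional prediction.
    """
    v_cond = toy_velocity(z, t, cond_target)
    v_uncond = toy_velocity(z, t, uncond_target)
    return guidance_scale * v_cond + (1.0 - guidance_scale) * v_uncond


def sample(z0, cond_target, uncond_target, guidance_scale=2.0, num_steps=30):
    """Integrate dz/dt = v(z, t) from t = 0 (noise) to t = 1 with Euler steps."""
    z = np.asarray(z0, dtype=np.float64).copy()
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # the last step uses t = 1 - dt, so 1 - t is never zero
        z = z + dt * guided_velocity(z, t, cond_target, uncond_target, guidance_scale)
    return z


rng = np.random.default_rng(0)
noise = rng.standard_normal(4)            # starting point: Gaussian noise
x_cond = np.array([1.0, 2.0, 3.0, 4.0])   # toy "text-conditioned" target
x_uncond = np.zeros(4)                    # toy "unconditional" target

plain = sample(noise, x_cond, x_uncond, guidance_scale=1.0)
guided = sample(noise, x_cond, x_uncond, guidance_scale=2.0)
```

In this toy setup, a guidance scale of 1 carries the noise to the conditional target, while a scale of 2 extrapolates past it, away from the unconditional target. That is the mechanism by which guidance strengthens the influence of the text condition. In a real system the velocity would come from a trained network and the result would be decoded into an image.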

Why JanusFlow Matters

The importance of JanusFlow lies in its efficiency and versatility, addressing a critical gap in the development of multimodal models. By eliminating the need for separate generative and understanding modules, JanusFlow allows researchers and developers to use a single framework for multiple tasks, significantly reducing complexity and resource usage. Benchmark results indicate that JanusFlow outperforms many existing unified models, achieving scores of 74.9, 70.5, and 60.3 on MMBench, SeedBench, and GQA, respectively. In image generation, JanusFlow surpasses models like SDv1.5 and SDXL, with an FID of 9.51 on MJHQ FID-30k (lower is better) and a score of 0.63 on GenEval (higher is better). These metrics indicate strong capability in generating high-quality images and handling complex multimodal tasks with only 1.3B parameters. Notably, JanusFlow achieves these results without relying on extensive modifications or overly complex architectures, providing a more accessible solution for general AI applications.

Conclusion

JanusFlow is a significant step forward in the development of unified AI models capable of both image understanding and generation. Its minimalist approach—focusing on integrating autoregressive capabilities with rectified flow—not only enhances performance but also simplifies the model architecture, making it more efficient and accessible. By decoupling vision encoders and aligning representations during training, JanusFlow successfully bridges the gap between image comprehension and generation. As AI research continues to push the boundaries of what models can achieve, JanusFlow represents an important milestone toward creating more generalizable and versatile multimodal AI systems.

Check out the Paper and Model on Hugging Face. All credit for this research goes to the researchers of this project.

The post DeepSeek AI Releases JanusFlow: A Unified Framework for Image Understanding and Generation appeared first on MarkTechPost.
