Top News

Google DeepMind open-sources AlphaFold 3, ushering in a new era for drug discovery and molecular biology

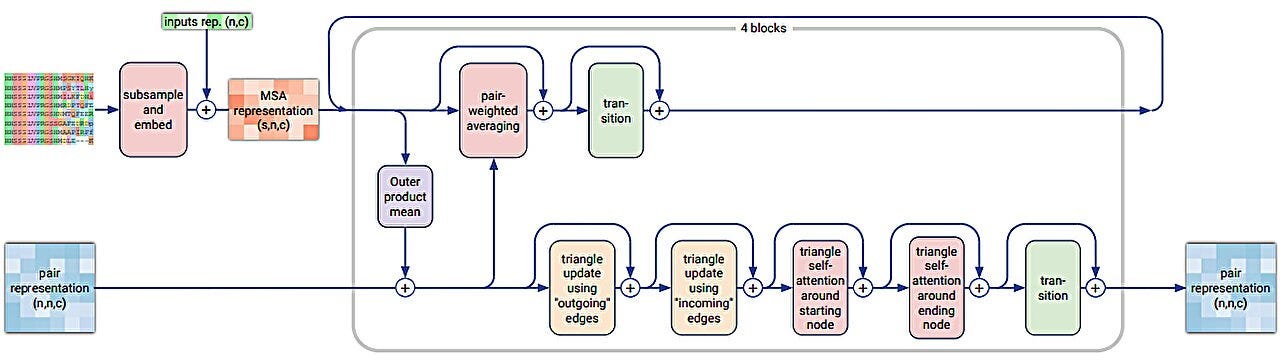

Google DeepMind has released the source code and model weights of AlphaFold 3 for academic use, a move that could significantly speed up scientific discovery and drug development. AlphaFold 3 is a major upgrade from its predecessor. It can model complex interactions between proteins, DNA, RNA, and small molecules, which are crucial to drug discovery and to understanding disease. The system's diffusion-based approach aligns with the basic physics of molecular interactions, making it more efficient and reliable. The model still has limitations: it can produce incorrect structures in disordered regions, and it predicts only static structures rather than molecular motion. Even so, the release is seen as a significant step forward for AI-powered science, with potential impacts extending beyond drug discovery and molecular biology.

Sponsored Message

Uncontrollable: The Threat of Artificial Superintelligence and the Race to Save the World

We recommend the AI safety book “Uncontrollable”! This is not a doomer book, but instead lays out the reasonable case for AI safety and what we can do about it.

Max Tegmark said that “‘Uncontrollable’ is a captivating, balanced, and remarkably up-to-date book on the most important issue of our time.” Find it on Amazon today!

OpenAI reportedly developing new strategies to deal with AI improvement slowdown

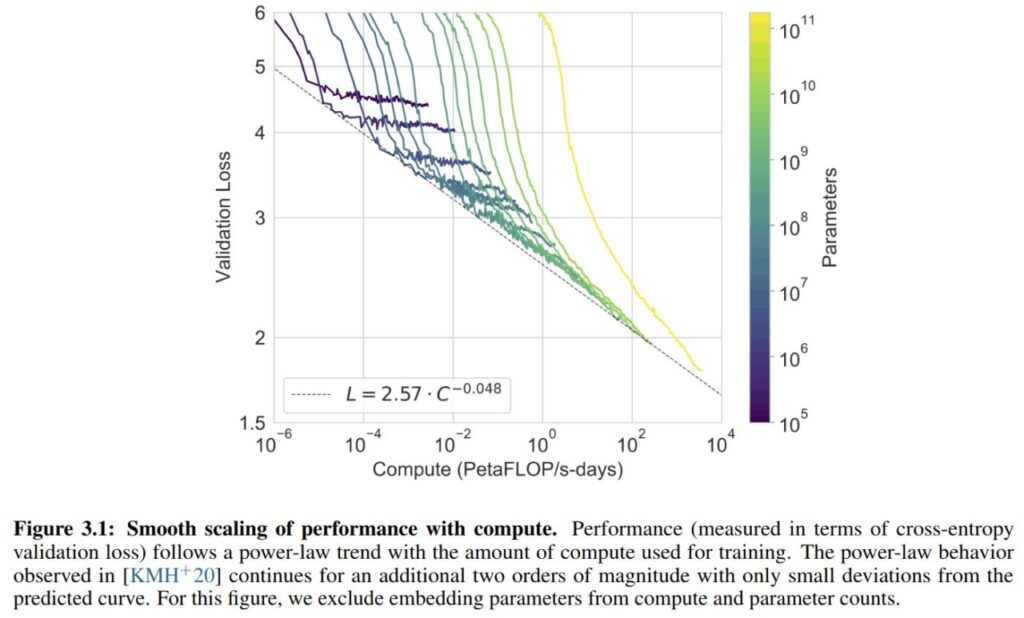

OpenAI's upcoming model, code-named Orion, may not represent a significant advance over its predecessors, according to a report in The Information. Orion reportedly outperforms existing OpenAI models, but the rate of improvement appears to be slowing, and Orion may not outperform previous models in certain areas such as coding. In response, OpenAI has formed a foundations team to explore ways to keep improving its models as the supply of new training data dwindles. Strategies under consideration include training Orion on synthetic data generated by AI models and doing more refinement during the post-training process. OpenAI has not confirmed plans to release Orion this year.

What Donald Trump’s Win Means For AI

Donald Trump's victory in the 2024 election has significant implications for the future of artificial intelligence (AI) in the United States. Trump's stance on AI has varied, but he has consistently viewed the technology as a tool for competing with China. His administration is expected to repeal President Biden's Executive Order on AI, which aimed to address potential threats to civil rights, privacy, and national security. Trump's AI policy may also involve rolling back regulations to speed up infrastructure development and keeping chip export restrictions in place to curb China's access to advanced semiconductors. However, Trump's camp is divided on AI: some allies advocate looser regulation, while others emphasize the technology's potential risks. The direction of his AI policy therefore remains uncertain, with potentially major consequences for national security, the economy, and the global balance of power.

Other News

Tools

Introducing FLUX1.1 [pro] Ultra and Raw Modes – FLUX1.1 [pro] adds two new modes. Ultra mode generates high-resolution images at competitive speeds and prices, and Raw mode produces more natural, candid-looking photographs with greater realism and diversity.

Qwen Open Sources the Powerful, Diverse, and Practical Qwen2.5-Coder Series – The Qwen2.5-Coder series offers open-source, state-of-the-art code models in a range of sizes, with strong results across many programming languages and benchmarks.

Mistral launches a moderation API – Mistral's new moderation API, powered by a fine-tuned version of its Ministral 8B model, offers customizable content moderation across multiple languages and categories. Like other AI-based moderation systems, however, it may be prone to biases and technical flaws.

Business

Waymo opens robotaxi service to anyone in Los Angeles, marking its largest expansion yet – Waymo's robotaxi service, now open to anyone in Los Angeles, has expanded rapidly thanks to significant funding and partnerships, and now provides more than 150,000 rides per week across multiple cities.

DeepRoute raises $100M in push to beat Tesla’s FSD in China – DeepRoute.ai is leveraging a $100 million investment from Great Wall Motor to rapidly expand its autonomous driving technology in China, aiming to outpace Tesla’s Full Self-Driving system by deploying its ADAS in 200,000 vehicles by the end of 2025.

Anthropic teams up with Palantir and AWS to sell its AI to defense customers – Anthropic is collaborating with Palantir and AWS to integrate its Claude AI models into U.S. defense and intelligence operations, enhancing data analysis and decision-making capabilities while maintaining a focus on responsible AI use.

Nvidia Rides AI Wave to Pass Apple as World's Largest Company – Nvidia has overtaken Apple as the world's most valuable company by market capitalization, a sign of how strongly the AI boom is shaping financial markets.

OpenAI loses another lead safety researcher, Lilian Weng – Lilian Weng’s departure from OpenAI highlights ongoing concerns about the company’s commitment to AI safety amid a wave of exits by key researchers and executives.

Meta's former hardware lead for Orion is joining OpenAI – Caitlin Kalinowski, who led hardware for Meta's Orion augmented reality glasses, is joining OpenAI to lead its robotics and consumer hardware initiatives and may work with Jony Ive on a new AI hardware device.

Did OpenAI just spend more than $10 million on a URL? – OpenAI acquired the chat.com domain, which now redirects to ChatGPT, from Dharmesh Shah, who had previously bought it for $15.5 million. OpenAI likely paid in shares rather than cash.

‘Unrestricted’ AI group Nous Research launches first chatbot — with guardrails – Nous Research has launched Nous Chat, a user-friendly chatbot interface for their Hermes 3-70B model, offering a personalized AI experience with some content guardrails, and plans for future enhancements.

Research

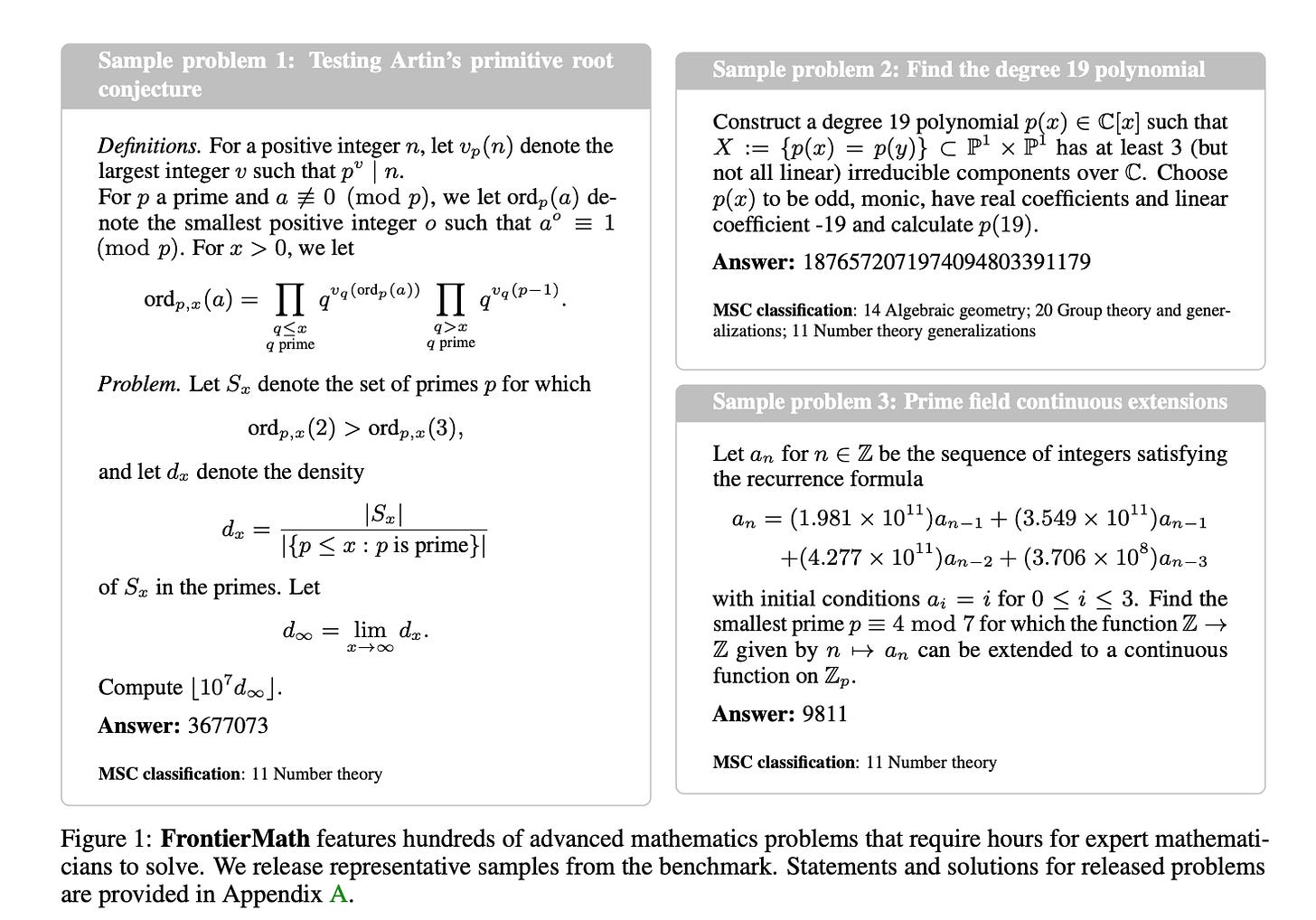

FrontierMath: The Benchmark that Highlights AI’s Limits in Mathematics – FrontierMath is a new benchmark designed to evaluate AI’s mathematical reasoning by presenting research-level problems that current models struggle to solve, highlighting the gap between AI and human mathematicians.

Relaxed Recursive Transformers: Effective Parameter Sharing with Layer-wise LoRA – Relaxed Recursive Transformers convert pretrained language models into smaller models that repeatedly reuse a single block of shared layers, then add layer-wise low-rank adaptation (LoRA) modules to relax this sharing constraint. The resulting models lose little performance, outperform similar-sized models, and could offer gains in inference throughput.

TableGPT2: A Large Multimodal Model with Tabular Data Integration – TableGPT2 is a large multimodal model that integrates tabular data using a novel table encoder, achieving significant performance improvements over existing models in table-centric tasks while maintaining strong general language and coding abilities.

Hunyuan-Large: An Open-Source MoE Model with 52 Billion Activated Parameters by Tencent – Hunyuan-Large, developed by Tencent, is an open-source Transformer-based mixture-of-experts model with 389 billion total parameters, of which 52 billion are activated per token. It performs strongly across a range of benchmarks, and Tencent has released the model along with details of the optimization techniques used to build it.

Mixture-of-Transformers: A Sparse and Scalable Architecture for Multi-Modal Foundation Models – Mixture-of-Transformers (MoT) is a sparse multi-modal transformer architecture that lowers computational cost while maintaining performance across text, image, and speech. It decouples the non-embedding parameters (feed-forward networks, attention matrices, and layer normalization) by modality, enabling modality-specific processing while keeping global self-attention over the full input sequence.

Add-it: Training-Free Object Insertion in Images With Pretrained Diffusion Models – Add-it leverages a training-free approach using diffusion models’ attention mechanisms to seamlessly integrate objects into images based on text instructions, achieving state-of-the-art results without task-specific fine-tuning.

Edify Image: High-Quality Image Generation with Pixel Space Laplacian Diffusion Models – Edify Image employs a novel Laplacian diffusion process to generate photorealistic images with pixel-perfect accuracy across various applications such as text-to-image synthesis and 4K upsampling.

OmniEdit: Building Image Editing Generalist Models Through Specialist Supervision – OmniEdit addresses the limitations of current image editing models by using specialist supervision, improved data quality, a new architecture called EditNet, and support for various aspect ratios to enhance editing capabilities across multiple tasks.

Watermark Anything with Localized Messages – The Watermark Anything Model (WAM) uses deep learning to imperceptibly embed and extract localized watermarks in images, offering robustness and the ability to handle multiple small regions with distinct messages.

How a stubborn computer scientist accidentally launched the deep learning boom – Prof. Fei-Fei Li’s creation of the massive ImageNet dataset challenged prevailing AI paradigms and played a pivotal role in revitalizing interest in neural networks and deep learning.

Policy

Judge tosses publishers' copyright suit against OpenAI – A US judge dismissed a lawsuit in which publishers accused OpenAI of stripping copyright management information from articles used to train ChatGPT, ruling that the plaintiffs had not shown concrete harm or that ChatGPT was likely to reproduce their content.

Mark Zuckerberg's Nuclear-Powered Data Center for AI Derailed by Bees – Meta's plan to build an AI data center powered by nuclear energy was reportedly derailed, in part, by the discovery of a rare bee species on the proposed site, highlighting the challenges tech companies face in balancing AI energy demands with environmental concerns.
