Amazon DynamoDB is a serverless, fully managed NoSQL database with single-digit millisecond performance at any scale. It excels in scenarios where traditional relational databases struggle to keep up.

Event-driven architectures, common in microservices-based applications, use events to invoke actions across decoupled services. In this context, Amazon DynamoDB streams captures changes to items in a table, creating a flow of event data. AWS Lambda, a serverless compute service, can then consume these events to run custom logic without the need for server management.

By combining DynamoDB streams with Lambda, you can build responsive, scalable, and cost-effective systems that automatically react to data changes in real time. In this post, we explore best practices for architecting event-driven systems using DynamoDB and Lambda.

DynamoDB provides two options for change data capture (CDC): DynamoDB streams and Amazon Kinesis Data Streams (KDS). In this post, we focus exclusively on DynamoDB streams.

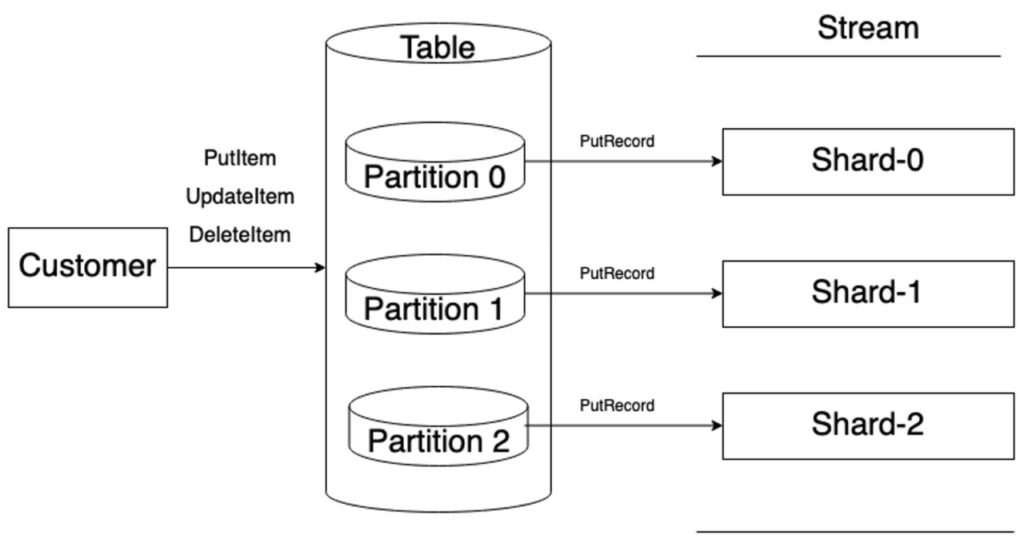

Overview of the relationship between DynamoDB partitions, stream shards, and Lambda instances

DynamoDB stores data in partitions. A partition is an allocation of storage for a table and is automatically replicated across multiple Availability Zones within an AWS Region. Partition management is handled entirely by DynamoDB, so you never have to manage partitions yourself. To write an item to the table, DynamoDB uses the value of the partition key as input to an internal hash function. The output value from the hash function determines the partition in which the item will be stored.
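The key-to-partition step can be pictured with a toy model. This is only a sketch: DynamoDB's internal hash function and partition layout are not public, so the MD5 hash, the modulo step, and the name `pick_partition` are all assumptions made for illustration.

```python
import hashlib


def pick_partition(partition_key: str, partition_count: int) -> int:
    """Map a partition key to a partition index (toy model, not DynamoDB's algorithm)."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer and fold it onto the partitions.
    return int.from_bytes(digest[:8], "big") % partition_count


# Hypothetical partition key values; the same key always lands on the same partition.
keys = ["user#1", "user#2", "user#1"]
placements = [pick_partition(key, 4) for key in keys]
```

The property that matters for the rest of this post is determinism: every write for a given partition key value is routed the same way, which is what ties an item's changes to one partition and therefore to one open shard.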

In DynamoDB, a stream is composed of stream records that capture individual data modifications made to the associated DynamoDB table. Each stream record is assigned a unique sequence number, which represents the order in which it was published to the stream shard.

To organize the stream records, they are grouped into shards. A shard serves as a container for multiple stream records and includes essential information for accessing and iterating through these records. The stream records within a shard are automatically removed after 24 hours.

There is a 1:1 mapping between a partition and an open shard in its corresponding stream. This means that each partition will write its stream records to a dedicated shard, so no other partition writes to the same shard. This mapping provides data isolation and prevents conflicts between partitions. The following figure shows the 1:1 mapping of partitions to open shards.

Each shard is characterized by its metadata, which includes a shard ID, a sequence number range, and, for child shards, a parent shard ID.

When a new stream is created, a corresponding shard is generated for each partition in the DynamoDB table. These initial shards will not have a ParentShardId or an EndingSequenceNumber. The ParentShardId is assigned to shards that have been rolled over or split, while the EndingSequenceNumber is associated with shards that are closed for writes, indicating they are now read-only.
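As an illustration, the following sketch models two shard descriptions shaped like the entries in the Shards list of a DescribeStream response. The shard IDs and sequence numbers are made up, and the helper names `is_open` and `parent_of` are hypothetical.

```python
from typing import Optional

# Hypothetical shard descriptions; real IDs and sequence numbers are much longer.
shards = [
    {
        "ShardId": "shardId-parent-example",
        "SequenceNumberRange": {
            "StartingSequenceNumber": "100",
            "EndingSequenceNumber": "199",  # present, so the shard is closed for writes
        },
    },
    {
        "ShardId": "shardId-child-example",
        "ParentShardId": "shardId-parent-example",
        "SequenceNumberRange": {"StartingSequenceNumber": "200"},
    },
]


def is_open(shard: dict) -> bool:
    """A shard without an EndingSequenceNumber is still accepting writes."""
    return "EndingSequenceNumber" not in shard["SequenceNumberRange"]


def parent_of(shard: dict) -> Optional[str]:
    """Initial shards have no ParentShardId."""
    return shard.get("ParentShardId")


open_shard_ids = [s["ShardId"] for s in shards if is_open(s)]
```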

Stream shard lifecycle

A stream shard in DynamoDB has a lifespan of up to 4 hours. After this period, a new shard is automatically created, and the partition that generates the stream updates begins publishing to this new shard. The old shard is then marked as read-only and you will see an EndingSequenceNumber applied to its metadata. This process, known as shard rollover, is essential for preventing individual shards from becoming overloaded, providing a balanced distribution of records across shards, which enhances system performance and scalability.

When a new shard is created, DynamoDB tracks its lineage by assigning a ParentShardId value to the metadata of the child shard, establishing a link between the parent shard and the new child shard. This lineage makes sure stream records are processed in the correct sequence. To maintain this order, it’s crucial that applications process the parent shard before its child shard. If Lambda is used as the stream consumer, it automatically handles this ordering, making sure shards and stream records are processed sequentially, even as shards are rolled over or split during application runtime. The following figure illustrates the shard rollover process.
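If you write your own consumer instead of using Lambda, the parent-before-child rule amounts to walking the shard lineage from the oldest shards down. The following is a minimal sketch under the assumption that shards are dictionaries with ShardId and an optional ParentShardId; the function name `order_by_lineage` and the short shard IDs are hypothetical.

```python
def order_by_lineage(shards):
    """Return shard IDs so that every parent precedes its children."""
    known = {s["ShardId"] for s in shards}
    children = {}
    roots = []
    for s in shards:
        parent = s.get("ParentShardId")
        if parent in known:
            children.setdefault(parent, []).append(s["ShardId"])
        else:
            # No parent, or the parent has already aged out of the stream.
            roots.append(s["ShardId"])
    ordered = []
    queue = list(roots)
    while queue:
        shard_id = queue.pop(0)
        ordered.append(shard_id)
        queue.extend(children.get(shard_id, []))
    return ordered


# "p" split into "c1" and "c2"; "c1" later rolled over into "g".
shards = [
    {"ShardId": "c1", "ParentShardId": "p"},
    {"ShardId": "p"},
    {"ShardId": "c2", "ParentShardId": "p"},
    {"ShardId": "g", "ParentShardId": "c1"},
]
order = order_by_lineage(shards)
```

A real consumer would also finish reading each parent shard completely before starting on its children; this sketch only computes a safe processing order.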

Shard splits

Shard splits in DynamoDB streams occur when the underlying table partitions increase due to growing data volume or higher throughput demands. As data scales, DynamoDB automatically adds new partitions to manage the load, maintaining consistent performance. When a partition splits, the corresponding stream shard also splits, creating new child shards while marking the parent shard as read-only. This process keeps the 1:1 mapping of partitions to open shards intact, enhancing overall throughput and scalability. Lambda, when used as the stream consumer, automatically manages the processing of these split shards, making sure all records are handled in the correct sequence, even as the shard structure changes over time. It’s important for your application to understand how items maintain their order during the shard splitting process. For more details, see the item-level ordering section below.

Impact of shard rollover and split latency

Shard rollovers and splits in DynamoDB streams can increase iterator age because Lambda needs time to perform shard discovery. When a shard rolls over or splits, Lambda needs to detect the new shard structure and adjust its processing, which can take several seconds depending on the number of shards in the stream. Although each delay is usually brief, it can accumulate in systems with frequent shard rollovers or splits. The effect shows up mainly in tail latency (P99 and above) between DynamoDB and Lambda, particularly in high-throughput scenarios or for tables that have a large number of partitions.

Mapping of shards to a Lambda instance

In DynamoDB streams, there is a direct 1:1:1 relationship between a DynamoDB partition, its corresponding open stream shard, and the Lambda instance that processes records from that shard. Each partition in DynamoDB is responsible for storing a subset of the table’s data, and for every partition, there is a dedicated stream shard that captures all the changes (inserts, updates, deletes) made to the items within that partition. This shard acts as a container for these change records, making sure they are stored in the correct order. It’s important to note that write operations that do not result in any data changes will not generate a record in the stream.

When Lambda processes a shard, a single Lambda function instance is responsible for handling the records within that shard. This tight mapping makes sure the processing is isolated and ordered on a per-partition basis. The 1:1:1 relationship between partitions, shards, and Lambda instances helps maintain data consistency and makes sure changes are processed in sequence, thereby supporting the scalability and reliability of event-driven applications built on DynamoDB streams. The following figure shows the 1:1:1 mapping of partitions to shards to Lambda instances.

Ordering and deduplication

DynamoDB streams provides key features to maintain data consistency and integrity:

- Deduplication – Each stream record appears exactly one time in the stream, preventing any duplicates. This is achieved through a monotonically increasing sequence number assigned to each record. DynamoDB uses this sequence number to detect and eliminate duplicates, making sure only unique records are processed.

- Ordering – DynamoDB makes sure stream records for modifications to a specific item are presented in the exact order in which the modifications occurred, preserving the sequence of changes.

DynamoDB enforces these features using the monotonically increasing sequence number, which consists of multiple fields that help validate item modifications as they enter the stream. Here’s how this works:

- Deduplication – DynamoDB makes sure that each update to an item appears in the stream only once. The sequence number assigned to each modification allows DynamoDB to conditionally write the record to the shard:

ACCEPT "1" ONLY IF "1" NOT PREVIOUSLY ACCEPTED

- Ordering – The sequence number makes sure modifications to an item are emitted to the shard in the correct order. For ordering, the system verifies that the previous sequence number was accepted before processing the current one:

ACCEPT "2" ONLY IF "1" WAS ALREADY ACCEPTED
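The two conditions can be mimicked with a toy model. This sketch is not DynamoDB's implementation: it replaces the multi-field sequence number with a simple per-item version counter, and the class name `ShardAcceptor` is hypothetical.

```python
class ShardAcceptor:
    """Toy model of the two conditional checks applied before a record enters a shard."""

    def __init__(self):
        self.accepted = {}  # item key -> last accepted version number

    def offer(self, item_key: str, version: int) -> bool:
        last = self.accepted.get(item_key, 0)
        if version <= last:
            return False  # deduplication: this version was already accepted
        if version != last + 1:
            return False  # ordering: the previous version has not been accepted yet
        self.accepted[item_key] = version
        return True


acceptor = ShardAcceptor()
results = [
    acceptor.offer("item-A", 1),  # first write is accepted
    acceptor.offer("item-A", 1),  # duplicate of version 1 is rejected
    acceptor.offer("item-A", 3),  # version 2 is missing, so 3 is rejected for now
    acceptor.offer("item-A", 2),  # accepted because version 1 is in place
    acceptor.offer("item-A", 3),  # now accepted
]
```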

Item-level ordering

Although DynamoDB streams maintains a 1:1 mapping between partitions and open stream shards, this doesn’t imply guaranteed partition-level ordering. Instead, DynamoDB streams provides item-level ordering, which is important to understand.

An item collection in DynamoDB, which consists of items sharing the same partition key, can span multiple partitions through adaptive capacity. This can occur when an item collection experiences high read/write throughput or exceeds the per partition storage quota.

When an item collection spans multiple partitions, enforcing strict partition-level ordering across the entire collection becomes challenging. Each partition operates independently, and coordinating partition-level ordering across multiple partitions would introduce significant complexity and performance constraints.

To optimize performance and scalability, DynamoDB streams focuses on item-level ordering, making sure all modifications for an individual item are presented in the correct sequence, regardless of how the item collection is distributed across partitions.

Lambda as a DynamoDB stream consumer

Lambda allows you to effortlessly process and respond to real-time data modifications in your DynamoDB tables. When you configure Lambda to consume DynamoDB streams, it automatically manages the complexities of stream record processing. This includes making sure records are processed in the correct order and efficiently handling events like item expirations, shard rollovers and shard splits.

By writing a Lambda function, you can define custom logic to process these stream records, enabling a wide range of operations such as data transformation, aggregation, validation, notifications, and invoking downstream actions. Lambda’s seamless integration with other AWS services, databases, APIs, and external systems makes it a powerful tool for building event-driven workflows and microservices.
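As a rough illustration of such a function, the following handler walks the Records list of a stream event, counts the change types, and collects the keys that were touched. The partition key attribute name `pk` and the sample event contents are assumptions; your table's key schema and stream view type determine what each record actually contains.

```python
def handler(event, context):
    """Summarise the change records delivered in one invocation."""
    counts = {"INSERT": 0, "MODIFY": 0, "REMOVE": 0}
    touched = []
    with_new_image = 0
    for record in event.get("Records", []):
        change = record["dynamodb"]
        counts[record["eventName"]] += 1
        # Keys arrive in DynamoDB JSON, for example {"pk": {"S": "user#1"}}.
        touched.append(change["Keys"]["pk"]["S"])
        # NewImage is absent for REMOVE events and for view types that omit it.
        if change.get("NewImage") is not None:
            with_new_image += 1
    return {"counts": counts, "touched": touched, "with_new_image": with_new_image}


# Hypothetical two-record batch used to exercise the handler locally.
sample_event = {
    "Records": [
        {
            "eventName": "INSERT",
            "dynamodb": {
                "Keys": {"pk": {"S": "user#1"}},
                "NewImage": {"pk": {"S": "user#1"}, "plan": {"S": "free"}},
                "SequenceNumber": "111",
            },
        },
        {
            "eventName": "REMOVE",
            "dynamodb": {"Keys": {"pk": {"S": "user#2"}}, "SequenceNumber": "222"},
        },
    ]
}
summary = handler(sample_event, None)
```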

One of the key advantages of using Lambda as a DynamoDB stream consumer is its serverless architecture. There’s no need to provision or manage infrastructure—Lambda automatically scales to accommodate incoming stream records, providing high availability, reliability, and fault tolerance. Additionally, you only pay for the actual runtime of your Lambda functions, not for the DynamoDB stream itself, making it a cost-effective solution for event-driven processing.

An important component in this setup is EventSourceMapping, which connects Lambda to DynamoDB streams. This configuration acts as a bridge between the two services, enabling Lambda functions to consume and process stream records seamlessly.

EventSourceMapping defines the behavior and characteristics of the event processing pipeline, making sure stream records are efficiently consumed and processed by the associated Lambda function. Key aspects of EventSourceMapping configuration include specifying the batch size, defining error handling strategies, and managing parallel processing, all of which are essential for optimizing the performance and reliability of your Lambda functions. The following table summarizes some key aspects of the EventSourceMapping configuration and their importance.

| Parameter | Information | Required | Default | Notes |
| --- | --- | --- | --- | --- |
| BatchSize | The maximum number of stream records that are fetched and passed to the Lambda function for processing in each invocation. Choosing an appropriate batch size depends on factors such as the processing capacity of the Lambda function, the expected volume of stream records, and the desired balance between throughput and latency. | No | 100 | Maximum: 10,000 |
| BisectBatchOnFunctionError | When the function returns an error, split the batch into two before retrying. Your original batch size setting remains unchanged. | No | false | This configuration provides the ability to recursively split a failed batch and retry on a smaller subset of records, eventually isolating the record causing the error. |
| DestinationConfig | Specifies a destination for records that can’t be processed. When Lambda discards a batch of records that is too old or has exhausted all retries, it sends details about the batch to the designated queue or topic. The message sent to the destination doesn’t contain the actual item but instead includes metadata such as the shard ID and sequence number, enabling you to retrieve the item from the stream if needed. | No | | Standard SQS queue or standard SNS topic destination for discarded records. |
| Enabled | Set to true to enable the event source mapping. Set to false to stop processing records. Lambda keeps track of the last record processed and resumes processing from that point when the mapping is reenabled. | No | true | |
| EventSourceArn | Specifies the source of events, which in this case is a DynamoDB stream. It establishes the connection between the DynamoDB table’s stream and the Lambda function. | Yes | | The ARN of the stream. |
| FilterCriteria | Specifies the object that defines the filter criteria that determine whether Lambda should process an event. | No | | |
| FunctionName | Specifies the Lambda function to serve as a consumer for the stream, processing the stream records. | Yes | | |
| MaximumBatchingWindowInSeconds | The maximum amount of time, in seconds, that Lambda spends gathering records before invoking the function. | No | 0 | |
| MaximumRecordAgeInSeconds | The maximum age of a stream record that Lambda sends to the function. This helps make sure stale or outdated records aren’t processed, and it provides a way to discard records that are no longer relevant to the application’s logic. | No | -1 | -1 means infinite: Lambda doesn’t discard records based on their age. Minimum: -1. Maximum: 604,800. |
| MaximumRetryAttempts | When a batch of stream records fails to be processed by the associated Lambda function, due to factors such as errors in the function code, throttling, or resource limitations, Lambda can automatically retry the failed batch. This setting determines how many retry attempts will be made. | No | -1 | -1 means infinite: failed records are retried until the record expires. Minimum: -1. Maximum: 10,000. |
| ParallelizationFactor | Allows multiple Lambda instances to process a single shard of a DynamoDB stream concurrently. You can control the number of concurrent batches Lambda polls from a shard by adjusting the parallelization factor, which ranges from 1 (default) to 10. When you increase the number of concurrent batches per shard, Lambda still ensures in-order processing at the item (partition and sort key) level. | No | 1 | Maximum: 10 |
| StartingPosition | The position in the stream from which the Lambda function begins processing records. Options are LATEST (starting from the most recent records) and TRIM_HORIZON (starting from the oldest available records, which are up to 24 hours old because of the stream’s maximum retention period). Selecting the appropriate starting position makes sure the desired portion of the stream is consumed, taking into account any potential replay scenarios or resuming from a specific point. | Yes | | TRIM_HORIZON or LATEST |
| TumblingWindowInSeconds | The duration, in seconds, of a processing window for DynamoDB Streams event sources. A value of 0 seconds indicates no tumbling window. | No | | Minimum: 0. Maximum: 900. |
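To show how these parameters fit together, the following sketch assembles the keyword arguments for the Lambda CreateEventSourceMapping API, which in boto3 is `create_event_source_mapping`. The helper name `build_mapping_kwargs`, the ARNs, the function name, and the chosen values are all hypothetical; treat them as a starting point to tune, not as recommended settings.

```python
def build_mapping_kwargs(stream_arn, function_name, dlq_arn,
                         batch_size=100, parallelization_factor=1):
    """Assemble event source mapping settings, checking two documented ranges."""
    if not 1 <= batch_size <= 10_000:
        raise ValueError("BatchSize must be between 1 and 10,000")
    if not 1 <= parallelization_factor <= 10:
        raise ValueError("ParallelizationFactor must be between 1 and 10")
    return {
        "EventSourceArn": stream_arn,
        "FunctionName": function_name,
        "StartingPosition": "TRIM_HORIZON",
        "BatchSize": batch_size,
        "MaximumBatchingWindowInSeconds": 1,
        "MaximumRetryAttempts": 3,           # finite, so one bad record cannot stall a shard
        "BisectBatchOnFunctionError": True,  # split failing batches to isolate the bad record
        "ParallelizationFactor": parallelization_factor,
        "DestinationConfig": {"OnFailure": {"Destination": dlq_arn}},
    }


# Hypothetical identifiers for illustration only.
kwargs = build_mapping_kwargs(
    stream_arn="arn:aws:dynamodb:us-east-1:123456789012:table/Orders/stream/2024-01-01T00:00:00.000",
    function_name="orders-stream-consumer",
    dlq_arn="arn:aws:sqs:us-east-1:123456789012:orders-stream-dlq",
    batch_size=500,
    parallelization_factor=2,
)
# With AWS credentials configured, the dictionary could be passed on as:
#   boto3.client("lambda").create_event_source_mapping(**kwargs)
```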

Lambda asynchronous processing

AWS services like DynamoDB and Amazon Simple Notification Service (Amazon SNS) invoke functions asynchronously in Lambda. When invoking a function asynchronously, you pass the event to Lambda without waiting for a response. Lambda handles the event by queuing it and sending it to the function later. You have the flexibility to configure error handling in Lambda and can send invocation records to downstream resources like Amazon Simple Queue Service (Amazon SQS) or Amazon EventBridge.

Lambda manages stream events, retries on errors, and handles throttling. Expired or unsuccessfully processed events can be discarded based on your error handling configuration. You can set up various destinations for invocation records, including Amazon SQS, Amazon SNS, Lambda, and EventBridge, and use dead-letter queues for discarded events. Delivery failures to configured destinations are reported in Amazon CloudWatch.

Processing speed and iterator age

One challenge in asynchronous event processing is to prevent a situation where incoming change events exceed the processing capacity of the Lambda function. The DynamoDB streams retention period is 24 hours, so it’s crucial to process events before they expire. For instance, if the table undergoes 10,000 mutations per second, but the function can only handle 100 events per second, the processing backlog will continue to grow. To mitigate this, it’s important to monitor the IteratorAge metric and set up alerts for concerning levels of increase. For examples of such monitoring techniques, refer to Monitoring Amazon DynamoDB for operational awareness. However, the key question remains: how can we provide timely event processing and avoid falling behind? The answer lies in optimizing the Lambda function to be as concise and efficient as possible. If each event takes the full 15-minute runtime limit of the Lambda function to process, it’s highly likely that we will fall behind in handling the incoming events.
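The arithmetic behind the 10,000 versus 100 events per second example can be made explicit. This is a simplified model that assumes steady rates and an empty stream at the start; the function names are hypothetical.

```python
def backlog_after(seconds, write_rate, process_rate):
    """Unprocessed records after `seconds`, given steady per-second rates."""
    return max(0, (write_rate - process_rate) * seconds)


def lag_after(seconds, write_rate, process_rate):
    """Approximate age, in seconds, of the record the consumer has just reached."""
    if process_rate >= write_rate:
        return 0.0
    # After t seconds the consumer has handled process_rate * t records,
    # which were all written within the first (process_rate / write_rate) * t seconds.
    return seconds * (1 - process_rate / write_rate)


backlog_one_hour = backlog_after(3600, write_rate=10_000, process_rate=100)
lag_one_hour = lag_after(3600, write_rate=10_000, process_rate=100)
```

In this model the backlog grows by 9,900 records every second, and after one hour the consumer is already about 59 minutes behind, so the lag approaches the 24-hour retention limit roughly a day after the imbalance begins.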

Several factors contribute to the IteratorAge metric of a function and we will cover each of the following factors in order:

- Runtime duration

- Batch size

- Invocation errors

- Throttling

  - Lambda invocation throttling

  - DynamoDB streams throttling

- Unevenly distributed records

- Shard count

Runtime duration

Lambda runtime duration refers to the amount of time taken by a Lambda function to run its tasks from start to finish. It represents the elapsed time between the invocation of the function and its completion. The runtime duration includes the processing time for any operations or computations performed by the function code, as well as any external service interactions or network latency. It is an important metric to consider when assessing the performance and efficiency of a Lambda function, as well as for monitoring and optimizing its runtime to meet specific requirements or service-level agreements.

Lambda allows you to allocate memory to your serverless functions, which directly affects their performance and resource utilization. The allocated memory determines the CPU power, network bandwidth, and disk I/O capacity available to your function during its run.

Lambda allocates 128 MB of memory to newly created functions by default. However, you have the flexibility to adjust this allocation based on the specific needs of your application. You can configure any value from 128 MB to 10,240 MB in 1 MB increments.

It’s important to select an appropriate memory allocation for your functions to optimize their performance. When a function is allocated more memory, it also receives a proportional increase in CPU power and other resources. This can result in faster runtimes and improved responsiveness.

However, it’s essential to strike a balance and avoid over-allocating memory beyond what your function requires. Overprovisioning memory can lead to unnecessary costs and under-utilization of resources. It’s recommended to analyze your function’s memory usage patterns and performance requirements to determine the optimal memory allocation. While you can manually run tests on functions by selecting different memory allocations and measuring the time taken to complete, the AWS Lambda Power Tuning tool allows you to automate the process.

Batch size and batch window

To enhance the event processing efficiency further, you should consider modifying your Lambda function to handle events in batches. When an event is added to the DynamoDB stream, Lambda doesn’t immediately invoke the trigger function. Instead, the Lambda infrastructure polls the stream four times per second and invokes the function only when there are events in the stream. Within the short intervals between the polls, numerous events could accumulate, and processing them individually can lead to falling behind in the workload.

Several configuration parameters come into play here. They also contribute to the runtime duration and ultimately affect the IteratorAge:

- BatchSize – This parameter allows you to specify the maximum number of items in each batch. The default value is 100, and the maximum allowed is 10,000. By adjusting this parameter based on your specific workload characteristics, you can optimize the batch size for efficient processing.

- MaximumBatchingWindowInSeconds – This parameter determines how long Lambda continues accumulating records before invoking the function. By setting an appropriate value for this parameter, you can control the duration for which the events are collected before processing, balancing the frequency of invocations with efficient resource utilization.

Before invoking the function, Lambda continues to read records from the event source until it has gathered a full batch, the batching window expires, or the batch reaches the payload limit of 6 MB.
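The three conditions can be written down as a small decision function. This is a sketch of the rule as described above, not of Lambda's internal poller; the function name `flush_reason` is hypothetical, and treating 6 MB as 6 × 1024 × 1024 bytes is an assumption.

```python
PAYLOAD_LIMIT_BYTES = 6 * 1024 * 1024  # assumed byte value for the 6 MB payload limit


def flush_reason(record_count, payload_bytes, waited_s, batch_size, window_s):
    """Return why the gathered records would be sent to the function now, or None to keep gathering."""
    if record_count == 0:
        return None  # nothing gathered, so there is nothing to invoke with
    if record_count >= batch_size:
        return "batch_full"
    if payload_bytes >= PAYLOAD_LIMIT_BYTES:
        return "payload_limit"
    if waited_s >= window_s:
        return "window_expired"
    return None


reasons = [
    flush_reason(100, 50_000, 0.2, batch_size=100, window_s=5),        # full batch
    flush_reason(40, 6 * 1024 * 1024, 0.2, batch_size=100, window_s=5),  # payload limit hit
    flush_reason(40, 50_000, 5.0, batch_size=100, window_s=5),         # window ran out
    flush_reason(40, 50_000, 1.0, batch_size=100, window_s=5),         # keep gathering
]
```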

Finding the right balance between batch processing and real-time requirements is a trade-off that needs careful consideration. Although processing events in batches improves efficiency, having an excessively long batching window may result in a delay that doesn’t align with your application’s real-time needs. It’s important to strike the right balance to meet your specific requirements. This balancing act requires thorough performance testing to achieve optimal parameter settings.

Invocation errors

Invocation errors occur when a Lambda function fails to process a record from a DynamoDB stream. This failure can be caused by various issues, including malformed data, external service failures, or bugs in the function’s code. When such errors occur, and if not properly managed, they can lead to significant processing delays and potential data loss.

One of the most critical issues related to invocation errors is the concept of poisoned pills. A poisoned pill is a specific record that causes repeated failures in the Lambda function. Because Lambda processes records from a DynamoDB stream shard sequentially, a poisoned pill can block the entire shard or partition from being processed. If the function continues to fail on the same record, it will keep retrying indefinitely unless specific handling mechanisms are put in place.

By default, the Lambda EventSourceMapping feature, which reads records from DynamoDB streams and passes them to your Lambda function, has a MaximumRetryAttempts value of -1. This means that Lambda retries the same batch until it succeeds or the records expire. If a poisoned pill is encountered, this default setting can cause the processing of the entire shard to stall, potentially blocking the shard for up to 24 hours, which is the maximum retention period for DynamoDB streams. During this time, no other records from that shard can be processed, leading to a significant backlog and risking data expiration.

Monitoring invocation errors for your Lambda function is important, and Amazon CloudWatch metrics can help with this. Invocation metrics serve as binary indicators of the Lambda function’s outcome. For instance, if the function encounters an error, the Errors metric is recorded with a value of 1. To track the number of errors occurring each minute, you can view the Sum of the Errors metric, aggregated over a 1-minute period. The following graph shows prolonged error metrics caused by a poisoned pill while the Lambda event source mapping retry configuration is set to the default value of -1.

Another key metric to monitor is the IteratorAge, particularly for DynamoDB event sources. The IteratorAge metric represents the age of the last record in the event, measuring the time elapsed between when a record is added to the stream and when the event source mapping forwards it to the Lambda function. A significant rise in the IteratorAge metric, especially when viewed using the Maximum statistic, can indicate issues such as a poisoned pill – a problematic record that causes repeated invocation failures. This behavior is clearly illustrated in the following CloudWatch graph.

The IteratorAge metric will keep increasing until either the problematic record surpasses the 24-hour retention period of DynamoDB streams and is no longer available to read, or the Lambda function code is updated to resolve the underlying issue causing the error.
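One way to catch this early is a CloudWatch alarm on the Maximum of IteratorAge. The following sketch only builds the parameters for the CloudWatch PutMetricAlarm API, which in boto3 is `put_metric_alarm`. The helper name, alarm name, function name, and the 5-minute threshold are assumptions to adapt to your own latency targets.

```python
def build_iterator_age_alarm(function_name, threshold_ms, evaluation_periods=5):
    """Parameters for an alarm that fires when records stay unprocessed for too long."""
    return {
        "AlarmName": f"{function_name}-iterator-age",
        "Namespace": "AWS/Lambda",
        "MetricName": "IteratorAge",  # reported in milliseconds
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": evaluation_periods,
        "Threshold": threshold_ms,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }


# Hypothetical function name; alarm when the oldest record is more than 5 minutes old.
alarm = build_iterator_age_alarm("orders-stream-consumer", threshold_ms=5 * 60 * 1000)
# With AWS credentials configured, the dictionary could be passed on as:
#   boto3.client("cloudwatch").put_metric_alarm(**alarm)
```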

To prevent such scenarios, it’s important to configure your Lambda function to handle invocation errors effectively. The following are two key strategies:

- Set a maximum number of retries – Instead of allowing infinite retries, you should configure the MaximumRetryAttempts setting to a finite number. This makes sure that if a record consistently fails to process, the function will eventually stop retrying after the specified number of attempts. By doing this, you avoid blocking the entire shard and can continue processing other records.

- Use a dead-letter queue – Implementing a dead-letter queue (DLQ) is important for managing failed records. With the BisectBatchOnFunctionError setting enabled, any failed batch of records is split into smaller batches until only the problematic items remain. Without this setting, the metadata for the entire batch is sent to the DLQ. When a record fails to process after the maximum number of retries, its metadata is sent to the DLQ. The DLQ acts as a separate queue where failed record metadata is stored for later analysis and manual intervention. This approach allows you to isolate and address problematic records without affecting the processing of the rest of the stream, helping to keep your application running smoothly.

By setting a maximum number of retries and using a DLQ, you can mitigate the risks associated with poisoned pills and make sure your Lambda function can handle invocation errors without causing significant delays or data loss.
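The effect of batch bisection can be simulated locally. The following sketch is a simplification: it ignores retry counts and the in-order delivery that Lambda enforces within a shard, and the names `isolate_failures` and `process` are hypothetical.

```python
def isolate_failures(batch, process):
    """Recursively halve a failing batch; return (processed, failed) records."""
    try:
        process(batch)
        return list(batch), []
    except Exception:
        if len(batch) == 1:
            return [], list(batch)  # the single failing record is the poisoned pill
        mid = len(batch) // 2
        ok_left, bad_left = isolate_failures(batch[:mid], process)
        ok_right, bad_right = isolate_failures(batch[mid:], process)
        return ok_left + ok_right, bad_left + bad_right


def process(batch):
    """Stand-in for function logic that fails on one malformed record."""
    for record in batch:
        if record == "poison":
            raise ValueError("cannot parse record")


processed, failed = isolate_failures(["a", "b", "poison", "c"], process)
```

In the real service the records that end up in `failed` are the ones whose metadata would be sent to the DLQ once retries are exhausted, while the remaining records in the shard continue to flow.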

Throttling

Throttling can occur in two main ways when using a Lambda function to process DynamoDB streams. The first involves exceeding the maximum concurrency limits set for AWS Lambda, and the second stems from throttling within the DynamoDB stream itself.

Lambda invocation throttling

Throttling occurs when a Lambda function exceeds its maximum concurrency limit, causing delays or failures in processing events. In the context of DynamoDB streams, throttling can significantly impact the ability to keep up with the flow of incoming records, leading to an increase in the IteratorAge metric and potentially causing data processing to fall behind.

When a Lambda function is invoked by DynamoDB streams, it is allocated a certain amount of concurrency based on the overall available concurrency limits in your AWS account. If the function is invoked more frequently than it can handle—due to a high parallelization factor or for a table with a large number of partitions—AWS may throttle the function. Throttling means that some instances of the Lambda function are delayed or even dropped, depending on the severity of the throttling.

Throttling can be particularly problematic when combined with other issues, such as poisoned pills or unoptimized batch sizes. If a Lambda function is already struggling to process events due to errors or inefficient execution, throttling can exacerbate the problem by further reducing the function’s ability to keep up with incoming records.

To mitigate the risk of throttling, consider the following strategies:

- Increase concurrency limits – If your Lambda function is frequently throttled, it may be necessary to increase the concurrency limit for that function. Lambda allows you to set a specific concurrency limit for individual Lambda functions or request an increase in the overall account concurrency limit. By increasing the concurrency, you can make sure your Lambda function has sufficient resources to handle spikes in event volume without being throttled.

- Adjust batch size and windowing – As discussed in the batch size section, configuring the BatchSize and MaximumBatchingWindowInSeconds parameters appropriately can help manage the load on your Lambda function. By finding the right balance between batch size and the batching window, you can reduce the number of individual invocations and better manage the concurrency usage of your function, thereby minimizing the risk of throttling.

- Monitor throttling metrics – Lambda provides several metrics in CloudWatch that can help you monitor throttling, such as Throttles and ConcurrentExecutions. By setting up alarms on these metrics, you can receive notifications when throttling occurs and take proactive measures to address it. Monitoring these metrics allows you to quickly identify and respond to potential throttling issues before they impact your system’s performance.

- Use a dead-letter queue – In cases where throttling leads to failed instances, it’s important to have a DLQ in place to capture any records that couldn’t be processed. This makes sure throttled records aren’t lost and can be reprocessed after the throttling issue has been resolved.

DynamoDB stream throttling

When using DynamoDB streams, it’s recommended to limit the number of consumers to two per stream. This helps maintain efficient consumption and avoids overwhelming the stream’s capacity. Adding more consumers leads to competition for the same API limits, increasing the likelihood of throttling.

Exceeding two Lambda consumers per stream typically results in control-plane throttling, specifically due to hitting the DescribeStream API quota, which is limited to 10 requests per second (RPS). Lambda’s event-source mapping polls the stream 4 times per second per consumer. With two Lambda consumers, the polling rate reaches 8 RPS, which is just under the limit. However, adding a third consumer would push the rate beyond 10 RPS, causing control-plane throttling and reducing overall performance.

While control-plane throttling doesn’t pose a risk of data loss, it does lead to degraded performance due to the need for AWS Lambda to retry the throttled requests. When throttling occurs, the system must pause and retry the DescribeStream API calls, which introduces delays in processing. As more consumers are attached to the stream, throttling becomes more frequent, and the retries further slow down the overall throughput.
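The consumer limit follows directly from the numbers quoted above. This sketch simply restates that arithmetic, using the 4 polls per second per consumer and the 10 RPS DescribeStream quota from this section; the function names are hypothetical.

```python
DESCRIBE_STREAM_QUOTA_RPS = 10     # quota quoted in this section
POLLS_PER_SECOND_PER_CONSUMER = 4  # polling rate quoted in this section


def describe_stream_rps(consumers):
    """Aggregate request rate generated by the given number of Lambda consumers."""
    return consumers * POLLS_PER_SECOND_PER_CONSUMER


def exceeds_quota(consumers):
    """True when the aggregate rate is above the control-plane quota."""
    return describe_stream_rps(consumers) > DESCRIBE_STREAM_QUOTA_RPS


rates = {n: describe_stream_rps(n) for n in (1, 2, 3)}
throttled = {n: exceeds_quota(n) for n in (1, 2, 3)}
```

If more than two downstream systems need the same change events, a common pattern is to keep a single Lambda consumer on the stream and have it fan the events out to the other systems.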

Unevenly distributed records

Unevenly distributed records in DynamoDB streams can create significant challenges for Lambda processing, particularly when some partitions receive far more data than others. This imbalance leads to certain shards becoming hot, where they accumulate more records than others, causing delays in processing because Lambda must handle these overloaded shards sequentially. As a result, this can increase the IteratorAge metric and cause bottlenecks in your event-driven architecture.

To mitigate this, it’s important to design your DynamoDB table with a partition key that provides even data distribution across partitions. Regular monitoring for imbalances and adjusting your Lambda function’s batch size dynamically based on workload can help manage uneven distribution.

Shard count

The shard count in DynamoDB streams directly influences the number of concurrent Lambda instances processing the stream. Each shard handles a sequence of data changes with one Lambda instance processing the records from one shard at a time. Increasing the number of shards allows for more parallel processing, improving throughput.

However, before you increase the shard count by pre-warming your DynamoDB table, it’s often more effective to first adjust the ParallelizationFactor parameter in Lambda. By increasing this factor, you allow Lambda to obtain records from a single shard and share it among multiple Lambda instances. This can significantly boost throughput without needing to increase the shard count, offering a more efficient way to handle high volumes of data.
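The relationship between shard count, ParallelizationFactor, and concurrency is a simple product. The following sketch computes the upper bound on concurrent invocations for one event source mapping; the function name is hypothetical, and the result should be compared against the concurrency available to your function.

```python
def max_concurrent_invocations(open_shards, parallelization_factor=1):
    """Upper bound on concurrent invocations for one stream consumer."""
    if not 1 <= parallelization_factor <= 10:
        raise ValueError("ParallelizationFactor must be between 1 and 10")
    return open_shards * parallelization_factor


# A table with 8 partitions has 8 open shards.
baseline = max_concurrent_invocations(8)
boosted = max_concurrent_invocations(8, parallelization_factor=10)
```

Raising the factor from 1 to 10 lifts the ceiling from 8 to 80 concurrent invocations without changing the table, which is why it is usually the first setting to try.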

Summary

DynamoDB is a high-performance NoSQL database built for serverless applications that require global scalability. In event-driven architectures, DynamoDB streams captures real-time changes, which Lambda can process without the need for server management, enabling responsive and scalable systems.

This post explored best practices for integrating DynamoDB and Lambda, covering topics like stream shard management, ordering and deduplication guarantees, and the role of EventSourceMapping. It also provided insights into optimizing Lambda functions to handle invocation errors, throttling, and uneven data distribution.

You can use these best practices to enhance your event-driven applications with DynamoDB and Lambda. Start applying these strategies today to build more efficient and scalable solutions. For more details, refer to the Amazon DynamoDB Developer Guide and Using AWS Lambda with Amazon DynamoDB.

About the Author

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads using the capabilities of DynamoDB.

