The General Data Protection Regulation (GDPR) represents a significant milestone in data privacy, aiming to safeguard the personal information of individuals within the European Union (EU). Among its key mandates, GDPR requires organizations to obtain explicit consent before collecting personal data and provides individuals with the right to erasure, known as the “right to be forgotten,” which allows them to request the deletion of their personal information. For organizations, especially those handling vast amounts of data, achieving GDPR compliance presents a complex and multifaceted challenge.

In this post, AWS Service Sector Industry Solutions shares our journey in developing a feature that enables customers to efficiently locate and delete personal data upon request, helping them meet GDPR compliance requirements. The mission of the Service Sector Solutions Engineering Team is to accelerate AWS Cloud adoption across diverse industries, including Travel, Hospitality, Gaming, and Entertainment. We work with customers from Cruise Lines, Lodging, Alternative Accommodation, Travel Agencies, Airports, Airlines, Restaurants, Catering, Casinos, Lotteries, and more.

Our application manages extensive profile data across various services, and we needed a scalable, cost-effective solution to handle GDPR erasure requests. This task involved overcoming significant challenges related to data storage, retrieval, and deletion while also minimizing disruption to our customers’ operations.

Application overview

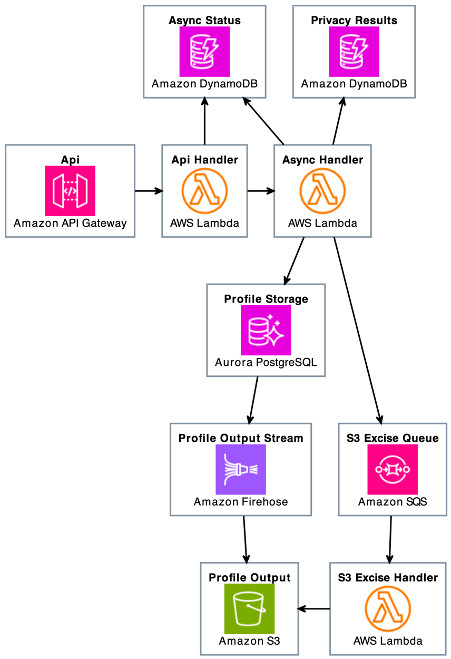

At the time of writing, our application stores profile data across several services: Amazon Aurora PostgreSQL-Compatible Edition, Amazon DynamoDB, and Amazon Simple Storage Service (Amazon S3). The S3 bucket is indexed and partitioned using AWS Glue and can be queried using Amazon Athena. To search for profiles, we use an AWS Lambda function that queries Aurora, DynamoDB, and Athena and records the locations of the matching data in a DynamoDB table dedicated to GDPR requests. To purge this data, we use two more Lambda functions and an Amazon Simple Queue Service (Amazon SQS) queue. Our customers manage up to 200 million profiles and typically process 100–200 GDPR erasure requests every month, handled in batch operations. The preceding image shows a high-level overview of the architecture.

One of the primary design challenges was efficiently locating and purging profile data stored in Amazon S3, especially considering the terabytes of data involved. Traditional databases like Aurora PostgreSQL-Compatible and DynamoDB offer straightforward data operations, but Amazon S3 required a different approach.

Prior to designing our GDPR erasure handler, the S3 bucket stored profile data in CSV format. Querying this data with Athena provided the necessary S3 paths for profile data, solving the problem of where to look. However, querying CSV data at scale proved to be prohibitively expensive. With an anticipated 200 million profiles and over 1 billion records in Amazon S3, we needed a more cost-effective solution.

We transitioned to storing data in Parquet format with GZIP compression using Amazon Kinesis Data Firehose. Parquet, a columnar storage format, allows Athena to query only the necessary columns rather than entire rows, as required with CSV files. This columnar approach significantly reduces the amount of data scanned, leading to faster query performance and lower costs. Additionally, GZIP compression further minimizes storage and transfer costs by reducing the file size. With the data now efficiently stored in Amazon S3, we needed a reliable method to delete specific profile data to comply with GDPR requests without affecting other data.

Implementing the right to erasure

We considered using an open source solution, S3 Find and Forget, but it wasn’t ideal for our customers. Deploying two separate solutions would increase cost and complexity, and customers would lack control over an external solution’s inner workings. This could lead to mismatched requirements and the need to maintain a separate solution. Therefore, we opted to develop our own custom solution for data removal from Amazon S3.

We built the solution predominantly in the Go programming language, complemented by a Python Lambda function that uses AWS SDK for pandas, because we could not find a reliable Parquet library for Go. This combination proved effective in reading, querying, and managing data in Amazon S3.

To enhance the performance of GDPR purge operations, we parallelized Lambda function invocations. Parallel invocations, however, can conflict when they touch the same data. One option was to inform customers when an operation is already in progress and force requests to run one at a time, but limiting user actions contradicted our goal of reducing friction. Without a safeguard, if an end user creates two batches of GDPR purge requests targeting the same S3 object, both invocations read the original object, and whichever one writes last overwrites the result of the other, restoring data that had just been deleted. To avoid this, we needed a locking mechanism (a mutex).
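To make the race concrete, here is a minimal sketch that simulates two purge workers in plain Python. The dictionary standing in for Amazon S3, the object key, and the profile IDs are all hypothetical; a real worker would download, rewrite, and re-upload a Parquet object.

```python
# Hypothetical in-memory stand-in for one S3 object holding rows for three profiles.
KEY = "profiles/part-0001.parquet"
bucket = {KEY: ["p1", "p2", "p3"]}


def read_object(key):
    """Return a copy of the object's rows, like downloading the file."""
    return list(bucket[key])


def write_object(key, rows):
    """Overwrite the whole object, like re-uploading the rewritten file."""
    bucket[key] = list(rows)


def purge(rows, profile_id):
    """Drop every row that belongs to the given profile."""
    return [r for r in rows if r != profile_id]


# Unserialized: both workers read the object before either one writes it back.
rows_seen_by_a = read_object(KEY)
rows_seen_by_b = read_object(KEY)
write_object(KEY, purge(rows_seen_by_a, "p1"))  # worker A removes p1
write_object(KEY, purge(rows_seen_by_b, "p2"))  # worker B removes p2 from a stale copy
unlocked_result = read_object(KEY)  # p1 is back: A's deletion was overwritten

# Serialized: worker B reads only after worker A has finished writing.
write_object(KEY, ["p1", "p2", "p3"])
write_object(KEY, purge(read_object(KEY), "p1"))
write_object(KEY, purge(read_object(KEY), "p2"))
serialized_result = read_object(KEY)  # both deletions survive
```

In the unserialized run the final object still contains p1, which is exactly the outcome a lock around the read-modify-write cycle is meant to prevent.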

Fortunately, there was already work done on building a distributed mutex using DynamoDB, as detailed in Building Distributed Locks with the DynamoDB Lock Client. The existing library was written in Java, so we ported it to Python to suit our specific use case.

Building a distributed mutex with DynamoDB

To implement a distributed mutex using DynamoDB, we used a custom mutex client. This client can be instantiated with default settings or configured for specific use cases.

By default, the client reserves a lock for 30 seconds and attempts to secure a lock for up to 60 seconds. Because the client runs in Lambda, it also has to account for Lambda timeouts. The client respects these constraints and uses the DynamoDB time to live (TTL) feature to clean up expired locks in cases where the Lambda runtime fails and cannot release a lock. However, we cannot rely on TTL to expire the lock itself, because DynamoDB deletes expired items in the background with no guarantee of how soon after the expiration time, which is far too coarse for a 30-second lease.
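As a rough illustration of these defaults, the following sketch models the settings as a small Python object and caps the wait time by what is left of the Lambda invocation. `MutexConfig`, `config_for_lambda`, `ttl_epoch`, and the one-hour TTL grace period are hypothetical names and values chosen for this example, not the client's actual interface. The only AWS-specific facts it relies on are that the Lambda context object reports remaining time through `get_remaining_time_in_millis()` and that a DynamoDB TTL attribute holds epoch seconds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MutexConfig:
    """Hypothetical settings object; the field names are illustrative only."""
    lease_seconds: float = 30.0        # how long a lock is reserved
    max_wait_seconds: float = 60.0     # how long to keep trying to acquire
    ttl_grace_seconds: float = 3600.0  # assumed slack before TTL may clean up


def config_for_lambda(remaining_ms: int, work_seconds: float,
                      base: MutexConfig = MutexConfig()) -> MutexConfig:
    """Cap the wait so that lock acquisition plus the work fits in the invocation.

    remaining_ms is the value the Lambda context object reports through
    get_remaining_time_in_millis(); work_seconds is the caller's own estimate.
    """
    budget = remaining_ms / 1000.0 - work_seconds
    max_wait = max(0.0, min(base.max_wait_seconds, budget))
    return MutexConfig(base.lease_seconds, max_wait, base.ttl_grace_seconds)


def ttl_epoch(lease_start_epoch: float, cfg: MutexConfig) -> int:
    """Value for the table's TTL attribute, in epoch seconds."""
    return int(lease_start_epoch + cfg.lease_seconds + cfg.ttl_grace_seconds)
```

For example, with 100 seconds left in the invocation and an estimated 70 seconds of purge work, `config_for_lambda(100_000, 70)` shortens the wait from 60 to 30 seconds.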

The client exposes three primary methods: try_acquire_lock, acquire_lock, and release_lock.

For ease of use, a context object employs these methods, so calling code does not need to invoke them directly.
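One way such a context object could look in Python is sketched below. The method names `try_acquire_lock`, `acquire_lock`, and `release_lock` come from the text above. Everything else, including `InMemoryMutexClient`, `LockNotAcquired`, `locked`, and the example key, is a hypothetical stand-in so that the example runs without DynamoDB.

```python
from contextlib import contextmanager


class LockNotAcquired(Exception):
    """Raised when the lock could not be secured."""


class InMemoryMutexClient:
    """Hypothetical stand-in that exposes the three method names from the post."""

    def __init__(self):
        self._held = set()

    def try_acquire_lock(self, key):
        # Single attempt: succeed only if nobody holds the key.
        if key in self._held:
            return False
        self._held.add(key)
        return True

    def acquire_lock(self, key):
        # A real client would retry until its wait limit; this sketch tries once.
        if not self.try_acquire_lock(key):
            raise LockNotAcquired(key)

    def release_lock(self, key):
        self._held.discard(key)

    def is_held(self, key):
        return key in self._held


@contextmanager
def locked(client, key):
    """Hold the lock for the duration of a with block and release on any exit."""
    client.acquire_lock(key)
    try:
        yield
    finally:
        client.release_lock(key)


client = InMemoryMutexClient()
object_key = "s3://example-bucket/part-0001.parquet"  # hypothetical object
with locked(client, object_key):
    held_inside = client.is_held(object_key)   # the purge work would go here
held_after = client.is_held(object_key)
```

Because the release sits in a `finally` clause, the lock is given back even when the work inside the block raises an exception.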

Determining a stale lock and safe upsert

Much like the Java implementation of the Lock Client, our Python port uses a UUIDv4 referred to as the revision version number (RVN). This randomly generated UUIDv4 value is stored as part of the lock reservation along with the UTC timestamp and lease duration.

As part of its execution loop, the mutex client reads any existing lock and stores that lock’s RVN. It determines whether the lock is stale by comparing the current time to the lock’s start time plus its duration: if the current time exceeds that sum and the lock’s RVN still matches the value the client stored, the lock is deemed stale. The client can then safely attempt to upsert the item in DynamoDB with its own RVN, lease start time, and lease duration.

During the upsert, the mutex client uses DynamoDB ConditionExpression to make sure the RVN has not changed from what was previously stored. If the RVN is different, another mutex client has already upserted the lock, and the current client must reenter its wait loop.
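The stale check and the guarded upsert can be sketched as follows. This is a minimal illustration rather than the actual client: the attribute names (`lock_key`, `rvn`, `lease_start`, `lease_seconds`) and both function names are assumptions. `build_upsert` only builds the keyword arguments for a DynamoDB `PutItem` call in the low-level client format, so it runs without an AWS account.

```python
import time
import uuid


def is_stale(lock, seen_rvn, now):
    """A lock is stale once its lease has run out and its RVN is the one we saw."""
    lease_over = now > lock["lease_start"] + lock["lease_seconds"]
    return lease_over and lock["rvn"] == seen_rvn


def build_upsert(table_name, lock_key, seen_rvn, lease_seconds, now=None):
    """Build keyword arguments for a conditional PutItem request.

    seen_rvn is the RVN read from the existing lock, or None if no lock was found.
    """
    now = time.time() if now is None else now
    request = {
        "TableName": table_name,
        "Item": {
            "lock_key": {"S": lock_key},
            "rvn": {"S": str(uuid.uuid4())},  # fresh RVN for this lease
            "lease_start": {"N": str(now)},
            "lease_seconds": {"N": str(lease_seconds)},
        },
    }
    if seen_rvn is None:
        # No lock observed: create the item only if it still does not exist.
        request["ConditionExpression"] = "attribute_not_exists(#k)"
        request["ExpressionAttributeNames"] = {"#k": "lock_key"}
    else:
        # Stale lock observed: replace it only if nobody else replaced it first.
        request["ConditionExpression"] = "#r = :seen"
        request["ExpressionAttributeNames"] = {"#r": "rvn"}
        request["ExpressionAttributeValues"] = {":seen": {"S": seen_rvn}}
    return request
```

With boto3, the result could be passed as `boto3.client("dynamodb").put_item(**request)`. If another client changed the RVN in the meantime, DynamoDB rejects the write with a `ConditionalCheckFailedException`, which is the signal to reenter the wait loop.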

The client loop

The following diagram shows the client loop, which repeats until one of three conditions occurs:

- A client successfully secures a lock

- An unrecoverable exception occurs, such as an SDK or network error

- The maximum wait time is exceeded
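The three exit conditions above can be sketched as a simplified loop. To keep the example runnable without DynamoDB, it uses a hypothetical in-memory table (`FakeLockTable`) whose `put_if_rvn` method mimics a conditional write, and it takes the clock and sleep functions as parameters. All names here are assumptions for illustration, and the stale check is reduced to "lease expired", with the conditional write guarding against a changed RVN.

```python
import uuid


class ConditionFailed(Exception):
    """Stand-in for a failed DynamoDB conditional write."""


class FakeLockTable:
    """Hypothetical in-memory table that mimics a conditional put."""

    def __init__(self):
        self.items = {}

    def get(self, key):
        return self.items.get(key)

    def put_if_rvn(self, key, item, expected_rvn):
        current = self.items.get(key)
        current_rvn = current["rvn"] if current else None
        if current_rvn != expected_rvn:
            raise ConditionFailed(key)
        self.items[key] = item


def acquire_lock(table, key, lease_seconds, max_wait_seconds, clock, sleep,
                 poll_seconds=1.0):
    """Loop until the lock is secured or the wait budget runs out.

    Returns the new RVN on success and None on timeout. Any other exception
    (for example an SDK or network error) propagates and ends the loop.
    """
    deadline = clock() + max_wait_seconds
    while True:
        now = clock()
        existing = table.get(key)
        lease_over = existing is not None and (
            now > existing["lease_start"] + existing["lease_seconds"])
        if existing is None or lease_over:
            rvn = str(uuid.uuid4())
            item = {"rvn": rvn, "lease_start": now, "lease_seconds": lease_seconds}
            try:
                table.put_if_rvn(key, item, existing["rvn"] if existing else None)
                return rvn  # exit: lock secured
            except ConditionFailed:
                pass  # another client won the race; keep waiting
        if clock() >= deadline:
            return None  # exit: maximum wait time exceeded
        sleep(poll_seconds)
```

In production code, `clock` and `sleep` would typically be `time.time` and `time.sleep`; passing them in makes the loop easy to exercise with a simulated clock.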

In our implementation, the mutex client runs within a Lambda function that processes messages from an SQS queue. To prevent the Lambda function from timing out before it secures a lock and processes data from Amazon S3, we set the maximum wait timeout to be significantly shorter than the Lambda timeout. If a Lambda invocation encounters an exception or exceeds the wait time without securing a lock, it sends the message back to the queue for reprocessing. This approach minimizes Lambda costs while promoting system resiliency and effective GDPR data deletion. For persistent exceptions, our solution funnels dead-letter queue (DLQ) messages to an error processing Lambda function, which in turn writes the error to a DynamoDB table so that the customer can manually review and resolve these errors.
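One possible way to return unprocessed messages to the queue is the partial batch response that Lambda supports for SQS event sources, sketched below. Whether our handler works this way is not stated here, so treat it as an assumption. `make_handler`, `demo_purge`, and the message body layout are hypothetical, and the approach requires `ReportBatchItemFailures` to be enabled on the event source mapping.

```python
import json


def make_handler(purge_object):
    """Build a Lambda handler around purge_object(request: dict) -> None."""

    def handler(event, context):
        failures = []
        for record in event.get("Records", []):
            try:
                purge_object(json.loads(record["body"]))
            except Exception:
                # Lock timeout or any other error: report the message as failed
                # so that SQS makes it visible again for another attempt.
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}

    return handler


def demo_purge(request):
    # Hypothetical purge step: pretend the lock on one object is unavailable.
    if request["s3_key"] == "busy.parquet":
        raise TimeoutError("lock not acquired within the wait limit")


handler = make_handler(demo_purge)
event = {"Records": [
    {"messageId": "m-1", "body": json.dumps({"s3_key": "free.parquet"})},
    {"messageId": "m-2", "body": json.dumps({"s3_key": "busy.parquet"})},
]}
result = handler(event, None)
```

Only the message that failed is retried. Once a message has been received more times than the queue's redrive policy allows (`maxReceiveCount`), SQS moves it to the dead-letter queue.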

When multiple clients attempt to secure a lock on the same resource, each client must either wait for the lock to be released and the item to be deleted from DynamoDB or determine if the existing lock is stale and safely upsert the item.

The following diagram illustrates the process of acquiring and releasing a lock in DynamoDB using a mutex, demonstrating how Client1 successfully acquires and releases the lock, while Client2 waits and retries until the lock becomes available.

This approach gives our customers maximum flexibility in handling their GDPR data while supporting strict compliance by making sure that the correct data is deleted and stays deleted.

Conclusion

In this post, we showed how we developed a scalable and cost-effective GDPR compliance solution using Amazon DynamoDB to efficiently manage erasure requests. By using AWS services such as Lambda, Amazon SQS, Aurora PostgreSQL, and Amazon S3, we were able to design a robust system that promotes data privacy and regulatory compliance while minimizing operational disruptions.

This solution can be adapted for other use cases requiring secure, distributed locking mechanisms or efficient data management across large datasets. For a deeper dive into distributed locks, check out the AWS blog post on Building Distributed Locks with the DynamoDB Lock Client.

We invite you to leave your comments and share your thoughts or questions about this implementation.

About the Authors

Ryan Love is a Sr. Software Development Engineer on the AWS Service Sector Industry Solutions team supporting enterprise customers. Ryan has over 15 years of experience building custom solutions for the public and private sectors.

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads using the capabilities of DynamoDB.
