Mathematical reasoning remains challenging for Large Language Models (LLMs). It involves several cognitive tasks, such as understanding and manipulating mathematical concepts, solving problems, and making logical deductions. Many existing methods aim to enhance the mathematical ability of LLMs. However, few recognize the value of state transition for LLM reasoning, even though it can significantly improve their reasoning abilities.

Current methods enhance the mathematical abilities of LLMs through training, as in GPT, LLaMA, and MetaMath. These models use large-scale mathematical prompts to guide stepwise reasoning during problem-solving. Inference-time techniques such as Chain-of-Thought (CoT) and Best-of-N aim to fully harness the potential of LLMs to boost mathematical performance. Monte Carlo Tree Search (MCTS) and Process Reward Models (PRMs) have achieved remarkable results by decomposing the problem-solving process into multiple steps while providing timely rewards for each step. However, these methods are limited in efficiency and in adaptability across different problem types.

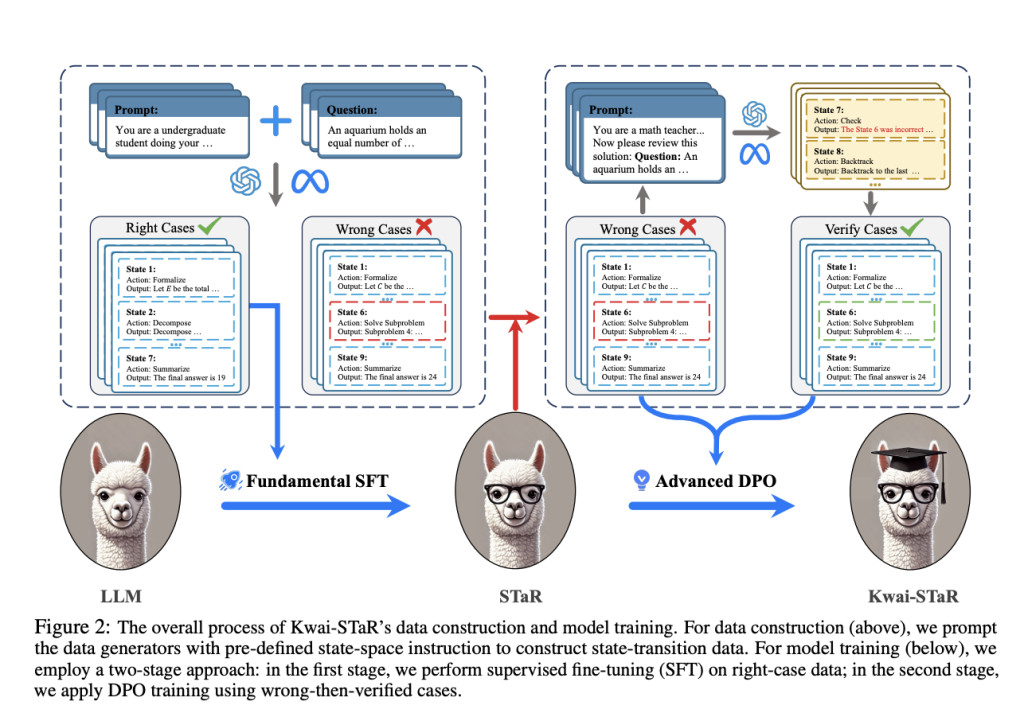

Kwai-STaR was proposed to overcome this challenge. It is a framework that transforms general LLMs into state-transition reasoners, which solve problems systematically by performing state transitions.

Researchers from Tsinghua University, Kuaishou Technology, the Institute of Automation, and the Chinese Academy of Sciences developed Kwai-STaR. The process involves three main steps: defining a state space for problem-solving, constructing a state-transition dataset, and training LLMs with a two-stage curriculum. The dataset, drawn from the data generator and the trained reasoner, contains two types of instances: a majority of correct cases and a minority of wrong-then-verified cases. To maximize learning efficiency, training proceeds in two stages. The fundamental stage trains the model on the majority of correct cases, enabling it to solve relatively simple problems and to grasp the state-transition manner of reasoning. The advanced stage adds pairs of wrong and verified cases to further strengthen the model's proficiency. Experiments on benchmarks such as GSM8K demonstrate Kwai-STaR's strong performance and efficiency, showing that it achieves high accuracy with simpler inference processes than traditional methods require.
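The two-stage curriculum described above can be sketched as a data split followed by two sequential fine-tuning passes. This is a minimal illustration under stated assumptions, not the authors' code: the `Step` and `Sample` classes, the `kind` labels, the action strings, and the `fine_tune` callable are hypothetical placeholders, and the sketch assumes the advanced stage uses only the wrong-then-verified cases (the article does not say whether correct cases are replayed in that stage).

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, TypeVar

M = TypeVar("M")  # whatever object represents the model being trained

# Hypothetical encoding: a solution is a sequence of state transitions,
# each an action applied to the previous state. Action labels are illustrative,
# not the state space defined in the Kwai-STaR paper.
@dataclass
class Step:
    action: str  # the transition applied, e.g. "compute 4 * 2"
    state: str   # the resulting intermediate state

@dataclass
class Sample:
    question: str
    steps: List[Step]
    kind: str  # hypothetical label: "correct" or "wrong_then_verified"

def build_curriculum(samples: List[Sample]) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into fundamental (correct) and advanced (wrong-then-verified) sets."""
    fundamental: List[Sample] = []
    advanced: List[Sample] = []
    for s in samples:
        if s.kind == "correct":
            fundamental.append(s)
        elif s.kind == "wrong_then_verified":
            advanced.append(s)
        else:
            raise ValueError(f"unknown sample kind: {s.kind!r}")
    return fundamental, advanced

def train_two_stage(model: M, samples: List[Sample],
                    fine_tune: Callable[[M, List[Sample]], M]) -> M:
    """Fine-tune on correct cases first, then on wrong-then-verified cases."""
    fundamental, advanced = build_curriculum(samples)
    model = fine_tune(model, fundamental)  # stage 1: learn the state-transition format
    model = fine_tune(model, advanced)     # stage 2: learn to catch and fix mistakes
    return model

# Toy data: two correct solutions and one that errs, verifies, then recovers.
samples = [
    Sample("3 + 4 * 2 = ?", [Step("compute 4 * 2", "3 + 8"),
                             Step("compute 3 + 8", "11")], "correct"),
    Sample("10 - 2 * 3 = ?", [Step("compute 2 * 3", "10 - 6"),
                              Step("compute 10 - 6", "4")], "correct"),
    Sample("(5 + 1) * 2 = ?", [Step("compute 5 + 1 * 2", "7"),
                               Step("verify: parentheses come first", "7 is wrong"),
                               Step("compute (5 + 1) * 2", "12")], "wrong_then_verified"),
]

# Stand-in "fine-tuning" that just records how many samples each stage saw.
def toy_fine_tune(model: List[int], data: List[Sample]) -> List[int]:
    return model + [len(data)]

history = train_two_stage([], samples, toy_fine_tune)
print(history)  # stage sizes in training order
```

Running it prints `[2, 1]`: two correct cases in the fundamental stage, one wrong-then-verified case in the advanced stage. Ordering the stages this way mirrors the article's description of learning the state-transition format on easy, correct examples before exposing the model to its own mistakes.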

In conclusion, Kwai-STaR transforms a traditional LLM into a state-transition reasoner, enhancing its ability to tackle mathematical problems. So far, Kwai-STaR has been validated only in mathematics. While this domain is both challenging and representative, the potential of state spaces to enhance LLM reasoning in general scenarios remains unverified, which limits the generalizability of Kwai-STaR. The researchers are therefore working to provide additional experimental results in more diverse and general settings to further demonstrate the approach's generalizability.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Kwai-STaR: An AI Framework that Transforms LLMs into State-Transition Reasoners to Improve Their Intuitive Reasoning Capabilities appeared first on MarkTechPost.
