Artificial Intelligence (AI) systems have made impressive strides in recent years, showing proficiency in tackling increasingly challenging problems. However, when it comes to advanced mathematical reasoning, a substantial gap still exists between what these models can achieve and what is required to solve real-world complex problems. Despite the progress in AI capabilities, current state-of-the-art models struggle to solve more than 2% of the problems presented in advanced mathematical benchmarks, highlighting the gap between AI and the expertise of human mathematicians.

Meet FrontierMath

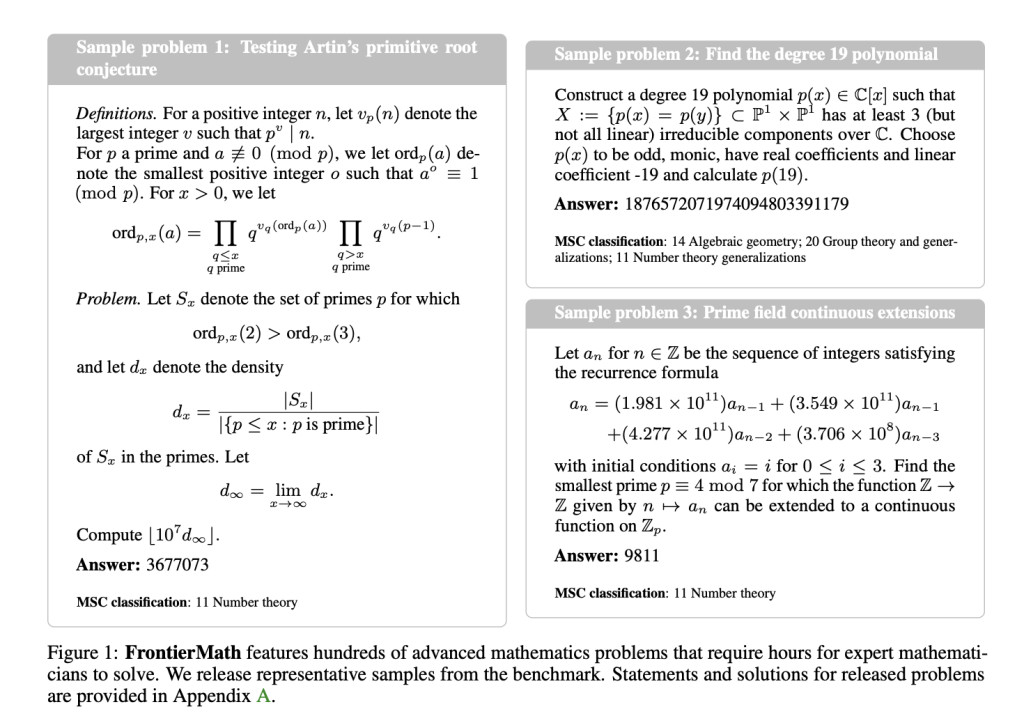

Meet FrontierMath: a new benchmark composed of a challenging set of mathematical problems spanning most branches of modern mathematics. These problems are crafted by a diverse group of over 60 expert mathematicians from renowned institutions, including MIT, UC Berkeley, Harvard, and Cornell. The questions range from computationally intensive problems in number theory to abstract challenges in algebraic geometry, covering 70% of the top-level subjects in the 2020 Mathematics Subject Classification (MSC2020). Notably, the problems are original and unpublished, specifically designed to ensure the evaluation of AI without data contamination that can skew results.

FrontierMath addresses key limitations of existing benchmarks, such as GSM8K and the MATH dataset, which primarily focus on high-school and undergraduate-level problems. As AI models are close to saturating these earlier benchmarks, FrontierMath pushes the boundaries by including research-level problems requiring deep theoretical understanding and creativity. Each problem is designed to require hours, if not days, of effort from expert human mathematicians, emphasizing the significant gap in capability that still exists between current AI models and human expertise.

Technical Details and Benefits of FrontierMath

FrontierMath is not just a collection of challenging problems; it also introduces a robust evaluation framework that emphasizes automated verification of answers. The benchmark incorporates problems with definitive, computable answers that can be verified using automated scripts. These scripts utilize Python and the SymPy library to ensure that solutions are reproducible and verifiable without human intervention, significantly reducing the potential for subjective biases or inconsistencies in grading. This design also helps eliminate manual grading effort, providing a scalable way to assess AI capabilities in advanced mathematics.

To ensure fairness, the benchmark is designed to be “guessproof.†This means that problems are structured to prevent models from arriving at correct solutions by mere guessing. The verification process checks for exact matches, and many problems have numerical answers that are deliberately complex and non-obvious, which further reduces the chances of successful guessing. This robust structure ensures that any AI capable of solving these problems genuinely demonstrates a level of mathematical reasoning akin to a trained human mathematician.

The Importance of FrontierMath and Its Findings

FrontierMath is crucial because it directly addresses the need for more advanced benchmarks to evaluate AI models in fields requiring deep reasoning and creative problem-solving abilities. With existing benchmarks becoming saturated, FrontierMath is positioned as a benchmark that moves beyond simple, structured questions to tackle problems that mirror the challenges of ongoing research in mathematics. This is particularly important as the future of AI will increasingly involve assisting in complex domains like mathematics, where mere computational power isn’t enough—true reasoning capabilities are necessary.

The current performance of leading language models on FrontierMath underscores the difficulty of these problems. Models like GPT-4, Claude 3.5 Sonnet, and Google DeepMind’s Gemini 1.5 Pro have been evaluated on the benchmark, and none managed to solve even 2% of the problems. This poor performance highlights the stark contrast between AI and human capabilities in high-level mathematics and the challenge that lies ahead. The benchmark serves not just as an evaluation tool but as a roadmap for AI researchers to identify specific weaknesses and improve the reasoning and problem-solving abilities of future AI systems.

Conclusion

FrontierMath is a significant advancement in AI evaluation benchmarks. By presenting exceptionally difficult and original mathematical problems, it addresses the limitations of existing datasets and sets a new standard of difficulty. Automated verification ensures scalable, unbiased evaluation, making FrontierMath a valuable tool for tracking AI progress toward expert-level reasoning.

Early evaluations of models on FrontierMath reveal that AI still has a long way to go to match human-level reasoning in advanced mathematics. However, this benchmark is a crucial step forward, providing a rigorous testing ground to help researchers measure progress and push AI’s capabilities. As AI evolves, benchmarks like FrontierMath will be essential in transforming models from mere calculators into systems capable of creative, deep reasoning—needed to solve the most challenging problems.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter.. Don’t Forget to join our 55k+ ML SubReddit.

[AI Magazine/Report] Read Our Latest Report on ‘SMALL LANGUAGE MODELS‘

The post FrontierMath: The Benchmark that Highlights AI’s Limits in Mathematics appeared first on MarkTechPost.

Source: Read MoreÂ